This project is an offline, lightweight DIY solution for monitoring the urban landscape. After installing this software on the specified hardware (an Nvidia Jetson board and a Logitech webcam), you will be able to count cars, pedestrians, and motorbikes from your webcam's live stream.

Behind the scenes, it feeds the webcam stream to a neural network (YOLO darknet) and makes sense of the resulting detections.

It is very much alpha software and we do not guarantee that it will work for your use case, but we conceived it as a starting point that you can build on and improve.

- Hardware prerequisites

- Exports documentation

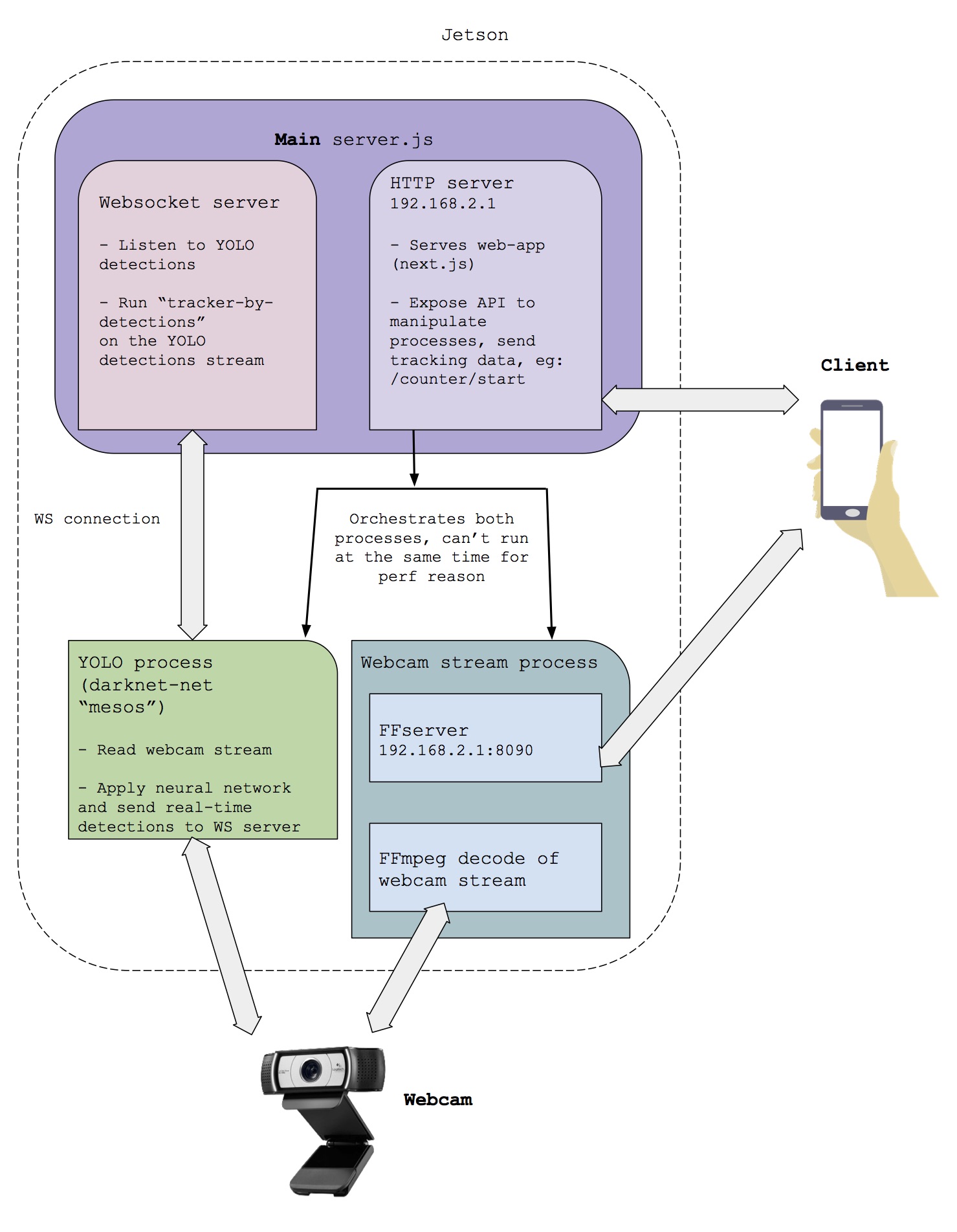

- System overview

- Step-by-step install guide

  - Flash the Jetson board

  - Prepare the Jetson board

  - Configure Ubuntu to turn the Jetson into a Wi-Fi access point

  - Configure the Jetson to start in overclocking mode

  - Install darknet-net

  - Install the open-data-cam node app

  - Restart the Jetson board and open http://IP-OF-THE-JETSON-BOARD:8080/

  - Connect your device to the Jetson

  - You are done

- Automatic installation (experimental)

- Troubleshooting

- Run open data cam on a video file instead of the webcam feed

- Development notes

- Nvidia Jetson TX2

- Webcam Logitech C222 (or any USB webcam compatible with Ubuntu 16.04)

- A smartphone / tablet / laptop that you will use to operate the system

The counter export (CSV) gives you the counting results along with the unique ID of each counted object:

"Timestamp","Counter","ObjectClass","UniqueID"

"2018-08-23T09:25:18.946Z","turquoise","car",9

"2018-08-23T09:25:19.073Z","green","car",14

"2018-08-23T09:25:19.584Z","yellow","car",1

"2018-08-23T09:25:20.350Z","green","car",13

"2018-08-23T09:25:20.600Z","turquoise","car",6

"2018-08-23T09:25:20.734Z","yellow","car",32

"2018-08-23T09:25:21.737Z","green","car",11

"2018-08-23T09:25:22.890Z","turquoise","car",40

"2018-08-23T09:25:23.145Z","green","car",7

"2018-08-23T09:25:24.423Z","turquoise","car",4

"2018-08-23T09:25:24.548Z","yellow","car",0

"2018-08-23T09:25:24.548Z","turquoise","car",4

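To aggregate this export, a short Node.js script is enough. The sketch below assumes the exact layout of the sample: a header row, then one row per counted object, with no commas inside the quoted fields. The function name `tallyCounterCsv` is hypothetical. It counts rows, so an object that crosses the same counter twice is counted twice. ID 4 on the turquoise counter in the sample is an example.

```javascript
// Minimal sketch: tally the counter export per counter and per object class.
// Assumes the layout of the sample above: a header row, then one row per
// counted object, with no commas inside the quoted fields.
function tallyCounterCsv(csvText) {
  const totals = {}; // e.g. { turquoise: { car: 2 } }
  const rows = csvText.split(/\r?\n/).filter((line) => line.trim() !== "");
  // rows[0] is the header: "Timestamp","Counter","ObjectClass","UniqueID"
  for (const row of rows.slice(1)) {
    const [, counter, objectClass] = row
      .split(",")
      .map((field) => field.trim().replace(/^"|"$/g, ""));
    totals[counter] = totals[counter] || {};
    totals[counter][objectClass] = (totals[counter][objectClass] || 0) + 1;
  }
  return totals;
}

const sample = [
  '"Timestamp","Counter","ObjectClass","UniqueID"',
  '"2018-08-23T09:25:18.946Z","turquoise","car",9',
  '"2018-08-23T09:25:19.073Z","green","car",14',
  '"2018-08-23T09:25:19.584Z","yellow","car",1',
].join("\n");

console.log(tallyCounterCsv(sample));
// → { turquoise: { car: 1 }, green: { car: 1 }, yellow: { car: 1 } }
```

To count distinct objects instead of crossings, collect the `UniqueID` column into a `Set` per counter.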
The tracker export (JSON) gives you the raw data of all tracked objects, with frame timestamps and positions:

[

// 1 Frame

{

"timestamp": "2018-08-23T08:46:59.677Z", // Time of the frame

// Objects in this frame

"objects": [{

"id": 13417, // unique id of this object

"x": 257, // position and size on a 1280x720 canvas

"y": 242,

"w": 55,

"h": 44,

"bearing": 230,

"name": "car"

},{

"id": 13418,

"x": 312,

"y": 354,

"w": 99,

"h": 101,

"bearing": 230,

"name": "car"

}]

},

//...

// Other frames ...

]

See technical architecture for a more detailed overview.
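The tracker export described above can be summarized with a small Node.js sketch. It counts the frames and the distinct object IDs per class. The sketch assumes the real export is a valid JSON array of frames with the fields shown in the sample; the `//` annotations in the sample are explanations and are not valid JSON. `summarizeTracker` is a hypothetical name.

```javascript
// Minimal sketch: summarize a tracker export. Assumes the real file is a
// valid JSON array of frames with the fields shown in the sample
// (the // annotations in the sample are explanations, not part of the JSON).
function summarizeTracker(frames) {
  const idsByClass = {}; // class name -> Set of distinct object ids
  for (const frame of frames) {
    for (const obj of frame.objects || []) {
      if (!idsByClass[obj.name]) idsByClass[obj.name] = new Set();
      idsByClass[obj.name].add(obj.id);
    }
  }
  const uniqueObjectsByClass = {};
  for (const [name, ids] of Object.entries(idsByClass)) {
    uniqueObjectsByClass[name] = ids.size;
  }
  return { frameCount: frames.length, uniqueObjectsByClass };
}

// The sample frame, without the annotations.
const frames = JSON.parse(`[
  { "timestamp": "2018-08-23T08:46:59.677Z",
    "objects": [
      { "id": 13417, "x": 257, "y": 242, "w": 55, "h": 44, "bearing": 230, "name": "car" },
      { "id": 13418, "x": 312, "y": 354, "w": 99, "h": 101, "bearing": 230, "name": "car" }
    ] }
]`);

console.log(summarizeTracker(frames));
// → { frameCount: 1, uniqueObjectsByClass: { car: 2 } }
```

IDs are collected per class. An object whose class flips between frames is therefore counted once under each class it was given.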

NOTE: many of these steps should eventually be automated, for example by packaging them in a Docker image. For now, you need to follow the full procedure.

- Download JetPack to flash your Jetson board with the Linux base image and the required dependencies

- Follow the install guide provided by NVIDIA

NOTE: You can also find a detailed video tutorial for flashing the Jetson board here.

- Update packages:

  `sudo apt-get update`

- Install cURL:

  `sudo apt-get install curl`

- Install git-core:

  `sudo apt-get install git-core`

- Install Node.js (v8):

  `curl -sL https://deb.nodesource.com/setup_8.x | sudo -E bash -`

  `sudo apt-get install -y nodejs`

  `sudo apt-get install -y build-essential`

- Install ffmpeg (v3):

  `sudo add-apt-repository ppa:jonathonf/ffmpeg-3`

  On Ubuntu 14.04 only, also run: `sudo add-apt-repository ppa:jonathonf/tesseract`

  `sudo apt update && sudo apt upgrade`

  `sudo apt-get install ffmpeg`

- Optional: install nano:

  `sudo apt-get install nano`

- Enable SSID broadcast: add the following line to `/etc/modprobe.d/bcmdhd.conf`:

  `options bcmdhd op_mode=2`

  Further info: here

- Configure the hotspot via the UI: follow this guide: https://askubuntu.com/a/762885

- Define the address range for the hotspot network:

  - Go to the file named after your hotspot SSID in `/etc/NetworkManager/system-connections`:

    `cd /etc/NetworkManager/system-connections`

    `sudo nano <YOUR-HOTSPOT-SSID-NAME>`

  - Add the following line to the `[ipv4]` section of this file:

    `[ipv4]`

    `dns-search=`

    `method=shared`

    `address1=192.168.2.1/24,192.168.2.1` <--- this line

  - Restart the network manager:

    `sudo service network-manager restart`
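If you prefer to script the `[ipv4]` edit instead of using nano, this Node.js sketch patches the text of the connection file. The helper `setIpv4Address` is hypothetical. It assumes the plain `[section]` / `key=value` layout shown above, so try it on a copy of the file first.

```javascript
// Minimal sketch (hypothetical helper): ensure the [ipv4] section of a
// NetworkManager connection file contains the hotspot address range.
// Assumes a plain "[section]" / "key=value" layout as shown above.
function setIpv4Address(keyfileText, address = "192.168.2.1/24,192.168.2.1") {
  const lines = keyfileText.split("\n");
  const start = lines.findIndex((line) => line.trim() === "[ipv4]");
  if (start === -1) {
    // No [ipv4] section yet: append one with the shared method and address.
    return [...lines, "[ipv4]", "method=shared", `address1=${address}`].join("\n");
  }
  // The section ends at the next "[...]" header, or at the end of the file.
  let end = lines.findIndex((line, i) => i > start && /^\s*\[.+\]\s*$/.test(line));
  if (end === -1) end = lines.length;
  const existing = lines.findIndex(
    (line, i) => i > start && i < end && line.trim().startsWith("address1=")
  );
  if (existing !== -1) {
    lines[existing] = `address1=${address}`; // replace the old range
  } else {
    lines.splice(start + 1, 0, `address1=${address}`); // add it under [ipv4]
  }
  return lines.join("\n");
}
```

To apply it, follow three steps:

1. Read `/etc/NetworkManager/system-connections/<YOUR-HOTSPOT-SSID-NAME>` with `fs.readFileSync(file, "utf8")`.
2. Write the result back with `fs.writeFileSync`, running as root.
3. Restart network-manager.

Running the helper twice leaves the file unchanged.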

- Add the following lines to `/etc/rc.local` before `exit 0`:

  `# Maximize performances`

  `( sleep 60 && /home/ubuntu/jetson_clocks.sh )&`

- Enable the `rc-local` service:

  `sudo chmod 755 /etc/init.d/rc.local`

  `sudo systemctl enable rc-local.service`
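The `rc.local` edit can also be scripted. This Node.js sketch inserts the overclocking lines just before the last `exit 0`. If the lines are already there, it leaves the text unchanged. The helper `addBeforeExit` is hypothetical, and it assumes the Jetson's `rc.local` ends with an `exit 0` line, as described above.

```javascript
// Minimal sketch (hypothetical helper): insert lines into the text of
// /etc/rc.local just before its final "exit 0".
function addBeforeExit(rcLocalText, newLines) {
  const lines = rcLocalText.split("\n");
  // Already patched: return the text unchanged so the helper can be re-run.
  if (newLines.every((line) => lines.includes(line))) return rcLocalText;
  // Use the last "exit 0", which is the one that ends the script.
  let exitIndex = -1;
  for (let i = lines.length - 1; i >= 0; i--) {
    if (lines[i].trim() === "exit 0") {
      exitIndex = i;
      break;
    }
  }
  if (exitIndex === -1) throw new Error('no "exit 0" line found in rc.local');
  lines.splice(exitIndex, 0, ...newLines);
  return lines.join("\n");
}

const overclockLines = [
  "# Maximize performances",
  "( sleep 60 && /home/ubuntu/jetson_clocks.sh )&",
];
```

Writing the result back to `/etc/rc.local` requires root.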

IMPORTANT: Make sure that OpenCV (v2) and CUDA are installed via JetPack (post-installation step). If they are not, the fallbacks are: for OpenCV 2, use the install script; for CUDA, there is no easy way yet.

- Install libwsclient:

  `git clone https://github.com/PTS93/libwsclient`

  `cd libwsclient`

  `./autogen.sh`

  `./configure && make && sudo make install`

- Install liblo:

  `wget https://github.com/radarsat1/liblo/releases/download/0.29/liblo-0.29.tar.gz --no-check-certificate`

  `tar xvfz liblo-0.29.tar.gz`

  `cd liblo-0.29`

  `./configure && make && sudo make install`

- Install json-c:

  `git clone https://github.com/json-c/json-c.git`

  `cd json-c`

  `sh autogen.sh`

  `./configure && make && make check && sudo make install`

- Install darknet-net:

  `git clone https://github.com/meso-unimpressed/darknet-net.git`

- Download the weight files (link: yolo.weight-files) and copy `yolo-voc.weights` to the root of the `darknet-net` repository, e.g.:

  darknet-net

  |-cfg

  |-data

  |-examples

  |-include

  |-python

  |-scripts

  |-src

  |# ... other files

  |yolo-voc.weights <--- Weight file should be in the root directory

  You can download it directly into that folder with:

  `wget https://pjreddie.com/media/files/yolo-voc.weights --no-check-certificate`

- Make darknet-net:

  `cd darknet-net`

  `make`

- Install pm2 and next globally:

  `sudo npm i -g pm2`

  `sudo npm i -g next`

- Clone the open_data_cam repo:

  `git clone https://github.com/moovel/lab-open-data-cam.git`

- Specify the ABSOLUTE `PATH_TO_YOLO_DARKNET` path in `lab-open-data-cam/config.json` (open data cam repo), e.g.:

  `{ "PATH_TO_YOLO_DARKNET" : "/home/nvidia/darknet-net" }`

- Install open data cam:

  `cd <path/to/open-data-cam>`

  `npm install`

  `npm run build`

- Run open data cam on boot:

  `cd <path/to/open-data-cam>`

  Launch pm2 at startup. This command gives you instructions to configure pm2 to start at Ubuntu startup; follow them:

  `sudo pm2 startup`

  Once pm2 is configured to start at startup, configure pm2 to start the Open Data Cam app:

  `sudo pm2 start npm --name "open-data-cam" -- start`

  `sudo pm2 save`
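Before starting the app, you can sanity-check `config.json`. This Node.js sketch uses only what this guide states:

- `PATH_TO_YOLO_DARKNET` must be absolute.
- `make` builds the `darknet` binary at the repository root, since YOLO.js runs `./darknet` from that folder.
- `yolo-voc.weights` sits at the root.
- The darknet command uses `cfg/voc.data` and `cfg/yolo-voc.cfg`.

`checkDarknetConfig` is a hypothetical helper.

```javascript
const path = require("path");
const fs = require("fs");

// Minimal sketch (hypothetical helper): list problems with config.json.
// `exists` can be swapped out for testing; it defaults to fs.existsSync.
function checkDarknetConfig(config, exists = fs.existsSync) {
  const problems = [];
  const darknetPath = config.PATH_TO_YOLO_DARKNET;
  if (typeof darknetPath !== "string" || !path.isAbsolute(darknetPath)) {
    problems.push("PATH_TO_YOLO_DARKNET must be an absolute path");
    return problems;
  }
  // Files the darknet command line expects, relative to the darknet-net root.
  for (const file of ["darknet", "yolo-voc.weights", "cfg/voc.data", "cfg/yolo-voc.cfg"]) {
    if (!exists(path.join(darknetPath, file))) {
      problems.push(`missing ${file} in ${darknetPath}`);
    }
  }
  return problems;
}
```

For example, run `checkDarknetConfig(require("./config.json"))` from the open data cam folder. An empty array means every expected file was found.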

💡 We should maybe set up a "captive portal" so that people don't need to enter the IP of the Jetson; we haven't tried it yet. 💡

When the Jetson has started, a Wi-Fi network named "YOUR-HOTSPOT-NAME" should be available.

- Connect your device to the Jetson Wi-Fi

- Open your browser and go to http://IPOFTHEJETSON:8080

- In our case, IPOFTHEJETSON is 192.168.2.1, so the URL is http://192.168.2.1:8080

🚨 This December alpha version is really alpha: you might need to restart Ubuntu often, as it doesn't clean up processes well when you switch between the counting view and the webcam view. 🚨

You should now be able to monitor the Jetson from the UI we've built and count 🚗 🏍 🚚!

An experimental install script is available for automatic installation.

Setting up the access point is not automated yet! **Follow this guide: https://askubuntu.com/a/762885** to set up the hotspot.

- Run the `install.sh` script directly from GitHub:

  `wget -O - https://raw.githubusercontent.com/moovel/lab-opendatacam/master/install/install.sh | bash`

To debug the app, log onto the Jetson board and inspect the pm2 logs (`sudo pm2 logs`). Alternatively, stop the pm2 service (`sudo pm2 stop <pid>`) and start the app with `sudo npm start` to see the console output directly.

- Error: `please specify the path to the raw detections file`

  Make sure that `ffmpeg` is installed and is above version 2.8.11.

- Error: `Could not find a valid build in the '.next' directory! Try building your app with 'next build' before starting the server`

  Run `npm run build` before starting the app.

- Could not find darknet.

  Be sure to `make` darknet without `sudo`; otherwise it will abort mid-installation.

- Error: `cannot open shared object file: No such file or directory`

  Try reinstalling the liblo package.

- Error: `Error: Cannot stop process that is not running.`

  A process using port 8090 may be causing the error. Try to kill the process and restart the board:

  `sudo netstat -nlp | grep :8090`

  `sudo kill <pid>`

It is possible to run Open Data Cam on a video file instead of the webcam feed.

Before doing this, be aware that the neural network (YOLO) will run on every frame of the video file at ~7-8 FPS (the best Jetson speed), so the file will not play in real time. If you want to simulate a real video feed, drop the frame rate of your video to 7 FPS (or whatever frame rate your Jetson board can run YOLO at).
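To drop a file's frame rate as suggested above, ffmpeg's `fps` video filter can re-encode it. This Node.js sketch only wraps that call. `ffmpegFpsArgs`, `reencodeAtFps`, and the default of 7 FPS are illustrative, so adjust the rate to what your board actually achieves. The sketch also shows the arithmetic behind the warning: a 30 FPS file processed at 7 FPS takes about 30 / 7 ≈ 4.3 times its real duration.

```javascript
const { spawn } = require("child_process");

// ffmpeg arguments that re-encode `input` at `fps` frames per second.
function ffmpegFpsArgs(input, output, fps = 7) {
  return ["-i", input, "-filter:v", `fps=${fps}`, output];
}

// How many times longer than its real duration a video takes to process
// when the detector runs slower than the source frame rate.
function slowdownFactor(sourceFps, detectorFps = 7) {
  return sourceFps / detectorFps;
}

// Run ffmpeg; resolves when it exits successfully.
function reencodeAtFps(input, output, fps = 7) {
  return new Promise((resolve, reject) => {
    const child = spawn("ffmpeg", ffmpegFpsArgs(input, output, fps), { stdio: "inherit" });
    child.on("error", reject); // e.g. ffmpeg not installed
    child.on("close", (code) =>
      code === 0 ? resolve() : reject(new Error(`ffmpeg exited with code ${code}`))
    );
  });
}
```

For example, `reencodeAtFps("video.mp4", "video-7fps.mp4")` writes the slowed file. Both file names are placeholders.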

To switch Open Data Cam to "video file reading" mode, go to the open-data-cam folder on the Jetson:

- `cd <path/to/open-data-cam>`

- Then open YOLO.js and uncomment these lines:

YOLO.process = new forever.Monitor(

[

"./darknet",

"detector",

"demo",

"cfg/voc.data",

"cfg/yolo-voc.cfg",

"yolo-voc.weights",

"-filename",

"YOUR_FILE_PATH_RELATIVE_TO_DARK_NET_FOLDER.mp4",

"-address",

"ws://localhost",

"-port",

"8080"

],

{

max: 1,

cwd: config.PATH_TO_YOLO_DARKNET,

killTree: true

}

);

- Copy the video file you want to run Open Data Cam on into the `darknet-net` folder on the Jetson. If you used the automatic install, this is `~/darknet-net`.

// For example, if your file is `video-street-moovellab.mp4`, you will end up with the following in the darknet-net folder:

darknet-net

|-cfg

|-data

|-examples

|-include

|-python

|-scripts

|-src

|# ... other files

|video-street-moovellab.mp4 <--- Video file

- Then replace the `YOUR_FILE_PATH_RELATIVE_TO_DARK_NET_FOLDER.mp4` placeholder in YOLO.js with your file name, in this case `video-street-moovellab.mp4`.

// In our example you should end up with the following:

YOLO.process = new forever.Monitor(

[

"./darknet",

"detector",

"demo",

"cfg/voc.data",

"cfg/yolo-voc.cfg",

"yolo-voc.weights",

"-filename",

"video-street-moovellab.mp4",

"-address",

"ws://localhost",

"-port",

"8080"

],

{

max: 1,

cwd: config.PATH_TO_YOLO_DARKNET,

killTree: true

}

);

- After doing this, rebuild the Open Data Cam node app:

  `npm run build`

You should be able to use any video file that OpenCV can read, since OpenCV is what the YOLO implementation uses under the hood to decode the video stream.

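By default, Open Data Cam tracks all the classes that the neural network is trained to detect. In our case, YOLO is trained on the VOC dataset. Here is the complete list of classes: aeroplane, bicycle, bird, boat, bottle, bus, car, cat, chair, cow, diningtable, dog, horse, motorbike, person, pottedplant, sheep, sofa, train, tvmonitor.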

You can restrict Open Data Cam to specific classes with the `VALID_CLASSES` option in the `config.json` file.

For example, here is how to track only buses and cars:

{

"VALID_CLASSES": ["bus","car"]

}

If you change this config option, you will need to rebuild the project by running `npm run build`.

To track all the classes (the default value), set it to:

{

"VALID_CLASSES": ["*"]

}

Extra note: the tracking algorithm might work better when all classes are allowed. In our tests, YOLO had a hard time distinguishing some classes, such as bike and motorbike, and switched between classes across frames for the same object. By keeping all the detections and ignoring class switches while tracking, we avoided losing some objects; this is discussed here.
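The `VALID_CLASSES` semantics described above can be illustrated with a small Node.js sketch: `"*"` keeps every class, and any other list keeps only the named classes. This is not Open Data Cam's own filtering code. `makeClassFilter` is a hypothetical helper, and detections are assumed to carry a `name` field as in the tracker export.

```javascript
// Build a predicate from a VALID_CLASSES-style array.
function makeClassFilter(validClasses = ["*"]) {
  if (validClasses.includes("*")) return () => true; // track every class
  const allowed = new Set(validClasses);
  return (detection) => allowed.has(detection.name);
}

const detections = [
  { id: 1, name: "car" },
  { id: 2, name: "person" },
  { id: 3, name: "bus" },
];

const busesAndCars = makeClassFilter(["bus", "car"]);
console.log(detections.filter(busesAndCars).map((d) => d.id)); // → [ 1, 3 ]
console.log(detections.filter(makeClassFilter(["*"])).length); // → 3
```

A filter like this applied before tracking would drop an object for any frame where YOLO labels it with an unlisted class. That is the effect the extra note warns about.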

sshfs -o allow_other,defer_permissions <user>@<IP-OF-THE-JETSON-BOARD>:/home/nvidia/Desktop/lab-traffic-cam /Users/tdurand/Documents/ProjetFreelance/Moovel/remote-lab-traffic-cam/

yarn install

yarn run dev