Online questionnaire data and data processing, supporting:

Evans, T., Retzlaff, C., Geißler, C., Kargl, M., Plass, M., & Müller, H. et al. (2022). The explainability paradox: Challenges for xAI in digital pathology. Future Generation Computer Systems. doi: 10.1016/j.future.2022.03.009

Data analysis is available in the accompanying Jupyter notebook.

- User profiling questions, collecting data on usage of and familiarity with AI applications in pathology, and with machine learning in general

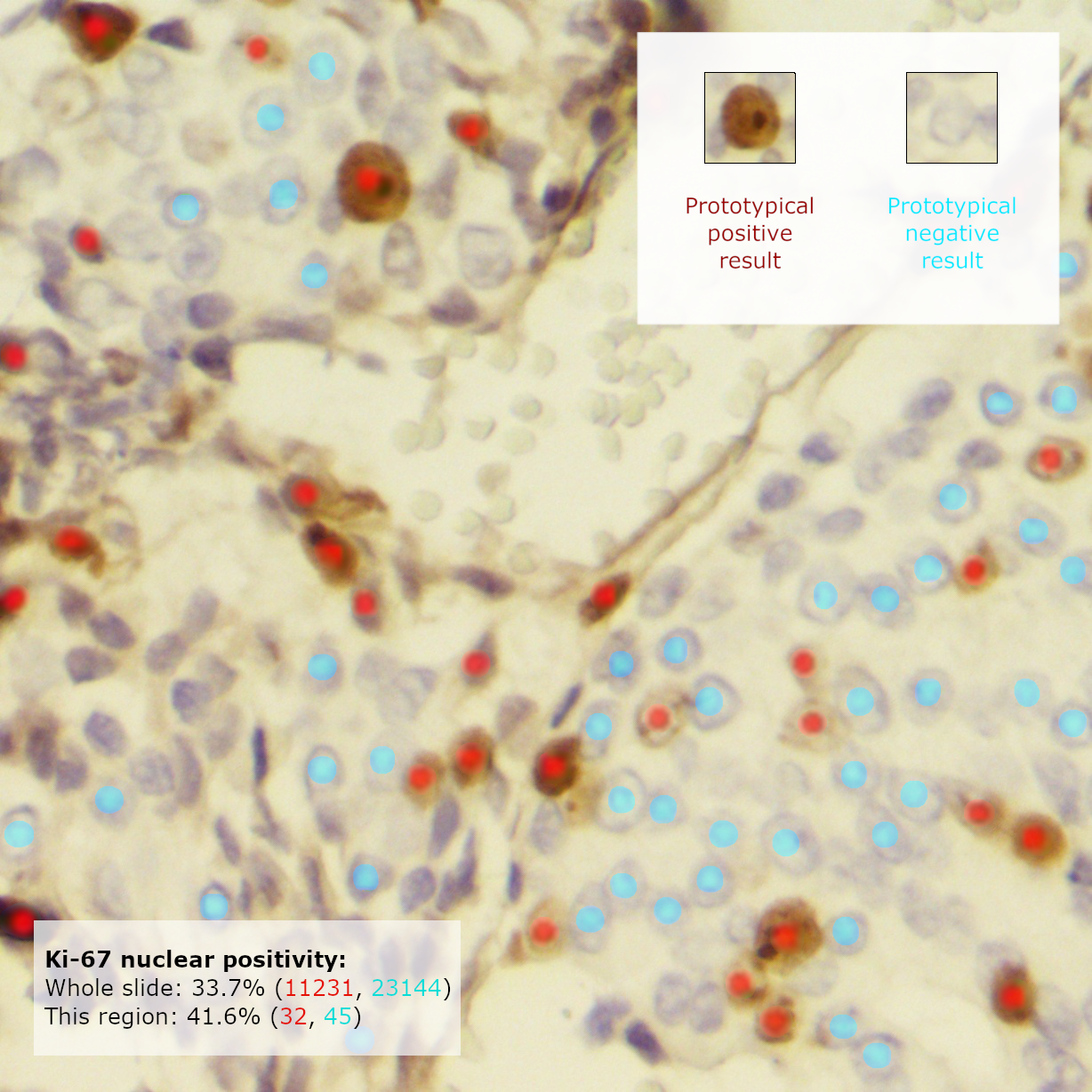

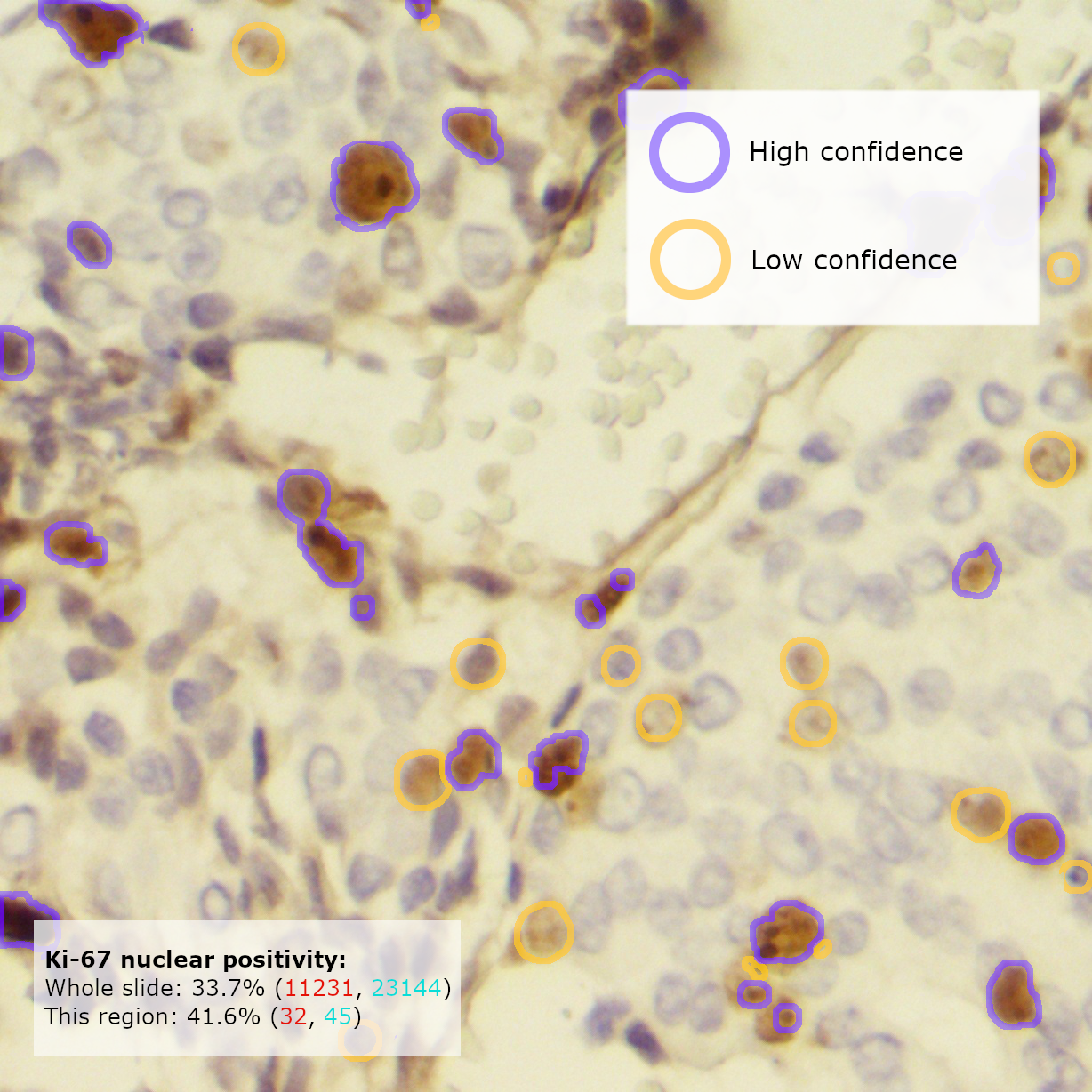

- 7 example implementations of explainability methods on a sample Ki-67 app output, each with 4 Likert-scale feedback questions to evaluate intelligibility, informativeness and value to the user.
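As a rough illustration of how one example's Likert-scale feedback questions could be described in a SurveyJS-style JSON definition, here is a minimal sketch. The question names, statement wording, 5-point scale, and the helpers `likert` and `buildExamplePage` are all assumptions made for illustration; they are not taken from the actual questionnaire. The property names (`rateMin`, `rateMax`, `minRateDescription`, `maxRateDescription`) follow the SurveyJS `rating` question type.

```typescript
// Hypothetical sketch: wording, names and scale size are illustrative
// assumptions, not the questionnaire's real content.

interface LikertQuestion {
  type: "rating";
  name: string;
  title: string;
  rateMin: number;
  rateMax: number;
  minRateDescription: string;
  maxRateDescription: string;
}

interface SurveyPage {
  name: string;
  elements: LikertQuestion[];
}

// Build one agree/disagree rating question (5 points assumed by default).
function likert(name: string, title: string, points: number = 5): LikertQuestion {
  return {
    type: "rating",
    name,
    title,
    rateMin: 1,
    rateMax: points,
    minRateDescription: "Strongly disagree",
    maxRateDescription: "Strongly agree",
  };
}

// Build a page holding four feedback questions for one explanation example.
function buildExamplePage(exampleId: string): SurveyPage {
  const statements: Array<[string, string]> = [
    ["understand", "I find this explanation intuitively understandable."],
    ["informative", "This explanation helps me understand the model output."],
    ["confidence", "This explanation helps me judge how reliable the output is."],
    ["value", "I would find this explanation valuable in my work."],
  ];
  return {
    name: exampleId,
    elements: statements.map(([key, title]) => likert(`${exampleId}_${key}`, title)),
  };
}

// Print the page definition for a hypothetical "gradcam" example.
console.log(JSON.stringify(buildExamplePage("gradcam"), null, 2));
```

Prefixing each question name with the example identifier keeps the answers for the different examples distinguishable in the exported results.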

`git clone https://github.com/theodore-evans/xai-in-digital-pathology.git`

`cd xai-in-digital-pathology/frontend`

`npm i`

`npm start`

Open http://localhost:3000/ in your web browser

Sample Ki-67 model: PathoNet, trained for 20 epochs on the training set of SHIDC-B-Ki-67-V1.0 and demonstrated with the test set of the same dataset.

GradCAM heatmap generated using Neuroscope-1.0

Example interpolations mocked up using DiffMorph

All other graphics created with GIMP

For more detail on example creation, please refer to Method > Questionnaire design in Evans et al. (2022).

This survey is built in React.js using survey.js. The project was adapted from the SurveyJS for React quickstart project.

Public code repo for the FGCS Special Issue on xAI in healthcare.