
Creating a Job

You can create a new job using one of three options:

1. Based on an existing job

This option allows a new job to be created based on an existing job, regardless of whether that job has been crawled. It is useful for repeating a crawl or for recovering a crawl that had problems. See Recovery of Frontier State and frontier.recover.gz.

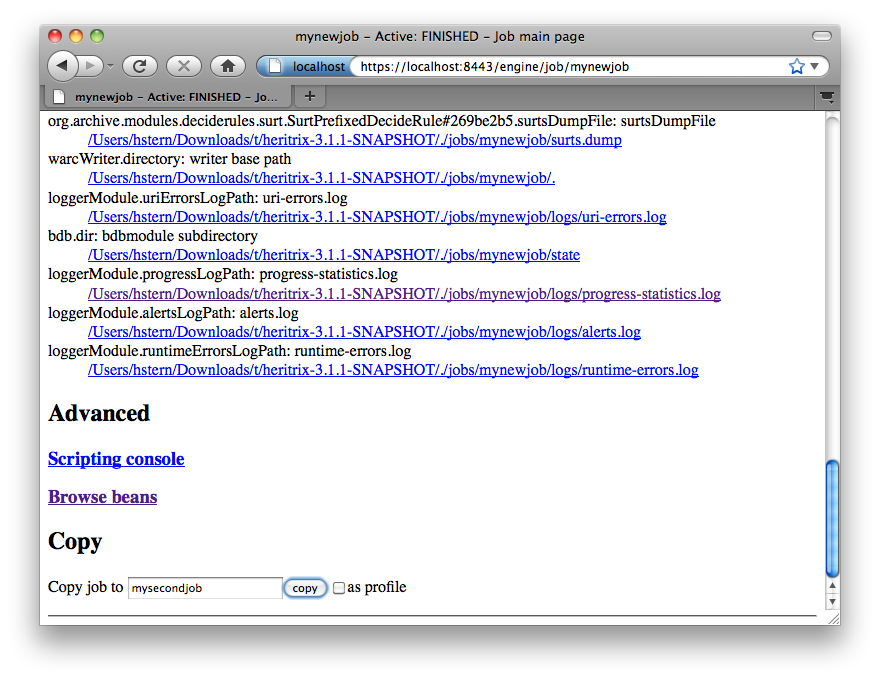

To base a new job on an existing job, use the "copy" button on the job page of the parent job.

2. Based on a profile

This option allows a new job to be created based on an existing profile. To create a job based on a profile, use the "copy" button on the profile page.

3. With defaults

This option creates a crawl job based on the default profile. To create a job from the default profile, enter a job name on the Main Console screen and click the "create" button.

Note

- Changes made to a profile or job after a new job has been derived from it do not carry over to the derived job.

- Jobs based on the default profile provided with Heritrix are not ready to run as is. The metadata.operatorContactUrl property must first be set to a valid URL.
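Before running a job derived from the default profile, it can help to check the contact URL automatically. The Python sketch below is a minimal illustration and is not part of Heritrix: it assumes the overrides are available as plain key=value lines (for example, copied out of a job's configuration), and the helper names parse_overrides and has_valid_contact_url are hypothetical. It treats a value as valid only if it is an absolute http or https URL with a host, so an unedited placeholder value fails the check.

```python
from __future__ import annotations

from urllib.parse import urlparse


# Hypothetical helper: collect simple "key=value" override lines from a block of text.
# Assumes one property per line; blank lines, '#' comments and lines without '=' are skipped.
def parse_overrides(text: str) -> dict[str, str]:
    props: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        # Split on the first '=' only, so URLs with query strings survive intact.
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


# Hypothetical check: the contact URL counts as valid only if it is an
# absolute http(s) URL with a host. A placeholder such as "ENTER_..." fails.
def has_valid_contact_url(props: dict[str, str]) -> bool:
    value = props.get("metadata.operatorContactUrl", "")
    parsed = urlparse(value)
    return parsed.scheme in ("http", "https") and bool(parsed.netloc)


if __name__ == "__main__":
    default_like = "metadata.operatorContactUrl=ENTER_AN_URL_HERE\n"
    configured = "metadata.operatorContactUrl=http://example.org/crawler-contact\n"
    print(has_valid_contact_url(parse_overrides(default_like)))  # False
    print(has_valid_contact_url(parse_overrides(configured)))    # True
```

A syntactic check like this only catches a missing or placeholder value; whether the URL actually leads to your contact information is still up to the operator.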

