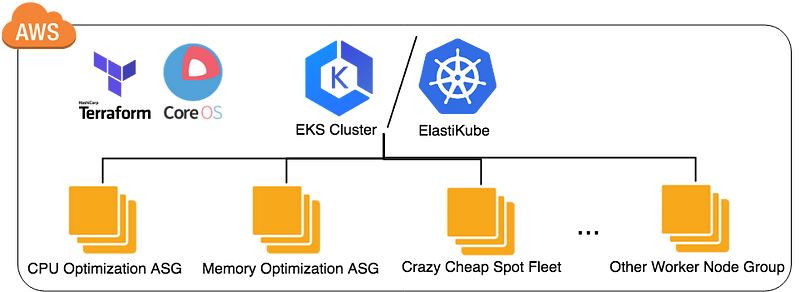

Vishwakarma can be used to create a Kubernetes cluster in AWS by leveraging HashiCorp Terraform and CoreOS. We didn't develop it from scratch; it is based on CoreOS Tectonic. Before diving into the details, let's try it out first.

- Kubernetes v1.27.2+.

- Supports AWS VPC CNI, flannel, and Cilium networking.

- RBAC enabled, audit logging, and etcd data encryption.

- etcd v3.5.0+.

- On-cluster etcd with TLS.

- Terraform: All of the AWS resources are created by Terraform, so you need to install it and make sure your AWS permissions are set up correctly, so that Terraform can create AWS resources automatically. The minimum required version of Terraform is v1.2.0.

- kubectl: After the cluster is created, a Kubernetes ConfigMap named aws-auth needs to be created through kubectl, so you need to install it. The minimum required version of Kubernetes is v1.27.0.

- aws-iam-authenticator: Cluster access permissions are integrated with AWS IAM. For the cluster to know whether you are allowed to access it, aws-iam-authenticator needs to be installed on the client side.

- Key Pair: To access the worker nodes over SSH, create a key pair in the example region, US West (Oregon) us-west-2.

- jq: A command-line JSON processor, needed for filtering JSON in many operations.

- python: Rolling node updates rely on a Lambda function, so this Terraform module needs Python 3.7 and the Python package manager pip.
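Before starting, it can help to confirm that these tools are installed and recent enough. The following is a minimal sketch, not something shipped with Vishwakarma: the helper names `version_ge` and `require_cmd` are hypothetical, and the version comparison assumes GNU `sort -V` (version sort) is available.

```bash
#!/usr/bin/env bash
# Hypothetical prerequisite helpers (not part of Vishwakarma).

# version_ge A B: succeed when version A >= version B.
# A leading "v" is stripped; ordering uses GNU sort's -V so that 3.10 > 3.7.
version_ge() {
  local a=${1#v} b=${2#v}
  [ "$(printf '%s\n%s\n' "$a" "$b" | sort -V | head -n 1)" = "$b" ]
}

# require_cmd NAME: succeed if NAME is on PATH, otherwise report it and fail.
require_cmd() {
  if ! command -v "$1" >/dev/null 2>&1; then
    echo "missing prerequisite: $1" >&2
    return 1
  fi
}

# Example usage (not executed here):
#   for c in terraform kubectl aws-iam-authenticator jq python3; do require_cmd "$c"; done
#   version_ge "$(terraform version -json | jq -r .terraform_version)" 1.2.0 || echo "Terraform is older than v1.2.0"
#   version_ge "$(kubectl version --client -o json | jq -r .clientVersion.gitVersion)" 1.27.0 || echo "kubectl is older than v1.27.0"
#   version_ge "$(python3 -c 'import sys; print("%d.%d" % sys.version_info[:2])')" 3.7 || echo "Python is older than 3.7"
#   python3 -m pip --version >/dev/null || echo "pip is missing"
```

Comparing with `sort -V` rather than as plain strings matters for versions such as `3.10` versus `3.7`, which a lexical comparison would order incorrectly.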

First, get Vishwakarma from GitHub:

```bash
$ git clone https://github.com/getamis/vishwakarma.git
```

Second, before running Terraform, create an AWS EC2 key pair; you will enter its name when the command line prompts for it during the operation.

```bash
# you will be prompted for the key pair name
var.key_pair_name
  The key pair name for access bastion ec2

  Enter a value:
```

Please also create an SSH key pair in ~/.ssh/ named id_rsa and id_rsa.pub. This example uses that key pair for the etcd, Kubernetes master, and Kubernetes node EC2 instances (refer to Here for more detailed information).

```bash
# switch to the kubernetes-cluster example folder
$ cd examples/kubernetes-cluster

# initialize: sync the Terraform modules and install provider plugins
$ terraform init

# create the network infrastructure
$ terraform apply -target=module.network

# create the Kubernetes master components
$ terraform apply -target=module.master

# create the general and spot Kubernetes worker groups
$ terraform apply
```

Verify that the Kubernetes cluster is up (still in the same folder):

```bash
# Get the kubeconfig from S3. The bucket name is demo-elastikube-xxxxxxxx:
# the prefix demo-elastikube is the cluster name defined in main.tf, and the rest is an MD5 hash.

# set up kubeconfig so that kubectl can access the Kubernetes cluster
$ export KUBECONFIG=<path-where-you-put-kubeconfig>/kubeconfig

# check that all 4 workers have registered; this can take several minutes...
$ kubectl get node
NAME                          STATUS   ROLES       AGE   VERSION
ip-10-0-48-247.ec2.internal   Ready    master      9m    v1.27.2
ip-10-0-48-117.ec2.internal   Ready    master      9m    v1.27.2
ip-10-0-66-127.ec2.internal   Ready    on-demand   5m    v1.27.2
ip-10-0-66-127.ec2.internal   Ready    on-demand   6m    v1.27.2
ip-10-0-71-121.ec2.internal   Ready    spot        3m    v1.27.2
ip-10-0-86-182.ec2.internal   Ready    spot        4m    v1.27.2
```

You have now created a Kubernetes cluster like the one in the picture below. Let me briefly explain how Vishwakarma achieves it.
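Before that explanation, one practical note: because workers can take several minutes to register, you may prefer to script the `kubectl get node` check instead of re-running it by hand. This is a minimal sketch that assumes the column layout of the sample output (NAME, STATUS, ROLES, ...) and treats nodes whose role contains `master` or `control-plane` as control-plane nodes; `count_ready_workers` and `wait_for_workers` are hypothetical helpers, not part of Vishwakarma.

```bash
# count_ready_workers: read `kubectl get node` output on stdin (header line included)
# and print how many nodes are Ready and are not control-plane nodes.
count_ready_workers() {
  awk 'NR > 1 && $2 ~ /^Ready/ && $3 !~ /master|control-plane/ { n++ } END { print n + 0 }'
}

# wait_for_workers WANT [TRIES]: poll every 30 seconds until at least WANT workers
# are Ready; give up after TRIES polls (default 40, roughly 20 minutes).
wait_for_workers() {
  local want=$1 tries=${2:-40} ready
  while [ "$tries" -gt 0 ]; do
    ready=$(kubectl get node 2>/dev/null | count_ready_workers)
    echo "ready workers: $ready/$want"
    [ "$ready" -ge "$want" ] && return 0
    tries=$((tries - 1))
    sleep 30
  done
  echo "timed out waiting for $want ready workers" >&2
  return 1
}

# Example (not executed here): wait for the 2 on-demand + 2 spot workers.
#   wait_for_workers 4
```

`NotReady` nodes are not counted, because the STATUS pattern is anchored at the start of the word.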

Vishwakarma includes several major modules:

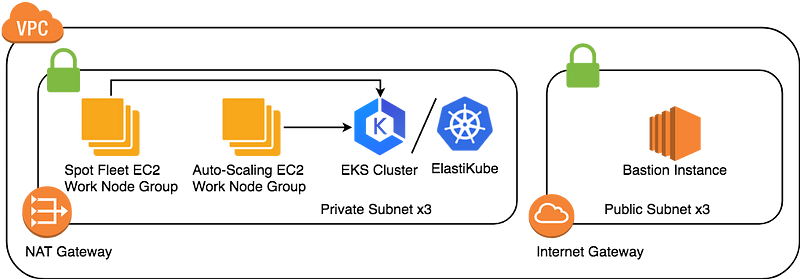

The network module creates one AWS VPC with private and public subnets, plus one EC2 instance, called the bastion host, in a public subnet, so that you can reach the resources hosted in the private subnets. Refer to aws/network for the detailed variable inputs.
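Resources in the private subnets are reached by jumping through the bastion host. A minimal sketch using OpenSSH's `-J` (ProxyJump) option; the helper name `ssh_via_bastion` is hypothetical, the default login user `core` is an assumption (it is the default user on CoreOS Container Linux, but your bastion image may use a different one), and setting `DRY_RUN=1` only prints the command instead of running it.

```bash
# ssh_via_bastion BASTION_HOST PRIVATE_IP [USER] [KEY]
# Hypothetical helper: SSH to an instance in a private subnet, jumping through
# the bastion host with OpenSSH's -J (ProxyJump). USER defaults to "core"
# (an assumption) and KEY to ~/.ssh/id_rsa, the key pair this example uses.
ssh_via_bastion() {
  local bastion=$1 target=$2 user=${3:-core} key=${4:-$HOME/.ssh/id_rsa}
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo ssh -i "$key" -J "$user@$bastion" "$user@$target"
  else
    ssh -i "$key" -J "$user@$bastion" "$user@$target"
  fi
}

# Example (not executed here; the bastion address is a placeholder):
#   ssh_via_bastion 203.0.113.10 10.0.48.247
```

Loading the key into `ssh-agent` (`ssh-add ~/.ssh/id_rsa`) makes it available to both the bastion hop and the target host.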

The ElastiKube module creates the Kubernetes control plane. Terraform takes care of the complicated Kubernetes components, and the module takes about 10 to 15 minutes to complete. Refer to aws/elastikube for the detailed variable inputs.
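Because the control plane takes 10 to 15 minutes to come up, a generic retry loop with a timeout is handy when scripting these steps. A minimal sketch; `wait_until` is a hypothetical helper, and probing the API server with `kubectl get --raw=/healthz` is just one possible readiness check, not something Vishwakarma itself runs.

```bash
# wait_until TIMEOUT INTERVAL COMMAND [ARGS...]
# Re-run COMMAND every INTERVAL seconds until it succeeds. Fail once about
# TIMEOUT seconds of sleeping have accumulated (time spent running COMMAND
# itself is not counted).
wait_until() {
  local timeout=$1 interval=$2 waited=0
  shift 2
  until "$@"; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out after ${waited}s waiting for: $*" >&2
      return 1
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
}

# Example (not executed here): allow the control plane up to 20 minutes,
# probing the API server's /healthz endpoint every 30 seconds.
#   wait_until 1200 30 kubectl get --raw=/healthz
```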

The kube-worker module creates an AWS Auto Scaling group running CoreOS Container Linux and uses Ignition to provision the nodes and register them with ElastiKube automatically.

Because it uses an AWS launch template, you can choose between spot and on-demand instances by changing a variable. Refer to aws/kube-worker for the detailed variable inputs.
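As noted in the prerequisites, cluster access is integrated with AWS IAM, and an `aws-auth` ConfigMap has to be created with kubectl once the cluster is complete. The sketch below renders such a ConfigMap, assuming the EKS-style `mapRoles` format read by aws-iam-authenticator; the helper name `render_aws_auth`, the role ARN, and the user and group values are hypothetical, so check the mapping the Vishwakarma example actually expects before applying anything.

```bash
# render_aws_auth ROLE_ARN USERNAME GROUP
# Hypothetical helper: print an aws-auth ConfigMap (EKS-style mapRoles format)
# that maps one IAM role to one Kubernetes user name and group.
render_aws_auth() {
  cat <<EOF
apiVersion: v1
kind: ConfigMap
metadata:
  name: aws-auth
  namespace: kube-system
data:
  mapRoles: |
    - rolearn: $1
      username: $2
      groups:
        - $3
EOF
}

# Example (not executed here; the account ID and role name are placeholders):
#   render_aws_auth arn:aws:iam::123456789012:role/demo-admin demo-admin system:masters \
#     | kubectl apply -f -
```

Printing the manifest first and piping it to `kubectl apply -f -` lets you review the mapping before it reaches the cluster.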

There are several ways to contribute to this project:

- Find a bug: create an issue in our GitHub issue tracker.

- Fix a bug: check our issue tracker, leave a comment, and send us a pull request with the fix.

- Propose a new feature: leave your idea in the issue tracker, discuss it with us, and then send a pull request!

The Changelog captures all important release notes.

This project is licensed under the Apache 2.0 License - see the LICENSE file for details.