Zeta is a tool for automatically generating a graphical DSL from a set of text DSLs. Combined with a suitable meta-model, these text definitions serve as input to a generator that creates a web-based graphical editor.

The basic structure is provided by the following DSLs, which were designed specifically for creating graphical DSLs:

- Diagram - The Diagram language is the base DSL, which is sufficient for very simple domain-specific graphical editors. It defines the mapping of simple shapes, styles, and the behavior of elements to meta-model classes.

- Shape - The Shape language is used to define forms of any complexity. This DSL offers several basic forms, such as rectangles, ellipses and polygons, of any width and depth. An element can be built from any number and combination of these basic forms.

- Style - The Style language provides the functionality for defining different representations (layouts or design features) of elements. It is similar to Cascading Style Sheets (CSS).

- Concept - The Concept language is a graphical DSL, also called the meta-model. It defines the underlying data structure of later model instances.
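To make the division of labor between the four DSLs concrete, here is a minimal in-memory sketch in Python. It is not Zeta's syntax or API: the class names (`MClass`, `Style`, `BasicForm`, `Shape`, `Diagram`), their fields and the parent-fallback rule for styles are hypothetical and serve only to illustrate how a meta-model class (Concept) is mapped to a composed form (Shape) and a representation (Style) by a Diagram definition.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple, Union

# Hypothetical stand-ins for the four DSLs; NOT Zeta's real syntax or API.


@dataclass
class MClass:
    """Concept: a meta-model class with named, typed attributes."""
    name: str
    attributes: Dict[str, str] = field(default_factory=dict)


@dataclass
class Style:
    """Style: visual properties; a missing property falls back to the parent style."""
    name: str
    props: Dict[str, str] = field(default_factory=dict)
    parent: Optional["Style"] = None

    def resolve(self, key: str) -> Optional[str]:
        # Walk up the parent chain until some style defines the property
        # (an assumed, simplified stand-in for CSS-like cascading).
        style: Optional[Style] = self
        while style is not None:
            if key in style.props:
                return style.props[key]
            style = style.parent
        return None


@dataclass
class BasicForm:
    """One basic form offered by the Shape language."""
    kind: str  # e.g. "rectangle", "ellipse", "polygon"
    width: int
    height: int


@dataclass
class Shape:
    """Shape: an element composed of basic forms and/or nested shapes."""
    name: str
    parts: List[Union[BasicForm, "Shape"]] = field(default_factory=list)

    def count_forms(self) -> int:
        # Count basic forms recursively through nested shapes.
        return sum(p.count_forms() if isinstance(p, Shape) else 1 for p in self.parts)


@dataclass
class Diagram:
    """Diagram: maps meta-model class names to a (shape, style) pair."""
    mappings: Dict[str, Tuple[Shape, Style]] = field(default_factory=dict)

    def add_mapping(self, mclass: MClass, shape: Shape, style: Style) -> None:
        self.mappings[mclass.name] = (shape, style)


# Usage: a highlighted "State" node drawn as an ellipse with a nested label box.
base = Style("base", {"line-color": "black", "fill": "white"})
highlight = Style("highlight", {"fill": "yellow"}, parent=base)

label_box = Shape("labelBox", [BasicForm("rectangle", 80, 20)])
node = Shape("node", [BasicForm("ellipse", 100, 60), label_box])

state = MClass("State", {"name": "String"})
diagram = Diagram()
diagram.add_mapping(state, node, highlight)

print(highlight.resolve("fill"))        # yellow
print(highlight.resolve("line-color"))  # black
print(node.count_forms())               # 2
```

Keeping shapes, styles and meta-model classes as separate definitions that are only joined in the diagram mapping mirrors the separation of the DSLs: the same shape can be reused with different styles, and vice versa.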

To find out more, please check out the Snowplow website and the Snowplow wiki.

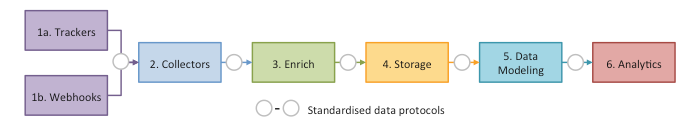

The repository structure follows the conceptual architecture of Snowplow, which consists of six loosely coupled sub-systems connected by five standardized data protocols/formats.

To briefly explain these six sub-systems:

- Trackers fire Snowplow events. Currently we have 12 trackers, covering web, mobile, desktop, server and IoT.

- Collectors receive Snowplow events from trackers. Currently we have three different event collectors, sinking events to Amazon S3, Apache Kafka or Amazon Kinesis.

- Enrich cleans up the raw Snowplow events, enriches them and puts them into storage. Currently we have a Hadoop-based enrichment process and a Kinesis- or Kafka-based process.

- Storage is where the Snowplow events live. Currently we store the Snowplow events in a flat-file structure on S3 and in the Redshift and Postgres databases.

- Data modeling is where event-level data is joined with other data sets and aggregated into smaller data sets, and where business logic is applied. This produces a clean set of tables that make the data easier to analyze. We have data models for Redshift and Looker.

- Analytics are performed on the Snowplow events or on the aggregate tables.
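The flow through the six sub-systems can be sketched as a chain of plain functions. This is a toy model under stated assumptions: the event fields, the `geo` and `accounts` lookup tables and every function name are hypothetical, and none of it reflects Snowplow's actual event format or any tracker, collector or enrichment API. Lists stand in for the sinks (S3, Kafka, Kinesis) and for the storage tables.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, Iterable, List

# Hypothetical toy pipeline; NOT Snowplow's real event format or API.


@dataclass
class RawEvent:
    user_id: str
    event: str
    platform: str


@dataclass
class EnrichedEvent:
    user_id: str
    event: str
    platform: str
    country: str


def track(user_id: str, event: str, platform: str) -> RawEvent:
    """Tracker: fires a raw event."""
    return RawEvent(user_id, event, platform)


def collect(events: Iterable[RawEvent], sink: List[RawEvent]) -> None:
    """Collector: receives events and sinks them (a list stands in for S3/Kafka/Kinesis)."""
    sink.extend(events)


def enrich(raw: Iterable[RawEvent], geo: Dict[str, str]) -> List[EnrichedEvent]:
    """Enrich: cleans up raw events and adds a derived field."""
    enriched = []
    for e in raw:
        if not e.user_id:  # clean-up: discard events without a user id
            continue
        enriched.append(EnrichedEvent(
            user_id=e.user_id,
            event=e.event.lower(),                  # clean-up: normalize event names
            platform=e.platform,
            country=geo.get(e.user_id, "unknown"),  # enrichment: lookup table
        ))
    return enriched


def store(events: Iterable[EnrichedEvent], table: List[EnrichedEvent]) -> None:
    """Storage: appends enriched events to an event-level table."""
    table.extend(events)


def model(table: Iterable[EnrichedEvent], accounts: Dict[str, str]) -> Dict[str, int]:
    """Data modeling: joins events with another data set, then aggregates (events per plan)."""
    return dict(Counter(accounts.get(e.user_id, "unknown") for e in table))


def top(aggregate: Dict[str, int]) -> str:
    """Analytics: a query on the aggregate table (the plan with the most events)."""
    return max(aggregate, key=aggregate.__getitem__)


# Usage: four tracked events, one of them malformed.
collector_sink: List[RawEvent] = []
collect([
    track("u1", "Page_View", "web"),
    track("u2", "page_view", "mobile"),
    track("", "page_view", "web"),  # malformed: no user id, dropped by enrich
    track("u1", "purchase", "web"),
], collector_sink)

events_table: List[EnrichedEvent] = []
store(enrich(collector_sink, {"u1": "DE"}), events_table)

per_plan = model(events_table, {"u1": "pro", "u2": "free"})
print(per_plan)       # {'pro': 2, 'free': 1}
print(top(per_plan))  # pro
```

Because each stage only consumes the output of the previous one, any stage can be swapped (for example, a different sink or storage target) without touching the others, which is the point of keeping the sub-systems loosely coupled.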

For more information on the current Snowplow architecture, please see the Technical architecture documentation.

Assuming git and SBT are installed:

```bash
$ git clone https://github.com/snowplow/snowplow.git
$ cd snowplow/3-enrich/scala-common-enrich
$ sbt test
```

| Technical Docs | Setup Guide | Roadmap | Contributing |
|---|---|---|---|

We're committed to a loosely coupled architecture for Snowplow and would love to get your contributions within each of the six sub-systems.

If you would like help implementing a new tracker, adding an additional enrichment or loading Snowplow events into an alternative database, check out our Contributing page on the wiki!

Check out the Talk to us page on our wiki.

Snowplow is copyright 2012-2019 Snowplow Analytics Ltd.

Licensed under the Apache License, Version 2.0 (the "License"); you may not use this software except in compliance with the License.

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License.