This project, entitled “Development of a Mobile Application to Control the P-Guard Robot”, was part of my internship at Enova Robotics.

The app offers a fully remote solution for security guards to control the robot, send command requests and receive responses and alerts in real time.

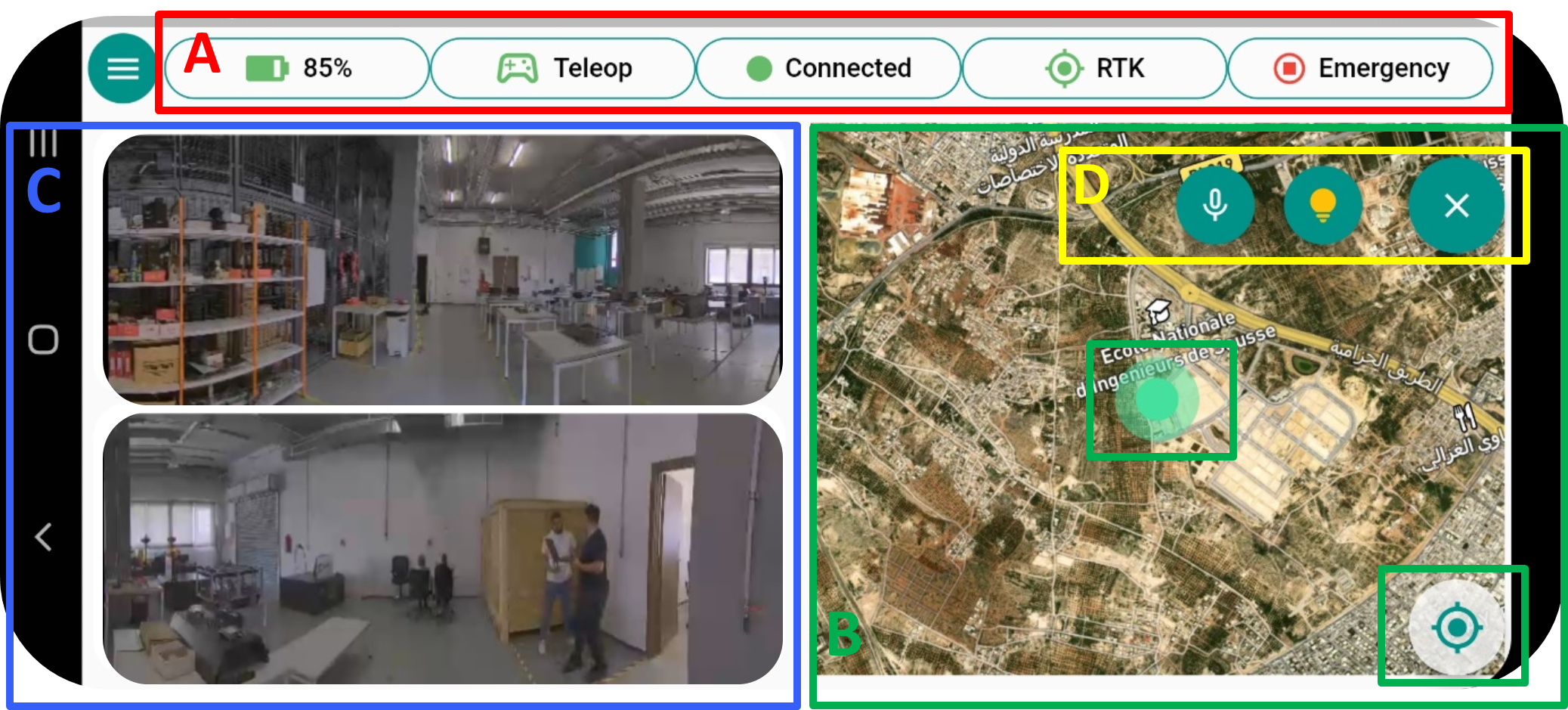

The frames drawn on some of the screens are not part of the UI; they only highlight each screen's components.

The Home screen consists of four main parts:

• The robot's status panel (A).

• The map and the robot's current position (B).

• The cameras' video streams (C).

• The robot's lights and alerts command menu (D).

A mission is a set of GPS points that define a path which the robot navigates autonomously. To create a mission, the user enters the new mission's name (A), selects either Joystick mode or Map mode, and taps the “Start” button (B) to start recording.

In Joystick mode, the user steers the robot with the app's joystick (A). For each point they want to add (B), they enter the point's coordinates (Y), set its speed (X) where each tap adds 1, confirm the point (A), and finally save the scenario (B).

In Map mode, the user long-presses the map where they want to add a point (A), then specifies the speed (B). Finally, they can save or cancel the new mission (C).
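As an illustration of the mission concept described above, here is a minimal Dart sketch of a mission as a named, ordered list of GPS points, each with a speed. The class names, field names, and sample coordinates are assumptions made for this example and are not taken from the app's source.

```dart
/// One recorded point of a mission. Field names are hypothetical.
class Waypoint {
  final double latitude;
  final double longitude;
  final int speed;

  const Waypoint(this.latitude, this.longitude, {this.speed = 0});
}

/// A named, ordered path that the robot follows autonomously.
class Mission {
  final String name;
  final List<Waypoint> _points = [];

  Mission(this.name);

  /// Read-only view so callers cannot reorder the path by accident.
  List<Waypoint> get points => List.unmodifiable(_points);

  /// Appends a confirmed point to the end of the path.
  void addPoint(Waypoint point) => _points.add(point);
}

void main() {
  // Illustrative coordinates only.
  final mission = Mission('perimeter')
    ..addPoint(const Waypoint(35.8256, 10.6084, speed: 2))
    ..addPoint(const Waypoint(35.8260, 10.6090, speed: 3));
  print('${mission.name}: ${mission.points.length} points');
}
```

Keeping the point list private and exposing an unmodifiable view means the path can only grow through `addPoint`, which mirrors the "confirm each point, then save" flow of both recording modes.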

A scenario consists of one or more missions.

The Scenarios screen lets users see all available patrol scenarios (A). When a scenario is selected, its path is drawn on the map (B), and the user can then launch it and track the robot's movement on the map in real time. The Add Scenario button (C) takes the user to the Create Scenario screen.

This shows only the first part of the Create Scenario screen. The user first enters the scenario's name (A). They can also check the random option, in which case the scenario's missions are played in random order, and set the scenario's iteration to either 1 (loop) or 0 (B).

This shows the second part of the Create Scenario screen. The user can add one or more missions (A) and, for each mission, must specify a pause time: the robot pauses for x seconds between missions (A).
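The scenario options described above (name, random order, iteration, and a pause per mission) can be sketched as a small data structure. This is only an illustration: the class and field names are hypothetical, and treating iteration 0 as "do not loop" is an assumption, since the text only states that 1 means loop.

```dart
import 'dart:math';

/// A mission reference plus the pause that follows it. Names are hypothetical.
class ScenarioStep {
  final String missionName;
  final Duration pause;

  const ScenarioStep(this.missionName, this.pause);
}

class Scenario {
  final String name;
  final bool random; // play the missions in random order
  final bool loop; // iteration 1 in the UI; 0 is assumed to mean "no loop"
  final List<ScenarioStep> steps;

  const Scenario(this.name, this.steps,
      {this.random = false, this.loop = false});

  /// Returns the steps in the order used for one pass through the scenario.
  List<ScenarioStep> playOrder([Random? rng]) {
    final order = List<ScenarioStep>.of(steps);
    if (random) order.shuffle(rng ?? Random());
    return order;
  }
}

void main() {
  const scenario = Scenario('night patrol', [
    ScenarioStep('gate', Duration(seconds: 10)),
    ScenarioStep('parking', Duration(seconds: 5)),
  ]);
  for (final step in scenario.playOrder()) {
    print('${step.missionName}, then pause ${step.pause.inSeconds}s');
  }
}
```

`playOrder` copies the list before shuffling, so the scenario's stored mission order is never changed by a random run.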

Live streams and control of the robot's cameras.

The Camera screen shows the video streams from the robot's two 360° cameras (A). The user can rotate these cameras using the slider (B), with the option to set the rotation direction to either front or back (C). They can also zoom in or out (D).

The user can double-tap a video to enter full-screen mode. There, they can zoom in or out by pinching the screen (A) and exit full-screen mode (B).

The following figure shows the Settings screen, where the user can check their account information (A) and change their app settings. In particular, the user can turn notifications on or off (B) to receive the different types of alert messages (mission creation, the emergency button changed, scenario launched…), and log out of the app (C).

The project follows the MVC pattern, and I used GetX for state management. All UI components are in the view folder, and business logic is handled in the controller folder. The robot data model is used to parse the incoming data stream.

```
└── lib/
    ├── controller/
    │   └── business logic layer
    ├── model/
    │   └── data layer
    ├── view/
    │   └── presentation layer
    ├── services/
    │   └── helper classes
    └── constant
```
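To illustrate how a model in the data layer might parse the incoming data stream, here is a minimal pure-Dart sketch. The JSON keys (`battery`, `lat`, `lng`), the class name `RobotStatus`, and the assumption that the robot sends JSON text frames are all hypothetical; the app's real wire format is not described here.

```dart
import 'dart:convert';

/// Snapshot of the robot's state. All field names are hypothetical.
class RobotStatus {
  final int battery;
  final double latitude;
  final double longitude;

  const RobotStatus({
    required this.battery,
    required this.latitude,
    required this.longitude,
  });

  /// Builds a model from a decoded JSON object (assumed key names).
  factory RobotStatus.fromJson(Map<String, dynamic> json) => RobotStatus(
        battery: json['battery'] as int,
        latitude: (json['lat'] as num).toDouble(),
        longitude: (json['lng'] as num).toDouble(),
      );
}

/// Turns raw text frames (for example, from a WebSocket) into typed models.
Stream<RobotStatus> parseStatusStream(Stream<String> frames) => frames.map(
    (frame) => RobotStatus.fromJson(jsonDecode(frame) as Map<String, dynamic>));

Future<void> main() async {
  // A single made-up frame stands in for the live connection.
  final frames =
      Stream.fromIterable(['{"battery": 87, "lat": 35.82, "lng": 10.6}']);
  await for (final status in parseStatusStream(frames)) {
    print('battery ${status.battery}% at '
        '${status.latitude}, ${status.longitude}');
  }
}
```

In an MVC layout like the one above, a controller could subscribe to such a stream and expose the latest `RobotStatus` to the views, keeping parsing out of the presentation layer.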

• Log in and sign up using email and password.

• Track the connected robot's current state and position.

• Add, update, and delete missions.

• Create and launch patrol scenarios.

• Monitor and control the robot's cameras.

• Enable and receive alert notifications.

To learn more about GetX:

https://blog.logrocket.com/ultimate-guide-getx-state-management-flutter/

To learn more about the MVC pattern:

https://medium.com/follow-flutter/flutter-mvc-at-last-275a0dc1e730

To learn more about WebSockets in Flutter:

https://blog.logrocket.com/using-websockets-flutter/

PS.

If you're interested, I can share my report, which outlines the full development process of the app.