
Integration

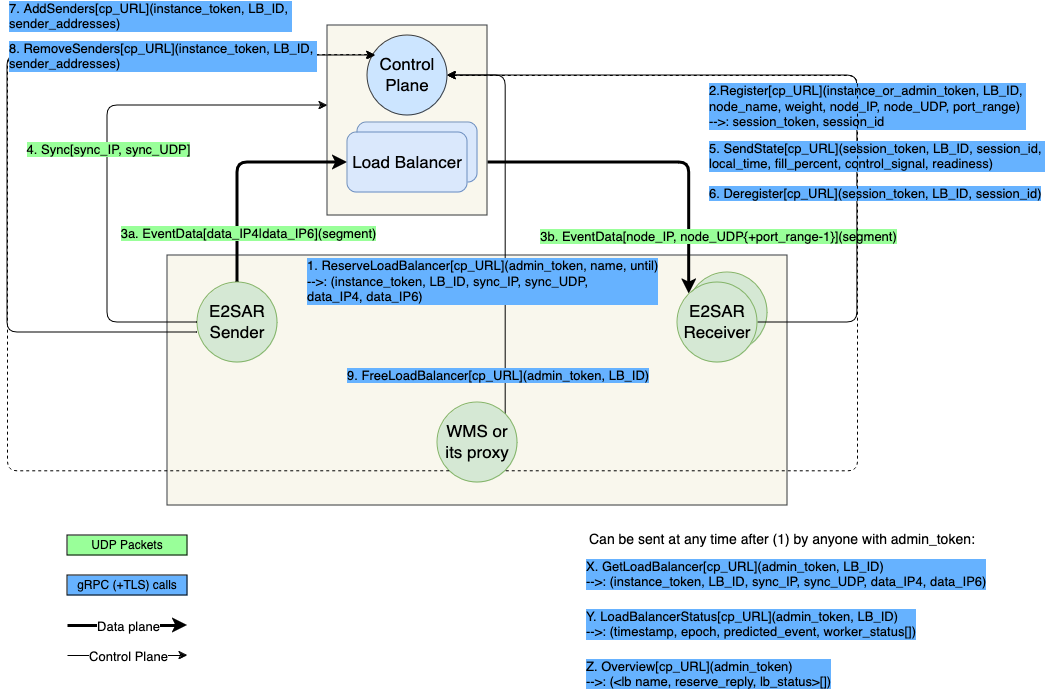

E2SAR uses a combination of UDP packets and gRPC+TLS to communicate with the load balancer control plane and data plane.

All interactions are captured in the figure above.

Using the load balancer begins with (1) reserving a load balancer, based on out-of-band information such as the EJFAT URI of the Control Plane agent with an embedded admin token. This can be done by the Workflow Management System (WMS) or its proxy.

Once the load balancer is reserved and the details of the reservation are communicated out of band to the worker nodes via another EJFAT URI, the workers can register themselves with the control plane (2). Alternatively, the registration can be performed by the WMS or its proxy, assuming the details of the workers are known to it.

All senders must be registered with the Control Plane using the AddSenders gRPC call (7); otherwise their traffic is rejected at the Load Balancer. When a sender is no longer needed, it can be unregistered using the RemoveSenders call (8). Either call can be invoked by the sender node or by the WMS.

At this point data can begin traversing the dataplane in segments (3a and 3b).

The sender must periodically send Sync messages (4) to the Control Plane. Similarly, the receiving nodes (or the WMS on their behalf) must periodically use the SendState gRPC call (5) to update queue occupancy information in the control plane.

After the workflow completes, or if the worker software fails, the workers can be deregistered (6), either by themselves or via the WMS. The load balancer can then be freed (9).

In addition, at any time, anyone holding an admin token can request a repeat of the reservation reply via the GetLoadBalancer call (X), get detailed information about a load balancer and its worker node clients via LoadBalancerStatus (Y), and get an overview of all provisioned load balancers and their associated worker node clients via the Overview call (Z).
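The ordering rules above can be illustrated with a small in-memory model. This is a hypothetical sketch, not the E2SAR API: `MockControlPlane` and all of its method names are invented for illustration, and it only mimics the bookkeeping (reservations, registered workers, allowed senders), not gRPC, tokens or timing.

```python
class MockControlPlane:
    """Hypothetical in-memory model of the EJFAT workflow (no gRPC, no tokens)."""

    def __init__(self):
        self._next_id = 1
        self.workers = {}   # lb_id -> set of registered worker names
        self.senders = {}   # lb_id -> set of sender IPs allowed to send data

    def _check(self, lb_id):
        if lb_id not in self.workers:
            raise KeyError(f"load balancer {lb_id} is not reserved")

    def reserve_lb(self):                       # step (1)
        lb_id = str(self._next_id)
        self._next_id += 1
        self.workers[lb_id] = set()
        self.senders[lb_id] = set()
        return lb_id

    def register_worker(self, lb_id, name):     # step (2)
        self._check(lb_id)
        self.workers[lb_id].add(name)

    def add_senders(self, lb_id, ips):          # step (7)
        self._check(lb_id)
        self.senders[lb_id].update(ips)

    def remove_senders(self, lb_id, ips):       # step (8)
        self._check(lb_id)
        self.senders[lb_id].difference_update(ips)

    def accept_segment(self, lb_id, src_ip):    # steps (3a)/(3b)
        # Data from a sender that was never added (or was removed) is rejected.
        self._check(lb_id)
        return src_ip in self.senders[lb_id]

    def deregister_worker(self, lb_id, name):   # step (6)
        self._check(lb_id)
        self.workers[lb_id].discard(name)

    def free_lb(self, lb_id):                   # step (9)
        self._check(lb_id)
        del self.workers[lb_id]
        del self.senders[lb_id]


if __name__ == "__main__":
    cp = MockControlPlane()
    lb = cp.reserve_lb()
    cp.register_worker(lb, "worker-1")
    print(cp.accept_segment(lb, "192.0.2.10"))  # False: sender not added yet
    cp.add_senders(lb, ["192.0.2.10"])
    print(cp.accept_segment(lb, "192.0.2.10"))  # True
    cp.deregister_worker(lb, "worker-1")
    cp.free_lb(lb)
```

The point of the model is the dependency order: nothing can be registered against an unreserved load balancer, and segments are only accepted from senders added via step (7).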

EJFAT defines its own URI format to convey necessary information to different actors in different phases of the workflow. The general format of the URI is:

`ejfat[s]://[<token>@]<cp_host>:<cp_port>/[lb/<lb_id>][?[data=<data_host>[:<data_port>]][&sync=<sync_host>:<sync_port>][&sessionid=<session_id>]]`

Where:

- `ejfat` or `ejfats` is the scheme name, with `ejfats` requiring the use of TLS when speaking gRPC to the Control Plane (CP)
- `token` is an admin, instance or session token, depending on the context (discussed below), needed to communicate over gRPC (it is not used for any other communications)
- `cp_host:cp_port` is either an address or a name of the CP gRPC server and the port it is listening on
- `lb_id` is the identifier of the virtual load balancer that was reserved (discussed below)
- `data_host` and optional `data_port` are the address (hostnames are not allowed) and port of the Load Balancer; this is where the segments of events are sent. When not specified, `data_port` defaults to 19522. Typically the URI contains two `data=` elements, one specifying the IPv4 and the other the IPv6 address of the Load Balancer.
- `sync_host:sync_port` is the address and port of the Control Plane where UDP Sync packets must be sent by the sending site. Note that this is not required to be the same as the hostname or address used for gRPC communications.
- `session_id` is the session ID issued when a worker is registered; see the discussion below.
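As a rough illustration of how the fields above map onto a standard URL parser, the following sketch uses `urllib.parse` and `ipaddress` from the Python standard library. It is not E2SAR's `EjfatURI` parser; `parse_ejfat_uri` and `ParsedEjfatUri` are hypothetical names, and it assumes that an IPv6 `data=` address carrying an explicit port is written in square brackets (e.g. `[2001:db8::1]:19522`), since a bare IPv6 literal followed by `:port` is ambiguous.

```python
from dataclasses import dataclass, field
from ipaddress import ip_address
from typing import List, Optional, Tuple
from urllib.parse import parse_qs, urlsplit

DEFAULT_DATA_PORT = 19522  # used when data= omits the port


@dataclass
class ParsedEjfatUri:
    use_tls: bool                      # True for ejfats://
    token: Optional[str]
    cp_host: str
    cp_port: int
    lb_id: Optional[str] = None
    data: List[Tuple[str, int]] = field(default_factory=list)
    sync: Optional[Tuple[str, int]] = None
    session_id: Optional[str] = None


def _addr_port(value: str, default_port: Optional[int]) -> Tuple[str, int]:
    """Split 'addr[:port]'; an IPv6 address with a port must be bracketed (assumption)."""
    if value.startswith("["):
        host, _, rest = value[1:].partition("]")
        port = int(rest[1:]) if rest.startswith(":") else default_port
    else:
        try:
            ip_address(value)              # bare IPv4/IPv6 literal, no port
            host, port = value, default_port
        except ValueError:
            host, _, port_str = value.rpartition(":")
            port = int(port_str)
    ip_address(host)                       # raises ValueError for hostnames
    if port is None:
        raise ValueError(f"port required in {value!r}")
    return host, port


def parse_ejfat_uri(uri: str) -> ParsedEjfatUri:
    parts = urlsplit(uri)
    if parts.scheme not in ("ejfat", "ejfats"):
        raise ValueError(f"unsupported scheme {parts.scheme!r}")
    if not parts.hostname or parts.port is None:
        raise ValueError("control plane host and port are required")

    # Path is either empty or 'lb/<lb_id>' (a trailing '/' is tolerated).
    segments = [s for s in parts.path.split("/") if s]
    lb_id = None
    if segments:
        if len(segments) != 2 or segments[0] != "lb":
            raise ValueError(f"unexpected path {parts.path!r}")
        lb_id = segments[1]

    query = parse_qs(parts.query)
    data = [_addr_port(v, DEFAULT_DATA_PORT) for v in query.get("data", [])]
    sync = _addr_port(query["sync"][0], None) if "sync" in query else None
    session_id = query.get("sessionid", [None])[0]

    return ParsedEjfatUri(
        use_tls=parts.scheme == "ejfats",
        token=parts.username,
        cp_host=parts.hostname,
        cp_port=parts.port,
        lb_id=lb_id,
        data=data,
        sync=sync,
        session_id=session_id,
    )


if __name__ == "__main__":
    u = parse_ejfat_uri("ejfats://tok@cp.example.org:18347/lb/3"
                        "?data=192.0.2.1&data=[2001:db8::1]:19523&sync=192.0.2.2:19010")
    print(u.lb_id, u.data, u.sync)
    # 3 [('192.0.2.1', 19522), ('2001:db8::1', 19523)] ('192.0.2.2', 19010)
```

Note that the default data port is applied per `data=` entry, so an IPv4 and an IPv6 entry can carry different ports.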

Different control plane commands use different portions of the EJFAT URI. This section explains which portions of the URI are relevant at each stage, using the step numbers from the diagram above.

Step 1 (ReserveLoadBalancer): `ejfat[s]://<admin token>@<cp name or IPv4 or IPv6 address>:<cp port>/`

Step 2 (Register worker node): `ejfat[s]://<instance or admin token>@<cp name or IPv4 or IPv6 address>:<cp port>/lb/<lbid>`

- The instance token and lbid come from Step 1
- Note that this can be done from the worker node or centrally by the WMS

Steps 3a, 3b, 4, 7, and 8 (sender sending data and sync packets and using the AddSenders/RemoveSenders gRPC calls): `ejfat[s]://<instance or admin token>@<cp name or IPv4 or IPv6 address>:<cp port>/lb/<lbid>?sync=<sync IP address>:<sync UDP port>&data=<data IPv4>[&data=<data IPv6>]`

- Note that the instance token, the CP name or address, and the lb id are not actually used unless the sender makes the Step 7 and 8 AddSenders/RemoveSenders gRPC calls
- Note that up to two dataplane addresses of the load balancer (where to send the data) can be specified: an IPv4 and an IPv6 address
- Note that Steps 7 and 8 (AddSenders/RemoveSenders) can be done from the sender or centrally by the WMS

Step 5 (SendState from worker node): `ejfat[s]://<session or instance token>@<cp name or IPv4 or IPv6 address>:<cp port>/lb/<lbid>?sessionid=<session id>`

- The session id and session token come from the reply to Step 2
- Sync and data addresses are not relevant

Step 6 (Deregister worker): `ejfat[s]://<session or admin token>@<cp name or IPv4 or IPv6 address>:<cp port>/lb/<lbid>?sessionid=<session id>`

- The session id and session token come from Step 2
- Sync and data addresses are not relevant
- Note that this can be done from the worker node or centrally by the WMS

Step 9 (FreeLoadBalancer): `ejfat[s]://<admin token>@<cp name or IPv4 or IPv6 address>:<cp port>/lb/<lbid>/`

Steps X and Y (GetLoadBalancer and LoadBalancerStatus): `ejfat[s]://<instance or admin token>@<cp name or IPv4 or IPv6 address>:<cp port>/lb/<lbid>`

- instance token and lbid come from Step 1

Step Z (Overview): `ejfat[s]://<admin token>@<cp name or IPv4 or IPv6 address>:<cp port>/`

- same as in Step 1
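The step-to-URI rules in the list above amount to a lookup table. The sketch below encodes that table and assembles a URI for a given step; `StepRule`, `STEP_RULES` and `build_uri` are hypothetical helpers, the step keys simply follow the figure labels, and the host, port and token values in the tests are placeholders.

```python
from typing import FrozenSet, NamedTuple, Optional, Sequence


class StepRule(NamedTuple):
    tokens: FrozenSet[str]   # token kinds accepted for this step
    needs_lb: bool           # path must contain lb/<lb_id>
    needs_dataplane: bool    # sync= and data= must be present
    needs_session: bool      # sessionid= must be present


# Transcribed from the step list above.
STEP_RULES = {
    "1":       StepRule(frozenset({"admin"}), False, False, False),
    "2":       StepRule(frozenset({"instance", "admin"}), True, False, False),
    "3/4/7/8": StepRule(frozenset({"instance", "admin"}), True, True, False),
    "5":       StepRule(frozenset({"session", "instance"}), True, False, True),
    "6":       StepRule(frozenset({"session", "admin"}), True, False, True),
    "9":       StepRule(frozenset({"admin"}), True, False, False),
    "X/Y":     StepRule(frozenset({"instance", "admin"}), True, False, False),
    "Z":       StepRule(frozenset({"admin"}), False, False, False),
}


def build_uri(step: str, token_kind: str, token: str, cp_host: str, cp_port: int,
              *, tls: bool = True, lb_id: Optional[str] = None,
              data: Sequence[str] = (), sync: Optional[str] = None,
              session_id: Optional[str] = None) -> str:
    rule = STEP_RULES[step]
    if token_kind not in rule.tokens:
        raise ValueError(f"step {step} does not accept a {token_kind} token")
    host = f"[{cp_host}]" if ":" in cp_host else cp_host  # bracket IPv6 literals
    uri = f"{'ejfats' if tls else 'ejfat'}://{token}@{host}:{cp_port}/"
    query = []
    if rule.needs_lb:
        if lb_id is None:
            raise ValueError(f"step {step} needs lb_id")
        uri += f"lb/{lb_id}"
    if rule.needs_dataplane:
        if sync is None or not 1 <= len(data) <= 2:
            raise ValueError(f"step {step} needs sync and one or two data addresses")
        query.append(f"sync={sync}")
        query.extend(f"data={d}" for d in data)
    if rule.needs_session:
        if session_id is None:
            raise ValueError(f"step {step} needs session_id")
        query.append(f"sessionid={session_id}")
    return uri + ("?" + "&".join(query) if query else "")
```

For example, `build_uri("5", "session", "s", "cp", 18347, lb_id="3", session_id="abc")` yields `ejfats://s@cp:18347/lb/3?sessionid=abc`. This sketch omits the trailing slash after `lb/<lbid>` for every step.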

Note that the `EjfatURI` object in the code can maintain multiple tokens internally and knows which token to use in which situation when making gRPC calls. The `Segmenter` and `Reassembler` classes also know how to ask the `EjfatURI` object for the information they need. When constructing a new `EjfatURI` object from a URI string (or environment variable), a constructor flag tells the object how to interpret the passed-in token (admin, instance or session).
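One way to picture the multi-token behavior described above is a store that holds whichever tokens are available and picks an acceptable one per operation. This is a hypothetical sketch, not the `EjfatURI` implementation: `TokenStore`, `ACCEPTED` and the operation names are illustrative, the accepted token kinds are transcribed from the step list, and preferring the narrowest acceptable token (session, then instance, then admin) is an assumption.

```python
from typing import Dict

# Accepted token kinds per operation, narrowest first (ordering is an assumption).
ACCEPTED = {
    "ReserveLoadBalancer": ["admin"],
    "FreeLoadBalancer": ["admin"],
    "Overview": ["admin"],
    "RegisterWorker": ["instance", "admin"],
    "AddSenders": ["instance", "admin"],
    "RemoveSenders": ["instance", "admin"],
    "GetLoadBalancer": ["instance", "admin"],
    "LoadBalancerStatus": ["instance", "admin"],
    "SendState": ["session", "instance"],
    "DeregisterWorker": ["session", "admin"],
}


class TokenStore:
    """Hypothetical holder of admin/instance/session tokens."""

    def __init__(self) -> None:
        self._tokens: Dict[str, str] = {}

    def set(self, kind: str, value: str) -> None:
        if kind not in ("admin", "instance", "session"):
            raise ValueError(f"unknown token kind: {kind}")
        self._tokens[kind] = value

    def token_for(self, operation: str) -> str:
        # Return the first held token whose kind this operation accepts.
        for kind in ACCEPTED[operation]:
            if kind in self._tokens:
                return self._tokens[kind]
        raise PermissionError(f"no held token allows {operation}")
```

A worker holding only an instance token and a session token can call `SendState` and `RegisterWorker`, but `token_for("FreeLoadBalancer")` raises `PermissionError`, mirroring the rule that freeing requires an admin token.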

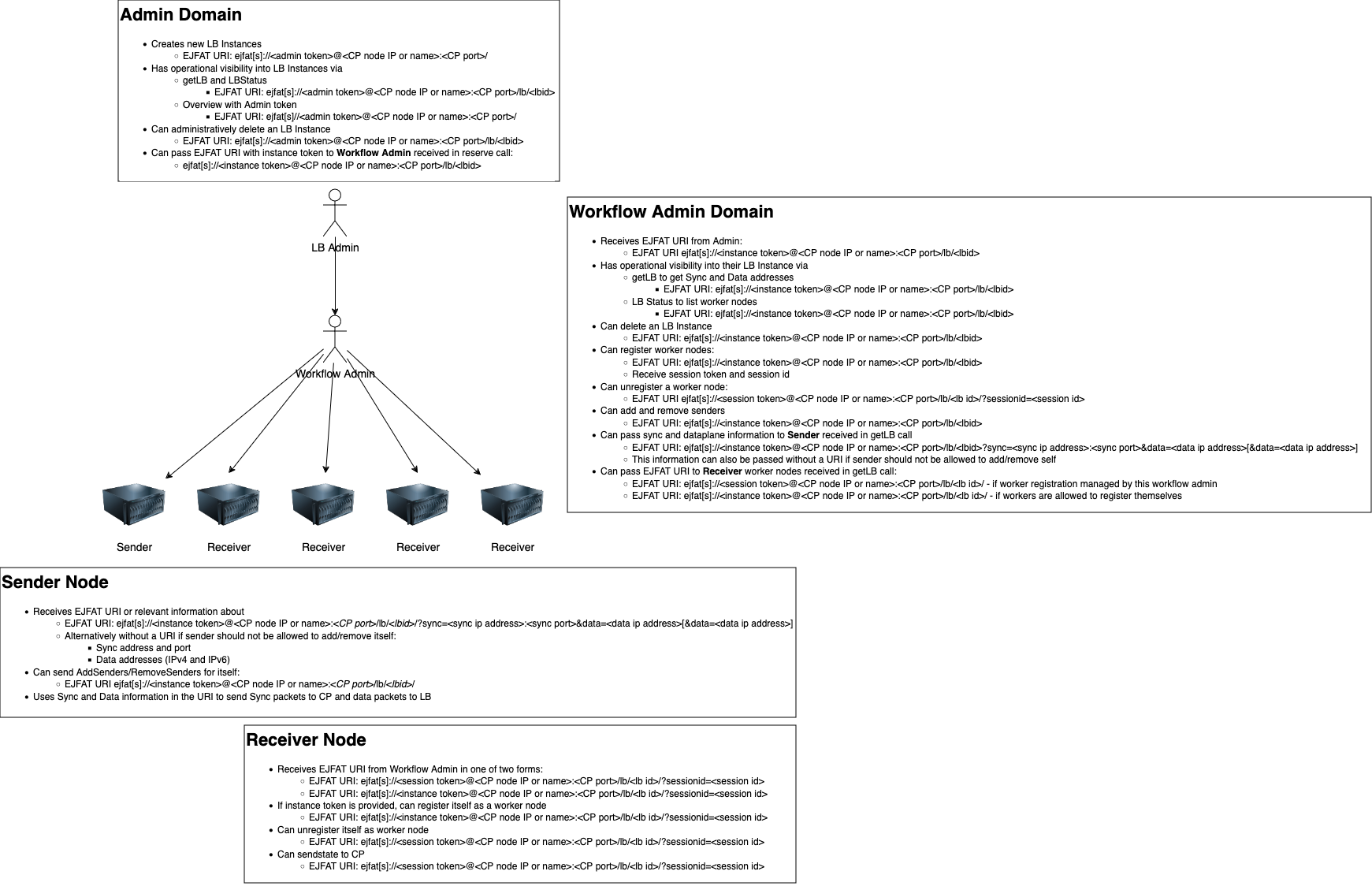

EJFAT supports multiple deployment options, in which different administrative entities may be responsible for managing a load balancer farm, the virtual load balancer instances instantiated on that farm, and even individual worker nodes communicating with a specific, previously provisioned LB instance. The figure above attempts to capture these possibilities.

The authority to perform certain operations is rooted in the possession of an admin, instance or session token.

This section is for informational use only and is not authoritative. See the UDPLBd documentation for more.

Each load balancer is issued a pool of N (currently N ≤ 8):

- IPv4 addresses

- IPv6 addresses

- UDP ports for Sync messages

In addition, the control plane uses one or two IP addresses: one for receiving Sync messages (on one of the ports) and one for communicating over gRPC, although in a typical installation those two are the same.

This way, each virtual load balancer (up to 8) reserved and allocated on the hardware gets an IPv4 address, an IPv6 address and a UDP port for Sync messages. The FPGA firmware configures the IPv4 and IPv6 addresses once the virtual instance is created, so that the instantiated load balancer can, for example, respond to ICMP requests on them. Thus, when a new instance is reserved, its EjfatURI, as returned by the gRPC `reserve()` call, reflects that information, as in this typical example:

`ejfats://<auto-generated instance token>@<gRPC IP Address or Name>:<cp_port>/lb/<ID of virtual LB>?data=<IPv4 address>&data=<IPv6 address>&sync=<Sync address>:<Sync message port>`
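The per-instance assignment described above can be sketched as a small slot allocator. This is a hypothetical model, not UDPLBd code: it assumes that the i-th IPv4 address, i-th IPv6 address and i-th Sync port of the pools together form one slot, that the virtual LB ID and instance token are supplied by the caller, and the tests use documentation-range addresses as placeholders.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

MAX_INSTANCES = 8  # "currently N <= 8"


@dataclass
class AddressPool:
    ipv4: List[str]
    ipv6: List[str]
    sync_ports: List[int]
    sync_addr: str      # CP address that receives Sync packets
    cp_host: str        # CP gRPC host
    cp_port: int        # CP gRPC port
    in_use: List[bool] = field(init=False)

    def __post_init__(self) -> None:
        n = len(self.ipv4)
        if n > MAX_INSTANCES or len(self.ipv6) != n or len(self.sync_ports) != n:
            raise ValueError("need equal-length pools of at most 8 entries")
        self.in_use = [False] * n

    def reserve(self, lb_id: str, instance_token: str) -> Tuple[int, str]:
        """Claim the first free slot and return (slot, instance URI)."""
        for slot, busy in enumerate(self.in_use):
            if not busy:
                self.in_use[slot] = True
                uri = (f"ejfats://{instance_token}@{self.cp_host}:{self.cp_port}"
                       f"/lb/{lb_id}?data={self.ipv4[slot]}&data={self.ipv6[slot]}"
                       f"&sync={self.sync_addr}:{self.sync_ports[slot]}")
                return slot, uri
        raise RuntimeError("all virtual load balancer slots are in use")

    def release(self, slot: int) -> None:
        # Return the slot (IPv4, IPv6, Sync port) to the pool.
        self.in_use[slot] = False
```

Because a slot bundles one IPv4 address, one IPv6 address and one Sync port, the returned URI has the same shape as the typical example above.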

The EJFAT control plane has a few timing rules and implementation best practices:

- Sync messages should be sent by the sender about once per second (more often is allowed). The control plane uses a ring buffer to calculate the rate slope. E2SAR also includes a low-pass filter for this purpose (you can set the sync period and the number of sync periods over which the reported rate is computed).
- After a worker is registered, `sendState` must be sent within 10 seconds. In general, a period of 100 ms is recommended for `sendState`.
- When reserving a load balancer with `reserveLB`, if the passed-in duration is 0 (i.e. the UNIX epoch), the reservation never expires.
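The rate averaging mentioned in the first bullet can be illustrated with a moving average over the last K sync periods, which is one simple kind of low-pass filter. The sketch below shows the general technique only and is not E2SAR's actual filter; `SyncRateEstimator` and its parameters are hypothetical.

```python
from collections import deque
from typing import Deque, Tuple


class SyncRateEstimator:
    """Moving-average event rate over the last `periods` sync intervals (hypothetical)."""

    def __init__(self, periods: int):
        if periods < 1:
            raise ValueError("periods must be >= 1")
        # K periods are bounded by K + 1 samples of (time_s, cumulative_events).
        self._samples: Deque[Tuple[float, int]] = deque(maxlen=periods + 1)

    def record(self, time_s: float, events_so_far: int) -> float:
        """Add a sample (e.g. one per Sync) and return the windowed rate in events/s."""
        self._samples.append((time_s, events_so_far))
        if len(self._samples) < 2:
            return 0.0
        (t0, n0), (t1, n1) = self._samples[0], self._samples[-1]
        return (n1 - n0) / (t1 - t0) if t1 > t0 else 0.0


if __name__ == "__main__":
    est = SyncRateEstimator(periods=2)
    for t, n in [(0.0, 0), (1.0, 100), (2.0, 300), (3.0, 400)]:
        print(est.record(t, n))  # prints 0.0, 100.0, 150.0, 150.0 (one per line)
```

A larger `periods` value smooths bursts more but reacts more slowly to genuine rate changes; with a 1 s sync period, `periods=2` averages over roughly the last two seconds.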

Additional details are contained in this document