diff --git a/.github/workflows/ccpp.yml b/.github/workflows/ccpp.yml

index 077b3cf99f8..c8d65b796b4 100644

--- a/.github/workflows/ccpp.yml

+++ b/.github/workflows/ccpp.yml

@@ -1,6 +1,10 @@

name: Darknet Continuous Integration

-on: [push, pull_request, workflow_dispatch]

+on:

+ push:

+ workflow_dispatch:

+ schedule:

+ - cron: '0 0 * * *'

env:

VCPKG_BINARY_SOURCES: 'clear;nuget,vcpkgbinarycache,readwrite'

@@ -17,24 +21,13 @@ jobs:

run: sudo apt install libopencv-dev

- name: 'Install CUDA'

+ run: ./scripts/deploy-cuda.sh

+

+ - name: 'Create softlinks for CUDA'

run: |

- sudo apt update

- sudo apt-get dist-upgrade -y

- sudo wget -O /etc/apt/preferences.d/cuda-repository-pin-600 https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2004/x86_64/cuda-ubuntu2004.pin

- sudo apt-key adv --fetch-keys https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2004/x86_64/7fa2af80.pub

- sudo add-apt-repository "deb http://developer.download.nvidia.com/compute/cuda/repos/ubuntu2004/x86_64/ /"

- sudo add-apt-repository "deb http://developer.download.nvidia.com/compute/machine-learning/repos/ubuntu2004/x86_64/ /"

- sudo apt-get install -y --no-install-recommends cuda-compiler-11-2 cuda-libraries-dev-11-2 cuda-driver-dev-11-2 cuda-cudart-dev-11-2

- sudo apt-get install -y --no-install-recommends libcudnn8-dev

- sudo rm -rf /usr/local/cuda

sudo ln -s /usr/local/cuda-11.2/lib64/stubs/libcuda.so /usr/local/cuda-11.2/lib64/stubs/libcuda.so.1

sudo ln -s /usr/local/cuda-11.2/lib64/stubs/libcuda.so /usr/local/cuda-11.2/lib64/libcuda.so.1

sudo ln -s /usr/local/cuda-11.2/lib64/stubs/libcuda.so /usr/local/cuda-11.2/lib64/libcuda.so

- sudo ln -s /usr/local/cuda-11.2 /usr/local/cuda

- export PATH=/usr/local/cuda/bin:$PATH

- export LD_LIBRARY_PATH=/usr/local/cuda/lib64:/usr/local/cuda/lib64/stubs:$LD_LIBRARY_PATH

- nvcc --version

- gcc --version

- name: 'LIBSO=1 GPU=0 CUDNN=0 OPENCV=0'

run: |

@@ -72,50 +65,38 @@ jobs:

make clean

- ubuntu-vcpkg-cuda:

+ ubuntu-vcpkg-opencv4-cuda:

runs-on: ubuntu-20.04

steps:

- uses: actions/checkout@v2

+ - uses: lukka/get-cmake@latest

+

- name: Update apt

run: sudo apt update

- name: Install dependencies

run: sudo apt install yasm nasm

- - uses: lukka/get-cmake@latest

-

- name: 'Install CUDA'

+ run: ./scripts/deploy-cuda.sh

+

+ - name: 'Create softlinks for CUDA'

run: |

- sudo apt update

- sudo apt-get dist-upgrade -y

- sudo wget -O /etc/apt/preferences.d/cuda-repository-pin-600 https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2004/x86_64/cuda-ubuntu2004.pin

- sudo apt-key adv --fetch-keys https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2004/x86_64/7fa2af80.pub

- sudo add-apt-repository "deb http://developer.download.nvidia.com/compute/cuda/repos/ubuntu2004/x86_64/ /"

- sudo add-apt-repository "deb http://developer.download.nvidia.com/compute/machine-learning/repos/ubuntu2004/x86_64/ /"

- sudo apt-get install -y --no-install-recommends cuda-compiler-11-2 cuda-libraries-dev-11-2 cuda-driver-dev-11-2 cuda-cudart-dev-11-2

- sudo apt-get install -y --no-install-recommends libcudnn8-dev

- sudo rm -rf /usr/local/cuda

sudo ln -s /usr/local/cuda-11.2/lib64/stubs/libcuda.so /usr/local/cuda-11.2/lib64/stubs/libcuda.so.1

sudo ln -s /usr/local/cuda-11.2/lib64/stubs/libcuda.so /usr/local/cuda-11.2/lib64/libcuda.so.1

sudo ln -s /usr/local/cuda-11.2/lib64/stubs/libcuda.so /usr/local/cuda-11.2/lib64/libcuda.so

- sudo ln -s /usr/local/cuda-11.2 /usr/local/cuda

- export PATH=/usr/local/cuda/bin:$PATH

- export LD_LIBRARY_PATH=/usr/local/cuda/lib64:/usr/local/cuda/lib64/stubs:$LD_LIBRARY_PATH

- nvcc --version

- gcc --version

- name: 'Setup vcpkg and NuGet artifacts backend'

shell: bash

run: >

- git clone https://github.com/microsoft/vcpkg;

- ./vcpkg/bootstrap-vcpkg.sh;

+ git clone https://github.com/microsoft/vcpkg ;

+ ./vcpkg/bootstrap-vcpkg.sh ;

+ mono $(./vcpkg/vcpkg fetch nuget | tail -n 1) sources add

+ -Name "vcpkgbinarycache"

+ -Source http://93.49.111.10:5555/v3/index.json ;

mono $(./vcpkg/vcpkg fetch nuget | tail -n 1)

- sources add

- -source "https://nuget.pkg.github.com/cenit/index.json"

- -storepasswordincleartext

- -name "vcpkgbinarycache"

- -username "cenit"

- -password "${{ secrets.GITHUB_TOKEN }}"

+ setapikey ${{ secrets.BAGET_API_KEY }}

+ -Source http://93.49.111.10:5555/v3/index.json

- name: 'Build'

shell: pwsh

@@ -124,7 +105,7 @@ jobs:

CUDA_PATH: "/usr/local/cuda"

CUDA_TOOLKIT_ROOT_DIR: "/usr/local/cuda"

LD_LIBRARY_PATH: "/usr/local/cuda/lib64:/usr/local/cuda/lib64/stubs:$LD_LIBRARY_PATH"

- run: ./build.ps1 -UseVCPKG -EnableOPENCV -EnableCUDA -ForceStaticLib

+ run: ./build.ps1 -UseVCPKG -DoNotUpdateVCPKG -EnableOPENCV -EnableCUDA -EnableCUDNN -DisableInteractive -DoNotUpdateDARKNET

- uses: actions/upload-artifact@v2

with:

@@ -144,6 +125,92 @@ jobs:

path: ${{ github.workspace }}/uselib*

+ ubuntu-vcpkg-opencv3-cuda:

+ runs-on: ubuntu-20.04

+ steps:

+ - uses: actions/checkout@v2

+

+ - uses: lukka/get-cmake@latest

+

+ - name: Update apt

+ run: sudo apt update

+ - name: Install dependencies

+ run: sudo apt install yasm nasm

+

+ - name: 'Install CUDA'

+ run: ./scripts/deploy-cuda.sh

+

+ - name: 'Create softlinks for CUDA'

+ run: |

+ sudo ln -s /usr/local/cuda-11.2/lib64/stubs/libcuda.so /usr/local/cuda-11.2/lib64/stubs/libcuda.so.1

+ sudo ln -s /usr/local/cuda-11.2/lib64/stubs/libcuda.so /usr/local/cuda-11.2/lib64/libcuda.so.1

+ sudo ln -s /usr/local/cuda-11.2/lib64/stubs/libcuda.so /usr/local/cuda-11.2/lib64/libcuda.so

+

+ - name: 'Setup vcpkg and NuGet artifacts backend'

+ shell: bash

+ run: >

+ git clone https://github.com/microsoft/vcpkg ;

+ ./vcpkg/bootstrap-vcpkg.sh ;

+ mono $(./vcpkg/vcpkg fetch nuget | tail -n 1) sources add

+ -Name "vcpkgbinarycache"

+ -Source http://93.49.111.10:5555/v3/index.json ;

+ mono $(./vcpkg/vcpkg fetch nuget | tail -n 1)

+ setapikey ${{ secrets.BAGET_API_KEY }}

+ -Source http://93.49.111.10:5555/v3/index.json

+

+ - name: 'Build'

+ shell: pwsh

+ env:

+ CUDACXX: "/usr/local/cuda/bin/nvcc"

+ CUDA_PATH: "/usr/local/cuda"

+ CUDA_TOOLKIT_ROOT_DIR: "/usr/local/cuda"

+ LD_LIBRARY_PATH: "/usr/local/cuda/lib64:/usr/local/cuda/lib64/stubs:$LD_LIBRARY_PATH"

+ run: ./build.ps1 -UseVCPKG -DoNotUpdateVCPKG -EnableOPENCV -EnableCUDA -EnableCUDNN -ForceOpenCVVersion 3 -DisableInteractive -DoNotUpdateDARKNET

+

+

+ ubuntu-vcpkg-opencv2-cuda:

+ runs-on: ubuntu-20.04

+ steps:

+ - uses: actions/checkout@v2

+

+ - uses: lukka/get-cmake@latest

+

+ - name: Update apt

+ run: sudo apt update

+ - name: Install dependencies

+ run: sudo apt install yasm nasm

+

+ - name: 'Install CUDA'

+ run: ./scripts/deploy-cuda.sh

+

+ - name: 'Create softlinks for CUDA'

+ run: |

+ sudo ln -s /usr/local/cuda-11.2/lib64/stubs/libcuda.so /usr/local/cuda-11.2/lib64/stubs/libcuda.so.1

+ sudo ln -s /usr/local/cuda-11.2/lib64/stubs/libcuda.so /usr/local/cuda-11.2/lib64/libcuda.so.1

+ sudo ln -s /usr/local/cuda-11.2/lib64/stubs/libcuda.so /usr/local/cuda-11.2/lib64/libcuda.so

+

+ - name: 'Setup vcpkg and NuGet artifacts backend'

+ shell: bash

+ run: >

+ git clone https://github.com/microsoft/vcpkg ;

+ ./vcpkg/bootstrap-vcpkg.sh ;

+ mono $(./vcpkg/vcpkg fetch nuget | tail -n 1) sources add

+ -Name "vcpkgbinarycache"

+ -Source http://93.49.111.10:5555/v3/index.json ;

+ mono $(./vcpkg/vcpkg fetch nuget | tail -n 1)

+ setapikey ${{ secrets.BAGET_API_KEY }}

+ -Source http://93.49.111.10:5555/v3/index.json

+

+ - name: 'Build'

+ shell: pwsh

+ env:

+ CUDACXX: "/usr/local/cuda/bin/nvcc"

+ CUDA_PATH: "/usr/local/cuda"

+ CUDA_TOOLKIT_ROOT_DIR: "/usr/local/cuda"

+ LD_LIBRARY_PATH: "/usr/local/cuda/lib64:/usr/local/cuda/lib64/stubs:$LD_LIBRARY_PATH"

+ run: ./build.ps1 -UseVCPKG -DoNotUpdateVCPKG -EnableOPENCV -EnableCUDA -EnableCUDNN -ForceOpenCVVersion 2 -DisableInteractive -DoNotUpdateDARKNET

+

+

ubuntu:

runs-on: ubuntu-20.04

steps:

@@ -163,7 +230,7 @@ jobs:

CUDA_PATH: "/usr/local/cuda"

CUDA_TOOLKIT_ROOT_DIR: "/usr/local/cuda"

LD_LIBRARY_PATH: "/usr/local/cuda/lib64:/usr/local/cuda/lib64/stubs:$LD_LIBRARY_PATH"

- run: ./build.ps1 -EnableOPENCV

+ run: ./build.ps1 -EnableOPENCV -DisableInteractive -DoNotUpdateDARKNET

- uses: actions/upload-artifact@v2

with:

@@ -196,24 +263,13 @@ jobs:

- uses: lukka/get-cmake@latest

- name: 'Install CUDA'

+ run: ./scripts/deploy-cuda.sh

+

+ - name: 'Create softlinks for CUDA'

run: |

- sudo apt update

- sudo apt-get dist-upgrade -y

- sudo wget -O /etc/apt/preferences.d/cuda-repository-pin-600 https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2004/x86_64/cuda-ubuntu2004.pin

- sudo apt-key adv --fetch-keys https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2004/x86_64/7fa2af80.pub

- sudo add-apt-repository "deb http://developer.download.nvidia.com/compute/cuda/repos/ubuntu2004/x86_64/ /"

- sudo add-apt-repository "deb http://developer.download.nvidia.com/compute/machine-learning/repos/ubuntu2004/x86_64/ /"

- sudo apt-get install -y --no-install-recommends cuda-compiler-11-2 cuda-libraries-dev-11-2 cuda-driver-dev-11-2 cuda-cudart-dev-11-2

- sudo apt-get install -y --no-install-recommends libcudnn8-dev

- sudo rm -rf /usr/local/cuda

sudo ln -s /usr/local/cuda-11.2/lib64/stubs/libcuda.so /usr/local/cuda-11.2/lib64/stubs/libcuda.so.1

sudo ln -s /usr/local/cuda-11.2/lib64/stubs/libcuda.so /usr/local/cuda-11.2/lib64/libcuda.so.1

sudo ln -s /usr/local/cuda-11.2/lib64/stubs/libcuda.so /usr/local/cuda-11.2/lib64/libcuda.so

- sudo ln -s /usr/local/cuda-11.2 /usr/local/cuda

- export PATH=/usr/local/cuda/bin:$PATH

- export LD_LIBRARY_PATH=/usr/local/cuda/lib64:/usr/local/cuda/lib64/stubs:$LD_LIBRARY_PATH

- nvcc --version

- gcc --version

- name: 'Build'

shell: pwsh

@@ -222,7 +278,7 @@ jobs:

CUDA_PATH: "/usr/local/cuda"

CUDA_TOOLKIT_ROOT_DIR: "/usr/local/cuda"

LD_LIBRARY_PATH: "/usr/local/cuda/lib64:/usr/local/cuda/lib64/stubs:$LD_LIBRARY_PATH"

- run: ./build.ps1 -EnableOPENCV -EnableCUDA

+ run: ./build.ps1 -EnableOPENCV -EnableCUDA -EnableCUDNN -DisableInteractive -DoNotUpdateDARKNET

- uses: actions/upload-artifact@v2

with:

@@ -251,7 +307,29 @@ jobs:

- name: 'Build'

shell: pwsh

- run: ./build.ps1 -ForceCPP

+ run: ./build.ps1 -ForceCPP -DisableInteractive -DoNotUpdateDARKNET

+

+

+ ubuntu-setup-sh:

+ runs-on: ubuntu-20.04

+ steps:

+ - uses: actions/checkout@v2

+

+ - name: 'Setup vcpkg and NuGet artifacts backend'

+ shell: bash

+ run: >

+ git clone https://github.com/microsoft/vcpkg ;

+ ./vcpkg/bootstrap-vcpkg.sh ;

+ mono $(./vcpkg/vcpkg fetch nuget | tail -n 1) sources add

+ -Name "vcpkgbinarycache"

+ -Source http://93.49.111.10:5555/v3/index.json ;

+ mono $(./vcpkg/vcpkg fetch nuget | tail -n 1)

+ setapikey ${{ secrets.BAGET_API_KEY }}

+ -Source http://93.49.111.10:5555/v3/index.json

+

+ - name: 'Setup'

+ shell: bash

+ run: ./scripts/setup.sh -InstallCUDA -BypassDRIVER

osx-vcpkg:

@@ -267,19 +345,18 @@ jobs:

- name: 'Setup vcpkg and NuGet artifacts backend'

shell: bash

run: >

- git clone https://github.com/microsoft/vcpkg;

- ./vcpkg/bootstrap-vcpkg.sh;

+ git clone https://github.com/microsoft/vcpkg ;

+ ./vcpkg/bootstrap-vcpkg.sh ;

+ mono $(./vcpkg/vcpkg fetch nuget | tail -n 1) sources add

+ -Name "vcpkgbinarycache"

+ -Source http://93.49.111.10:5555/v3/index.json ;

mono $(./vcpkg/vcpkg fetch nuget | tail -n 1)

- sources add

- -source "https://nuget.pkg.github.com/cenit/index.json"

- -storepasswordincleartext

- -name "vcpkgbinarycache"

- -username "cenit"

- -password "${{ secrets.GITHUB_TOKEN }}"

+ setapikey ${{ secrets.BAGET_API_KEY }}

+ -Source http://93.49.111.10:5555/v3/index.json

- name: 'Build'

shell: pwsh

- run: ./build.ps1 -UseVCPKG

+ run: ./build.ps1 -UseVCPKG -DoNotUpdateVCPKG -DisableInteractive -DoNotUpdateDARKNET

- uses: actions/upload-artifact@v2

with:

@@ -311,7 +388,7 @@ jobs:

- name: 'Build'

shell: pwsh

- run: ./build.ps1 -EnableOPENCV

+ run: ./build.ps1 -EnableOPENCV -DisableInteractive -DoNotUpdateDARKNET

- uses: actions/upload-artifact@v2

with:

@@ -340,7 +417,7 @@ jobs:

- name: 'Build'

shell: pwsh

- run: ./build.ps1 -ForceCPP

+ run: ./build.ps1 -ForceCPP -DisableInteractive -DoNotUpdateDARKNET

win-vcpkg:

@@ -353,19 +430,18 @@ jobs:

- name: 'Setup vcpkg and NuGet artifacts backend'

shell: bash

run: >

- git clone https://github.com/microsoft/vcpkg;

- ./vcpkg/bootstrap-vcpkg.sh;

+ git clone https://github.com/microsoft/vcpkg ;

+ ./vcpkg/bootstrap-vcpkg.sh ;

+ $(./vcpkg/vcpkg fetch nuget | tail -n 1) sources add

+ -Name "vcpkgbinarycache"

+ -Source http://93.49.111.10:5555/v3/index.json ;

$(./vcpkg/vcpkg fetch nuget | tail -n 1)

- sources add

- -source "https://nuget.pkg.github.com/cenit/index.json"

- -storepasswordincleartext

- -name "vcpkgbinarycache"

- -username "cenit"

- -password "${{ secrets.GITHUB_TOKEN }}"

+ setapikey ${{ secrets.BAGET_API_KEY }}

+ -Source http://93.49.111.10:5555/v3/index.json

- name: 'Build'

shell: pwsh

- run: ./build.ps1 -UseVCPKG -EnableOPENCV

+ run: ./build.ps1 -UseVCPKG -DoNotUpdateVCPKG -EnableOPENCV -DisableInteractive -DoNotUpdateDARKNET

- uses: actions/upload-artifact@v2

with:

@@ -382,13 +458,35 @@ jobs:

- uses: actions/upload-artifact@v2

with:

name: darknet-vcpkg-${{ runner.os }}

- path: ${{ runner.workspace }}/buildDirectory/Release/*.dll

+ path: ${{ github.workspace }}/build_release/*.dll

- uses: actions/upload-artifact@v2

with:

name: darknet-vcpkg-${{ runner.os }}

path: ${{ github.workspace }}/uselib*

+ win-vcpkg-port:

+ runs-on: windows-latest

+ steps:

+ - uses: actions/checkout@v2

+

+ - name: 'Setup vcpkg and NuGet artifacts backend'

+ shell: bash

+ run: >

+ git clone https://github.com/microsoft/vcpkg ;

+ ./vcpkg/bootstrap-vcpkg.sh ;

+ $(./vcpkg/vcpkg fetch nuget | tail -n 1) sources add

+ -Name "vcpkgbinarycache"

+ -Source http://93.49.111.10:5555/v3/index.json ;

+ $(./vcpkg/vcpkg fetch nuget | tail -n 1)

+ setapikey ${{ secrets.BAGET_API_KEY }}

+ -Source http://93.49.111.10:5555/v3/index.json

+

+ - name: 'Build'

+ shell: pwsh

+ run: ./build.ps1 -UseVCPKG -InstallDARKNETthroughVCPKG -ForceVCPKGDarknetHEAD -EnableOPENCV -DisableInteractive -DoNotUpdateDARKNET

+

+

win-intlibs:

runs-on: windows-latest

steps:

@@ -398,7 +496,7 @@ jobs:

- name: 'Build'

shell: pwsh

- run: ./build.ps1

+ run: ./build.ps1 -DisableInteractive -DoNotUpdateDARKNET

- uses: actions/upload-artifact@v2

with:

@@ -422,6 +520,28 @@ jobs:

path: ${{ github.workspace }}/uselib*

+ win-setup-ps1:

+ runs-on: windows-latest

+ steps:

+ - uses: actions/checkout@v2

+

+ - name: 'Setup vcpkg and NuGet artifacts backend'

+ shell: bash

+ run: >

+ git clone https://github.com/microsoft/vcpkg ;

+ ./vcpkg/bootstrap-vcpkg.sh ;

+ $(./vcpkg/vcpkg fetch nuget | tail -n 1) sources add

+ -Name "vcpkgbinarycache"

+ -Source http://93.49.111.10:5555/v3/index.json ;

+ $(./vcpkg/vcpkg fetch nuget | tail -n 1)

+ setapikey ${{ secrets.BAGET_API_KEY }}

+ -Source http://93.49.111.10:5555/v3/index.json

+

+ - name: 'Setup'

+ shell: pwsh

+ run: ./scripts/setup.ps1 -InstallCUDA

+

+

win-intlibs-cpp:

runs-on: windows-latest

steps:

@@ -431,7 +551,19 @@ jobs:

- name: 'Build'

shell: pwsh

- run: ./build.ps1 -ForceCPP

+ run: ./build.ps1 -ForceCPP -DisableInteractive -DoNotUpdateDARKNET

+

+

+ win-csharp:

+ runs-on: windows-latest

+ steps:

+ - uses: actions/checkout@v2

+

+ - uses: lukka/get-cmake@latest

+

+ - name: 'Build'

+ shell: pwsh

+ run: ./build.ps1 -EnableCSharpWrapper -DisableInteractive -DoNotUpdateDARKNET

win-intlibs-cuda:

@@ -439,22 +571,17 @@ jobs:

steps:

- uses: actions/checkout@v2

- name: 'Install CUDA'

- run: |

- choco install cuda --version=10.2.89.20191206 -y

- $env:ChocolateyInstall = Convert-Path "$((Get-Command choco).Path)\..\.."

- Import-Module "$env:ChocolateyInstall\helpers\chocolateyProfile.psm1"

- refreshenv

+ run: ./scripts/deploy-cuda.ps1

- uses: lukka/get-cmake@latest

- name: 'Build'

env:

- CUDA_PATH: "C:\\Program Files\\NVIDIA GPU Computing Toolkit\\CUDA\\v10.2"

- CUDA_PATH_V10_2: "C:\\Program Files\\NVIDIA GPU Computing Toolkit\\CUDA\\v10.2"

- CUDA_TOOLKIT_ROOT_DIR: "C:\\Program Files\\NVIDIA GPU Computing Toolkit\\CUDA\\v10.2"

- CUDACXX: "C:\\Program Files\\NVIDIA GPU Computing Toolkit\\CUDA\\v10.2\\bin\\nvcc.exe"

+ CUDA_PATH: "C:\\Program Files\\NVIDIA GPU Computing Toolkit\\CUDA\\v11.3"

+ CUDA_TOOLKIT_ROOT_DIR: "C:\\Program Files\\NVIDIA GPU Computing Toolkit\\CUDA\\v11.3"

+ CUDACXX: "C:\\Program Files\\NVIDIA GPU Computing Toolkit\\CUDA\\v11.3\\bin\\nvcc.exe"

shell: pwsh

- run: ./build.ps1 -EnableCUDA

+ run: ./build.ps1 -EnableCUDA -DisableInteractive -DoNotUpdateDARKNET

mingw:

diff --git a/.github/workflows/on_pr.yml b/.github/workflows/on_pr.yml

new file mode 100644

index 00000000000..9f0a664ebcb

--- /dev/null

+++ b/.github/workflows/on_pr.yml

@@ -0,0 +1,429 @@

+name: Darknet Pull Requests

+

+on: [pull_request]

+

+env:

+ VCPKG_BINARY_SOURCES: 'clear;nuget,vcpkgbinarycache,read'

+

+jobs:

+ ubuntu-makefile:

+ runs-on: ubuntu-20.04

+ steps:

+ - uses: actions/checkout@v2

+

+ - name: Update apt

+ run: sudo apt update

+ - name: Install dependencies

+ run: sudo apt install libopencv-dev

+

+ - name: 'Install CUDA'

+ run: ./scripts/deploy-cuda.sh

+

+ - name: 'Create softlinks for CUDA'

+ run: |

+ sudo ln -s /usr/local/cuda-11.2/lib64/stubs/libcuda.so /usr/local/cuda-11.2/lib64/stubs/libcuda.so.1

+ sudo ln -s /usr/local/cuda-11.2/lib64/stubs/libcuda.so /usr/local/cuda-11.2/lib64/libcuda.so.1

+ sudo ln -s /usr/local/cuda-11.2/lib64/stubs/libcuda.so /usr/local/cuda-11.2/lib64/libcuda.so

+

+ - name: 'LIBSO=1 GPU=0 CUDNN=0 OPENCV=0'

+ run: |

+ make LIBSO=1 GPU=0 CUDNN=0 OPENCV=0 -j 8

+ make clean

+ - name: 'LIBSO=1 GPU=0 CUDNN=0 OPENCV=0 DEBUG=1'

+ run: |

+ make LIBSO=1 GPU=0 CUDNN=0 OPENCV=0 DEBUG=1 -j 8

+ make clean

+ - name: 'LIBSO=1 GPU=0 CUDNN=0 OPENCV=0 AVX=1'

+ run: |

+ make LIBSO=1 GPU=0 CUDNN=0 OPENCV=0 AVX=1 -j 8

+ make clean

+ - name: 'LIBSO=1 GPU=0 CUDNN=0 OPENCV=1'

+ run: |

+ make LIBSO=1 GPU=0 CUDNN=0 OPENCV=1 -j 8

+ make clean

+ - name: 'LIBSO=1 GPU=1 CUDNN=1 OPENCV=1'

+ run: |

+ export PATH=/usr/local/cuda/bin:$PATH

+ export LD_LIBRARY_PATH=/usr/local/cuda/lib64:/usr/local/cuda/lib64/stubs:$LD_LIBRARY_PATH

+ make LIBSO=1 GPU=1 CUDNN=1 OPENCV=1 -j 8

+ make clean

+ - name: 'LIBSO=1 GPU=1 CUDNN=1 OPENCV=1 CUDNN_HALF=1'

+ run: |

+ export PATH=/usr/local/cuda/bin:$PATH

+ export LD_LIBRARY_PATH=/usr/local/cuda/lib64:/usr/local/cuda/lib64/stubs:$LD_LIBRARY_PATH

+ make LIBSO=1 GPU=1 CUDNN=1 OPENCV=1 CUDNN_HALF=1 -j 8

+ make clean

+ - name: 'LIBSO=1 GPU=1 CUDNN=1 OPENCV=1 CUDNN_HALF=1 USE_CPP=1'

+ run: |

+ export PATH=/usr/local/cuda/bin:$PATH

+ export LD_LIBRARY_PATH=/usr/local/cuda/lib64:/usr/local/cuda/lib64/stubs:$LD_LIBRARY_PATH

+ make LIBSO=1 GPU=1 CUDNN=1 OPENCV=1 CUDNN_HALF=1 USE_CPP=1 -j 8

+ make clean

+

+

+ ubuntu-vcpkg-opencv4-cuda:

+ runs-on: ubuntu-20.04

+ steps:

+ - uses: actions/checkout@v2

+

+ - uses: lukka/get-cmake@latest

+

+ - name: Update apt

+ run: sudo apt update

+ - name: Install dependencies

+ run: sudo apt install yasm nasm

+

+ - name: 'Install CUDA'

+ run: ./scripts/deploy-cuda.sh

+

+ - name: 'Create softlinks for CUDA'

+ run: |

+ sudo ln -s /usr/local/cuda-11.2/lib64/stubs/libcuda.so /usr/local/cuda-11.2/lib64/stubs/libcuda.so.1

+ sudo ln -s /usr/local/cuda-11.2/lib64/stubs/libcuda.so /usr/local/cuda-11.2/lib64/libcuda.so.1

+ sudo ln -s /usr/local/cuda-11.2/lib64/stubs/libcuda.so /usr/local/cuda-11.2/lib64/libcuda.so

+

+ - name: 'Setup vcpkg and NuGet artifacts backend'

+ shell: bash

+ run: >

+ git clone https://github.com/microsoft/vcpkg ;

+ ./vcpkg/bootstrap-vcpkg.sh ;

+ mono $(./vcpkg/vcpkg fetch nuget | tail -n 1) sources add

+ -Name "vcpkgbinarycache"

+ -Source http://93.49.111.10:5555/v3/index.json

+

+ - name: 'Build'

+ shell: pwsh

+ env:

+ CUDACXX: "/usr/local/cuda/bin/nvcc"

+ CUDA_PATH: "/usr/local/cuda"

+ CUDA_TOOLKIT_ROOT_DIR: "/usr/local/cuda"

+ LD_LIBRARY_PATH: "/usr/local/cuda/lib64:/usr/local/cuda/lib64/stubs:$LD_LIBRARY_PATH"

+ run: ./build.ps1 -UseVCPKG -DoNotUpdateVCPKG -EnableOPENCV -EnableCUDA -EnableCUDNN -DisableInteractive -DoNotUpdateDARKNET

+

+

+ ubuntu-vcpkg-opencv3-cuda:

+ runs-on: ubuntu-20.04

+ steps:

+ - uses: actions/checkout@v2

+

+ - uses: lukka/get-cmake@latest

+

+ - name: Update apt

+ run: sudo apt update

+ - name: Install dependencies

+ run: sudo apt install yasm nasm

+

+ - name: 'Install CUDA'

+ run: ./scripts/deploy-cuda.sh

+

+ - name: 'Create softlinks for CUDA'

+ run: |

+ sudo ln -s /usr/local/cuda-11.2/lib64/stubs/libcuda.so /usr/local/cuda-11.2/lib64/stubs/libcuda.so.1

+ sudo ln -s /usr/local/cuda-11.2/lib64/stubs/libcuda.so /usr/local/cuda-11.2/lib64/libcuda.so.1

+ sudo ln -s /usr/local/cuda-11.2/lib64/stubs/libcuda.so /usr/local/cuda-11.2/lib64/libcuda.so

+

+ - name: 'Setup vcpkg and NuGet artifacts backend'

+ shell: bash

+ run: >

+ git clone https://github.com/microsoft/vcpkg ;

+ ./vcpkg/bootstrap-vcpkg.sh ;

+ mono $(./vcpkg/vcpkg fetch nuget | tail -n 1) sources add

+ -Name "vcpkgbinarycache"

+ -Source http://93.49.111.10:5555/v3/index.json

+

+ - name: 'Build'

+ shell: pwsh

+ env:

+ CUDACXX: "/usr/local/cuda/bin/nvcc"

+ CUDA_PATH: "/usr/local/cuda"

+ CUDA_TOOLKIT_ROOT_DIR: "/usr/local/cuda"

+ LD_LIBRARY_PATH: "/usr/local/cuda/lib64:/usr/local/cuda/lib64/stubs:$LD_LIBRARY_PATH"

+ run: ./build.ps1 -UseVCPKG -DoNotUpdateVCPKG -EnableOPENCV -EnableCUDA -EnableCUDNN -ForceOpenCVVersion 3 -DisableInteractive -DoNotUpdateDARKNET

+

+

+ ubuntu-vcpkg-opencv2-cuda:

+ runs-on: ubuntu-20.04

+ steps:

+ - uses: actions/checkout@v2

+

+ - uses: lukka/get-cmake@latest

+

+ - name: Update apt

+ run: sudo apt update

+ - name: Install dependencies

+ run: sudo apt install yasm nasm

+

+ - name: 'Install CUDA'

+ run: ./scripts/deploy-cuda.sh

+

+ - name: 'Create softlinks for CUDA'

+ run: |

+ sudo ln -s /usr/local/cuda-11.2/lib64/stubs/libcuda.so /usr/local/cuda-11.2/lib64/stubs/libcuda.so.1

+ sudo ln -s /usr/local/cuda-11.2/lib64/stubs/libcuda.so /usr/local/cuda-11.2/lib64/libcuda.so.1

+ sudo ln -s /usr/local/cuda-11.2/lib64/stubs/libcuda.so /usr/local/cuda-11.2/lib64/libcuda.so

+

+ - name: 'Setup vcpkg and NuGet artifacts backend'

+ shell: bash

+ run: >

+ git clone https://github.com/microsoft/vcpkg ;

+ ./vcpkg/bootstrap-vcpkg.sh ;

+ mono $(./vcpkg/vcpkg fetch nuget | tail -n 1) sources add

+ -Name "vcpkgbinarycache"

+ -Source http://93.49.111.10:5555/v3/index.json

+

+ - name: 'Build'

+ shell: pwsh

+ env:

+ CUDACXX: "/usr/local/cuda/bin/nvcc"

+ CUDA_PATH: "/usr/local/cuda"

+ CUDA_TOOLKIT_ROOT_DIR: "/usr/local/cuda"

+ LD_LIBRARY_PATH: "/usr/local/cuda/lib64:/usr/local/cuda/lib64/stubs:$LD_LIBRARY_PATH"

+ run: ./build.ps1 -UseVCPKG -DoNotUpdateVCPKG -EnableOPENCV -EnableCUDA -EnableCUDNN -ForceOpenCVVersion 2 -DisableInteractive -DoNotUpdateDARKNET

+

+

+ ubuntu:

+ runs-on: ubuntu-20.04

+ steps:

+ - uses: actions/checkout@v2

+

+ - name: Update apt

+ run: sudo apt update

+ - name: Install dependencies

+ run: sudo apt install libopencv-dev

+

+ - uses: lukka/get-cmake@latest

+

+ - name: 'Build'

+ shell: pwsh

+ env:

+ CUDACXX: "/usr/local/cuda/bin/nvcc"

+ CUDA_PATH: "/usr/local/cuda"

+ CUDA_TOOLKIT_ROOT_DIR: "/usr/local/cuda"

+ LD_LIBRARY_PATH: "/usr/local/cuda/lib64:/usr/local/cuda/lib64/stubs:$LD_LIBRARY_PATH"

+ run: ./build.ps1 -EnableOPENCV -DisableInteractive -DoNotUpdateDARKNET

+

+

+ ubuntu-cuda:

+ runs-on: ubuntu-20.04

+ steps:

+ - uses: actions/checkout@v2

+

+ - name: Update apt

+ run: sudo apt update

+ - name: Install dependencies

+ run: sudo apt install libopencv-dev

+

+ - uses: lukka/get-cmake@latest

+

+ - name: 'Install CUDA'

+ run: ./scripts/deploy-cuda.sh

+

+ - name: 'Create softlinks for CUDA'

+ run: |

+ sudo ln -s /usr/local/cuda-11.2/lib64/stubs/libcuda.so /usr/local/cuda-11.2/lib64/stubs/libcuda.so.1

+ sudo ln -s /usr/local/cuda-11.2/lib64/stubs/libcuda.so /usr/local/cuda-11.2/lib64/libcuda.so.1

+ sudo ln -s /usr/local/cuda-11.2/lib64/stubs/libcuda.so /usr/local/cuda-11.2/lib64/libcuda.so

+

+ - name: 'Build'

+ shell: pwsh

+ env:

+ CUDACXX: "/usr/local/cuda/bin/nvcc"

+ CUDA_PATH: "/usr/local/cuda"

+ CUDA_TOOLKIT_ROOT_DIR: "/usr/local/cuda"

+ LD_LIBRARY_PATH: "/usr/local/cuda/lib64:/usr/local/cuda/lib64/stubs:$LD_LIBRARY_PATH"

+ run: ./build.ps1 -EnableOPENCV -EnableCUDA -EnableCUDNN -DisableInteractive -DoNotUpdateDARKNET

+

+

+ ubuntu-no-ocv-cpp:

+ runs-on: ubuntu-20.04

+ steps:

+ - uses: actions/checkout@v2

+

+ - uses: lukka/get-cmake@latest

+

+ - name: 'Build'

+ shell: pwsh

+ run: ./build.ps1 -ForceCPP -DisableInteractive -DoNotUpdateDARKNET

+

+

+ ubuntu-setup-sh:

+ runs-on: ubuntu-20.04

+ steps:

+ - uses: actions/checkout@v2

+

+ - name: 'Setup vcpkg and NuGet artifacts backend'

+ shell: bash

+ run: >

+ git clone https://github.com/microsoft/vcpkg ;

+ ./vcpkg/bootstrap-vcpkg.sh ;

+ mono $(./vcpkg/vcpkg fetch nuget | tail -n 1) sources add

+ -Name "vcpkgbinarycache"

+ -Source http://93.49.111.10:5555/v3/index.json

+

+ - name: 'Setup'

+ shell: bash

+ run: ./scripts/setup.sh -InstallCUDA -BypassDRIVER

+

+

+ osx-vcpkg:

+ runs-on: macos-latest

+ steps:

+ - uses: actions/checkout@v2

+

+ - name: Install dependencies

+ run: brew install libomp yasm nasm

+

+ - uses: lukka/get-cmake@latest

+

+ - name: 'Setup vcpkg and NuGet artifacts backend'

+ shell: bash

+ run: >

+ git clone https://github.com/microsoft/vcpkg ;

+ ./vcpkg/bootstrap-vcpkg.sh ;

+ mono $(./vcpkg/vcpkg fetch nuget | tail -n 1) sources add

+ -Name "vcpkgbinarycache"

+ -Source http://93.49.111.10:5555/v3/index.json

+

+ - name: 'Build'

+ shell: pwsh

+ run: ./build.ps1 -UseVCPKG -DoNotUpdateVCPKG -DisableInteractive -DoNotUpdateDARKNET

+

+

+ osx:

+ runs-on: macos-latest

+ steps:

+ - uses: actions/checkout@v2

+

+ - name: Install dependencies

+ run: brew install opencv libomp

+

+ - uses: lukka/get-cmake@latest

+

+ - name: 'Build'

+ shell: pwsh

+ run: ./build.ps1 -EnableOPENCV -DisableInteractive -DoNotUpdateDARKNET

+

+

+ osx-no-ocv-no-omp-cpp:

+ runs-on: macos-latest

+ steps:

+ - uses: actions/checkout@v2

+

+ - uses: lukka/get-cmake@latest

+

+ - name: 'Build'

+ shell: pwsh

+ run: ./build.ps1 -ForceCPP -DisableInteractive -DoNotUpdateDARKNET

+

+

+ win-vcpkg:

+ runs-on: windows-latest

+ steps:

+ - uses: actions/checkout@v2

+

+ - uses: lukka/get-cmake@latest

+

+ - name: 'Setup vcpkg and NuGet artifacts backend'

+ shell: bash

+ run: >

+ git clone https://github.com/microsoft/vcpkg ;

+ ./vcpkg/bootstrap-vcpkg.sh ;

+ $(./vcpkg/vcpkg fetch nuget | tail -n 1) sources add

+ -Name "vcpkgbinarycache"

+ -Source http://93.49.111.10:5555/v3/index.json

+

+ - name: 'Build'

+ shell: pwsh

+ run: ./build.ps1 -UseVCPKG -DoNotUpdateVCPKG -EnableOPENCV -DisableInteractive -DoNotUpdateDARKNET

+

+

+ win-intlibs:

+ runs-on: windows-latest

+ steps:

+ - uses: actions/checkout@v2

+

+ - uses: lukka/get-cmake@latest

+

+ - name: 'Build'

+ shell: pwsh

+ run: ./build.ps1 -DisableInteractive -DoNotUpdateDARKNET

+

+

+ win-setup-ps1:

+ runs-on: windows-latest

+ steps:

+ - uses: actions/checkout@v2

+

+ - name: 'Setup vcpkg and NuGet artifacts backend'

+ shell: bash

+ run: >

+ git clone https://github.com/microsoft/vcpkg ;

+ ./vcpkg/bootstrap-vcpkg.sh ;

+ $(./vcpkg/vcpkg fetch nuget | tail -n 1) sources add

+ -Name "vcpkgbinarycache"

+ -Source http://93.49.111.10:5555/v3/index.json

+

+ - name: 'Setup'

+ shell: pwsh

+ run: ./scripts/setup.ps1 -InstallCUDA

+

+

+ win-intlibs-cpp:

+ runs-on: windows-latest

+ steps:

+ - uses: actions/checkout@v2

+

+ - uses: lukka/get-cmake@latest

+

+ - name: 'Build'

+ shell: pwsh

+ run: ./build.ps1 -ForceCPP -DisableInteractive -DoNotUpdateDARKNET

+

+

+ win-csharp:

+ runs-on: windows-latest

+ steps:

+ - uses: actions/checkout@v2

+

+ - uses: lukka/get-cmake@latest

+

+ - name: 'Build'

+ shell: pwsh

+ run: ./build.ps1 -EnableCSharpWrapper -DisableInteractive -DoNotUpdateDARKNET

+

+

+ win-intlibs-cuda:

+ runs-on: windows-latest

+ steps:

+ - uses: actions/checkout@v2

+ - name: 'Install CUDA'

+ run: ./scripts/deploy-cuda.ps1

+

+ - uses: lukka/get-cmake@latest

+

+ - name: 'Build'

+ env:

+ CUDA_PATH: "C:\\Program Files\\NVIDIA GPU Computing Toolkit\\CUDA\\v11.3"

+ CUDA_TOOLKIT_ROOT_DIR: "C:\\Program Files\\NVIDIA GPU Computing Toolkit\\CUDA\\v11.3"

+ CUDACXX: "C:\\Program Files\\NVIDIA GPU Computing Toolkit\\CUDA\\v11.3\\bin\\nvcc.exe"

+ shell: pwsh

+ run: ./build.ps1 -EnableCUDA -DisableInteractive -DoNotUpdateDARKNET

+

+

+ mingw:

+ runs-on: windows-latest

+ steps:

+ - uses: actions/checkout@v2

+

+ - uses: lukka/get-cmake@latest

+

+ - name: 'Build with CMake'

+ uses: lukka/run-cmake@v3

+ with:

+ cmakeListsOrSettingsJson: CMakeListsTxtAdvanced

+ cmakeListsTxtPath: '${{ github.workspace }}/CMakeLists.txt'

+ useVcpkgToolchainFile: true

+ buildDirectory: '${{ runner.workspace }}/buildDirectory'

+ cmakeAppendedArgs: "-G\"MinGW Makefiles\" -DCMAKE_BUILD_TYPE=Release -DENABLE_CUDA=OFF -DENABLE_CUDNN=OFF -DENABLE_OPENCV=OFF"

+ cmakeBuildType: 'Release'

+ buildWithCMakeArgs: '--config Release --target install'

diff --git a/.github/workflows/rebase.yml b/.github/workflows/rebase.yml

new file mode 100644

index 00000000000..251a259ffcf

--- /dev/null

+++ b/.github/workflows/rebase.yml

@@ -0,0 +1,19 @@

+name: Automatic Rebase

+on:

+ issue_comment:

+ types: [created]

+jobs:

+ rebase:

+ name: Rebase

+ if: github.event.issue.pull_request != '' && contains(github.event.comment.body, '/rebase') && (github.event.comment.author_association == 'MEMBER' || github.event.comment.author_association == 'OWNER' || github.event.comment.author_association == 'CONTRIBUTOR')

+ runs-on: ubuntu-latest

+ steps:

+ - name: Checkout the latest code

+ uses: actions/checkout@v2

+ with:

+ token: ${{ secrets.GITHUB_TOKEN }}

+ fetch-depth: 0 # otherwise, you will fail to push refs to dest repo

+ - name: Automatic Rebase

+ uses: cirrus-actions/rebase@1.5

+ env:

+ GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

diff --git a/.gitignore b/.gitignore

index 174f0b5a378..a1d890429b3 100644

--- a/.gitignore

+++ b/.gitignore

@@ -9,6 +9,7 @@

*.dll

*.lib

*.dylib

+*.pyc

mnist/

data/

caffe/

@@ -22,6 +23,8 @@ cfg/

temp/

build/darknet/*

build_*/

+ninja/

+ninja.zip

vcpkg_installed/

!build/darknet/YoloWrapper.cs

.fuse*

@@ -36,6 +39,8 @@ build/.ninja_deps

build/.ninja_log

build/Makefile

*/vcpkg-manifest-install.log

+build.log

+__pycache__/

# OS Generated #

.DS_Store*

diff --git a/.travis.yml b/.travis.yml

index 447a72a179d..f208498dbcd 100644

--- a/.travis.yml

+++ b/.travis.yml

@@ -16,32 +16,6 @@ matrix:

- additional_defines=" -DENABLE_CUDA=OFF -DENABLE_CUDNN=OFF -DENABLE_OPENCV=OFF"

- MATRIX_EVAL=""

- - os: osx

- compiler: gcc

- name: macOS - gcc (llvm backend) - opencv@2

- osx_image: xcode12.3

- env:

- - OpenCV_DIR="/usr/local/opt/opencv@2/"

- - additional_defines="-DOpenCV_DIR=${OpenCV_DIR} -DENABLE_CUDA=OFF"

- - MATRIX_EVAL="brew install opencv@2"

-

- - os: osx

- compiler: gcc

- name: macOS - gcc (llvm backend) - opencv@3

- osx_image: xcode12.3

- env:

- - OpenCV_DIR="/usr/local/opt/opencv@3/"

- - additional_defines="-DOpenCV_DIR=${OpenCV_DIR} -DENABLE_CUDA=OFF"

- - MATRIX_EVAL="brew install opencv@3"

-

- - os: osx

- compiler: gcc

- name: macOS - gcc (llvm backend) - opencv(latest)

- osx_image: xcode12.3

- env:

- - additional_defines=" -DENABLE_CUDA=OFF"

- - MATRIX_EVAL="brew install opencv"

-

- os: osx

compiler: clang

name: macOS - clang

@@ -58,40 +32,6 @@ matrix:

- additional_defines="-DBUILD_AS_CPP:BOOL=TRUE -DENABLE_CUDA=OFF -DENABLE_CUDNN=OFF -DENABLE_OPENCV=OFF"

- MATRIX_EVAL=""

- - os: osx

- compiler: clang

- name: macOS - clang - opencv@2

- osx_image: xcode12.3

- env:

- - OpenCV_DIR="/usr/local/opt/opencv@2/"

- - additional_defines="-DOpenCV_DIR=${OpenCV_DIR} -DENABLE_CUDA=OFF"

- - MATRIX_EVAL="brew install opencv@2"

-

- - os: osx

- compiler: clang

- name: macOS - clang - opencv@3

- osx_image: xcode12.3

- env:

- - OpenCV_DIR="/usr/local/opt/opencv@3/"

- - additional_defines="-DOpenCV_DIR=${OpenCV_DIR} -DENABLE_CUDA=OFF"

- - MATRIX_EVAL="brew install opencv@3"

-

- - os: osx

- compiler: clang

- name: macOS - clang - opencv(latest)

- osx_image: xcode12.3

- env:

- - additional_defines=" -DENABLE_CUDA=OFF"

- - MATRIX_EVAL="brew install opencv"

-

- - os: osx

- compiler: clang

- name: macOS - clang - opencv(latest) - libomp

- osx_image: xcode12.3

- env:

- - additional_defines=" -DENABLE_CUDA=OFF"

- - MATRIX_EVAL="brew install opencv libomp"

-

- os: linux

compiler: clang

dist: bionic

diff --git a/CMakeLists.txt b/CMakeLists.txt

index 00f446fcccf..0e1abf32d9c 100644

--- a/CMakeLists.txt

+++ b/CMakeLists.txt

@@ -7,6 +7,8 @@ set(Darknet_PATCH_VERSION 5)

set(Darknet_TWEAK_VERSION 4)

set(Darknet_VERSION ${Darknet_MAJOR_VERSION}.${Darknet_MINOR_VERSION}.${Darknet_PATCH_VERSION}.${Darknet_TWEAK_VERSION})

+message("Darknet_VERSION: ${Darknet_VERSION}")

+

option(CMAKE_VERBOSE_MAKEFILE "Create verbose makefile" ON)

option(CUDA_VERBOSE_BUILD "Create verbose CUDA build" ON)

option(BUILD_SHARED_LIBS "Create dark as a shared library" ON)

@@ -19,30 +21,49 @@ option(ENABLE_CUDNN "Enable CUDNN" ON)

option(ENABLE_CUDNN_HALF "Enable CUDNN Half precision" ON)

option(ENABLE_ZED_CAMERA "Enable ZED Camera support" ON)

option(ENABLE_VCPKG_INTEGRATION "Enable VCPKG integration" ON)

+option(ENABLE_CSHARP_WRAPPER "Enable building a csharp wrapper" OFF)

+option(VCPKG_BUILD_OPENCV_WITH_CUDA "Build OpenCV with CUDA extension integration" ON)

+option(VCPKG_USE_OPENCV2 "Use legacy OpenCV 2" OFF)

+option(VCPKG_USE_OPENCV3 "Use legacy OpenCV 3" OFF)

+option(VCPKG_USE_OPENCV4 "Use OpenCV 4" ON)

-if(ENABLE_OPENCV_WITH_CUDA AND NOT APPLE)

- list(APPEND VCPKG_MANIFEST_FEATURES "opencv-cuda")

+if(VCPKG_USE_OPENCV4 AND VCPKG_USE_OPENCV2)

+ message(STATUS "You required vcpkg feature related to OpenCV 2 but forgot to turn off those for OpenCV 4, doing that for you")

+ set(VCPKG_USE_OPENCV4 OFF CACHE BOOL "Use OpenCV 4" FORCE)

+endif()

+if(VCPKG_USE_OPENCV4 AND VCPKG_USE_OPENCV3)

+ message(STATUS "You required vcpkg feature related to OpenCV 3 but forgot to turn off those for OpenCV 4, doing that for you")

+ set(VCPKG_USE_OPENCV4 OFF CACHE BOOL "Use OpenCV 4" FORCE)

+endif()

+if(VCPKG_USE_OPENCV2 AND VCPKG_USE_OPENCV3)

+ message(STATUS "You required vcpkg features related to both OpenCV 2 and OpenCV 3. Impossible to satisfy, keeping only OpenCV 3")

+ set(VCPKG_USE_OPENCV2 OFF CACHE BOOL "Use legacy OpenCV 2" FORCE)

endif()

+

if(ENABLE_CUDA AND NOT APPLE)

list(APPEND VCPKG_MANIFEST_FEATURES "cuda")

endif()

-if(ENABLE_OPENCV)

- list(APPEND VCPKG_MANIFEST_FEATURES "opencv-base")

-endif()

if(ENABLE_CUDNN AND ENABLE_CUDA AND NOT APPLE)

list(APPEND VCPKG_MANIFEST_FEATURES "cudnn")

endif()

-

-if(CMAKE_COMPILER_IS_GNUCC OR "${CMAKE_CXX_COMPILER_ID}" MATCHES "Clang")

- set(CMAKE_COMPILER_IS_GNUCC_OR_CLANG TRUE)

- if("${CMAKE_CXX_COMPILER_ID}" MATCHES "Clang")

- set(CMAKE_COMPILER_IS_CLANG TRUE)

+if(ENABLE_OPENCV)

+ if(VCPKG_BUILD_OPENCV_WITH_CUDA AND NOT APPLE)

+ if(VCPKG_USE_OPENCV4)

+ list(APPEND VCPKG_MANIFEST_FEATURES "opencv-cuda")

+ elseif(VCPKG_USE_OPENCV3)

+ list(APPEND VCPKG_MANIFEST_FEATURES "opencv3-cuda")

+ elseif(VCPKG_USE_OPENCV2)

+ list(APPEND VCPKG_MANIFEST_FEATURES "opencv2-cuda")

+ endif()

else()

- set(CMAKE_COMPILER_IS_CLANG FALSE)

+ if(VCPKG_USE_OPENCV4)

+ list(APPEND VCPKG_MANIFEST_FEATURES "opencv-base")

+ elseif(VCPKG_USE_OPENCV3)

+ list(APPEND VCPKG_MANIFEST_FEATURES "opencv3-base")

+ elseif(VCPKG_USE_OPENCV2)

+ list(APPEND VCPKG_MANIFEST_FEATURES "opencv2-base")

+ endif()

endif()

-else()

- set(CMAKE_COMPILER_IS_GNUCC_OR_CLANG FALSE)

- set(CMAKE_COMPILER_IS_CLANG FALSE)

endif()

if(NOT CMAKE_HOST_SYSTEM_PROCESSOR AND NOT WIN32)

@@ -87,6 +108,18 @@ enable_language(CXX)

set(CMAKE_CXX_STANDARD 11)

set(CMAKE_MODULE_PATH "${CMAKE_CURRENT_LIST_DIR}/cmake/Modules/" ${CMAKE_MODULE_PATH})

+if(CMAKE_COMPILER_IS_GNUCC OR "${CMAKE_C_COMPILER_ID}" MATCHES "Clang" OR "${CMAKE_CXX_COMPILER_ID}" MATCHES "Clang")

+ set(CMAKE_COMPILER_IS_GNUCC_OR_CLANG TRUE)

+ if("${CMAKE_CXX_COMPILER_ID}" MATCHES "Clang" OR "${CMAKE_CXX_COMPILER_ID}" MATCHES "clang")

+ set(CMAKE_COMPILER_IS_CLANG TRUE)

+ else()

+ set(CMAKE_COMPILER_IS_CLANG FALSE)

+ endif()

+else()

+ set(CMAKE_COMPILER_IS_GNUCC_OR_CLANG FALSE)

+ set(CMAKE_COMPILER_IS_CLANG FALSE)

+endif()

+

set(default_build_type "Release")

if(NOT CMAKE_BUILD_TYPE AND NOT CMAKE_CONFIGURATION_TYPES)

message(STATUS "Setting build type to '${default_build_type}' as none was specified.")

@@ -201,12 +234,14 @@ endif()

set(ADDITIONAL_CXX_FLAGS "-Wall -Wno-unused-result -Wno-unknown-pragmas -Wfatal-errors -Wno-deprecated-declarations -Wno-write-strings")

set(ADDITIONAL_C_FLAGS "-Wall -Wno-unused-result -Wno-unknown-pragmas -Wfatal-errors -Wno-deprecated-declarations -Wno-write-strings")

+if(UNIX AND BUILD_SHARED_LIBS AND NOT CMAKE_COMPILER_IS_CLANG)

+ set(SHAREDLIB_CXX_FLAGS "-Wl,-Bsymbolic")

+ set(SHAREDLIB_C_FLAGS "-Wl,-Bsymbolic")

+endif()

if(MSVC)

set(ADDITIONAL_CXX_FLAGS "/wd4013 /wd4018 /wd4028 /wd4047 /wd4068 /wd4090 /wd4101 /wd4113 /wd4133 /wd4190 /wd4244 /wd4267 /wd4305 /wd4477 /wd4996 /wd4819 /fp:fast")

set(ADDITIONAL_C_FLAGS "/wd4013 /wd4018 /wd4028 /wd4047 /wd4068 /wd4090 /wd4101 /wd4113 /wd4133 /wd4190 /wd4244 /wd4267 /wd4305 /wd4477 /wd4996 /wd4819 /fp:fast")

- set(CMAKE_CXX_FLAGS "${ADDITIONAL_CXX_FLAGS} ${CMAKE_CXX_FLAGS}")

- set(CMAKE_C_FLAGS "${ADDITIONAL_C_FLAGS} ${CMAKE_C_FLAGS}")

string(REGEX REPLACE "/O2" "/Ox" CMAKE_CXX_FLAGS_RELEASE ${CMAKE_CXX_FLAGS_RELEASE})

string(REGEX REPLACE "/O2" "/Ox" CMAKE_C_FLAGS_RELEASE ${CMAKE_C_FLAGS_RELEASE})

endif()

@@ -218,8 +253,6 @@ if(CMAKE_COMPILER_IS_GNUCC_OR_CLANG)

set(CMAKE_C_FLAGS "-pthread ${CMAKE_C_FLAGS}")

endif()

endif()

- set(CMAKE_CXX_FLAGS "${ADDITIONAL_CXX_FLAGS} ${CMAKE_CXX_FLAGS}")

- set(CMAKE_C_FLAGS "${ADDITIONAL_C_FLAGS} ${CMAKE_C_FLAGS}")

string(REGEX REPLACE "-O0" "-Og" CMAKE_CXX_FLAGS_DEBUG ${CMAKE_CXX_FLAGS_DEBUG})

string(REGEX REPLACE "-O3" "-Ofast" CMAKE_CXX_FLAGS_RELEASE ${CMAKE_CXX_FLAGS_RELEASE})

string(REGEX REPLACE "-O0" "-Og" CMAKE_C_FLAGS_DEBUG ${CMAKE_C_FLAGS_DEBUG})

@@ -230,18 +263,23 @@ if(CMAKE_COMPILER_IS_GNUCC_OR_CLANG)

endif()

endif()

+set(CMAKE_CXX_FLAGS "${ADDITIONAL_CXX_FLAGS} ${SHAREDLIB_CXX_FLAGS} ${CMAKE_CXX_FLAGS}")

+set(CMAKE_C_FLAGS "${ADDITIONAL_C_FLAGS} ${SHAREDLIB_C_FLAGS} ${CMAKE_C_FLAGS}")

+

if(OpenCV_FOUND)

- if(ENABLE_CUDA AND NOT OpenCV_CUDA_VERSION)

- set(BUILD_USELIB_TRACK "FALSE" CACHE BOOL "Build uselib_track" FORCE)

- message(STATUS " -> darknet is fine for now, but uselib_track has been disabled!")

- message(STATUS " -> Please rebuild OpenCV from sources with CUDA support to enable it")

- elseif(ENABLE_CUDA AND OpenCV_CUDA_VERSION)

+ if(ENABLE_CUDA AND OpenCV_CUDA_VERSION)

if(TARGET opencv_cudaoptflow)

list(APPEND OpenCV_LINKED_COMPONENTS "opencv_cudaoptflow")

endif()

if(TARGET opencv_cudaimgproc)

list(APPEND OpenCV_LINKED_COMPONENTS "opencv_cudaimgproc")

endif()

+ elseif(ENABLE_CUDA AND NOT OpenCV_CUDA_VERSION)

+ set(BUILD_USELIB_TRACK "FALSE" CACHE BOOL "Build uselib_track" FORCE)

+ message(STATUS " -> darknet is fine for now, but uselib_track has been disabled!")

+ message(STATUS " -> Please rebuild OpenCV from sources with CUDA support to enable it")

+ else()

+ set(BUILD_USELIB_TRACK "FALSE" CACHE BOOL "Build uselib_track" FORCE)

endif()

endif()

@@ -539,3 +577,7 @@ install(FILES

"${PROJECT_BINARY_DIR}/DarknetConfigVersion.cmake"

DESTINATION "${INSTALL_CMAKE_DIR}"

)

+

+if(ENABLE_CSHARP_WRAPPER)

+ add_subdirectory(src/csharp)

+endif()

diff --git a/Makefile b/Makefile

index fc851c50f4a..a0560789e2c 100644

--- a/Makefile

+++ b/Makefile

@@ -151,7 +151,7 @@ LDFLAGS+= -L/usr/local/zed/lib -lsl_zed

endif

endif

-OBJ=image_opencv.o http_stream.o gemm.o utils.o dark_cuda.o convolutional_layer.o list.o image.o activations.o im2col.o col2im.o blas.o crop_layer.o dropout_layer.o maxpool_layer.o softmax_layer.o data.o matrix.o network.o connected_layer.o cost_layer.o parser.o option_list.o darknet.o detection_layer.o captcha.o route_layer.o writing.o box.o nightmare.o normalization_layer.o avgpool_layer.o coco.o dice.o yolo.o detector.o layer.o compare.o classifier.o local_layer.o swag.o shortcut_layer.o activation_layer.o rnn_layer.o gru_layer.o rnn.o rnn_vid.o crnn_layer.o demo.o tag.o cifar.o go.o batchnorm_layer.o art.o region_layer.o reorg_layer.o reorg_old_layer.o super.o voxel.o tree.o yolo_layer.o gaussian_yolo_layer.o upsample_layer.o lstm_layer.o conv_lstm_layer.o scale_channels_layer.o sam_layer.o

+OBJ=image_opencv.o http_stream.o gemm.o utils.o dark_cuda.o convolutional_layer.o list.o image.o activations.o im2col.o col2im.o blas.o crop_layer.o dropout_layer.o maxpool_layer.o softmax_layer.o data.o matrix.o network.o connected_layer.o cost_layer.o parser.o option_list.o darknet.o detection_layer.o captcha.o route_layer.o writing.o box.o nightmare.o normalization_layer.o avgpool_layer.o coco.o dice.o yolo.o detector.o layer.o compare.o classifier.o local_layer.o swag.o shortcut_layer.o representation_layer.o activation_layer.o rnn_layer.o gru_layer.o rnn.o rnn_vid.o crnn_layer.o demo.o tag.o cifar.o go.o batchnorm_layer.o art.o region_layer.o reorg_layer.o reorg_old_layer.o super.o voxel.o tree.o yolo_layer.o gaussian_yolo_layer.o upsample_layer.o lstm_layer.o conv_lstm_layer.o scale_channels_layer.o sam_layer.o

ifeq ($(GPU), 1)

LDFLAGS+= -lstdc++

OBJ+=convolutional_kernels.o activation_kernels.o im2col_kernels.o col2im_kernels.o blas_kernels.o crop_layer_kernels.o dropout_layer_kernels.o maxpool_layer_kernels.o network_kernels.o avgpool_layer_kernels.o

diff --git a/README.md b/README.md

index 2de8c7bf980..bb545cf097a 100644

--- a/README.md

+++ b/README.md

@@ -6,16 +6,18 @@ Paper YOLO v4: https://arxiv.org/abs/2004.10934

Paper Scaled YOLO v4: https://arxiv.org/abs/2011.08036 use to reproduce results: [ScaledYOLOv4](https://github.com/WongKinYiu/ScaledYOLOv4)

-More details in articles on medium:

- * [Scaled_YOLOv4](https://alexeyab84.medium.com/scaled-yolo-v4-is-the-best-neural-network-for-object-detection-on-ms-coco-dataset-39dfa22fa982?source=friends_link&sk=c8553bfed861b1a7932f739d26f487c8)

- * [YOLOv4](https://medium.com/@alexeyab84/yolov4-the-most-accurate-real-time-neural-network-on-ms-coco-dataset-73adfd3602fe?source=friends_link&sk=6039748846bbcf1d960c3061542591d7)

+More details in articles on medium:

+

+- [Scaled_YOLOv4](https://alexeyab84.medium.com/scaled-yolo-v4-is-the-best-neural-network-for-object-detection-on-ms-coco-dataset-39dfa22fa982?source=friends_link&sk=c8553bfed861b1a7932f739d26f487c8)

+- [YOLOv4](https://medium.com/@alexeyab84/yolov4-the-most-accurate-real-time-neural-network-on-ms-coco-dataset-73adfd3602fe?source=friends_link&sk=6039748846bbcf1d960c3061542591d7)

Manual: https://github.com/AlexeyAB/darknet/wiki

-Discussion:

- - [Reddit](https://www.reddit.com/r/MachineLearning/comments/gydxzd/p_yolov4_the_most_accurate_realtime_neural/)

- - [Google-groups](https://groups.google.com/forum/#!forum/darknet)

- - [Discord](https://discord.gg/zSq8rtW)

+Discussion:

+

+- [Reddit](https://www.reddit.com/r/MachineLearning/comments/gydxzd/p_yolov4_the_most_accurate_realtime_neural/)

+- [Google-groups](https://groups.google.com/forum/#!forum/darknet)

+- [Discord](https://discord.gg/zSq8rtW)

About Darknet framework: http://pjreddie.com/darknet/

@@ -26,76 +28,77 @@ About Darknet framework: http://pjreddie.com/darknet/

[](https://github.com/AlexeyAB/darknet/blob/master/LICENSE)

[](https://zenodo.org/badge/latestdoi/75388965)

[](https://arxiv.org/abs/2004.10934)

+[](https://arxiv.org/abs/2011.08036)

[](https://colab.research.google.com/drive/12QusaaRj_lUwCGDvQNfICpa7kA7_a2dE)

[](https://colab.research.google.com/drive/1_GdoqCJWXsChrOiY8sZMr_zbr_fH-0Fg)

-

-* [YOLOv4 model zoo](https://github.com/AlexeyAB/darknet/wiki/YOLOv4-model-zoo)

-* [Requirements (and how to install dependecies)](#requirements)

-* [Pre-trained models](#pre-trained-models)

-* [FAQ - frequently asked questions](https://github.com/AlexeyAB/darknet/wiki/FAQ---frequently-asked-questions)

-* [Explanations in issues](https://github.com/AlexeyAB/darknet/issues?q=is%3Aopen+is%3Aissue+label%3AExplanations)

-* [Yolo v4 in other frameworks (TensorRT, TensorFlow, PyTorch, OpenVINO, OpenCV-dnn, TVM,...)](#yolo-v4-in-other-frameworks)

-* [Datasets](#datasets)

+- [YOLOv4 model zoo](https://github.com/AlexeyAB/darknet/wiki/YOLOv4-model-zoo)

+- [Requirements (and how to install dependencies)](#requirements-for-windows-linux-and-macos)

+- [Pre-trained models](#pre-trained-models)

+- [FAQ - frequently asked questions](https://github.com/AlexeyAB/darknet/wiki/FAQ---frequently-asked-questions)

+- [Explanations in issues](https://github.com/AlexeyAB/darknet/issues?q=is%3Aopen+is%3Aissue+label%3AExplanations)

+- [Yolo v4 in other frameworks (TensorRT, TensorFlow, PyTorch, OpenVINO, OpenCV-dnn, TVM,...)](#yolo-v4-in-other-frameworks)

+- [Datasets](#datasets)

- [Yolo v4, v3 and v2 for Windows and Linux](#yolo-v4-v3-and-v2-for-windows-and-linux)

- [(neural networks for object detection)](#neural-networks-for-object-detection)

- - [GeForce RTX 2080 Ti:](#geforce-rtx-2080-ti)

+ - [GeForce RTX 2080 Ti](#geforce-rtx-2080-ti)

- [Youtube video of results](#youtube-video-of-results)

- [How to evaluate AP of YOLOv4 on the MS COCO evaluation server](#how-to-evaluate-ap-of-yolov4-on-the-ms-coco-evaluation-server)

- [How to evaluate FPS of YOLOv4 on GPU](#how-to-evaluate-fps-of-yolov4-on-gpu)

- [Pre-trained models](#pre-trained-models)

- - [Requirements](#requirements)

+ - [Requirements for Windows, Linux and macOS](#requirements-for-windows-linux-and-macos)

- [Yolo v4 in other frameworks](#yolo-v4-in-other-frameworks)

- [Datasets](#datasets)

- [Improvements in this repository](#improvements-in-this-repository)

- [How to use on the command line](#how-to-use-on-the-command-line)

- [For using network video-camera mjpeg-stream with any Android smartphone](#for-using-network-video-camera-mjpeg-stream-with-any-android-smartphone)

- [How to compile on Linux/macOS (using `CMake`)](#how-to-compile-on-linuxmacos-using-cmake)

- - [Using `vcpkg`](#using-vcpkg)

- - [Using libraries manually provided](#using-libraries-manually-provided)

+ - [Using also PowerShell](#using-also-powershell)

- [How to compile on Linux (using `make`)](#how-to-compile-on-linux-using-make)

- [How to compile on Windows (using `CMake`)](#how-to-compile-on-windows-using-cmake)

- [How to compile on Windows (using `vcpkg`)](#how-to-compile-on-windows-using-vcpkg)

- [How to train with multi-GPU](#how-to-train-with-multi-gpu)

- [How to train (to detect your custom objects)](#how-to-train-to-detect-your-custom-objects)

- - [How to train tiny-yolo (to detect your custom objects):](#how-to-train-tiny-yolo-to-detect-your-custom-objects)

- - [When should I stop training:](#when-should-i-stop-training)

- - [Custom object detection:](#custom-object-detection)

- - [How to improve object detection:](#how-to-improve-object-detection)

- - [How to mark bounded boxes of objects and create annotation files:](#how-to-mark-bounded-boxes-of-objects-and-create-annotation-files)

+ - [How to train tiny-yolo (to detect your custom objects)](#how-to-train-tiny-yolo-to-detect-your-custom-objects)

+ - [When should I stop training](#when-should-i-stop-training)

+ - [Custom object detection](#custom-object-detection)

+ - [How to improve object detection](#how-to-improve-object-detection)

+ - [How to mark bounded boxes of objects and create annotation files](#how-to-mark-bounded-boxes-of-objects-and-create-annotation-files)

- [How to use Yolo as DLL and SO libraries](#how-to-use-yolo-as-dll-and-so-libraries)

+- [Citation](#citation)

-

+

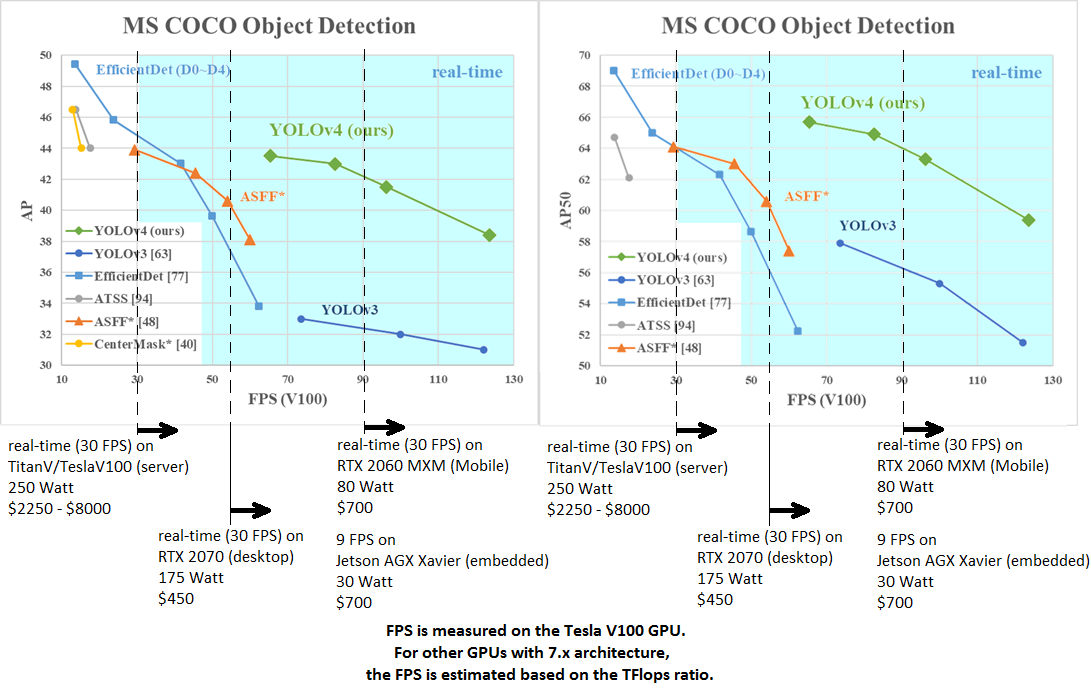

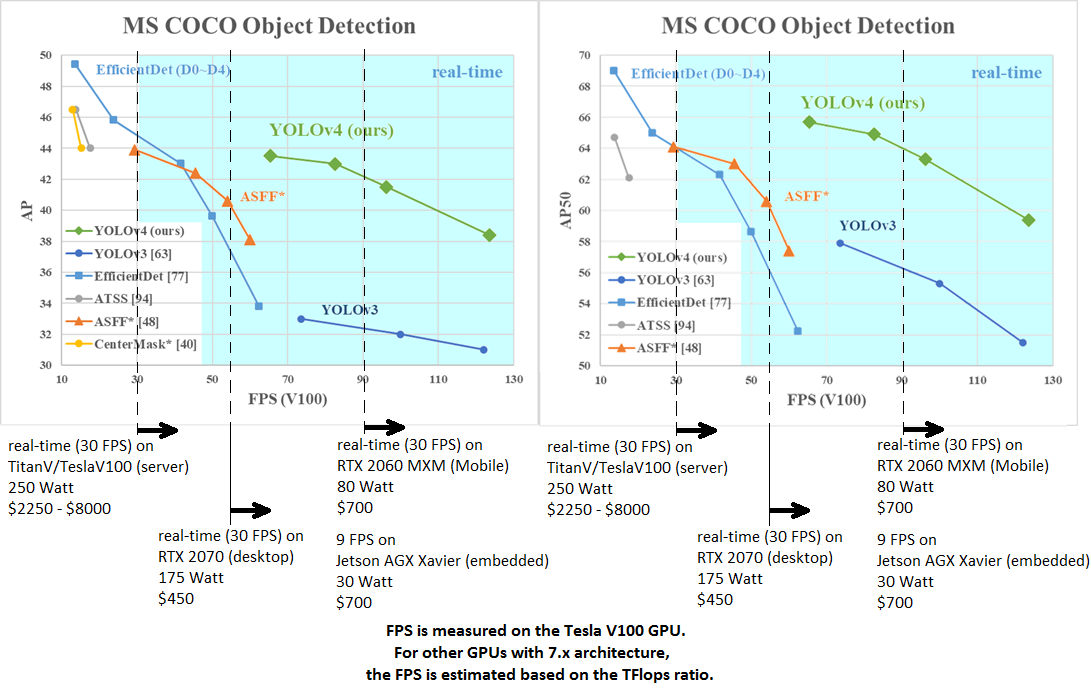

AP50:95 - FPS (Tesla V100) Paper: https://arxiv.org/abs/2011.08036

----

- AP50:95 / AP50 - FPS (Tesla V100) Paper: https://arxiv.org/abs/2004.10934

-

+ AP50:95 / AP50 - FPS (Tesla V100) Paper: https://arxiv.org/abs/2004.10934

tkDNN-TensorRT accelerates YOLOv4 **~2x** times for batch=1 and **3x-4x** times for batch=4.

-* tkDNN: https://github.com/ceccocats/tkDNN

-* OpenCV: https://gist.github.com/YashasSamaga/48bdb167303e10f4d07b754888ddbdcf

-

-#### GeForce RTX 2080 Ti:

-| Network Size | Darknet, FPS (avg)| tkDNN TensorRT FP32, FPS | tkDNN TensorRT FP16, FPS | OpenCV FP16, FPS | tkDNN TensorRT FP16 batch=4, FPS | OpenCV FP16 batch=4, FPS | tkDNN Speedup |

-|:-----:|:--------:|--------:|--------:|--------:|--------:|--------:|------:|

-|320 | 100 | 116 | **202** | 183 | 423 | **430** | **4.3x** |

-|416 | 82 | 103 | **162** | 159 | 284 | **294** | **3.6x** |

-|512 | 69 | 91 | 134 | **138** | 206 | **216** | **3.1x** |

-|608 | 53 | 62 | 103 | **115**| 150 | **150** | **2.8x** |

-|Tiny 416 | 443 | 609 | **790** | 773 | **1774** | 1353 | **3.5x** |

-|Tiny 416 CPU Core i7 7700HQ | 3.4 | - | - | 42 | - | 39 | **12x** |

-

-* Yolo v4 Full comparison: [map_fps](https://user-images.githubusercontent.com/4096485/80283279-0e303e00-871f-11ea-814c-870967d77fd1.png)

-* Yolo v4 tiny comparison: [tiny_fps](https://user-images.githubusercontent.com/4096485/85734112-6e366700-b705-11ea-95d1-fcba0de76d72.png)

-* CSPNet: [paper](https://arxiv.org/abs/1911.11929) and [map_fps](https://user-images.githubusercontent.com/4096485/71702416-6645dc00-2de0-11ea-8d65-de7d4b604021.png) comparison: https://github.com/WongKinYiu/CrossStagePartialNetworks

-* Yolo v3 on MS COCO: [Speed / Accuracy (mAP@0.5) chart](https://user-images.githubusercontent.com/4096485/52151356-e5d4a380-2683-11e9-9d7d-ac7bc192c477.jpg)

-* Yolo v3 on MS COCO (Yolo v3 vs RetinaNet) - Figure 3: https://arxiv.org/pdf/1804.02767v1.pdf

-* Yolo v2 on Pascal VOC 2007: https://hsto.org/files/a24/21e/068/a2421e0689fb43f08584de9d44c2215f.jpg

-* Yolo v2 on Pascal VOC 2012 (comp4): https://hsto.org/files/3a6/fdf/b53/3a6fdfb533f34cee9b52bdd9bb0b19d9.jpg

+

+- tkDNN: https://github.com/ceccocats/tkDNN

+- OpenCV: https://gist.github.com/YashasSamaga/48bdb167303e10f4d07b754888ddbdcf

+

+### GeForce RTX 2080 Ti

+

+| Network Size | Darknet, FPS (avg) | tkDNN TensorRT FP32, FPS | tkDNN TensorRT FP16, FPS | OpenCV FP16, FPS | tkDNN TensorRT FP16 batch=4, FPS | OpenCV FP16 batch=4, FPS | tkDNN Speedup |

+|:--------------------------:|:------------------:|-------------------------:|-------------------------:|-----------------:|---------------------------------:|-------------------------:|--------------:|

+|320 | 100 | 116 | **202** | 183 | 423 | **430** | **4.3x** |

+|416 | 82 | 103 | **162** | 159 | 284 | **294** | **3.6x** |

+|512 | 69 | 91 | 134 | **138** | 206 | **216** | **3.1x** |

+|608 | 53 | 62 | 103 | **115** | 150 | **150** | **2.8x** |

+|Tiny 416 | 443 | 609 | **790** | 773 | **1774** | 1353 | **3.5x** |

+|Tiny 416 CPU Core i7 7700HQ | 3.4 | - | - | 42 | - | 39 | **12x** |

+

+- Yolo v4 Full comparison: [map_fps](https://user-images.githubusercontent.com/4096485/80283279-0e303e00-871f-11ea-814c-870967d77fd1.png)

+- Yolo v4 tiny comparison: [tiny_fps](https://user-images.githubusercontent.com/4096485/85734112-6e366700-b705-11ea-95d1-fcba0de76d72.png)

+- CSPNet: [paper](https://arxiv.org/abs/1911.11929) and [map_fps](https://user-images.githubusercontent.com/4096485/71702416-6645dc00-2de0-11ea-8d65-de7d4b604021.png) comparison: https://github.com/WongKinYiu/CrossStagePartialNetworks

+- Yolo v3 on MS COCO: [Speed / Accuracy (mAP@0.5) chart](https://user-images.githubusercontent.com/4096485/52151356-e5d4a380-2683-11e9-9d7d-ac7bc192c477.jpg)

+- Yolo v3 on MS COCO (Yolo v3 vs RetinaNet) - Figure 3: https://arxiv.org/pdf/1804.02767v1.pdf

+- Yolo v2 on Pascal VOC 2007: https://hsto.org/files/a24/21e/068/a2421e0689fb43f08584de9d44c2215f.jpg

+- Yolo v2 on Pascal VOC 2012 (comp4): https://hsto.org/files/3a6/fdf/b53/3a6fdfb533f34cee9b52bdd9bb0b19d9.jpg

#### Youtube video of results

@@ -107,7 +110,7 @@ Others: https://www.youtube.com/user/pjreddie/videos

#### How to evaluate AP of YOLOv4 on the MS COCO evaluation server

1. Download and unzip test-dev2017 dataset from MS COCO server: http://images.cocodataset.org/zips/test2017.zip

-2. Download list of images for Detection taks and replace the paths with yours: https://raw.githubusercontent.com/AlexeyAB/darknet/master/scripts/testdev2017.txt

+2. Download list of images for Detection tasks and replace the paths with yours: https://raw.githubusercontent.com/AlexeyAB/darknet/master/scripts/testdev2017.txt

3. Download `yolov4.weights` file 245 MB: [yolov4.weights](https://github.com/AlexeyAB/darknet/releases/download/darknet_yolo_v3_optimal/yolov4.weights) (Google-drive mirror [yolov4.weights](https://drive.google.com/open?id=1cewMfusmPjYWbrnuJRuKhPMwRe_b9PaT) )

4. Content of the file `cfg/coco.data` should be

@@ -132,9 +135,9 @@ eval=coco

3. Get any .avi/.mp4 video file (preferably not more than 1920x1080 to avoid bottlenecks in CPU performance)

4. Run one of two commands and look at the AVG FPS:

-* include video_capturing + NMS + drawing_bboxes:

+- include video_capturing + NMS + drawing_bboxes:

`./darknet detector demo cfg/coco.data cfg/yolov4.cfg yolov4.weights test.mp4 -dont_show -ext_output`

-* exclude video_capturing + NMS + drawing_bboxes:

+- exclude video_capturing + NMS + drawing_bboxes:

`./darknet detector demo cfg/coco.data cfg/yolov4.cfg yolov4.weights test.mp4 -benchmark`

#### Pre-trained models

@@ -143,52 +146,58 @@ There are weights-file for different cfg-files (trained for MS COCO dataset):

FPS on RTX 2070 (R) and Tesla V100 (V):

-* [yolov4x-mish.cfg](https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/yolov4x-mish.cfg) - 640x640 - **67.9% mAP@0.5 (49.4% AP@0.5:0.95) - 23(R) FPS / 50(V) FPS** - 221 BFlops (110 FMA) - 381 MB: [yolov4x-mish.weights](https://github.com/AlexeyAB/darknet/releases/download/darknet_yolo_v4_pre/yolov4x-mish.weights)

- * pre-trained weights for training: https://github.com/AlexeyAB/darknet/releases/download/darknet_yolo_v4_pre/yolov4x-mish.conv.166

+- [yolov4-p6.cfg](https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/yolov4-p6.cfg) - 1280x1280 - **72.1% mAP@0.5 (54.0% AP@0.5:0.95) - 32(V) FPS** - xxx BFlops (xxx FMA) - 487 MB: [yolov4-p6.weights](https://github.com/AlexeyAB/darknet/releases/download/darknet_yolo_v4_pre/yolov4-p6.weights)

+ - pre-trained weights for training: https://github.com/AlexeyAB/darknet/releases/download/darknet_yolo_v4_pre/yolov4-p6.conv.289

+

+- [yolov4-p5.cfg](https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/yolov4-p5.cfg) - 896x896 - **70.0% mAP@0.5 (51.6% AP@0.5:0.95) - 43(V) FPS** - xxx BFlops (xxx FMA) - 271 MB: [yolov4-p5.weights](https://github.com/AlexeyAB/darknet/releases/download/darknet_yolo_v4_pre/yolov4-p5.weights)

+ - pre-trained weights for training: https://github.com/AlexeyAB/darknet/releases/download/darknet_yolo_v4_pre/yolov4-p5.conv.232

-* [yolov4-csp.cfg](https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/yolov4-csp.cfg) - 202 MB: [yolov4-csp.weights](https://github.com/AlexeyAB/darknet/releases/download/darknet_yolo_v4_pre/yolov4-csp.weights) paper [Scaled Yolo v4](https://arxiv.org/abs/2011.08036)

+- [yolov4x-mish.cfg](https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/yolov4x-mish.cfg) - 640x640 - **68.5% mAP@0.5 (50.1% AP@0.5:0.95) - 23(R) FPS / 50(V) FPS** - 221 BFlops (110 FMA) - 381 MB: [yolov4x-mish.weights](https://github.com/AlexeyAB/darknet/releases/download/darknet_yolo_v4_pre/yolov4x-mish.weights)

+ - pre-trained weights for training: https://github.com/AlexeyAB/darknet/releases/download/darknet_yolo_v4_pre/yolov4x-mish.conv.166

+

+- [yolov4-csp.cfg](https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/yolov4-csp.cfg) - 202 MB: [yolov4-csp.weights](https://github.com/AlexeyAB/darknet/releases/download/darknet_yolo_v4_pre/yolov4-csp.weights) paper [Scaled Yolo v4](https://arxiv.org/abs/2011.08036)

just change `width=` and `height=` parameters in `yolov4-csp.cfg` file and use the same `yolov4-csp.weights` file for all cases:

- * `width=640 height=640` in cfg: **66.2% mAP@0.5 (47.5% AP@0.5:0.95) - 70(V) FPS** - 120 (60 FMA) BFlops

- * `width=512 height=512` in cfg: **64.8% mAP@0.5 (46.2% AP@0.5:0.95) - 93(V) FPS** - 77 (39 FMA) BFlops

- * pre-trained weights for training: https://github.com/AlexeyAB/darknet/releases/download/darknet_yolo_v4_pre/yolov4-csp.conv.142

-

-* [yolov4.cfg](https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/yolov4.cfg) - 245 MB: [yolov4.weights](https://github.com/AlexeyAB/darknet/releases/download/darknet_yolo_v3_optimal/yolov4.weights) (Google-drive mirror [yolov4.weights](https://drive.google.com/open?id=1cewMfusmPjYWbrnuJRuKhPMwRe_b9PaT) ) paper [Yolo v4](https://arxiv.org/abs/2004.10934)

+ - `width=640 height=640` in cfg: **67.4% mAP@0.5 (48.7% AP@0.5:0.95) - 70(V) FPS** - 120 (60 FMA) BFlops

+ - `width=512 height=512` in cfg: **64.8% mAP@0.5 (46.2% AP@0.5:0.95) - 93(V) FPS** - 77 (39 FMA) BFlops

+ - pre-trained weights for training: https://github.com/AlexeyAB/darknet/releases/download/darknet_yolo_v4_pre/yolov4-csp.conv.142

+

+- [yolov4.cfg](https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/yolov4.cfg) - 245 MB: [yolov4.weights](https://github.com/AlexeyAB/darknet/releases/download/darknet_yolo_v3_optimal/yolov4.weights) (Google-drive mirror [yolov4.weights](https://drive.google.com/open?id=1cewMfusmPjYWbrnuJRuKhPMwRe_b9PaT) ) paper [Yolo v4](https://arxiv.org/abs/2004.10934)

just change `width=` and `height=` parameters in `yolov4.cfg` file and use the same `yolov4.weights` file for all cases:

- * `width=608 height=608` in cfg: **65.7% mAP@0.5 (43.5% AP@0.5:0.95) - 34(R) FPS / 62(V) FPS** - 128.5 BFlops

- * `width=512 height=512` in cfg: **64.9% mAP@0.5 (43.0% AP@0.5:0.95) - 45(R) FPS / 83(V) FPS** - 91.1 BFlops

- * `width=416 height=416` in cfg: **62.8% mAP@0.5 (41.2% AP@0.5:0.95) - 55(R) FPS / 96(V) FPS** - 60.1 BFlops

- * `width=320 height=320` in cfg: **60% mAP@0.5 ( 38% AP@0.5:0.95) - 63(R) FPS / 123(V) FPS** - 35.5 BFlops

+ - `width=608 height=608` in cfg: **65.7% mAP@0.5 (43.5% AP@0.5:0.95) - 34(R) FPS / 62(V) FPS** - 128.5 BFlops

+ - `width=512 height=512` in cfg: **64.9% mAP@0.5 (43.0% AP@0.5:0.95) - 45(R) FPS / 83(V) FPS** - 91.1 BFlops

+ - `width=416 height=416` in cfg: **62.8% mAP@0.5 (41.2% AP@0.5:0.95) - 55(R) FPS / 96(V) FPS** - 60.1 BFlops

+ - `width=320 height=320` in cfg: **60% mAP@0.5 ( 38% AP@0.5:0.95) - 63(R) FPS / 123(V) FPS** - 35.5 BFlops

-* [yolov4-tiny.cfg](https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/yolov4-tiny.cfg) - **40.2% mAP@0.5 - 371(1080Ti) FPS / 330(RTX2070) FPS** - 6.9 BFlops - 23.1 MB: [yolov4-tiny.weights](https://github.com/AlexeyAB/darknet/releases/download/darknet_yolo_v4_pre/yolov4-tiny.weights)

+- [yolov4-tiny.cfg](https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/yolov4-tiny.cfg) - **40.2% mAP@0.5 - 371(1080Ti) FPS / 330(RTX2070) FPS** - 6.9 BFlops - 23.1 MB: [yolov4-tiny.weights](https://github.com/AlexeyAB/darknet/releases/download/darknet_yolo_v4_pre/yolov4-tiny.weights)

-* [enet-coco.cfg (EfficientNetB0-Yolov3)](https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/enet-coco.cfg) - **45.5% mAP@0.5 - 55(R) FPS** - 3.7 BFlops - 18.3 MB: [enetb0-coco_final.weights](https://drive.google.com/file/d/1FlHeQjWEQVJt0ay1PVsiuuMzmtNyv36m/view)

+- [enet-coco.cfg (EfficientNetB0-Yolov3)](https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/enet-coco.cfg) - **45.5% mAP@0.5 - 55(R) FPS** - 3.7 BFlops - 18.3 MB: [enetb0-coco_final.weights](https://drive.google.com/file/d/1FlHeQjWEQVJt0ay1PVsiuuMzmtNyv36m/view)

-* [yolov3-openimages.cfg](https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/yolov3-openimages.cfg) - 247 MB - 18(R) FPS - OpenImages dataset: [yolov3-openimages.weights](https://pjreddie.com/media/files/yolov3-openimages.weights)

+- [yolov3-openimages.cfg](https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/yolov3-openimages.cfg) - 247 MB - 18(R) FPS - OpenImages dataset: [yolov3-openimages.weights](https://pjreddie.com/media/files/yolov3-openimages.weights)

CLICK ME - Yolo v3 models

-* [csresnext50-panet-spp-original-optimal.cfg](https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/csresnext50-panet-spp-original-optimal.cfg) - **65.4% mAP@0.5 (43.2% AP@0.5:0.95) - 32(R) FPS** - 100.5 BFlops - 217 MB: [csresnext50-panet-spp-original-optimal_final.weights](https://drive.google.com/open?id=1_NnfVgj0EDtb_WLNoXV8Mo7WKgwdYZCc)

+- [csresnext50-panet-spp-original-optimal.cfg](https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/csresnext50-panet-spp-original-optimal.cfg) - **65.4% mAP@0.5 (43.2% AP@0.5:0.95) - 32(R) FPS** - 100.5 BFlops - 217 MB: [csresnext50-panet-spp-original-optimal_final.weights](https://drive.google.com/open?id=1_NnfVgj0EDtb_WLNoXV8Mo7WKgwdYZCc)

-* [yolov3-spp.cfg](https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/yolov3-spp.cfg) - **60.6% mAP@0.5 - 38(R) FPS** - 141.5 BFlops - 240 MB: [yolov3-spp.weights](https://pjreddie.com/media/files/yolov3-spp.weights)

+- [yolov3-spp.cfg](https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/yolov3-spp.cfg) - **60.6% mAP@0.5 - 38(R) FPS** - 141.5 BFlops - 240 MB: [yolov3-spp.weights](https://pjreddie.com/media/files/yolov3-spp.weights)

-* [csresnext50-panet-spp.cfg](https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/csresnext50-panet-spp.cfg) - **60.0% mAP@0.5 - 44 FPS** - 71.3 BFlops - 217 MB: [csresnext50-panet-spp_final.weights](https://drive.google.com/file/d/1aNXdM8qVy11nqTcd2oaVB3mf7ckr258-/view?usp=sharing)

+- [csresnext50-panet-spp.cfg](https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/csresnext50-panet-spp.cfg) - **60.0% mAP@0.5 - 44 FPS** - 71.3 BFlops - 217 MB: [csresnext50-panet-spp_final.weights](https://drive.google.com/file/d/1aNXdM8qVy11nqTcd2oaVB3mf7ckr258-/view?usp=sharing)

-* [yolov3.cfg](https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/yolov3.cfg) - **55.3% mAP@0.5 - 66(R) FPS** - 65.9 BFlops - 236 MB: [yolov3.weights](https://pjreddie.com/media/files/yolov3.weights)

+- [yolov3.cfg](https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/yolov3.cfg) - **55.3% mAP@0.5 - 66(R) FPS** - 65.9 BFlops - 236 MB: [yolov3.weights](https://pjreddie.com/media/files/yolov3.weights)

-* [yolov3-tiny.cfg](https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/yolov3-tiny.cfg) - **33.1% mAP@0.5 - 345(R) FPS** - 5.6 BFlops - 33.7 MB: [yolov3-tiny.weights](https://pjreddie.com/media/files/yolov3-tiny.weights)

+- [yolov3-tiny.cfg](https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/yolov3-tiny.cfg) - **33.1% mAP@0.5 - 345(R) FPS** - 5.6 BFlops - 33.7 MB: [yolov3-tiny.weights](https://pjreddie.com/media/files/yolov3-tiny.weights)

-* [yolov3-tiny-prn.cfg](https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/yolov3-tiny-prn.cfg) - **33.1% mAP@0.5 - 370(R) FPS** - 3.5 BFlops - 18.8 MB: [yolov3-tiny-prn.weights](https://drive.google.com/file/d/18yYZWyKbo4XSDVyztmsEcF9B_6bxrhUY/view?usp=sharing)

+- [yolov3-tiny-prn.cfg](https://raw.githubusercontent.com/AlexeyAB/darknet/master/cfg/yolov3-tiny-prn.cfg) - **33.1% mAP@0.5 - 370(R) FPS** - 3.5 BFlops - 18.8 MB: [yolov3-tiny-prn.weights](https://drive.google.com/file/d/18yYZWyKbo4XSDVyztmsEcF9B_6bxrhUY/view?usp=sharing)

CLICK ME - Yolo v2 models

-* `yolov2.cfg` (194 MB COCO Yolo v2) - requires 4 GB GPU-RAM: https://pjreddie.com/media/files/yolov2.weights

-* `yolo-voc.cfg` (194 MB VOC Yolo v2) - requires 4 GB GPU-RAM: http://pjreddie.com/media/files/yolo-voc.weights

-* `yolov2-tiny.cfg` (43 MB COCO Yolo v2) - requires 1 GB GPU-RAM: https://pjreddie.com/media/files/yolov2-tiny.weights

-* `yolov2-tiny-voc.cfg` (60 MB VOC Yolo v2) - requires 1 GB GPU-RAM: http://pjreddie.com/media/files/yolov2-tiny-voc.weights

-* `yolo9000.cfg` (186 MB Yolo9000-model) - requires 4 GB GPU-RAM: http://pjreddie.com/media/files/yolo9000.weights

+- `yolov2.cfg` (194 MB COCO Yolo v2) - requires 4 GB GPU-RAM: https://pjreddie.com/media/files/yolov2.weights

+- `yolo-voc.cfg` (194 MB VOC Yolo v2) - requires 4 GB GPU-RAM: http://pjreddie.com/media/files/yolo-voc.weights

+- `yolov2-tiny.cfg` (43 MB COCO Yolo v2) - requires 1 GB GPU-RAM: https://pjreddie.com/media/files/yolov2-tiny.weights

+- `yolov2-tiny-voc.cfg` (60 MB VOC Yolo v2) - requires 1 GB GPU-RAM: http://pjreddie.com/media/files/yolov2-tiny-voc.weights

+- `yolo9000.cfg` (186 MB Yolo9000-model) - requires 4 GB GPU-RAM: http://pjreddie.com/media/files/yolo9000.weights