
Main ideas for this demonstration and steps to follow #1


One possible idea for a demo is:


After talking with @smcdiaz, we have reached a conclusion about a possible demo.


Waiting for a tray to be purchased before starting work on this demo.


Unblocked thanks to https://github.com/roboticslab-uc3m/teo-hardware-issues/issues/22


Unblocked thanks to https://github.com/roboticslab-uc3m/teo-hardware-issues/issues/14#issuecomment-392781382, but blocked anyway by https://github.com/roboticslab-uc3m/teo-hardware-issues/issues/26


Next to do:


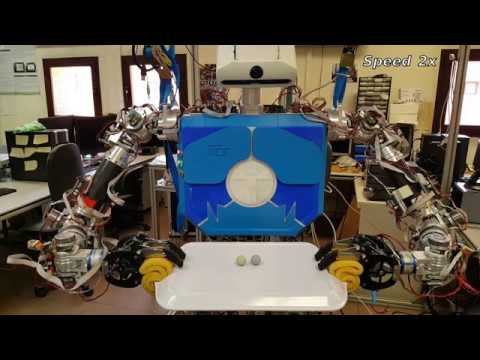

To close the control loop, I have made some progress. I now understand how the JR3 sensor behaves, and I have linked its output to the movement of the arm in Cartesian space using position mode: when we push the hand along any of the three axes, the arm follows that direction in Cartesian coordinates. See the video! 😃
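The force-following behaviour described above can be sketched as a simple mapping from each force reading to a small Cartesian position increment per control cycle. This is a minimal sketch, not the code actually running on TEO: the function names, the gain, the deadband and the step limit are hypothetical, and reading the JR3 and sending the new Cartesian target to the arm are left to the caller.

```python
# Minimal sketch: map a wrist force reading (N) to a Cartesian position
# increment (m) for one control cycle, so the hand follows a push.
# ASSUMPTION: gain, deadband and max_step are hypothetical tuning values;
# sensor I/O and sending the target to the arm are not shown.

from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def force_to_displacement(force: Sequence[float],
                          gain: float = 0.001,     # m per N per cycle (hypothetical)
                          deadband: float = 2.0,   # N, ignore sensor noise (hypothetical)
                          max_step: float = 0.005  # m, cap per cycle (hypothetical)
                          ) -> Vec3:
    """Return the (x, y, z) position increment for one control cycle."""
    step = []
    for f in force[:3]:
        if abs(f) <= deadband:
            # Small readings are treated as noise: do not move along this axis.
            step.append(0.0)
            continue
        # Subtract the deadband so motion starts smoothly from zero,
        # then clamp so a hard push cannot produce a large jump.
        magnitude = min((abs(f) - deadband) * gain, max_step)
        step.append(magnitude if f > 0 else -magnitude)
    return (step[0], step[1], step[2])


def follow_push(current: Vec3, force: Sequence[float]) -> Vec3:
    """Next Cartesian target: current hand position plus the increment."""
    dx, dy, dz = force_to_displacement(force)
    return (current[0] + dx, current[1] + dy, current[2] + dz)
```

For example, `follow_push((0.3, -0.2, 0.1), (12.0, 0.5, -3.0))` moves the target about 5 mm along +x (clamped), leaves y unchanged (inside the deadband) and moves about 1 mm along -z. The deadband keeps sensor noise from making the arm drift, and the step limit bounds how far the arm can move in a single cycle.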


I have made further progress, which had been on hold because of several changes that had to be made to the robot. At the software level, these included reviewing and unifying the joint limits, reversing the direction of rotation of several joints so that all of them turn in the same sense with respect to their coordinate axes, changing and unifying TEO's kinematic model, and testing different position control modes (Make position direct mode usable) in order to achieve the best possible motion in Cartesian coordinates. There have also been several hardware problems that halted the tests, such as the breakage of the right forearm and incorrect values read from some absolute encoders. Some notes:


We are interested in creating a demonstration in which TEO manipulates an object with both arms. It could be something with handles, or something that has to be grasped with both hands at the same time.

The objective is to verify that the robot works correctly when manipulating with both arms.
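For a two-handed grasp of a rigid object such as a tray, one simple approach is to move an imaginary object center and keep each hand at a fixed offset from it, so the distance between the hands never changes while the object moves. This is a minimal sketch under stated assumptions, not a description of how TEO is controlled: both hands and the object are expressed in the same robot base frame, the grasp is rigid, only translation plus rotation about the vertical axis is handled, and every name and number here is hypothetical.

```python
# Minimal sketch: Cartesian targets for both hands holding a rigid object.
# ASSUMPTION: all positions (m) are in one hypothetical robot base frame,
# the grasp is rigid, and only rotation about the vertical (z) axis is used.

import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def hand_targets(center: Vec3, left_offset: Vec3, right_offset: Vec3,
                 delta: Vec3, yaw: float = 0.0) -> Tuple[Vec3, Vec3]:
    """Return (left, right) hand targets after translating the object
    center by `delta` and rotating the object by `yaw` radians about z."""
    c, s = math.cos(yaw), math.sin(yaw)
    new_center = (center[0] + delta[0],
                  center[1] + delta[1],
                  center[2] + delta[2])

    def place(offset: Vec3) -> Vec3:
        # Rotate the grasp offset about z, then attach it to the new center.
        ox = c * offset[0] - s * offset[1]
        oy = s * offset[0] + c * offset[1]
        return (new_center[0] + ox, new_center[1] + oy, new_center[2] + offset[2])

    return place(left_offset), place(right_offset)
```

For example, lifting a tray held 0.2 m to each side of its center by 5 cm: `hand_targets((0.4, 0.0, 0.1), (0.0, 0.2, 0.0), (0.0, -0.2, 0.0), (0.0, 0.0, 0.05))` raises both hand targets by the same 5 cm. Because both targets are derived from one shared object pose, the arms cannot pull the object apart or squeeze it, which is the property a two-handed grasp needs.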

Path planning is not necessary. We can record waypoints (see this old issue for that), as in teo-self-presentation, and make something simple and nice to see.

UPDATED:

Next to do:
