diff --git a/README.md b/README.md

index 08586df612..4b49c3cfbe 100644

--- a/README.md

+++ b/README.md

@@ -52,155 +52,146 @@ limitations under the License.

-A CPU runtime that takes advantage of sparsity within neural networks to reduce compute. Read [more about sparsification](https://docs.neuralmagic.com/user-guides/sparsification).

-Neural Magic's DeepSparse is able to integrate into popular deep learning libraries (e.g., Hugging Face, Ultralytics) allowing you to leverage DeepSparse for loading and deploying sparse models with ONNX.

-ONNX gives the flexibility to serve your model in a framework-agnostic environment.

-Support includes [PyTorch,](https://pytorch.org/docs/stable/onnx.html) [TensorFlow,](https://github.com/onnx/tensorflow-onnx) [Keras,](https://github.com/onnx/keras-onnx) and [many other frameworks](https://github.com/onnx/onnxmltools).

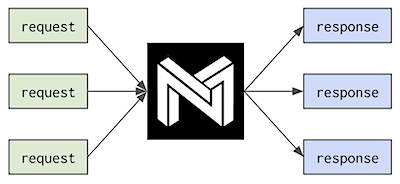

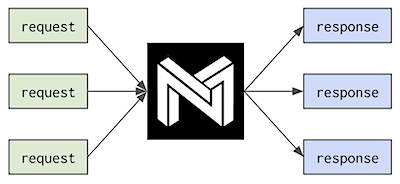

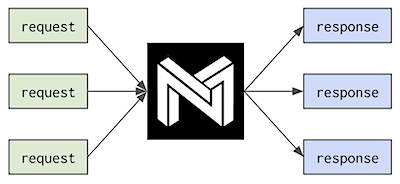

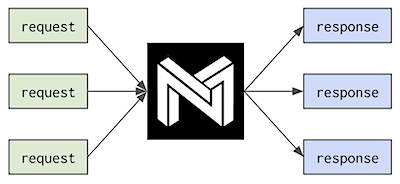

+[DeepSparse](https://github.com/neuralmagic/deepsparse) is a CPU inference runtime that takes advantage of sparsity within neural networks to execute inference quickly. Coupled with [SparseML](https://github.com/neuralmagic/sparseml), an open-source optimization library, DeepSparse enables you to achieve GPU-class performance on commodity hardware.

+

+

+

+

+

+For details of training a sparse model for deployment with DeepSparse, [check out SparseML](https://github.com/neuralmagic/sparseml).

## Installation

-Install DeepSparse Community as follows:

+DeepSparse is available in two editions:

+1. DeepSparse Community is free for evaluation, research, and non-production use with our [DeepSparse Community License](https://neuralmagic.com/legal/engine-license-agreement/).

+2. DeepSparse Enterprise requires a [trial license](https://neuralmagic.com/deepsparse-free-trial/) or [can be fully licensed](https://neuralmagic.com/legal/master-software-license-and-service-agreement/) for production, commercial applications.

+

+#### Install via Docker (Recommended)

+

+DeepSparse Community is available as a container image hosted on [GitHub container registry](https://github.com/neuralmagic/deepsparse/pkgs/container/deepsparse).

```bash

-pip install deepsparse

+docker pull ghcr.io/neuralmagic/deepsparse:1.4.2

+docker tag ghcr.io/neuralmagic/deepsparse:1.4.2 deepsparse-docker

+docker run -it deepsparse-docker

```

-DeepSparse is available in two editions:

-1. [**DeepSparse Community**](#installation) is open-source and free for evaluation, research, and non-production use with our [DeepSparse Community License](https://neuralmagic.com/legal/engine-license-agreement/).

-2. [**DeepSparse Enterprise**](https://docs.neuralmagic.com/products/deepsparse-ent) requires a Trial License or [can be fully licensed](https://neuralmagic.com/legal/master-software-license-and-service-agreement/) for production, commercial applications.

-

-## 🧰 Hardware Support and System Requirements

+- [Check out the Docker page](https://github.com/neuralmagic/deepsparse/tree/main/docker/) for more details.

-To ensure that your CPU is compatible with DeepSparse, it is recommended to review the [Supported Hardware for DeepSparse](https://docs.neuralmagic.com/user-guides/deepsparse-engine/hardware-support) documentation.

+#### Install via PyPI

+DeepSparse Community is also available via PyPI. We recommend using a [virtual environment](https://docs.python.org/3/library/venv.html).

-To ensure that you get the best performance from DeepSparse, it has been thoroughly tested on Python versions 3.7-3.10, ONNX versions 1.5.0-1.12.0, ONNX opset version 11 or higher, and manylinux compliant systems. It is highly recommended to use a [virtual environment](https://docs.python.org/3/library/venv.html) when running DeepSparse. Please note that DeepSparse is only supported natively on Linux. For those using Mac or Windows, running Linux in a Docker or virtual machine is necessary to use DeepSparse.

+```bash

+pip install deepsparse

+```

-## Features

+- [Check out the Installation page](https://github.com/neuralmagic/deepsparse/tree/main/docs/user-guide/installation.md) for optional dependencies.

-- 👩💻 Pipelines for [NLP](https://github.com/neuralmagic/deepsparse/tree/main/src/deepsparse/transformers), [CV Classification](https://github.com/neuralmagic/deepsparse/tree/main/src/deepsparse/image_classification), [CV Detection](https://github.com/neuralmagic/deepsparse/tree/main/src/deepsparse/yolo), [CV Segmentation](https://github.com/neuralmagic/deepsparse/tree/main/src/deepsparse/yolact) and more!

-- 🔌 [DeepSparse Server](https://github.com/neuralmagic/deepsparse/tree/main/src/deepsparse/server)

-- 📜 [DeepSparse Benchmark](https://github.com/neuralmagic/deepsparse/tree/main/src/deepsparse/benchmark)

-- ☁️ [Cloud Deployments and Demos](https://github.com/neuralmagic/deepsparse/tree/main/examples)

+## Hardware Support and System Requirements

-### 👩💻 Pipelines

+Review [Supported Hardware for DeepSparse](https://github.com/neuralmagic/deepsparse/tree/main/docs/user-guide/hardware-support.md) to confirm that your CPU is compatible.

-Pipelines are a high-level Python interface for running inference with DeepSparse across select tasks in NLP and CV:

+DeepSparse is tested on Python versions 3.7-3.10, ONNX versions 1.5.0-1.12.0, ONNX opset version 11 or higher, and manylinux compliant systems. Please note that DeepSparse is only supported natively on Linux. For those using Mac or Windows, running Linux in a Docker or virtual machine is necessary to use DeepSparse.

-| NLP | CV |

-|-----------------------|---------------------------|

-| Text Classification `"text_classification"` | Image Classification `"image_classification"` |

-| Token Classification `"token_classification"` | Object Detection `"yolo"` |

-| Sentiment Analysis `"sentiment_analysis"` | Instance Segmentation `"yolact"` |

-| Question Answering `"question_answering"` | Keypoint Detection `"open_pif_paf"` |

-| MultiLabel Text Classification `"text_classification"` | |

-| Document Classification `"text_classification"` | |

-| Zero-Shot Text Classification `"zero_shot_text_classification"` | |

+## Deployment APIs

+DeepSparse includes three deployment APIs:

-**NLP Example** | Question Answering

-```python

-from deepsparse import Pipeline

+- **Engine** is the lowest-level API. With Engine, you pass tensors and receive the raw logits.

+- **Pipeline** wraps the Engine with pre- and post-processing. With Pipeline, you pass raw data and receive the prediction.

+- **Server** wraps Pipelines with a REST API using FastAPI. With Server, you send raw data over HTTP and receive the prediction.
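To make the layering concrete, here is a toy sketch in plain Python. `ToyEngine`, `ToyPipeline`, the word-scoring "tokenizer" and the label list are all hypothetical stand-ins, not DeepSparse classes. The point is only the shape of the calls: the engine maps tensors to raw scores, and the pipeline adds pre-processing in front of it and post-processing behind it.

```python
# Toy illustration of how the deployment APIs layer on top of each other.
# ToyEngine / ToyPipeline and their logic are hypothetical, not DeepSparse classes.
from typing import Callable, Dict, List


class ToyEngine:
    """Lowest level: tensors in, raw scores (logits) out."""

    def __call__(self, inputs: List[List[float]]) -> List[List[float]]:
        # pretend "model": scores = [negative_score, positive_score]
        return [[-sum(row), sum(row)] for row in inputs]


class ToyPipeline:
    """Wraps an engine with pre- and post-processing: raw data in, prediction out."""

    def __init__(self, engine: Callable, labels: List[str]):
        self.engine = engine
        self.labels = labels

    def preprocess(self, text: str) -> List[List[float]]:
        # hypothetical "tokenizer": +1 per positive word, -1 per negative word
        vocab = {"love": 1.0, "great": 1.0, "hate": -1.0, "slow": -1.0}
        return [[vocab.get(word, 0.0) for word in text.lower().split()]]

    def postprocess(self, logits: List[List[float]]) -> Dict[str, str]:
        # pick the label with the highest raw score
        row = logits[0]
        best = max(range(len(row)), key=row.__getitem__)
        return {"label": self.labels[best]}

    def __call__(self, text: str) -> Dict[str, str]:
        return self.postprocess(self.engine(self.preprocess(text)))


pipeline = ToyPipeline(ToyEngine(), labels=["negative", "positive"])
print(pipeline("I love using DeepSparse"))  # {'label': 'positive'}
```

A Server would sit one layer higher again, accepting raw data over HTTP and handing it to a pipeline like this one.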

-qa_pipeline = Pipeline.create(

- task="question-answering",

- model_path="zoo:nlp/question_answering/bert-base/pytorch/huggingface/squad/12layer_pruned80_quant-none-vnni",

-)

+### Engine

-inference = qa_pipeline(question="What's my name?", context="My name is Snorlax")

-```

-**CV Example** | Image Classification

+The example below downloads a 90% pruned-quantized BERT model for sentiment analysis in ONNX format from SparseZoo, compiles the model, and runs inference on randomly generated input.

```python

-from deepsparse import Pipeline

+from deepsparse import Engine

+from deepsparse.utils import generate_random_inputs, model_to_path

-cv_pipeline = Pipeline.create(

- task='image_classification',

- model_path='zoo:cv/classification/resnet_v1-50/pytorch/sparseml/imagenet/pruned95-none',

-)

+# download onnx, compile

+zoo_stub = "zoo:nlp/sentiment_analysis/obert-base/pytorch/huggingface/sst2/pruned90_quant-none"

+batch_size = 1

+compiled_model = Engine(model=zoo_stub, batch_size=batch_size)

+

+# run inference (input is raw numpy tensors, output is raw scores)

+inputs = generate_random_inputs(model_to_path(zoo_stub), batch_size)

+output = compiled_model(inputs)

+print(output)

-input_image = "my_image.png"

-inference = cv_pipeline(images=input_image)

+# > [array([[-0.3380675 , 0.09602544]], dtype=float32)] << raw scores

```
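The raw scores above are logits, so when you use Engine directly, turning them into a prediction is up to you. The minimal sketch below applies a softmax and an argmax, which is roughly the kind of post-processing a Pipeline adds. It assumes NumPy and assumes index 0 is `negative` and index 1 is `positive`; the real label order comes from the model's config, which this snippet does not read. The example feeds random inputs, so the resulting probabilities say nothing about any real sentence.

```python
# Convert raw scores (logits) from Engine into probabilities with a softmax.
# Logits are copied from the sample output above; the label order is an assumption.
import numpy as np

logits = np.array([[-0.3380675, 0.09602544]], dtype=np.float32)
labels = ["negative", "positive"]  # assumed index -> label mapping


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # subtract the row max for numerical stability before exponentiating
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


probs = softmax(logits)
predicted = [labels[i] for i in probs.argmax(axis=-1)]
print(probs, predicted)
```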

+### DeepSparse Pipelines

-### 🔌 DeepSparse Server

+Pipeline is the default API for interacting with DeepSparse. Similar to Hugging Face Pipelines, DeepSparse Pipelines wrap Engine with pre- and post-processing (as well as other utilities), enabling you to send raw data to DeepSparse and receive the post-processed prediction.

-DeepSparse Server is a tool that enables you to serve your models and pipelines directly from your terminal.

+The example below downloads a 90% pruned-quantized BERT model for sentiment analysis in ONNX format from SparseZoo, sets up a pipeline, and runs inference on sample data.

-The server is built on top of two powerful libraries: the FastAPI web framework and the Uvicorn web server. This combination ensures that DeepSparse Server delivers excellent performance and reliability. Install with this command:

+```python

+from deepsparse import Pipeline

-```bash

-pip install deepsparse[server]

+# download onnx, set up pipeline

+zoo_stub = "zoo:nlp/sentiment_analysis/obert-base/pytorch/huggingface/sst2/pruned90_quant-none"

+sentiment_analysis_pipeline = Pipeline.create(

+ task="sentiment-analysis", # name of the task

+ model_path=zoo_stub, # zoo stub or path to local onnx file

+)

+

+# run inference (input is a sentence, output is the prediction)

+prediction = sentiment_analysis_pipeline("I love using DeepSparse Pipelines")

+print(prediction)

+# > labels=['positive'] scores=[0.9954759478569031]

```

-#### Single Model

+#### Additional Resources

+- Check out the [Use Cases Page](https://github.com/neuralmagic/deepsparse/tree/main/docs/use-cases) for more details on supported tasks.

+- Check out the [Pipelines User Guide](https://github.com/neuralmagic/deepsparse/tree/main/docs/user-guide/deepsparse-pipelines.md) for more usage details.

-Once installed, the following example CLI command is available for running inference with a single BERT model:

+### DeepSparse Server

-```bash

-deepsparse.server \

- task question_answering \

- --model_path "zoo:nlp/question_answering/bert-base/pytorch/huggingface/squad/12layer_pruned80_quant-none-vnni"

-```

+Server wraps Pipelines with REST APIs, enabling you to stand up a model-serving endpoint running DeepSparse. You can then send raw data to DeepSparse over HTTP and receive the post-processed predictions.

-To look up arguments run: `deepsparse.server --help`.

-

-#### Multiple Models

-To deploy multiple models in your setup, a `config.yaml` file should be created. In the example provided, two BERT models are configured for the question-answering task:

-

-```yaml

-num_workers: 1

-endpoints:

- - task: question_answering

- route: /predict/question_answering/base

- model: zoo:nlp/question_answering/bert-base/pytorch/huggingface/squad/base-none

- batch_size: 1

- - task: question_answering

- route: /predict/question_answering/pruned_quant

- model: zoo:nlp/question_answering/bert-base/pytorch/huggingface/squad/12layer_pruned80_quant-none-vnni

- batch_size: 1

-```

+DeepSparse Server is launched from the command line, configured via arguments or a server configuration file. The following downloads a 90% pruned-quantized BERT model for sentiment analysis in ONNX format from SparseZoo and launches a sentiment analysis endpoint:

-After the `config.yaml` file has been created, the server can be started by passing the file path as an argument:

```bash

-deepsparse.server config config.yaml

+deepsparse.server \

+ --task sentiment-analysis \

+ --model_path zoo:nlp/sentiment_analysis/obert-base/pytorch/huggingface/sst2/pruned90_quant-none

```

-Read the [DeepSparse Server](https://github.com/neuralmagic/deepsparse/tree/main/src/deepsparse/server) README for further details.

-

-### 📜 DeepSparse Benchmark

+Sending a request:

-DeepSparse Benchmark, a command-line (CLI) tool, is used to evaluate the DeepSparse Engine's performance with ONNX models. This tool processes arguments, downloads and compiles the network into the engine, creates input tensors, and runs the model based on the selected scenario.

-

-Run `deepsparse.benchmark -h` to look up arguments:

+```python

+import requests

-```shell

-deepsparse.benchmark [-h] [-b BATCH_SIZE] [-i INPUT_SHAPES] [-ncores NUM_CORES] [-s {async,sync,elastic}] [-t TIME]

- [-w WARMUP_TIME] [-nstreams NUM_STREAMS] [-pin {none,core,numa}] [-e ENGINE] [-q] [-x EXPORT_PATH]

- model_path

+url = "http://localhost:5543/predict" # Server's port defaults to 5543

+obj = {"sequences": "Snorlax loves my Tesla!"}

+response = requests.post(url, json=obj)

+print(response.text)

+# {"labels":["positive"],"scores":[0.9965094327926636]}

```
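If you would rather not install `requests`, the same call can be made with the standard library. This sketch assumes the server above is running on the default port 5543; `build_request`, `parse_prediction` and `predict` are illustrative helper names, not part of DeepSparse.

```python
# Dependency-free variant of the request above, using only the standard library.
# Assumes a server launched as shown, listening on the default port 5543.
import json
import urllib.request

URL = "http://localhost:5543/predict"


def build_request(url: str, payload: dict) -> urllib.request.Request:
    # POST a JSON body, mirroring requests.post(url, json=payload)
    data = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"}, method="POST"
    )


def parse_prediction(body: str) -> list:
    # pair each label with its score from the JSON response
    result = json.loads(body)
    return list(zip(result["labels"], result["scores"]))


def predict(url: str, text: str) -> list:
    # send one sequence and return [(label, score), ...]
    req = build_request(url, {"sequences": text})
    with urllib.request.urlopen(req) as resp:
        return parse_prediction(resp.read().decode("utf-8"))


# with the server running:
# print(predict(URL, "Snorlax loves my Tesla!"))
```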

+#### Additional Resources

+- Check out the [Use Cases Page](https://github.com/neuralmagic/deepsparse/tree/main/docs/use-cases) for more details on supported tasks.

+- Check out the [Server User Guide](https://github.com/neuralmagic/deepsparse/tree/main/docs/user-guide/deepsparse-server.md) for more usage details.

-Refer to the [Benchmark](https://github.com/neuralmagic/deepsparse/tree/main/src/deepsparse/benchmark) README for examples of specific inference scenarios.

+## ONNX

-### 🦉 Custom ONNX Model Support

+DeepSparse accepts models in the ONNX format. ONNX models can be passed in one of two ways:

-DeepSparse is capable of accepting ONNX models from two sources:

+- **SparseZoo Stub**: [SparseZoo](https://sparsezoo.neuralmagic.com/) is an open-source repository of sparse models. The examples on this page use SparseZoo stubs to identify models and download them for deployment in DeepSparse.

-**SparseZoo ONNX**: This is an open-source repository of sparse models available for download. [SparseZoo](https://github.com/neuralmagic/sparsezoo) offers inference-optimized models, which are trained using repeatable sparsification recipes and state-of-the-art techniques from [SparseML](https://github.com/neuralmagic/sparseml).

-

-**Custom ONNX**: Users can provide their own ONNX models, whether dense or sparse. By plugging in a custom model, users can compare its performance with other solutions.

+- **Local ONNX File**: Users can provide their own ONNX models, whether dense or sparse. For example:

```bash

-> wget https://github.com/onnx/models/raw/main/vision/classification/mobilenet/model/mobilenetv2-7.onnx

-Saving to: ‘mobilenetv2-7.onnx’

+wget https://github.com/onnx/models/raw/main/vision/classification/mobilenet/model/mobilenetv2-7.onnx

```

-Custom ONNX Benchmark example:

```python

-from deepsparse import compile_model

+from deepsparse import Engine

from deepsparse.utils import generate_random_inputs

onnx_filepath = "mobilenetv2-7.onnx"

batch_size = 16

@@ -209,34 +200,35 @@ batch_size = 16

inputs = generate_random_inputs(onnx_filepath, batch_size)

# Compile and run

-engine = compile_model(onnx_filepath, batch_size)

-outputs = engine.run(inputs)

+compiled_model = Engine(model=onnx_filepath, batch_size=batch_size)

+outputs = compiled_model(inputs)

+print(outputs[0].shape)

+# (16, 1000) << batch, num_classes

```
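The output holds one row of 1,000 class scores per image. The minimal NumPy sketch below shows the usual next step: picking the top-5 classes in each row. A seeded random array stands in for `outputs[0]`, so the indices it returns are meaningless, and mapping indices to ImageNet class names is not shown.

```python
# Post-process a (batch, num_classes) output into top-5 class indices per row.
# Random scores stand in for `outputs[0]` from the example above.
import numpy as np

rng = np.random.default_rng(0)
logits = rng.standard_normal((16, 1000)).astype(np.float32)  # stand-in for outputs[0]


def top_k(scores: np.ndarray, k: int = 5) -> np.ndarray:
    # argpartition finds the k largest per row without a full sort
    part = np.argpartition(scores, -k, axis=1)[:, -k:]
    part_scores = np.take_along_axis(scores, part, axis=1)
    # then sort only those k candidates, highest score first
    order = np.argsort(-part_scores, axis=1)
    return np.take_along_axis(part, order, axis=1)


top5 = top_k(logits)
print(top5.shape)  # (16, 5)
```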

-The [GitHub repository](https://github.com/neuralmagic/deepsparse) repository contains package APIs and examples that help users swiftly begin benchmarking and performing inference on sparse models.

-

-### Scheduling Single-Stream, Multi-Stream, and Elastic Inference

+## Inference Modes

-DeepSparse offers different inference scenarios based on your use case. Read more details here: [Inference Types](https://github.com/neuralmagic/deepsparse/blob/main/docs/source/scheduler.md).

+DeepSparse offers different inference scenarios based on your use case.

-⚡ **Single-stream** scheduling: the latency/synchronous scenario, requests execute serially. [`default`]

+**Single-stream** scheduling: the latency/synchronous scenario, requests execute serially. [`default`]

It's highly optimized for minimum per-request latency, using all of the system's resources provided to it on every request it gets.

-⚡ **Multi-stream** scheduling: the throughput/asynchronous scenario, requests execute in parallel.

+**Multi-stream** scheduling: the throughput/asynchronous scenario, requests execute in parallel.

The most common use cases for the multi-stream scheduler are where parallelism is low with respect to core count, and where requests need to be made asynchronously without time to batch them.

-## Resources

-#### Libraries

-- [DeepSparse](https://docs.neuralmagic.com/deepsparse/)

-- [SparseML](https://docs.neuralmagic.com/sparseml/)

-- [SparseZoo](https://docs.neuralmagic.com/sparsezoo/)

-- [Sparsify](https://docs.neuralmagic.com/sparsify/)

+- [Check out the Scheduler User Guide](https://github.com/neuralmagic/deepsparse/tree/main/docs/user-guide/scheduler.md) for more details.
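The difference between the two schedulers comes down to how requests overlap. The toy below uses only the standard library. It runs eight requests serially, then runs them again with four in flight at once. `fake_inference` only sleeps and is a hypothetical stand-in. It does not model DeepSparse's scheduler, or how single-stream devotes all cores to each request; it shows only the serial-versus-parallel ordering described above.

```python
# Toy comparison of serial (single-stream) vs. overlapping (multi-stream) requests.
# `fake_inference` is a hypothetical stand-in that sleeps; it is not DeepSparse's scheduler.
import time
from concurrent.futures import ThreadPoolExecutor


def fake_inference(request_id: int, cost_s: float = 0.05) -> int:
    time.sleep(cost_s)  # pretend to run a model
    return request_id


def single_stream(requests_in: list) -> list:
    # requests execute one after another
    return [fake_inference(r) for r in requests_in]


def multi_stream(requests_in: list, num_streams: int = 4) -> list:
    # up to `num_streams` requests are in flight at the same time; results keep input order
    with ThreadPoolExecutor(max_workers=num_streams) as pool:
        return list(pool.map(fake_inference, requests_in))


reqs = list(range(8))
for name, fn in [("single-stream", single_stream), ("multi-stream", multi_stream)]:
    start = time.perf_counter()
    out = fn(reqs)
    print(f"{name}: {len(out)} requests in {time.perf_counter() - start:.2f}s")
```

Here the multi-stream run finishes in a fraction of the single-stream time because sleeping threads overlap perfectly. On real hardware, the trade-off depends on core count and batch size.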

+

+## Additional Resources

+- [Benchmarking Performance](https://github.com/neuralmagic/deepsparse/tree/main/docs/user-guide/deepsparse-benchmarking.md)

+- [User Guide](https://github.com/neuralmagic/deepsparse/tree/main/docs/user-guide)

+- [Use Cases](https://github.com/neuralmagic/deepsparse/tree/main/docs/use-cases)

+- [Cloud Deployments and Demos](https://github.com/neuralmagic/deepsparse/tree/main/examples/)

#### Versions

- [DeepSparse](https://pypi.org/project/deepsparse) | stable

@@ -251,7 +243,6 @@ The most common use cases for the multi-stream scheduler are where parallelism i

### Be Part of the Future... And the Future is Sparse!

-

Contribute with code, examples, integrations, and documentation as well as bug reports and feature requests! [Learn how here.](https://github.com/neuralmagic/deepsparse/blob/main/CONTRIBUTING.md)

For user help or questions about DeepSparse, sign up or log in to our **[Deep Sparse Community Slack](https://join.slack.com/t/discuss-neuralmagic/shared_invite/zt-q1a1cnvo-YBoICSIw3L1dmQpjBeDurQ)**. We are growing the community member by member and happy to see you there. Bugs, feature requests, or additional questions can also be posted to our [GitHub Issue Queue.](https://github.com/neuralmagic/deepsparse/issues) You can get the latest news, webinar and event invites, research papers, and other ML Performance tidbits by [subscribing](https://neuralmagic.com/subscribe/) to the Neural Magic community.

diff --git a/docs/neural-magic-workflow.png b/docs/neural-magic-workflow.png

new file mode 100644

index 0000000000..f870b4e97c

Binary files /dev/null and b/docs/neural-magic-workflow.png differ

diff --git a/docs/_static/css/nm-theme-adjustment.css b/docs/old/_static/css/nm-theme-adjustment.css

similarity index 100%

rename from docs/_static/css/nm-theme-adjustment.css

rename to docs/old/_static/css/nm-theme-adjustment.css

diff --git a/docs/_templates/versions.html b/docs/old/_templates/versions.html

similarity index 100%

rename from docs/_templates/versions.html

rename to docs/old/_templates/versions.html

diff --git a/docs/api/.gitkeep b/docs/old/api/.gitkeep

similarity index 100%

rename from docs/api/.gitkeep

rename to docs/old/api/.gitkeep

diff --git a/docs/api/deepsparse.rst b/docs/old/api/deepsparse.rst

similarity index 100%

rename from docs/api/deepsparse.rst

rename to docs/old/api/deepsparse.rst

diff --git a/docs/api/deepsparse.transformers.rst b/docs/old/api/deepsparse.transformers.rst

similarity index 100%

rename from docs/api/deepsparse.transformers.rst

rename to docs/old/api/deepsparse.transformers.rst

diff --git a/docs/api/deepsparse.utils.rst b/docs/old/api/deepsparse.utils.rst

similarity index 100%

rename from docs/api/deepsparse.utils.rst

rename to docs/old/api/deepsparse.utils.rst

diff --git a/docs/api/modules.rst b/docs/old/api/modules.rst

similarity index 100%

rename from docs/api/modules.rst

rename to docs/old/api/modules.rst

diff --git a/docs/conf.py b/docs/old/conf.py

similarity index 100%

rename from docs/conf.py

rename to docs/old/conf.py

diff --git a/docs/debugging-optimizing/diagnostics-debugging.md b/docs/old/debugging-optimizing/diagnostics-debugging.md

similarity index 100%

rename from docs/debugging-optimizing/diagnostics-debugging.md

rename to docs/old/debugging-optimizing/diagnostics-debugging.md

diff --git a/docs/debugging-optimizing/example-log.md b/docs/old/debugging-optimizing/example-log.md

similarity index 100%

rename from docs/debugging-optimizing/example-log.md

rename to docs/old/debugging-optimizing/example-log.md

diff --git a/docs/debugging-optimizing/index.rst b/docs/old/debugging-optimizing/index.rst

similarity index 100%

rename from docs/debugging-optimizing/index.rst

rename to docs/old/debugging-optimizing/index.rst

diff --git a/docs/debugging-optimizing/numactl-utility.md b/docs/old/debugging-optimizing/numactl-utility.md

similarity index 100%

rename from docs/debugging-optimizing/numactl-utility.md

rename to docs/old/debugging-optimizing/numactl-utility.md

diff --git a/docs/favicon.ico b/docs/old/favicon.ico

similarity index 100%

rename from docs/favicon.ico

rename to docs/old/favicon.ico

diff --git a/docs/index.rst b/docs/old/index.rst

similarity index 100%

rename from docs/index.rst

rename to docs/old/index.rst

diff --git a/docs/source/c++api-overview.md b/docs/old/source/c++api-overview.md

similarity index 100%

rename from docs/source/c++api-overview.md

rename to docs/old/source/c++api-overview.md

diff --git a/docs/source/hardware.md b/docs/old/source/hardware.md

similarity index 100%

rename from docs/source/hardware.md

rename to docs/old/source/hardware.md

diff --git a/docs/source/icon-deepsparse.png b/docs/old/source/icon-deepsparse.png

similarity index 100%

rename from docs/source/icon-deepsparse.png

rename to docs/old/source/icon-deepsparse.png

diff --git a/docs/source/multi-stream.png b/docs/old/source/multi-stream.png

similarity index 100%

rename from docs/source/multi-stream.png

rename to docs/old/source/multi-stream.png

diff --git a/docs/source/scheduler.md b/docs/old/source/scheduler.md

similarity index 100%

rename from docs/source/scheduler.md

rename to docs/old/source/scheduler.md

diff --git a/docs/source/single-stream.png b/docs/old/source/single-stream.png

similarity index 100%

rename from docs/source/single-stream.png

rename to docs/old/source/single-stream.png

diff --git a/docs/use-cases/README.md b/docs/use-cases/README.md

new file mode 100644

index 0000000000..8d7532d398

--- /dev/null

+++ b/docs/use-cases/README.md

@@ -0,0 +1,90 @@

+

+

+# Use Cases

+

+There are three interfaces for interacting with DeepSparse:

+

+- **Engine** is the lowest-level API that enables you to compile a model and run inference on raw input tensors.

+

+- **Pipeline** is the default DeepSparse API. Similar to Hugging Face Pipelines, it wraps Engine with task-specific pre-processing and post-processing steps, allowing you to make requests on raw data and receive post-processed predictions.

+

+- **Server** is a REST API wrapper around Pipelines built on FastAPI and Uvicorn. It enables you to start a model serving endpoint running DeepSparse with a single CLI.

+

+This directory offers examples using each API in various supported tasks.

+

+### Supported Tasks

+

+DeepSparse supports the following tasks out of the box:

+

+| NLP | CV |

+|-----------------------|---------------------------|

+| [Text Classification `"text-classification"`](nlp/text-classification.md) | [Image Classification `"image_classification"`](cv/image-classification.md) |

+| [Token Classification `"token-classification"`](nlp/token-classification.md) | [Object Detection `"yolo"`](cv/object-detection-yolov5.md) |

+| [Sentiment Analysis `"sentiment-analysis"`](nlp/sentiment-analysis.md) | [Instance Segmentation `"yolact"`](cv/image-segmentation-yolact.md) |

+| [Question Answering `"question-answering"`](nlp/question-answering.md) | |

+| [Zero-Shot Text Classification `"zero-shot-text-classification"`](nlp/zero-shot-text-classification.md) | |

+| [Embedding Extraction `"transformers_embedding_extraction"`](nlp/transformers-embedding-extraction.md) | |
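The table and the examples spell task names with both hyphens and underscores, for example `"sentiment-analysis"` in the main README and `"sentiment_analysis"` below. The examples suggest the two spellings are interchangeable. Under that assumption, a hypothetical helper that checks a task name against the table could normalize separators first. This is not DeepSparse's own lookup code.

```python
# Hypothetical helper: treat hyphens and underscores in task names as equivalent.
# The task list is copied from the table above; the matching rule is an assumption.
SUPPORTED_TASKS = [
    "text-classification",
    "token-classification",
    "sentiment-analysis",
    "question-answering",
    "zero-shot-text-classification",
    "transformers_embedding_extraction",
    "image_classification",
    "yolo",
    "yolact",
]


def normalize_task(name: str) -> str:
    # lowercase and unify separators so "sentiment-analysis" == "sentiment_analysis"
    return name.strip().lower().replace("-", "_")


def is_supported(name: str) -> bool:
    return normalize_task(name) in {normalize_task(t) for t in SUPPORTED_TASKS}


print(is_supported("sentiment_analysis"), is_supported("sentiment-analysis"))  # True True
```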

+

+### Examples

+

+**Pipeline Example** | Sentiment Analysis

+

+Here's an example of how a task is used to create a Pipeline:

+

+```python

+from deepsparse import Pipeline

+

+pipeline = Pipeline.create(

+ task="sentiment_analysis",

+ model_path="zoo:nlp/sentiment_analysis/obert-base/pytorch/huggingface/sst2/pruned90_quant-none")

+

+print(pipeline("I love DeepSparse Pipelines!"))

+# labels=['positive'] scores=[0.998009443283081]

+```

+

+**Server Example** | Sentiment Analysis

+

+Here's an example of how a task is used to create a Server:

+

+```bash

+deepsparse.server \

+ --task sentiment_analysis \

+ --model_path zoo:nlp/sentiment_analysis/obert-base/pytorch/huggingface/sst2/pruned90_quant-none

+```

+

+Making a request:

+

+```python

+import requests

+

+# Uvicorn is running on this port

+url = 'http://0.0.0.0:5543/predict'

+

+# send the data

+obj = {"sequences": "Sending requests to DeepSparse Server is fast and easy!"}

+resp = requests.post(url=url, json=obj)

+

+# receive the post-processed output

+print(resp.text)

+# >> {"labels":["positive"],"scores":[0.9330279231071472]}

+```

+

+### Additional Resources

+

+- [Custom Tasks](../user-guide/deepsparse-pipelines.md#custom-use-case)

+- [Pipeline User Guide](../user-guide/deepsparse-pipelines.md)

+- [Server User Guide](../user-guide/deepsparse-server.md)

diff --git a/docs/use-cases/cv/embedding-extraction.md b/docs/use-cases/cv/embedding-extraction.md

new file mode 100644

index 0000000000..ff7e9f7ad1

--- /dev/null

+++ b/docs/use-cases/cv/embedding-extraction.md

@@ -0,0 +1,130 @@

+

+

+# Deploying Embedding Extraction Models With DeepSparse

+This page explains how to deploy an Embedding Extraction Pipeline with DeepSparse.

+

+## Installation Requirements

+This use case requires the installation of [DeepSparse Server](../../user-guide/installation.md).

+

+Confirm your machine is compatible with our [hardware requirements](../../user-guide/hardware-support.md).

+

+## Model Format

+The Embedding Extraction Pipeline enables you to generate embeddings in any domain, meaning you can use it with any ONNX model. It (optionally) removes the projection head from the model, such that you can re-use SparseZoo models and custom models you have trained in the embedding extraction scenario.

+

+There are two options for passing a model to the Embedding Extraction Pipeline:

+

+- Pass a Local ONNX File

+- Pass a SparseZoo Stub (which identifies an ONNX model in the SparseZoo)

+

+## DeepSparse Pipelines

+Pipeline is the default interface for interacting with DeepSparse.

+

+Like Hugging Face Pipelines, DeepSparse Pipelines wrap pre- and post-processing around the inference performed by the Engine. This creates a clean API that allows you to pass raw text and images to DeepSparse and receive the post-processed predictions, making it easy to add DeepSparse to your application.

+

+We will use the `Pipeline.create()` constructor to create an instance of an embedding extraction Pipeline with a 95% pruned-quantized version of ResNet-50 trained on `imagenet`. We can then pass images to the `Pipeline` and receive the embeddings. All of the pre-processing is handled by the `Pipeline`.

+

+The Embedding Extraction Pipeline handles some useful actions around inference:

+

+- First, on initialization, the Pipeline (optionally) removes a projection head from a model. You can use the `emb_extraction_layer` argument to specify which layer to return. If your ONNX model has no projection head, you can set `emb_extraction_layer=None` (the default) to skip this step.

+

+- Second, as with all DeepSparse Pipelines, it handles pre-processing such that you can pass raw input. You will notice that in addition to the typical task argument used in `Pipeline.create()`, the Embedding Extraction Pipeline includes a `base_task` argument. This argument tells the Pipeline the domain of the model, such that the Pipeline can figure out what pre-processing to do.

+

+Download an image to use with the Pipeline.

+```bash

+wget https://huggingface.co/spaces/neuralmagic/image-classification/resolve/main/lion.jpeg

+```

+

+This is an example of extracting the last layer from ResNet-50:

+

+```python

+from deepsparse import Pipeline

+

+# this step removes the projection head before compiling the model

+rn50_embedding_pipeline = Pipeline.create(

+ task="embedding-extraction",

+ base_task="image-classification", # tells the pipeline to expect images and normalize input with ImageNet means/stds

+ model_path="zoo:cv/classification/resnet_v1-50/pytorch/sparseml/imagenet/pruned95_quant-none",

+ emb_extraction_layer=-3, # extracts last layer before projection head and softmax

+)

+

+# this step runs pre-processing, inference and returns an embedding

+embedding = rn50_embedding_pipeline(images="lion.jpeg")

+print(len(embedding.embeddings[0][0]))

+# 2048 << size of final layer

+```
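A common use for these embeddings is similarity search. Below is a minimal NumPy sketch of cosine similarity. The two seeded random 2048-dimensional vectors are placeholders for `embedding.embeddings[0][0]` from two pipeline calls, not real ResNet-50 output.

```python
# Compare two images by the cosine similarity of their embeddings.
# Random vectors stand in for real embeddings returned by the pipeline.
import numpy as np


def cosine_similarity(a, b) -> float:
    # flatten both inputs, then compute dot(a, b) / (|a| * |b|)
    a = np.asarray(a, dtype=np.float64).ravel()
    b = np.asarray(b, dtype=np.float64).ravel()
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))


rng = np.random.default_rng(0)
emb_lion = rng.standard_normal(2048)   # placeholder for one image's embedding
emb_other = rng.standard_normal(2048)  # placeholder for another image's embedding
print(cosine_similarity(emb_lion, emb_lion))   # ~1.0: a vector matches itself
print(cosine_similarity(emb_lion, emb_other))  # close to 0 for unrelated random vectors
```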

+

+### Cross Use Case Functionality

+Check out the [Pipeline User Guide](../../user-guide/deepsparse-pipelines.md) for more details on configuring the Pipeline.

+

+## DeepSparse Server

+As an alternative to the Python API, DeepSparse Server allows you to serve an Embedding Extraction Pipeline over HTTP. Configuring the server uses the same parameters and schemas as the Pipelines.

+

+Once launched, a `/docs` endpoint is created with full endpoint descriptions and support for making sample requests.

+

+This configuration file sets `emb_extraction_layer` to -3:

+```yaml

+# config.yaml

+endpoints:

+ - task: embedding_extraction

+ model: zoo:cv/classification/resnet_v1-50/pytorch/sparseml/imagenet/pruned95_quant-none

+ kwargs:

+ base_task: image_classification

+ emb_extraction_layer: -3

+```

+Spin up the server:

+```bash

+deepsparse.server --config_file config.yaml

+```

+

+Make requests to the server:

+```python

+import json
+
+import requests
+
+url = "http://0.0.0.0:5543/predict/from_files"
+paths = ["lion.jpeg"]
+files = [("request", open(img, "rb")) for img in paths]
+try:
+    resp = requests.post(url=url, files=files)
+finally:
+    # close the image file handles once the upload is done
+    for _, f in files:
+        f.close()
+result = json.loads(resp.text)
+
+print(len(result["embeddings"][0][0]))
+# 2048 << size of final layer

+```

+## Using a Custom ONNX File

+Apart from using models from the SparseZoo, DeepSparse allows you to use custom ONNX files for embedding extraction.

+

+The first step is to obtain the ONNX model. You can obtain the file by converting your model to ONNX after training.

+Click Download on the [ResNet-50 - ImageNet page](https://sparsezoo.neuralmagic.com/models/cv%2Fclassification%2Fresnet_v1-50%2Fpytorch%2Fsparseml%2Fimagenet%2Fpruned95_uniform_quant-none) to download an ONNX ResNet model for demonstration.

+

+Extract the downloaded file and use the ResNet-50 ONNX model for embedding extraction:

+```python

+from deepsparse import Pipeline

+

+# this step removes the projection head before compiling the model

+rn50_embedding_pipeline = Pipeline.create(

+ task="embedding-extraction",

+ base_task="image-classification", # tells the pipeline to expect images and normalize input with ImageNet means/stds

+ model_path="resnet.onnx",

+ emb_extraction_layer=-3, # extracts last layer before projection head and softmax

+)

+

+# this step runs pre-processing, inference and returns an embedding

+embedding = rn50_embedding_pipeline(images="lion.jpeg")

+print(len(embedding.embeddings[0][0]))

+# 2048

+```

+### Cross Use Case Functionality

+Check out the [Server User Guide](../../user-guide/deepsparse-server.md) for more details on configuring the Server.

diff --git a/docs/use-cases/cv/image-classification.md b/docs/use-cases/cv/image-classification.md

new file mode 100644

index 0000000000..6d99374dd2

--- /dev/null

+++ b/docs/use-cases/cv/image-classification.md

@@ -0,0 +1,283 @@

+

+

+# Deploying Image Classification Models with DeepSparse

+

+This page explains how to benchmark and deploy an image classification model with DeepSparse.

+

+There are three interfaces for interacting with DeepSparse:

+- **Engine** is the lowest-level API that enables you to compile a model and run inference on raw input tensors.

+

+- **Pipeline** is the default DeepSparse API. Similar to Hugging Face Pipelines, it wraps Engine with pre-processing

+and post-processing steps, allowing you to make requests on raw data and receive post-processed predictions.

+

+- **Server** is a REST API wrapper around Pipelines built on [FastAPI](https://fastapi.tiangolo.com/) and [Uvicorn](https://www.uvicorn.org/). It enables you to start a model serving

+endpoint running DeepSparse with a single CLI command.
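The following toy sketch in plain Python makes this layering concrete. `ToyEngine` and `ToyPipeline` are hypothetical classes written only for illustration and are not part of DeepSparse. They show how a Pipeline composes three steps: pre-processing, an Engine call, and post-processing. A Server would then expose such a pipeline behind an HTTP endpoint.

```python
from typing import Any, Callable, List


class ToyEngine:
    """Hypothetical stand-in for an engine: runs a model on numeric inputs only."""

    def __init__(self, model: Callable[[List[float]], List[float]]):
        self.model = model

    def __call__(self, tensor: List[float]) -> List[float]:
        return self.model(tensor)


class ToyPipeline:
    """Hypothetical stand-in for a pipeline: pre-process -> engine -> post-process."""

    def __init__(self, engine: ToyEngine,
                 preprocess: Callable[[Any], List[float]],
                 postprocess: Callable[[List[float]], Any]):
        self.engine = engine
        self.preprocess = preprocess
        self.postprocess = postprocess

    def __call__(self, raw: Any) -> Any:
        return self.postprocess(self.engine(self.preprocess(raw)))


# Toy "model" that doubles each input value.
engine = ToyEngine(lambda xs: [2.0 * x for x in xs])
pipeline = ToyPipeline(
    engine,
    preprocess=lambda pixels: [p / 255.0 for p in pixels],  # raw 8-bit values -> floats
    postprocess=lambda scores: max(range(len(scores)), key=scores.__getitem__),  # argmax
)
print(pipeline([10, 200, 30]))  # 1
```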

+

+This example uses ResNet-50. For a full list of pre-sparsified image classification models, [check out the SparseZoo](https://sparsezoo.neuralmagic.com/?domain=cv&sub_domain=classification&page=1).

+

+## Installation Requirements

+

+This use case requires the installation of [DeepSparse Server](../../user-guide/installation.md).

+

+Confirm your machine is compatible with our [hardware requirements](../../user-guide/hardware-support.md).

+

+## Benchmarking

+

+We can use the benchmarking utility to demonstrate DeepSparse's performance. We ran the numbers below on an AWS `c6i.2xlarge` instance (4 cores).

+

+### ONNX Runtime Baseline

+

+As a baseline, let's check out ONNX Runtime's performance on ResNet-50. Make sure you have ORT installed (`pip install onnxruntime`).

+

+```bash

+deepsparse.benchmark \

+ zoo:cv/classification/resnet_v1-50/pytorch/sparseml/imagenet/base-none \

+ -b 64 -s sync -nstreams 1 \

+ -e onnxruntime

+

+> Original Model Path: zoo:cv/classification/resnet_v1-50/pytorch/sparseml/imagenet/base-none

+> Batch Size: 64

+> Scenario: sync

+> Throughput (items/sec): 71.83

+```

+ONNX Runtime achieves 72 items/second with batch size 64.

+

+### DeepSparse Speedup

+

+Now, let's run DeepSparse on an inference-optimized sparse version of ResNet-50. This model has been 95% pruned and quantized, while retaining >99% of the dense baseline's accuracy on the `imagenet` dataset.

+

+```bash

+deepsparse.benchmark \

+ zoo:cv/classification/resnet_v1-50/pytorch/sparseml/imagenet/pruned95_quant-none \

+ -b 64 -s sync -nstreams 1 \

+ -e deepsparse

+

+> Original Model Path: zoo:cv/classification/resnet_v1-50/pytorch/sparseml/imagenet/pruned95_quant-none

+> Batch Size: 64

+> Scenario: sync

+> Throughput (items/sec): 345.69

+```

+

+DeepSparse achieves 346 items/second, a 4.8x speedup over ONNX Runtime!
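The speedup follows directly from the two reported throughputs. The `sync` scenario processes one batch at a time, so dividing the batch size by the throughput also gives an approximate average time per batch. That per-batch time is a derived estimate, not a number the benchmark prints.

```python
# Throughput figures reported above (items/sec, batch size 64, sync scenario).
ort_throughput = 71.83
deepsparse_throughput = 345.69
batch_size = 64

speedup = deepsparse_throughput / ort_throughput

# Approximate seconds per batch when batches run one after another.
ort_batch_latency = batch_size / ort_throughput
ds_batch_latency = batch_size / deepsparse_throughput

print(f"speedup: {speedup:.1f}x")
print(f"ONNX Runtime: {ort_batch_latency * 1000:.0f} ms/batch")
print(f"DeepSparse:   {ds_batch_latency * 1000:.0f} ms/batch")
```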

+

+## DeepSparse Engine

+Engine is the lowest-level API for interacting with DeepSparse. Where possible, we recommend using the Pipeline API, but Engine is available if you want to handle pre- or post-processing yourself.

+

+With Engine, we can compile an ONNX file and run inference on raw tensors.

+

+Here's an example, using a 95% pruned-quantized ResNet-50 trained on `imagenet` from SparseZoo:

+```python

+from deepsparse import Engine

+from deepsparse.utils import generate_random_inputs, model_to_path

+import numpy as np

+

+# download onnx from sparsezoo and compile with batch size 1

+sparsezoo_stub = "zoo:cv/classification/resnet_v1-50/pytorch/sparseml/imagenet/pruned95_quant-none"

+batch_size = 1

+compiled_model = Engine(

+ model=sparsezoo_stub, # sparsezoo stub or path to local ONNX

+ batch_size=batch_size # defaults to batch size 1

+)

+

+# input is raw numpy tensors, output is raw scores for classes

+inputs = generate_random_inputs(model_to_path(sparsezoo_stub), batch_size)

+output = compiled_model(inputs)

+print(output)

+

+# [array([[-7.73529887e-01, 1.67251182e+00, -1.68212160e-01,

+# ....

+# 1.26290070e-05, 2.30549040e-06, 2.97072188e-06, 1.90549777e-04]], dtype=float32)]

+```
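Engine returns raw outputs, so turning them into a prediction is up to you. Below is a minimal post-processing sketch in plain Python. It applies a softmax to one row of class scores and picks the highest-probability class. It assumes the row holds unnormalized logits over the ImageNet classes; if your model already emits probabilities, skip the softmax. The three scores in the example are illustrative only.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert raw scores to probabilities (subtract the max for numerical stability)."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(logits: Sequence[float]) -> Tuple[int, float]:
    """Return (class index, probability) of the highest-scoring class."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return best, probs[best]


# Illustrative scores; with Engine you might pass output[0][0] instead
# (assumption: the first output has shape [batch_size, num_classes]).
label, confidence = predict([-0.77, 1.67, -0.17])
print(label, round(confidence, 3))
```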

+## DeepSparse Pipelines

+Pipeline is the default interface for interacting with DeepSparse.

+

+Like Hugging Face Pipelines, DeepSparse Pipelines wrap pre- and post-processing around the inference performed by the Engine. This creates a clean API that allows you to pass raw text and images to DeepSparse and receive the post-processed predictions, making it easy to add DeepSparse to your application.

+

+Let's start by downloading a sample image:

+```bash

+wget https://huggingface.co/spaces/neuralmagic/image-classification/resolve/main/lion.jpeg

+```

+

+We will use the `Pipeline.create()` constructor to create an instance of an image classification Pipeline with a 95% pruned-quantized version of ResNet-50. We can then pass images to the Pipeline and receive the predictions. All the pre-processing (such as resizing the images and normalizing the inputs) is handled by the `Pipeline`.
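The Pipeline does this pre-processing for you. As a rough illustration of what "normalizing the inputs" usually means for ImageNet models, the sketch below applies the conventional per-channel ImageNet mean and standard deviation to a single RGB pixel. The constants used are the commonly published ImageNet values (mean 0.485, 0.456, 0.406; std 0.229, 0.224, 0.225). This page does not state the exact constants or the resize/crop steps DeepSparse uses, so treat them as an assumption.

```python
from typing import List, Sequence

# Conventional ImageNet statistics (RGB order, for pixel values scaled to [0, 1]).
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def normalize_pixel(rgb: Sequence[int]) -> List[float]:
    """Scale an 8-bit RGB pixel to [0, 1], then standardize each channel."""
    return [
        (value / 255.0 - mean) / std
        for value, mean, std in zip(rgb, IMAGENET_MEAN, IMAGENET_STD)
    ]


# A pixel close to the dataset mean maps to values near zero.
print(normalize_pixel((124, 116, 104)))
```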

+

+Passing the image as a JPEG to the Pipeline:

+

+```python

+from deepsparse import Pipeline

+

+# download onnx from sparsezoo and compile with batch size 1

+sparsezoo_stub = "zoo:cv/classification/resnet_v1-50/pytorch/sparseml/imagenet/pruned95_quant-none"

+pipeline = Pipeline.create(

+ task="image_classification",

+ model_path=sparsezoo_stub, # sparsezoo stub or path to local ONNX

+)

+

+# run inference on image file

+prediction = pipeline(images=["lion.jpeg"])

+print(prediction.labels)

+# [291] << class index of "lion" in imagenet

+```

+

+Passing the image as a numpy array to the Pipeline:

+

+```python

+from deepsparse import Pipeline

+from PIL import Image

+import numpy as np

+

+# download onnx from sparsezoo and compile with batch size 1

+sparsezoo_stub = "zoo:cv/classification/resnet_v1-50/pytorch/sparseml/imagenet/pruned95_quant-none"

+pipeline = Pipeline.create(

+ task="image_classification",

+ model_path=sparsezoo_stub, # sparsezoo stub or path to local ONNX

+)

+

+im = np.array(Image.open("lion.jpeg"))

+

+# run inference on the numpy array

+prediction = pipeline(images=[im])

+print(prediction.labels)

+

+# [291] << class index of "lion" in imagenet

+```

+

+### Use Case Specific Arguments

+The Image Classification Pipeline contains additional arguments for configuring a `Pipeline`.

+

+#### Top K

+

+The `top_k` argument specifies the number of classes to return in the prediction.

+

+```python

+from deepsparse import Pipeline

+

+sparsezoo_stub = "zoo:cv/classification/resnet_v1-50/pytorch/sparseml/imagenet/pruned95_quant-none"

+pipeline = Pipeline.create(

+ task="image_classification",

+ model_path=sparsezoo_stub, # sparsezoo stub or path to local ONNX

+ top_k=3,

+)

+

+# run inference on image file

+prediction = pipeline(images="lion.jpeg")

+print(prediction.labels)

+# labels=[291, 260, 244]

+```
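Conceptually, `top_k` keeps the k highest-scoring classes, ordered from best to worst. The sketch below shows that selection step on a short list of made-up scores using `heapq.nlargest`. It illustrates the idea only and is not DeepSparse's own implementation.

```python
import heapq
from typing import List, Sequence


def top_k_labels(scores: Sequence[float], k: int) -> List[int]:
    """Return the indices of the k highest scores, best first."""
    return heapq.nlargest(k, range(len(scores)), key=scores.__getitem__)


scores = [0.1, 0.7, 0.05, 0.9, 0.3]
print(top_k_labels(scores, 3))  # [3, 1, 4]
```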

+#### Class Names

+

+The `class_names` argument defines a dictionary containing the desired class mappings.
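In essence, such a mapping turns the predicted class indices into readable names. The sketch below does this with a three-entry excerpt of the ImageNet mapping, using the labels from the `top_k` example. The helper `labels_to_names` and its fallback to the bare index are hypothetical choices made for illustration; they are not the Pipeline's behavior.

```python
from typing import Dict, List, Sequence

# Three entries excerpted from the ImageNet index-to-name mapping.
classes: Dict[int, str] = {
    244: "Tibetan mastiff",
    260: "chow, chow chow",
    291: "lion, king of beasts, Panthera leo",
}


def labels_to_names(labels: Sequence[int], mapping: Dict[int, str]) -> List[str]:
    """Translate class indices into names, falling back to the index as a string."""
    return [mapping.get(label, str(label)) for label in labels]


print(labels_to_names([291, 260, 244], classes))
```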

+

+```python

+from deepsparse import Pipeline

+

+classes = {0: 'tench, Tinca tinca',1: 'goldfish, Carassius auratus',2: 'great white shark, white shark, man-eater, man-eating shark, Carcharodon carcharias',3: 'tiger shark, Galeocerdo cuvieri',4: 'hammerhead, hammerhead shark',5: 'electric ray, crampfish, numbfish, torpedo',6: 'stingray',7: 'cock', 8: 'hen', 9: 'ostrich, Struthio camelus', 10: 'brambling, Fringilla montifringilla', 11: 'goldfinch, Carduelis carduelis', 12: 'house finch, linnet, Carpodacus mexicanus', 13: 'junco, snowbird', 14: 'indigo bunting, indigo finch, indigo bird, Passerina cyanea', 15: 'robin, American robin, Turdus migratorius', 16: 'bulbul', 17: 'jay', 18: 'magpie', 19: 'chickadee', 20: 'water ouzel, dipper', 21: 'kite', 22: 'bald eagle, American eagle, Haliaeetus leucocephalus', 23: 'vulture', 24: 'great grey owl, great gray owl, Strix nebulosa', 25: 'European fire salamander, Salamandra salamandra', 26: 'common newt, Triturus vulgaris', 27: 'eft', 28: 'spotted salamander, Ambystoma maculatum', 29: 'axolotl, mud puppy, Ambystoma mexicanum', 30: 'bullfrog, Rana catesbeiana', 31: 'tree frog, tree-frog', 32: 'tailed frog, bell toad, ribbed toad, tailed toad, Ascaphus trui', 33: 'loggerhead, loggerhead turtle, Caretta caretta', 34: 'leatherback turtle, leatherback, leathery turtle, Dermochelys coriacea', 35: 'mud turtle', 36: 'terrapin', 37: 'box turtle, box tortoise', 38: 'banded gecko', 39: 'common iguana, iguana, Iguana iguana', 40: 'American chameleon, anole, Anolis carolinensis', 41: 'whiptail, whiptail lizard', 42: 'agama', 43: 'frilled lizard, Chlamydosaurus kingi', 44: 'alligator lizard', 45: 'Gila monster, Heloderma suspectum', 46: 'green lizard, Lacerta viridis', 47: 'African chameleon, Chamaeleo chamaeleon', 48: 'Komodo dragon, Komodo lizard, dragon lizard, giant lizard, Varanus komodoensis', 49: 'African crocodile, Nile crocodile, Crocodylus niloticus', 50: 'American alligator, Alligator mississipiensis', 51: 'triceratops', 52: 'thunder snake, worm snake, Carphophis amoenus', 
53: 'ringneck snake, ring-necked snake, ring snake', 54: 'hognose snake, puff adder, sand viper', 55: 'green snake, grass snake', 56: 'king snake, kingsnake', 57: 'garter snake, grass snake', 58: 'water snake', 59: 'vine snake', 60: 'night snake, Hypsiglena torquata', 61: 'boa constrictor, Constrictor constrictor', 62: 'rock python, rock snake, Python sebae', 63: 'Indian cobra, Naja naja', 64: 'green mamba', 65: 'sea snake', 66: 'horned viper, cerastes, sand viper, horned asp, Cerastes cornutus', 67: 'diamondback, diamondback rattlesnake, Crotalus adamanteus', 68: 'sidewinder, horned rattlesnake, Crotalus cerastes', 69: 'trilobite', 70: 'harvestman, daddy longlegs, Phalangium opilio', 71: 'scorpion', 72: 'black and gold garden spider, Argiope aurantia', 73: 'barn spider, Araneus cavaticus', 74: 'garden spider, Aranea diademata', 75: 'black widow, Latrodectus mactans', 76: 'tarantula', 77: 'wolf spider, hunting spider', 78: 'tick', 79: 'centipede', 80: 'black grouse', 81: 'ptarmigan', 82: 'ruffed grouse, partridge, Bonasa umbellus', 83: 'prairie chicken, prairie grouse, prairie fowl', 84: 'peacock', 85: 'quail', 86: 'partridge', 87: 'African grey, African gray, Psittacus erithacus', 88: 'macaw', 89: 'sulphur-crested cockatoo, Kakatoe galerita, Cacatua galerita', 90: 'lorikeet', 91: 'coucal', 92: 'bee eater', 93: 'hornbill', 94: 'hummingbird', 95: 'jacamar', 96: 'toucan', 97: 'drake', 98: 'red-breasted merganser, Mergus serrator', 99: 'goose', 100: 'black swan, Cygnus atratus', 101: 'tusker', 102: 'echidna, spiny anteater, anteater', 103: 'platypus, duckbill, duckbilled platypus, duck-billed platypus, Ornithorhynchus anatinus', 104: 'wallaby, brush kangaroo', 105: 'koala, koala bear, kangaroo bear, native bear, Phascolarctos cinereus', 106: 'wombat', 107: 'jellyfish', 108: 'sea anemone, anemone', 109: 'brain coral', 110: 'flatworm, platyhelminth', 111: 'nematode, nematode worm, roundworm', 112: 'conch', 113: 'snail', 114: 'slug', 115: 'sea slug, nudibranch', 116: 
'chiton, coat-of-mail shell, sea cradle, polyplacophore', 117: 'chambered nautilus, pearly nautilus, nautilus', 118: 'Dungeness crab, Cancer magister', 119: 'rock crab, Cancer irroratus', 120: 'fiddler crab', 121: 'king crab, Alaska crab, Alaskan king crab, Alaska king crab, Paralithodes camtschatica', 122: 'American lobster, Northern lobster, Maine lobster, Homarus americanus', 123: 'spiny lobster, langouste, rock lobster, crawfish, crayfish, sea crawfish', 124: 'crayfish, crawfish, crawdad, crawdaddy', 125: 'hermit crab', 126: 'isopod', 127: 'white stork, Ciconia ciconia', 128: 'black stork, Ciconia nigra', 129: 'spoonbill', 130: 'flamingo', 131: 'little blue heron, Egretta caerulea', 132: 'American egret, great white heron, Egretta albus', 133: 'bittern', 134: 'crane', 135: 'limpkin, Aramus pictus', 136: 'European gallinule, Porphyrio porphyrio', 137: 'American coot, marsh hen, mud hen, water hen, Fulica americana', 138: 'bustard', 139: 'ruddy turnstone, Arenaria interpres', 140: 'red-backed sandpiper, dunlin, Erolia alpina', 141: 'redshank, Tringa totanus', 142: 'dowitcher', 143: 'oystercatcher, oyster catcher', 144: 'pelican', 145: 'king penguin, Aptenodytes patagonica', 146: 'albatross, mollymawk', 147: 'grey whale, gray whale, devilfish, Eschrichtius gibbosus, Eschrichtius robustus', 148: 'killer whale, killer, orca, grampus, sea wolf, Orcinus orca', 149: 'dugong, Dugong dugon', 150: 'sea lion', 151: 'Chihuahua', 152: 'Japanese spaniel', 153: 'Maltese dog, Maltese terrier, Maltese', 154: 'Pekinese, Pekingese, Peke', 155: 'Shih-Tzu', 156: 'Blenheim spaniel', 157: 'papillon', 158: 'toy terrier', 159: 'Rhodesian ridgeback', 160: 'Afghan hound, Afghan', 161: 'basset, basset hound', 162: 'beagle', 163: 'bloodhound, sleuthhound', 164: 'bluetick', 165: 'black-and-tan coonhound', 166: 'Walker hound, Walker foxhound', 167: 'English foxhound', 168: 'redbone', 169: 'borzoi, Russian wolfhound', 170: 'Irish wolfhound', 171: 'Italian greyhound', 172: 'whippet', 173: 
'Ibizan hound, Ibizan Podenco', 174: 'Norwegian elkhound, elkhound', 175: 'otterhound, otter hound', 176: 'Saluki, gazelle hound', 177: 'Scottish deerhound, deerhound', 178: 'Weimaraner', 179: 'Staffordshire bullterrier, Staffordshire bull terrier', 180: 'American Staffordshire terrier, Staffordshire terrier, American pit bull terrier, pit bull terrier', 181: 'Bedlington terrier', 182: 'Border terrier', 183: 'Kerry blue terrier', 184: 'Irish terrier', 185: 'Norfolk terrier', 186: 'Norwich terrier', 187: 'Yorkshire terrier', 188: 'wire-haired fox terrier', 189: 'Lakeland terrier', 190: 'Sealyham terrier, Sealyham', 191: 'Airedale, Airedale terrier', 192: 'cairn, cairn terrier', 193: 'Australian terrier', 194: 'Dandie Dinmont, Dandie Dinmont terrier', 195: 'Boston bull, Boston terrier', 196: 'miniature schnauzer', 197: 'giant schnauzer', 198: 'standard schnauzer', 199: 'Scotch terrier, Scottish terrier, Scottie', 200: 'Tibetan terrier, chrysanthemum dog', 201: 'silky terrier, Sydney silky', 202: 'soft-coated wheaten terrier', 203: 'West Highland white terrier', 204: 'Lhasa, Lhasa apso', 205: 'flat-coated retriever', 206: 'curly-coated retriever', 207: 'golden retriever', 208: 'Labrador retriever', 209: 'Chesapeake Bay retriever', 210: 'German short-haired pointer', 211: 'vizsla, Hungarian pointer', 212: 'English setter', 213: 'Irish setter, red setter', 214: 'Gordon setter', 215: 'Brittany spaniel', 216: 'clumber, clumber spaniel', 217: 'English springer, English springer spaniel', 218: 'Welsh springer spaniel', 219: 'cocker spaniel, English cocker spaniel, cocker', 220: 'Sussex spaniel', 221: 'Irish water spaniel', 222: 'kuvasz', 223: 'schipperke', 224: 'groenendael', 225: 'malinois', 226: 'briard', 227: 'kelpie', 228: 'komondor', 229: 'Old English sheepdog, bobtail', 230: 'Shetland sheepdog, Shetland sheep dog, Shetland', 231: 'collie', 232: 'Border collie', 233: 'Bouvier des Flandres, Bouviers des Flandres', 234: 'Rottweiler', 
235: 'German shepherd dog, German police dog, alsatian', 236: 'Doberman, Doberman pinscher', 237: 'miniature pinscher', 238: 'Greater Swiss Mountain dog', 239: 'Bernese mountain dog', 240: 'Appenzeller', 241: 'EntleBucher', 242: 'boxer', 243: 'bull mastiff', 244: 'Tibetan mastiff', 245: 'French bulldog', 246: 'Great Dane', 247: 'Saint Bernard, St Bernard', 248: 'Eskimo dog, husky', 249: 'malamute, malemute, Alaskan malamute', 250: 'Siberian husky', 251: 'dalmatian, coach dog, carriage dog', 252: 'affenpinscher, monkey pinscher, monkey dog', 253: 'basenji', 254: 'pug, pug-dog', 255: 'Leonberg', 256: 'Newfoundland, Newfoundland dog', 257: 'Great Pyrenees', 258: 'Samoyed, Samoyede', 259: 'Pomeranian', 260: 'chow, chow chow', 261: 'keeshond', 262: 'Brabancon griffon', 263: 'Pembroke, Pembroke Welsh corgi', 264: 'Cardigan, Cardigan Welsh corgi', 265: 'toy poodle', 266: 'miniature poodle', 267: 'standard poodle', 268: 'Mexican hairless', 269: 'timber wolf, grey wolf, gray wolf, Canis lupus', 270: 'white wolf, Arctic wolf, Canis lupus tundrarum', 271: 'red wolf, maned wolf, Canis rufus, Canis niger', 272: 'coyote, prairie wolf, brush wolf, Canis latrans', 273: 'dingo, warrigal, warragal, Canis dingo', 274: 'dhole, Cuon alpinus', 275: 'African hunting dog, hyena dog, Cape hunting dog, Lycaon pictus', 276: 'hyena, hyaena', 277: 'red fox, Vulpes vulpes', 278: 'kit fox, Vulpes macrotis', 279: 'Arctic fox, white fox, Alopex lagopus', 280: 'grey fox, gray fox, Urocyon cinereoargenteus', 281: 'tabby, tabby cat', 282: 'tiger cat', 283: 'Persian cat', 284: 'Siamese cat, Siamese', 285: 'Egyptian cat', 286: 'cougar, puma, catamount, mountain lion, painter, panther, Felis concolor', 287: 'lynx, catamount', 288: 'leopard, Panthera pardus', 289: 'snow leopard, ounce, Panthera uncia', 290: 'jaguar, panther, Panthera onca, Felis onca', 291: 'lion, king of beasts, Panthera leo', 292: 'tiger, Panthera tigris', 293: 'cheetah, chetah, Acinonyx jubatus', 294: 'brown bear, bruin, Ursus arctos', 295: 
'American black bear, black bear, Ursus americanus, Euarctos americanus', 296: 'ice bear, polar bear, Ursus Maritimus, Thalarctos maritimus', 297: 'sloth bear, Melursus ursinus, Ursus ursinus', 298: 'mongoose', 299: 'meerkat, mierkat', 300: 'tiger beetle', 301: 'ladybug, ladybeetle, lady beetle, ladybird, ladybird beetle', 302: 'ground beetle, carabid beetle', 303: 'long-horned beetle, longicorn, longicorn beetle', 304: 'leaf beetle, chrysomelid', 305: 'dung beetle', 306: 'rhinoceros beetle', 307: 'weevil', 308: 'fly', 309: 'bee', 310: 'ant, emmet, pismire', 311: 'grasshopper, hopper', 312: 'cricket', 313: 'walking stick, walkingstick, stick insect', 314: 'cockroach, roach', 315: 'mantis, mantid', 316: 'cicada, cicala', 317: 'leafhopper', 318: 'lacewing, lacewing fly', 319: "dragonfly, darning needle, devil's darning needle, sewing needle, snake feeder, snake doctor, mosquito hawk, skeeter hawk", 320: 'damselfly', 321: 'admiral', 322: 'ringlet, ringlet butterfly', 323: 'monarch, monarch butterfly, milkweed butterfly, Danaus plexippus', 324: 'cabbage butterfly', 325: 'sulphur butterfly, sulfur butterfly', 326: 'lycaenid, lycaenid butterfly', 327: 'starfish, sea star', 328: 'sea urchin', 329: 'sea cucumber, holothurian', 330: 'wood rabbit, cottontail, cottontail rabbit', 331: 'hare', 332: 'Angora, Angora rabbit', 333: 'hamster', 334: 'porcupine, hedgehog', 335: 'fox squirrel, eastern fox squirrel, Sciurus niger', 336: 'marmot', 337: 'beaver', 338: 'guinea pig, Cavia cobaya', 339: 'sorrel', 340: 'zebra', 341: 'hog, pig, grunter, squealer, Sus scrofa', 342: 'wild boar, boar, Sus scrofa', 343: 'warthog', 344: 'hippopotamus, hippo, river horse, Hippopotamus amphibius', 345: 'ox', 346: 'water buffalo, water ox, Asiatic buffalo, Bubalus bubalis', 347: 'bison', 348: 'ram, tup', 349: 'bighorn, bighorn sheep, cimarron, Rocky Mountain bighorn, Rocky Mountain sheep, Ovis canadensis', 350: 'ibex, Capra ibex', 351: 'hartebeest', 352: 'impala, Aepyceros melampus', 353: 'gazelle', 
354: 'Arabian camel, dromedary, Camelus dromedarius', 355: 'llama', 356: 'weasel', 357: 'mink', 358: 'polecat, fitch, foulmart, foumart, Mustela putorius', 359: 'black-footed ferret, ferret, Mustela nigripes', 360: 'otter', 361: 'skunk, polecat, wood pussy', 362: 'badger', 363: 'armadillo', 364: 'three-toed sloth, ai, Bradypus tridactylus', 365: 'orangutan, orang, orangutang, Pongo pygmaeus', 366: 'gorilla, Gorilla gorilla', 367: 'chimpanzee, chimp, Pan troglodytes', 368: 'gibbon, Hylobates lar', 369: 'siamang, Hylobates syndactylus, Symphalangus syndactylus', 370: 'guenon, guenon monkey', 371: 'patas, hussar monkey, Erythrocebus patas', 372: 'baboon', 373: 'macaque', 374: 'langur', 375: 'colobus, colobus monkey', 376: 'proboscis monkey, Nasalis larvatus', 377: 'marmoset', 378: 'capuchin, ringtail, Cebus capucinus', 379: 'howler monkey, howler', 380: 'titi, titi monkey', 381: 'spider monkey, Ateles geoffroyi', 382: 'squirrel monkey, Saimiri sciureus', 383: 'Madagascar cat, ring-tailed lemur, Lemur catta', 384: 'indri, indris, Indri indri, Indri brevicaudatus', 385: 'Indian elephant, Elephas maximus', 386: 'African elephant, Loxodonta africana', 387: 'lesser panda, red panda, panda, bear cat, cat bear, Ailurus fulgens', 388: 'giant panda, panda, panda bear, coon bear, Ailuropoda melanoleuca', 389: 'barracouta, snoek', 390: 'eel', 391: 'coho, cohoe, coho salmon, blue jack, silver salmon, Oncorhynchus kisutch', 392: 'rock beauty, Holocanthus tricolor', 393: 'anemone fish', 394: 'sturgeon', 395: 'gar, garfish, garpike, billfish, Lepisosteus osseus', 396: 'lionfish', 397: 'puffer, pufferfish, blowfish, globefish', 398: 'abacus', 399: 'abaya', 400: "academic gown, academic robe, judge's robe", 401: 'accordion, piano accordion, squeeze box', 402: 'acoustic guitar', 403: 'aircraft carrier, carrier, flattop, attack aircraft carrier', 404: 'airliner', 405: 'airship, dirigible', 406: 'altar', 407: 'ambulance', 408: 'amphibian, amphibious vehicle', 409: 'analog clock', 410: 
'apiary, bee house', 411: 'apron', 412: 'ashcan, trash can, garbage can, wastebin, ash bin, ash-bin, ashbin, dustbin, trash barrel, trash bin', 413: 'assault rifle, assault gun', 414: 'backpack, back pack, knapsack, packsack, rucksack, haversack', 415: 'bakery, bakeshop, bakehouse', 416: 'balance beam, beam', 417: 'balloon', 418: 'ballpoint, ballpoint pen, ballpen, Biro', 419: 'Band Aid', 420: 'banjo', 421: 'bannister, banister, balustrade, balusters, handrail', 422: 'barbell', 423: 'barber chair', 424: 'barbershop', 425: 'barn', 426: 'barometer', 427: 'barrel, cask', 428: 'barrow, garden cart, lawn cart, wheelbarrow', 429: 'baseball', 430: 'basketball', 431: 'bassinet', 432: 'bassoon', 433: 'bathing cap, swimming cap', 434: 'bath towel', 435: 'bathtub, bathing tub, bath, tub', 436: 'beach wagon, station wagon, wagon, estate car, beach waggon, station waggon, waggon', 437: 'beacon, lighthouse, beacon light, pharos', 438: 'beaker', 439: 'bearskin, busby, shako', 440: 'beer bottle', 441: 'beer glass', 442: 'bell cote, bell cot', 443: 'bib', 444: 'bicycle-built-for-two, tandem bicycle, tandem', 445: 'bikini, two-piece', 446: 'binder, ring-binder', 447: 'binoculars, field glasses, opera glasses', 448: 'birdhouse', 449: 'boathouse', 450: 'bobsled, bobsleigh, bob', 451: 'bolo tie, bolo, bola tie, bola', 452: 'bonnet, poke bonnet', 453: 'bookcase', 454: 'bookshop, bookstore, bookstall', 455: 'bottlecap', 456: 'bow', 457: 'bow tie, bow-tie, bowtie', 458: 'brass, memorial tablet, plaque', 459: 'brassiere, bra, bandeau', 460: 'breakwater, groin, groyne, mole, bulwark, seawall, jetty', 461: 'breastplate, aegis, egis', 462: 'broom', 463: 'bucket, pail', 464: 'buckle', 465: 'bulletproof vest', 466: 'bullet train, bullet', 467: 'butcher shop, meat market', 468: 'cab, hack, taxi, taxicab', 469: 'caldron, cauldron', 470: 'candle, taper, wax light', 471: 'cannon', 472: 'canoe', 473: 'can opener, tin opener', 474: 'cardigan', 475: 'car mirror', 
476: 'carousel, carrousel, merry-go-round, roundabout, whirligig', 477: "carpenter's kit, tool kit", 478: 'carton', 479: 'car wheel', 480: 'cash machine, cash dispenser, automated teller machine, automatic teller machine, automated teller, automatic teller, ATM', 481: 'cassette', 482: 'cassette player', 483: 'castle', 484: 'catamaran', 485: 'CD player', 486: 'cello, violoncello', 487: 'cellular telephone, cellular phone, cellphone, cell, mobile phone', 488: 'chain', 489: 'chainlink fence', 490: 'chain mail, ring mail, mail, chain armor, chain armour, ring armor, ring armour', 491: 'chain saw, chainsaw', 492: 'chest', 493: 'chiffonier, commode', 494: 'chime, bell, gong', 495: 'china cabinet, china closet', 496: 'Christmas stocking', 497: 'church, church building', 498: 'cinema, movie theater, movie theatre, movie house, picture palace', 499: 'cleaver, meat cleaver, chopper', 500: 'cliff dwelling', 501: 'cloak', 502: 'clog, geta, patten, sabot', 503: 'cocktail shaker', 504: 'coffee mug', 505: 'coffeepot', 506: 'coil, spiral, volute, whorl, helix', 507: 'combination lock', 508: 'computer keyboard, keypad', 509: 'confectionery, confectionary, candy store', 510: 'container ship, containership, container vessel', 511: 'convertible', 512: 'corkscrew, bottle screw', 513: 'cornet, horn, trumpet, trump', 514: 'cowboy boot', 515: 'cowboy hat, ten-gallon hat', 516: 'cradle', 517: 'crane', 518: 'crash helmet', 519: 'crate', 520: 'crib, cot', 521: 'Crock Pot', 522: 'croquet ball', 523: 'crutch', 524: 'cuirass', 525: 'dam, dike, dyke', 526: 'desk', 527: 'desktop computer', 528: 'dial telephone, dial phone', 529: 'diaper, nappy, napkin', 530: 'digital clock', 531: 'digital watch', 532: 'dining table, board', 533: 'dishrag, dishcloth', 534: 'dishwasher, dish washer, dishwashing machine', 535: 'disk brake, disc brake', 536: 'dock, dockage, docking facility', 537: 'dogsled, dog sled, dog sleigh', 538: 'dome', 539: 'doormat, welcome mat', 540: 'drilling platform, offshore rig', 
541: 'drum, membranophone, tympan', 542: 'drumstick', 543: 'dumbbell', 544: 'Dutch oven', 545: 'electric fan, blower', 546: 'electric guitar', 547: 'electric locomotive', 548: 'entertainment center', 549: 'envelope', 550: 'espresso maker', 551: 'face powder', 552: 'feather boa, boa', 553: 'file, file cabinet, filing cabinet', 554: 'fireboat', 555: 'fire engine, fire truck', 556: 'fire screen, fireguard', 557: 'flagpole, flagstaff', 558: 'flute, transverse flute', 559: 'folding chair', 560: 'football helmet', 561: 'forklift', 562: 'fountain', 563: 'fountain pen', 564: 'four-poster', 565: 'freight car', 566: 'French horn, horn', 567: 'frying pan, frypan, skillet', 568: 'fur coat', 569: 'garbage truck, dustcart', 570: 'gasmask, respirator, gas helmet', 571: 'gas pump, gasoline pump, petrol pump, island dispenser', 572: 'goblet', 573: 'go-kart', 574: 'golf ball', 575: 'golfcart, golf cart', 576: 'gondola', 577: 'gong, tam-tam', 578: 'gown', 579: 'grand piano, grand', 580: 'greenhouse, nursery, glasshouse', 581: 'grille, radiator grille', 582: 'grocery store, grocery, food market, market', 583: 'guillotine', 584: 'hair slide', 585: 'hair spray', 586: 'half track', 587: 'hammer', 588: 'hamper', 589: 'hand blower, blow dryer, blow drier, hair dryer, hair drier', 590: 'hand-held computer, hand-held microcomputer', 591: 'handkerchief, hankie, hanky, hankey', 592: 'hard disc, hard disk, fixed disk', 593: 'harmonica, mouth organ, harp, mouth harp', 594: 'harp', 595: 'harvester, reaper', 596: 'hatchet', 597: 'holster', 598: 'home theater, home theatre', 599: 'honeycomb', 600: 'hook, claw', 601: 'hoopskirt, crinoline', 602: 'horizontal bar, high bar', 603: 'horse cart, horse-cart', 604: 'hourglass', 605: 'iPod', 606: 'iron, smoothing iron', 607: "jack-o'-lantern", 608: 'jean, blue jean, denim', 609: 'jeep, landrover', 610: 'jersey, T-shirt, tee shirt', 611: 'jigsaw puzzle', 612: 'jinrikisha, ricksha, rickshaw', 613: 'joystick', 614: 'kimono', 615: 'knee pad', 616: 'knot', 
617: 'lab coat, laboratory coat', 618: 'ladle', 619: 'lampshade, lamp shade', 620: 'laptop, laptop computer', 621: 'lawn mower, mower', 622: 'lens cap, lens cover', 623: 'letter opener, paper knife, paperknife', 624: 'library', 625: 'lifeboat', 626: 'lighter, light, igniter, ignitor', 627: 'limousine, limo', 628: 'liner, ocean liner', 629: 'lipstick, lip rouge', 630: 'Loafer', 631: 'lotion', 632: 'loudspeaker, speaker, speaker unit, loudspeaker system, speaker system', 633: "loupe, jeweler's loupe", 634: 'lumbermill, sawmill', 635: 'magnetic compass', 636: 'mailbag, postbag', 637: 'mailbox, letter box', 638: 'maillot', 639: 'maillot, tank suit', 640: 'manhole cover', 641: 'maraca', 642: 'marimba, xylophone', 643: 'mask', 644: 'matchstick', 645: 'maypole', 646: 'maze, labyrinth', 647: 'measuring cup', 648: 'medicine chest, medicine cabinet', 649: 'megalith, megalithic structure', 650: 'microphone, mike', 651: 'microwave, microwave oven', 652: 'military uniform', 653: 'milk can', 654: 'minibus', 655: 'miniskirt, mini', 656: 'minivan', 657: 'missile', 658: 'mitten', 659: 'mixing bowl', 660: 'mobile home, manufactured home', 661: 'Model T', 662: 'modem', 663: 'monastery', 664: 'monitor', 665: 'moped', 666: 'mortar', 667: 'mortarboard', 668: 'mosque', 669: 'mosquito net', 670: 'motor scooter, scooter', 671: 'mountain bike, all-terrain bike, off-roader', 672: 'mountain tent', 673: 'mouse, computer mouse', 674: 'mousetrap', 675: 'moving van', 676: 'muzzle', 677: 'nail', 678: 'neck brace', 679: 'necklace', 680: 'nipple', 681: 'notebook, notebook computer', 682: 'obelisk', 683: 'oboe, hautboy, hautbois', 684: 'ocarina, sweet potato', 685: 'odometer, hodometer, mileometer, milometer', 686: 'oil filter', 687: 'organ, pipe organ', 688: 'oscilloscope, scope, cathode-ray oscilloscope, CRO', 689: 'overskirt', 690: 'oxcart', 691: 'oxygen mask', 692: 'packet', 693: 'paddle, boat paddle', 694: 'paddlewheel, paddle wheel', 695: 'padlock', 696: 'paintbrush', 697: "pajama, pyjama, pj's, jammies", 698: 'palace', 
699: 'panpipe, pandean pipe, syrinx', 700: 'paper towel', 701: 'parachute, chute', 702: 'parallel bars, bars', 703: 'park bench', 704: 'parking meter', 705: 'passenger car, coach, carriage', 706: 'patio, terrace', 707: 'pay-phone, pay-station', 708: 'pedestal, plinth, footstall', 709: 'pencil box, pencil case', 710: 'pencil sharpener', 711: 'perfume, essence', 712: 'Petri dish', 713: 'photocopier', 714: 'pick, plectrum, plectron', 715: 'pickelhaube', 716: 'picket fence, paling', 717: 'pickup, pickup truck', 718: 'pier', 719: 'piggy bank, penny bank', 720: 'pill bottle', 721: 'pillow', 722: 'ping-pong ball', 723: 'pinwheel', 724: 'pirate, pirate ship', 725: 'pitcher, ewer', 726: "plane, carpenter's plane, woodworking plane", 727: 'planetarium', 728: 'plastic bag', 729: 'plate rack', 730: 'plow, plough', 731: "plunger, plumber's helper", 732: 'Polaroid camera, Polaroid Land camera', 733: 'pole', 734: 'police van, police wagon, paddy wagon, patrol wagon, wagon, black Maria', 735: 'poncho', 736: 'pool table, billiard table, snooker table', 737: 'pop bottle, soda bottle', 738: 'pot, flowerpot', 739: "potter's wheel", 740: 'power drill', 741: 'prayer rug, prayer mat', 742: 'printer', 743: 'prison, prison house', 744: 'projectile, missile', 745: 'projector', 746: 'puck, hockey puck', 747: 'punching bag, punch bag, punching ball, punchball', 748: 'purse', 749: 'quill, quill pen', 750: 'quilt, comforter, comfort, puff', 751: 'racer, race car, racing car', 752: 'racket, racquet', 753: 'radiator', 754: 'radio, wireless', 755: 'radio telescope, radio reflector', 756: 'rain barrel', 757: 'recreational vehicle, RV, R.V.', 758: 'reel', 759: 'reflex camera', 760: 'refrigerator, icebox', 761: 'remote control, remote', 762: 'restaurant, eating house, eating place, eatery', 763: 'revolver, six-gun, six-shooter', 764: 'rifle', 765: 'rocking chair, rocker', 766: 'rotisserie', 767: 'rubber eraser, rubber, pencil eraser', 768: 'rugby ball', 769: 'rule, ruler', 770: 'running shoe', 771: 
'safe', 772: 'safety pin', 773: 'saltshaker, salt shaker', 774: 'sandal', 775: 'sarong', 776: 'sax, saxophone', 777: 'scabbard', 778: 'scale, weighing machine', 779: 'school bus', 780: 'schooner', 781: 'scoreboard', 782: 'screen, CRT screen', 783: 'screw', 784: 'screwdriver', 785: 'seat belt, seatbelt', 786: 'sewing machine', 787: 'shield, buckler', 788: 'shoe shop, shoe-shop, shoe store', 789: 'shoji', 790: 'shopping basket', 791: 'shopping cart', 792: 'shovel', 793: 'shower cap', 794: 'shower curtain', 795: 'ski', 796: 'ski mask', 797: 'sleeping bag', 798: 'slide rule, slipstick', 799: 'sliding door', 800: 'slot, one-armed bandit', 801: 'snorkel', 802: 'snowmobile', 803: 'snowplow, snowplough', 804: 'soap dispenser', 805: 'soccer ball', 806: 'sock', 807: 'solar dish, solar collector, solar furnace', 808: 'sombrero', 809: 'soup bowl', 810: 'space bar', 811: 'space heater', 812: 'space shuttle', 813: 'spatula', 814: 'speedboat', 815: "spider web, spider's web", 816: 'spindle', 817: 'sports car, sport car', 818: 'spotlight, spot', 819: 'stage', 820: 'steam locomotive', 821: 'steel arch bridge', 822: 'steel drum', 823: 'stethoscope', 824: 'stole', 825: 'stone wall', 826: 'stopwatch, stop watch', 827: 'stove', 828: 'strainer', 829: 'streetcar, tram, tramcar, trolley, trolley car', 830: 'stretcher', 831: 'studio couch, day bed', 832: 'stupa, tope', 833: 'submarine, pigboat, sub, U-boat', 834: 'suit, suit of clothes', 835: 'sundial', 836: 'sunglass', 837: 'sunglasses, dark glasses, shades', 838: 'sunscreen, sunblock, sun blocker', 839: 'suspension bridge', 840: 'swab, swob, mop', 841: 'sweatshirt', 842: 'swimming trunks, bathing trunks', 843: 'swing', 844: 'switch, electric switch, electrical switch', 845: 'syringe', 846: 'table lamp', 847: 'tank, army tank, armored combat vehicle, armoured combat vehicle', 848: 'tape player', 849: 'teapot', 850: 'teddy, teddy bear', 851: 'television, television system', 852: 'tennis ball', 853: 'thatch, thatched roof', 
854: 'theater curtain, theatre curtain', 855: 'thimble', 856: 'thresher, thrasher, threshing machine', 857: 'throne', 858: 'tile roof', 859: 'toaster', 860: 'tobacco shop, tobacconist shop, tobacconist', 861: 'toilet seat', 862: 'torch', 863: 'totem pole', 864: 'tow truck, tow car, wrecker', 865: 'toyshop', 866: 'tractor', 867: 'trailer truck, tractor trailer, trucking rig, rig, articulated lorry, semi', 868: 'tray', 869: 'trench coat', 870: 'tricycle, trike, velocipede', 871: 'trimaran', 872: 'tripod', 873: 'triumphal arch', 874: 'trolleybus, trolley coach, trackless trolley', 875: 'trombone', 876: 'tub, vat', 877: 'turnstile', 878: 'typewriter keyboard', 879: 'umbrella', 880: 'unicycle, monocycle', 881: 'upright, upright piano', 882: 'vacuum, vacuum cleaner', 883: 'vase', 884: 'vault', 885: 'velvet', 886: 'vending machine', 887: 'vestment', 888: 'viaduct', 889: 'violin, fiddle', 890: 'volleyball', 891: 'waffle iron', 892: 'wall clock', 893: 'wallet, billfold, notecase, pocketbook', 894: 'wardrobe, closet, press', 895: 'warplane, military plane', 896: 'washbasin, handbasin, washbowl, lavabo, wash-hand basin', 897: 'washer, automatic washer, washing machine', 898: 'water bottle', 899: 'water jug', 900: 'water tower', 901: 'whiskey jug', 902: 'whistle', 903: 'wig', 904: 'window screen', 905: 'window shade', 906: 'Windsor tie', 907: 'wine bottle', 908: 'wing', 909: 'wok', 910: 'wooden spoon', 911: 'wool, woolen, woollen', 912: 'worm fence, snake fence, snake-rail fence, Virginia fence', 913: 'wreck', 914: 'yawl', 915: 'yurt', 916: 'web site, website, internet site, site', 917: 'comic book', 918: 'crossword puzzle, crossword', 919: 'street sign', 920: 'traffic light, traffic signal, stoplight', 921: 'book jacket, dust cover, dust jacket, dust wrapper', 922: 'menu', 923: 'plate', 924: 'guacamole', 925: 'consomme', 926: 'hot pot, hotpot', 927: 'trifle', 928: 'ice cream, icecream', 929: 'ice lolly, lolly, lollipop, popsicle', 930: 'French loaf', 931: 'bagel, beigel', 932: 'pretzel', 
933: 'cheeseburger', 934: 'hotdog, hot dog, red hot', 935: 'mashed potato', 936: 'head cabbage', 937: 'broccoli', 938: 'cauliflower', 939: 'zucchini, courgette', 940: 'spaghetti squash', 941: 'acorn squash', 942: 'butternut squash', 943: 'cucumber, cuke', 944: 'artichoke, globe artichoke', 945: 'bell pepper', 946: 'cardoon', 947: 'mushroom', 948: 'Granny Smith', 949: 'strawberry', 950: 'orange', 951: 'lemon', 952: 'fig', 953: 'pineapple, ananas', 954: 'banana', 955: 'jackfruit, jak, jack', 956: 'custard apple', 957: 'pomegranate', 958: 'hay', 959: 'carbonara', 960: 'chocolate sauce, chocolate syrup', 961: 'dough', 962: 'meat loaf, meatloaf', 963: 'pizza, pizza pie', 964: 'potpie', 965: 'burrito', 966: 'red wine', 967: 'espresso', 968: 'cup', 969: 'eggnog', 970: 'alp', 971: 'bubble', 972: 'cliff, drop, drop-off', 973: 'coral reef', 974: 'geyser', 975: 'lakeside, lakeshore', 976: 'promontory, headland, head, foreland', 977: 'sandbar, sand bar', 978: 'seashore, coast, seacoast, sea-coast', 979: 'valley, vale', 980: 'volcano', 981: 'ballplayer, baseball player', 982: 'groom, bridegroom', 983: 'scuba diver', 984: 'rapeseed', 985: 'daisy', 986: "yellow lady's slipper, yellow lady-slipper, Cypripedium calceolus, Cypripedium parviflorum", 987: 'corn', 988: 'acorn', 989: 'hip, rose hip, rosehip', 990: 'buckeye, horse chestnut, conker', 991: 'coral fungus', 992: 'agaric', 993: 'gyromitra', 994: 'stinkhorn, carrion fungus', 995: 'earthstar', 996: 'hen-of-the-woods, hen of the woods, Polyporus frondosus, Grifola frondosa', 997: 'bolete', 998: 'ear, spike, capitulum', 999: 'toilet tissue, toilet paper, bathroom tissue'}

+

+# download onnx from sparsezoo and compile with batch size 1

+sparsezoo_stub = "zoo:cv/classification/resnet_v1-50/pytorch/sparseml/imagenet/pruned95_quant-none"

+pipeline = Pipeline.create(

+ task="image_classification",

+ model_path=sparsezoo_stub, # sparsezoo stub or path to local ONNX

+ class_names=classes,

+)

+

+# run inference on image file

+prediction = pipeline(images="lion.jpeg")

+print(prediction.labels)

+# ['lion, king of beasts, Panthera leo']

+```

+### Cross Use Case Functionality

+Check out the [Pipeline User Guide](../../user-guide/deepsparse-pipelines.md) for more details on configuring a Pipeline.

+

+## DeepSparse Server

+Built on the popular FastAPI and Uvicorn stack, DeepSparse Server enables you to set up a REST endpoint for serving inferences over HTTP. Since DeepSparse Server wraps the Pipeline API, it inherits all the utilities provided by Pipelines.

+

+The CLI command below launches an image classification pipeline with a 95% pruned ResNet model:

+

+```bash

+deepsparse.server \

+ --task image_classification \

+ --model_path zoo:cv/classification/resnet_v1-50/pytorch/sparseml/imagenet/pruned95_quant-none

+```

+You should see Uvicorn report that it is running on `http://0.0.0.0:5543`. Once launched, the server exposes a `/docs` path with full endpoint descriptions and support for making sample requests.

+

+Here is an example client request that uses the Python `requests` library to send the HTTP request:

+

+```python

+import requests

+

+url = 'http://0.0.0.0:5543/predict/from_files'

+path = ['lion.jpeg'] # local image files to classify

+files = [('request', open(img, 'rb')) for img in path]

+resp = requests.post(url=url, files=files)

+print(resp.text)

+# {"labels":[291],"scores":[24.185693740844727]}

+```
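No `class_names` were passed on the command line, so the sample response above contains the numeric ImageNet index (`291`) rather than a label string. The sketch below shows one way to map the JSON response back to names on the client side. It assumes a `classes` dict like the one used in the Pipeline example, truncated here to a single entry. `label_response` is a hypothetical helper, not part of DeepSparse.

```python
import json

# Hypothetical subset of the ImageNet index -> name mapping; the full `classes`
# dict from the Pipeline example has 1000 entries.
CLASSES = {291: "lion, king of beasts, Panthera leo"}


def label_response(body: str, classes: dict) -> list:
    """Pair each predicted index in a /predict response body with its name and score."""
    payload = json.loads(body)
    return [
        (classes.get(idx, f"<unknown class {idx}>"), score)
        for idx, score in zip(payload["labels"], payload["scores"])
    ]


# Sample body copied from the server response shown above.
print(label_response('{"labels":[291],"scores":[24.185693740844727]}', CLASSES))
```

Indices missing from the mapping fall back to a placeholder string instead of raising a `KeyError`.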

+### Use Case Specific Arguments

+

+To pass a use-case-specific argument, create a server configuration file and supply the argument under `kwargs`.

+

+This configuration file sets `top_k` to 3, so the server returns the three highest-scoring classes:

+```yaml

+# image_classification-config.yaml

+endpoints:

+  - task: image_classification

+    model: zoo:cv/classification/resnet_v1-50/pytorch/sparseml/imagenet/pruned95_quant-none

+    kwargs:

+      top_k: 3

+```

+

+Start the server:

+```bash

+deepsparse.server --config-file image_classification-config.yaml

+```

+

+Make a request over HTTP:

+

+```python

+import requests

+

+url = 'http://0.0.0.0:5543/predict/from_files'

+path = ['lion.jpeg'] # local image files to classify

+files = [('request', open(img, 'rb')) for img in path]

+resp = requests.post(url=url, files=files)

+print(resp.text)

+# {"labels":[291,260,244],"scores":[24.185693740844727,18.982254028320312,16.390701293945312]}

+```
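Conceptually, `top_k: 3` keeps the three highest-scoring classes, which is why the response now lists three indices in descending score order. The sketch below illustrates that selection over a plain list of per-class scores using `heapq.nlargest`. It is only an illustration of the idea, not DeepSparse's internal post-processing. The toy score list is hypothetical.

```python
import heapq


def top_k(scores: list, k: int) -> tuple:
    """Return (indices, scores) of the k largest scores, highest first.

    Illustrative only: not DeepSparse's own post-processing code.
    """
    best = heapq.nlargest(k, range(len(scores)), key=scores.__getitem__)
    return best, [scores[i] for i in best]


# Toy per-class scores (hypothetical values, 6 classes instead of 1000).
toy_scores = [0.1, 2.5, -1.0, 7.3, 4.4, 0.0]
labels, values = top_k(toy_scores, k=3)
print(labels, values)  # [3, 4, 1] [7.3, 4.4, 2.5]
```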

+## Using a Custom ONNX File

+In addition to models from SparseZoo, DeepSparse can deploy a model from a custom ONNX file.

+

+The first step is to obtain the ONNX model, for example by exporting your model to ONNX after training.

+Click Download on the [ResNet-50 - ImageNet page](https://sparsezoo.neuralmagic.com/models/cv%2Fclassification%2Fresnet_v1-50%2Fpytorch%2Fsparseml%2Fimagenet%2Fpruned95_uniform_quant-none) to download an ONNX ResNet model for demonstration.

+

+Extract the downloaded file and use the ResNet-50 ONNX model for inference:

+```python

+from deepsparse import Pipeline

+

+# compile the local ONNX file with batch size 1

+pipeline = Pipeline.create(

+ task="image_classification",

+ model_path="resnet.onnx", # sparsezoo stub or path to local ONNX

+)

+

+# run inference on image file

+prediction = pipeline(images=["lion.jpeg"])

+print(prediction.labels)

+# [291]

+```

+### Cross Use Case Functionality

+

+Check out the [Server User Guide](../../user-guide/deepsparse-server.md) for more details on configuring the Server.

diff --git a/docs/use-cases/cv/image-segmentation-yolact.md b/docs/use-cases/cv/image-segmentation-yolact.md

new file mode 100644

index 0000000000..cc7be1e044

--- /dev/null

+++ b/docs/use-cases/cv/image-segmentation-yolact.md

@@ -0,0 +1,251 @@

+

+

+# Deploying Image Segmentation Models with DeepSparse

+

+This page explains how to benchmark and deploy an image segmentation model with DeepSparse.

+

+There are three interfaces for interacting with DeepSparse:

+- **Engine** is the lowest-level API that enables you to compile a model and run inference on raw input tensors.

+

+- **Pipeline** is the default DeepSparse API. Similar to Hugging Face Pipelines, it wraps Engine with pre-processing

+and post-processing steps, allowing you to make requests on raw data and receive post-processed predictions.

+

+- **Server** is a REST API wrapper around Pipelines built on [FastAPI](https://fastapi.tiangolo.com/) and [Uvicorn](https://www.uvicorn.org/). It enables you to start a model serving endpoint running DeepSparse with a single CLI command.

+

+We will walk through an example of each using YOLACT.

+

+## Installation Requirements

+

+This use case requires the installation of [DeepSparse Server](../../user-guide/installation.md).

+

+Confirm your machine is compatible with our [hardware requirements](../../user-guide/hardware-support.md).

+

+## Benchmarking

+

+We can use the benchmarking utility to demonstrate DeepSparse's performance. The numbers below were measured on a 4-core `c6i.2xlarge` AWS instance.

+

+### ONNX Runtime Baseline

+

+As a baseline, let's check out ONNX Runtime's performance on YOLACT. Make sure you have ORT installed (`pip install onnxruntime`).

+

+```bash

+deepsparse.benchmark \

+ zoo:cv/segmentation/yolact-darknet53/pytorch/dbolya/coco/base-none \

+ -b 64 -s sync -nstreams 1 \

+ -e onnxruntime

+

+> Original Model Path: zoo:cv/segmentation/yolact-darknet53/pytorch/dbolya/coco/base-none

+> Batch Size: 64

+> Scenario: sync

+> Throughput (items/sec): 3.5290

+```

+

+ONNX Runtime achieves 3.5 items/second with batch size 64.

+

+### DeepSparse Speedup

+Now, let's run DeepSparse on an inference-optimized sparse version of YOLACT. This model has been 82.5% pruned and quantized to INT8, while retaining >99% of the dense baseline's accuracy on the COCO dataset.
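Roughly speaking, "82.5% pruned" means that 82.5% of the prunable weights are set to zero. DeepSparse can then skip that work at inference time. The sketch below illustrates the idea with one-shot magnitude pruning on a random NumPy weight matrix. The helper names are hypothetical. SparseML's actual recipes typically prune gradually during training and also handle INT8 quantization, which this sketch omits.

```python
import numpy as np


def magnitude_prune(weights: np.ndarray, sparsity: float) -> np.ndarray:
    """Zero out the `sparsity` fraction of entries with the smallest magnitude."""
    k = int(round(sparsity * weights.size))  # number of weights to remove
    pruned = weights.flatten()  # flatten() returns a copy, so the input is untouched
    drop = np.argsort(np.abs(pruned))[:k]  # indices of the k smallest |w|
    pruned[drop] = 0.0
    return pruned.reshape(weights.shape)


def sparsity_of(weights: np.ndarray) -> float:
    """Fraction of entries that are exactly zero."""
    return float(np.mean(weights == 0))


rng = np.random.default_rng(0)
w = rng.standard_normal((40, 50))  # toy layer with 2,000 weights
w_sparse = magnitude_prune(w, 0.825)
print(f"sparsity: {sparsity_of(w_sparse):.3f}")  # sparsity: 0.825
```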

+

+```bash

+deepsparse.benchmark \

+ zoo:cv/segmentation/yolact-darknet53/pytorch/dbolya/coco/pruned82_quant-none \

+ -b 64 -s sync -nstreams 1 \

+ -e deepsparse

+

+> Original Model Path: zoo:cv/segmentation/yolact-darknet53/pytorch/dbolya/coco/pruned82_quant-none

+> Batch Size: 64

+> Scenario: sync

+> Throughput (items/sec): 23.2061

+```

+

+DeepSparse achieves 23 items/second, a 6.6x speed-up over ONNX Runtime!
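The speedup is the ratio of the two measured throughputs. Both runs use batch size 64, so the same numbers also give the average wall-clock time per batch. The following snippet is a quick check using the values reported above:

```python
# Throughputs reported by deepsparse.benchmark above (items/sec, batch size 64).
BATCH_SIZE = 64
ort_throughput = 3.5290
deepsparse_throughput = 23.2061

speedup = deepsparse_throughput / ort_throughput
print(f"speedup: {speedup:.1f}x")  # speedup: 6.6x

# Average seconds needed to process one batch of 64 images at each throughput.
for name, tput in [("ONNX Runtime", ort_throughput), ("DeepSparse", deepsparse_throughput)]:
    print(f"{name}: {BATCH_SIZE / tput:.2f} s per batch")
```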

+

+## DeepSparse Engine

+Engine is the lowest-level API for interacting with DeepSparse. Where possible, we recommend using the Pipeline API, but Engine is available if you want to handle pre- or post-processing yourself.

+

+With Engine, we can compile an ONNX file and run inference on raw tensors.

+

+Here's an example using an 82.5% pruned-quantized YOLACT model from SparseZoo:

+

+```python

+from deepsparse import Engine

+from deepsparse.utils import generate_random_inputs, model_to_path

+import numpy as np

+