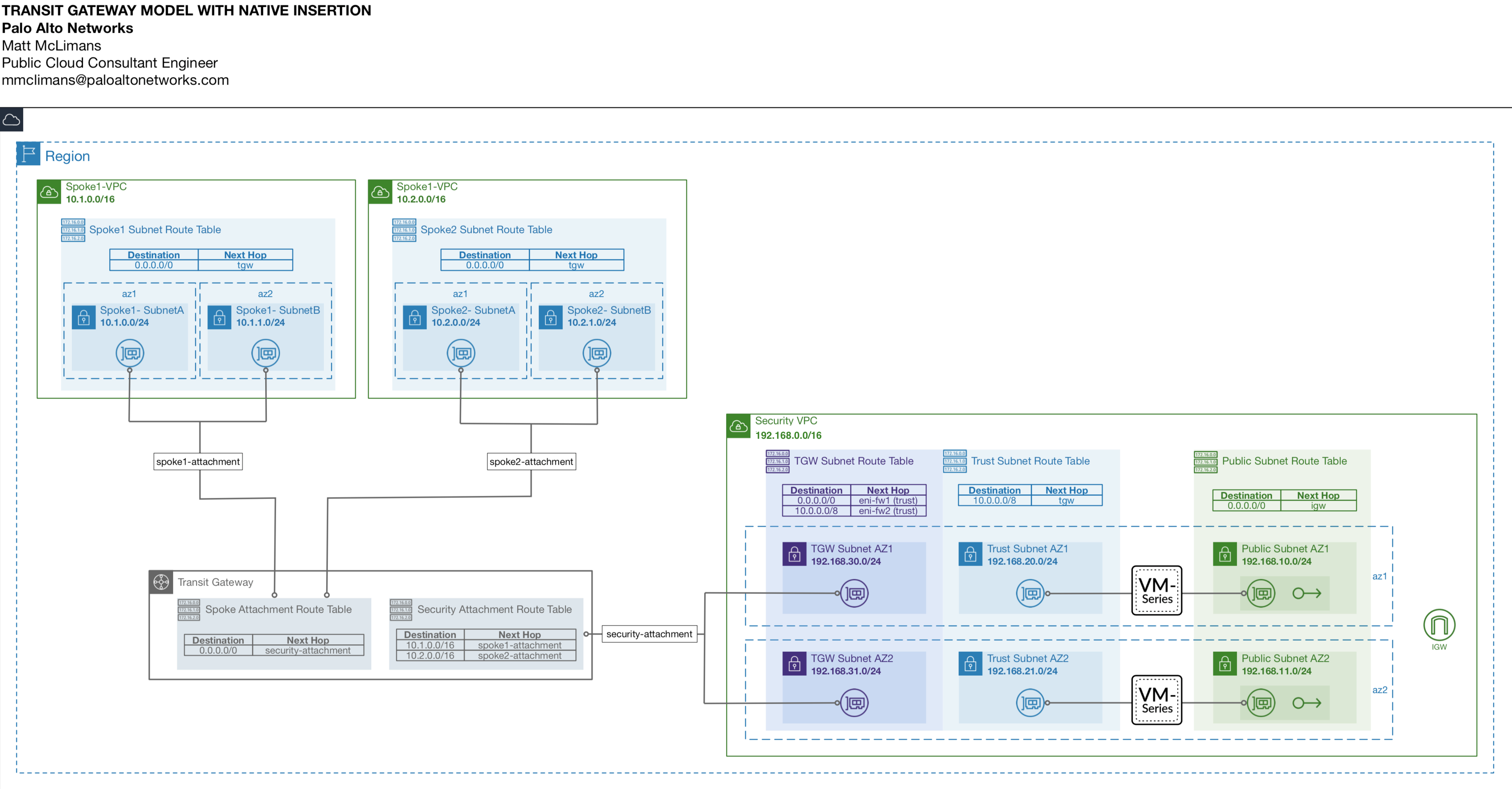

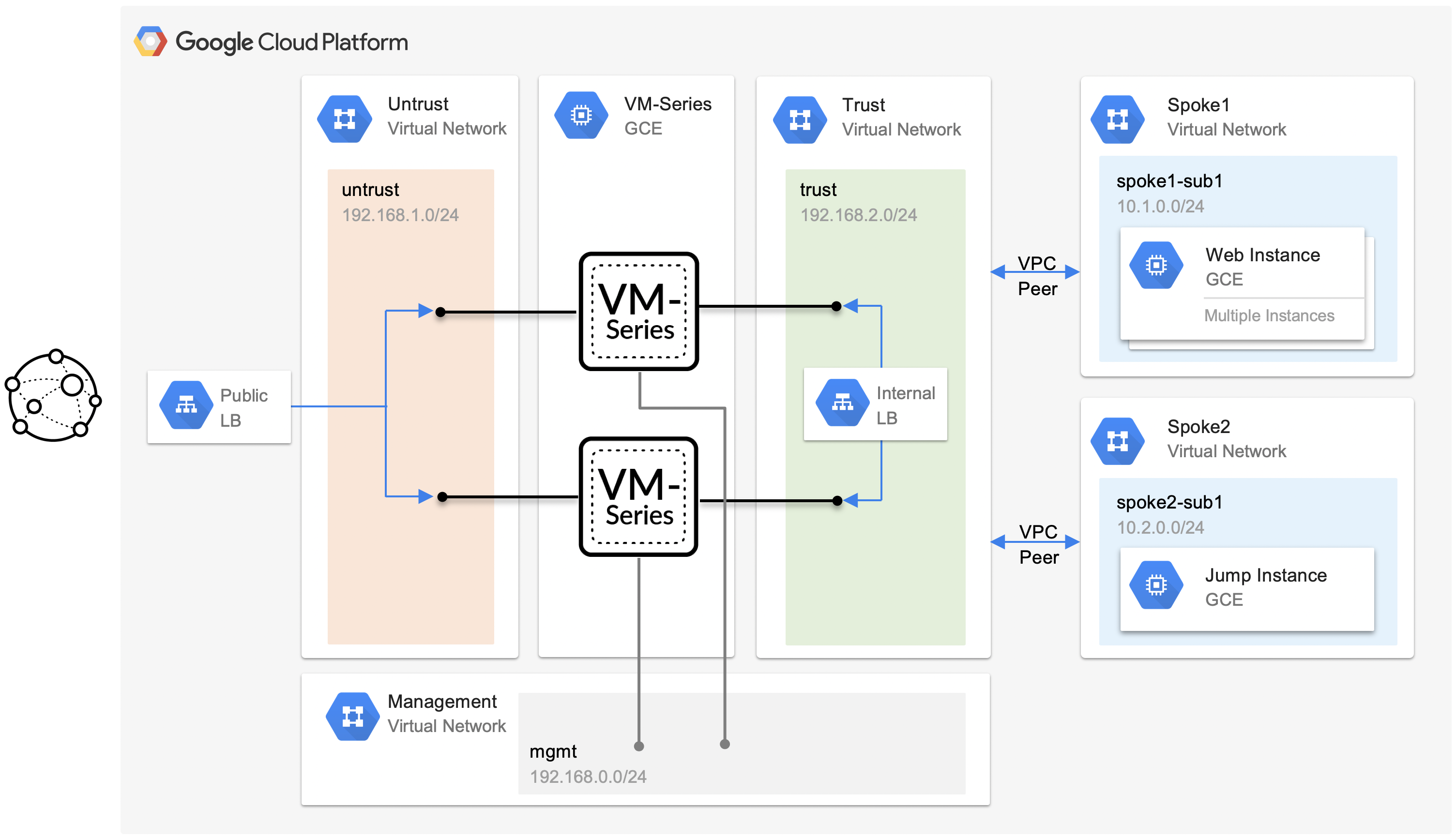

+#### VM-Series Overview

+* Firewall-1 handles egress traffic to internet

+* Firewall-2 handles east/west traffic between Spoke1-VPC and Spoke2-VPC

+* Both Firewalls can handle inbound traffic to the spokes

+* Firewalls are bootstrapped off an S3 Bucket (buckets are created during deployment)

+

+#### S3 Buckets Overview

+* Two S3 buckets are deployed and configured to bootstrap the firewalls with a fully working configuration.

+* Each bucket name has a random 30-character string appended for global uniqueness, following the prefix `tgw-fw#-bootstrap-`.
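The naming scheme described above can be sketched in Python. This is a minimal illustration rather than the template's own logic: the function name `bootstrap_bucket_name` and the choice of lowercase letters and digits for the suffix are assumptions, since the source only states the prefix and the 30-character length.

```python
import secrets
import string

# Assumption: the suffix uses lowercase letters and digits, which are always
# valid in S3 bucket names. The template's actual character set is not shown.
SUFFIX_ALPHABET = string.ascii_lowercase + string.digits
SUFFIX_LENGTH = 30  # length stated in the overview


def bootstrap_bucket_name(fw_index: int) -> str:
    """Return a name of the form tgw-fw<index>-bootstrap-<30 random chars>."""
    suffix = "".join(secrets.choice(SUFFIX_ALPHABET) for _ in range(SUFFIX_LENGTH))
    return f"tgw-fw{fw_index}-bootstrap-{suffix}"


if __name__ == "__main__":
    for index in (1, 2):
        name = bootstrap_bucket_name(index)
        # S3 bucket names must be between 3 and 63 characters long.
        assert 3 <= len(name) <= 63
        print(name)
```

With an 18-character prefix and a 30-character suffix, each name stays well inside the 63-character S3 limit.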


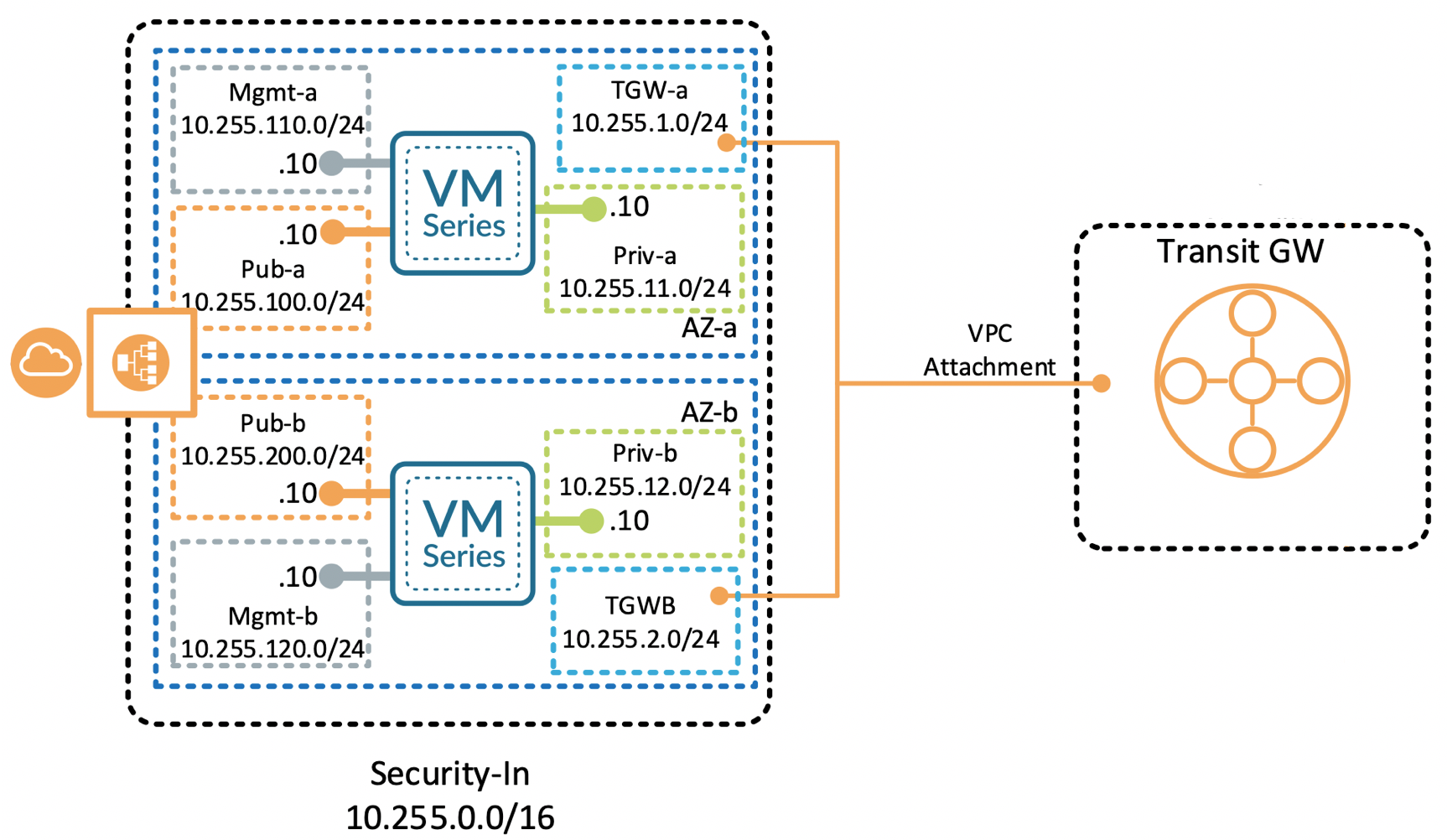

+### Requirements

+* Existing Transit Gateway

+* Existing Transit Gateway route table for the Security-VPC attachment

+* EC2 Key Pair for deployment region

+* Username / password: **pandemo** / **demopassword**

+

+

+### How to Deploy

+1. Open **variables.tf** in a text editor.

+2. Uncomment the default values and set the correct value for each of the following variables:

+ * **fw_ami**

+ * Firewall AMI for the target AWS region, SKU, and PAN-OS version.

+ * **fw_sg_source**

+ * Source prefix to apply to the VM-Series management interface

+ * **tgw_id**

+ * Existing Transit Gateway ID

+ * **tgw_rtb_id**

+ * Existing Transit Gateway Route Table ID

+3. Save **variables.tf**

+4. BYOL ONLY

+ * If you want to license the VM-Series on creation, copy and paste your Auth Code into the /bootstrap/authcodes file. The Auth Code must be registered with your Palo Alto Networks support account before proceeding.

+Before proceeding, make sure you have accepted and subscribed to the VM-Series software in the AWS Marketplace.
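Before running Terraform, it can help to sanity-check the four values set in **variables.tf**. The sketch below is an optional pre-flight check, not part of the template: the function name `check_variables`, the ID patterns (a resource prefix followed by hexadecimal characters), and the sample values are all assumptions made for illustration.

```python
import ipaddress
import re

# Assumed ID shapes: a resource prefix followed by hexadecimal characters.
# These are loose format checks and do not confirm the resources exist.
PATTERNS = {
    "fw_ami": re.compile(r"^ami-[0-9a-f]{8,17}$"),
    "tgw_id": re.compile(r"^tgw-[0-9a-f]{17}$"),
    "tgw_rtb_id": re.compile(r"^tgw-rtb-[0-9a-f]{17}$"),
}


def check_variables(values: dict) -> list:
    """Return a list of problems; an empty list means every check passed."""
    problems = []
    for name, pattern in PATTERNS.items():
        value = values.get(name, "")
        if not pattern.match(value):
            problems.append(f"{name}: {value!r} does not look like a valid ID")
    try:
        # strict=True rejects prefixes with host bits set, e.g. 10.0.0.1/24.
        ipaddress.ip_network(values.get("fw_sg_source", ""), strict=True)
    except ValueError:
        problems.append("fw_sg_source: not a valid CIDR prefix")
    return problems


if __name__ == "__main__":
    # Hypothetical placeholder values; substitute your own.
    sample = {
        "fw_ami": "ami-0123456789abcdef0",
        "fw_sg_source": "203.0.113.0/24",
        "tgw_id": "tgw-0123456789abcdef0",
        "tgw_rtb_id": "tgw-rtb-0123456789abcdef0",
    }
    print(check_variables(sample) or "all checks passed")
```

The check only looks at string formats, so a value that passes can still refer to a resource in the wrong region or account.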

+

+## Notes

+1. us-gov-west was used for deployment testing. It should work in other regions provided the underlying features are available.

+

+## Support Policy

+The guide in this directory and the accompanying files are released under an as-is, best-effort support policy. These scripts should be seen as community supported, and Palo Alto Networks will contribute our expertise as and when possible. We do not provide technical support or help in using or troubleshooting the components of the project through our normal support options, such as Palo Alto Networks support teams, ASC (Authorized Support Centers) partners, or backline support. The underlying product used by the scripts or templates (the VM-Series firewall) is still supported, but that support covers only product functionality, not help in deploying or using the template or script itself.

+Unless explicitly tagged, all projects or work posted in our GitHub repository (at https://github.com/PaloAltoNetworks) or sites other than our official Downloads page on https://support.paloaltonetworks.com are provided under the best effort policy.

diff --git a/aws/tgw_inbound_asg-GovCloud/bootstrap_files/authcodes b/aws/tgw_inbound_asg-GovCloud/bootstrap_files/authcodes

new file mode 100644

index 00000000..8d1c8b69

--- /dev/null

+++ b/aws/tgw_inbound_asg-GovCloud/bootstrap_files/authcodes

@@ -0,0 +1 @@

+

diff --git a/aws/tgw_inbound_asg-GovCloud/bootstrap_files/bootstrap.xml b/aws/tgw_inbound_asg-GovCloud/bootstrap_files/bootstrap.xml

new file mode 100644

index 00000000..e0db5fed

--- /dev/null

+++ b/aws/tgw_inbound_asg-GovCloud/bootstrap_files/bootstrap.xml

@@ -0,0 +1,439 @@

+

+

+

+


+### Requirements

+* Existing Transit Gateway

+* Existing Transit Gateway route table for the Security-VPC attachment

+* EC2 Key Pair for deployment region

+* Username / password: **pandemo** / **demopassword**

+

+

+### How to Deploy

+1. Open **variables.tf** in a text editor.

+2. Uncomment the default values and set the correct value for each of the following variables:

+ * **fw_ami**

+ * Firewall AMI for the target AWS region, SKU, and PAN-OS version.

+ * **fw_sg_source**

+ * Source prefix to apply to the VM-Series management interface

+ * **tgw_id**

+ * Existing Transit Gateway ID

+ * **tgw_rtb_id**

+ * Existing Transit Gateway Route Table ID

+3. Save **variables.tf**

+4. BYOL ONLY

+ * If you want to license the VM-Series on creation, copy and paste your Auth Code into the /bootstrap/authcodes file. The Auth Code must be registered with your Palo Alto Networks support account before proceeding.

+Before proceeding, make sure you have accepted and subscribed to the VM-Series software in the AWS Marketplace.

+

+

+

+## Support Policy

+The guide in this directory and the accompanying files are released under an as-is, best-effort support policy. These scripts should be seen as community supported, and Palo Alto Networks will contribute our expertise as and when possible. We do not provide technical support or help in using or troubleshooting the components of the project through our normal support options, such as Palo Alto Networks support teams, ASC (Authorized Support Centers) partners, or backline support. The underlying product used by the scripts or templates (the VM-Series firewall) is still supported, but that support covers only product functionality, not help in deploying or using the template or script itself.

+Unless explicitly tagged, all projects or work posted in our GitHub repository (at https://github.com/PaloAltoNetworks) or sites other than our official Downloads page on https://support.paloaltonetworks.com are provided under the best effort policy.

diff --git a/aws/tgw_inbound_asg/bootstrap_files/authcodes b/aws/tgw_inbound_asg/bootstrap_files/authcodes

new file mode 100644

index 00000000..8d1c8b69

--- /dev/null

+++ b/aws/tgw_inbound_asg/bootstrap_files/authcodes

@@ -0,0 +1 @@

+

diff --git a/aws/tgw_inbound_asg/bootstrap_files/bootstrap.xml b/aws/tgw_inbound_asg/bootstrap_files/bootstrap.xml

new file mode 100644

index 00000000..e0db5fed

--- /dev/null

+++ b/aws/tgw_inbound_asg/bootstrap_files/bootstrap.xml

@@ -0,0 +1,439 @@

+

+

+

+


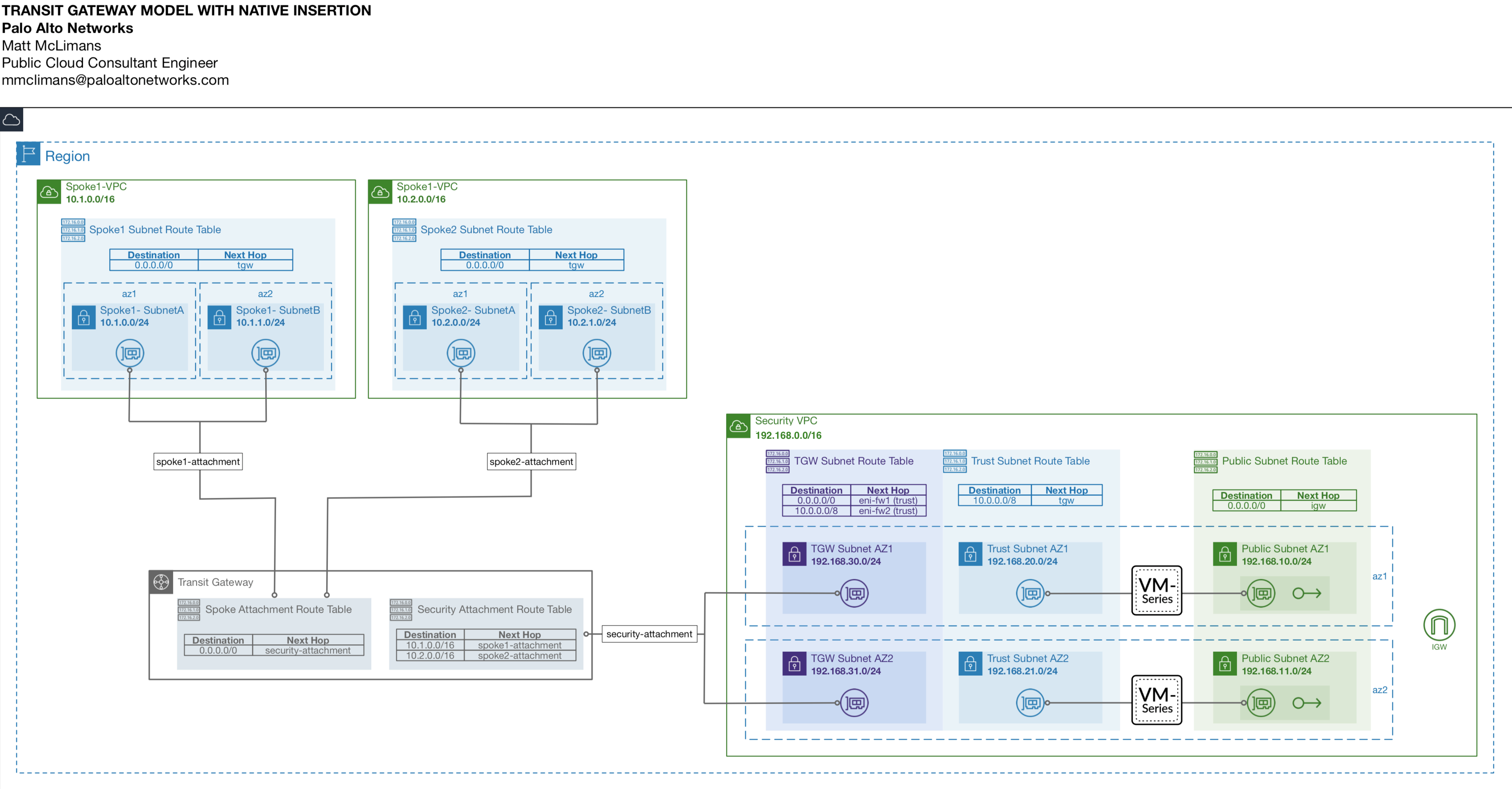

+#### VM-Series Overview

+* Firewall-1 handles egress traffic to internet

+* Firewall-2 handles east/west traffic between Spoke1-VPC and Spoke2-VPC

+* Both Firewalls can handle inbound traffic to the spokes

+* Firewalls are bootstrapped off an S3 Bucket (buckets are created during deployment)

+

+#### S3 Buckets Overview

+* Two S3 buckets are deployed and configured to bootstrap the firewalls with a fully working configuration.

+* Each bucket name has a random 30-character string appended for global uniqueness, following the prefix `tgw-fw#-bootstrap-`.

+deploy-v2 (`/Users/jharris/Documents/PycharmProjects/terraform/azure/Jenkins_proj-master/deploy-v2.py`)

# Copyright (c) 2018, Palo Alto Networks

+#

+# Permission to use, copy, modify, and/or distribute this software for any

+# purpose with or without fee is hereby granted, provided that the above

+# copyright notice and this permission notice appear in all copies.

+#

+# THE SOFTWARE IS PROVIDED "AS IS" AND THE AUTHOR DISCLAIMS ALL WARRANTIES

+# WITH REGARD TO THIS SOFTWARE INCLUDING ALL IMPLIED WARRANTIES OF

+# MERCHANTABILITY AND FITNESS. IN NO EVENT SHALL THE AUTHOR BE LIABLE FOR

+# ANY SPECIAL, DIRECT, INDIRECT, OR CONSEQUENTIAL DAMAGES OR ANY DAMAGES

+# WHATSOEVER RESULTING FROM LOSS OF USE, DATA OR PROFITS, WHETHER IN AN

+# ACTION OF CONTRACT, NEGLIGENCE OR OTHER TORTIOUS ACTION, ARISING OUT OF

+# OR IN CONNECTION WITH THE USE OR PERFORMANCE OF THIS SOFTWARE.

+

+# Author: Justin Harris jharris@paloaltonetworks.com

+

+Usage

+

+python deploy.py -u <fwusername> -p<fwpassword> -r<resource group> -j<region>
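The usage line above implies four required options. A minimal sketch of parsing them with `argparse` follows. It is an assumption about how such a command line could be handled, not the script's actual implementation: the function name `build_parser` and the `dest` names are hypothetical.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Build a parser for the four options shown in the usage line."""
    parser = argparse.ArgumentParser(
        prog="deploy.py",
        description="Sketch of the deploy script's command line (assumed).",
    )
    # dest names are illustrative; only the short flags come from the usage text.
    parser.add_argument("-u", dest="username", required=True, help="firewall username")
    parser.add_argument("-p", dest="password", required=True, help="firewall password")
    parser.add_argument("-r", dest="resource_group", required=True, help="resource group")
    parser.add_argument("-j", dest="region", required=True, help="region")
    return parser


if __name__ == "__main__":
    args = build_parser().parse_args()
    print(f"Deploying to {args.resource_group} in {args.region} as {args.username}")
```

`argparse` accepts a short option's value either attached (`-pSecret`) or separated (`-p Secret`), so both spellings used in the usage line parse the same way.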

+

+Data

+* `formatter = <logging.Formatter object>`

+* `handler = <StreamHandler <stderr> (NOTSET)>`

+* `logger = <RootLogger root (INFO)>`

+* `status_output = {}`


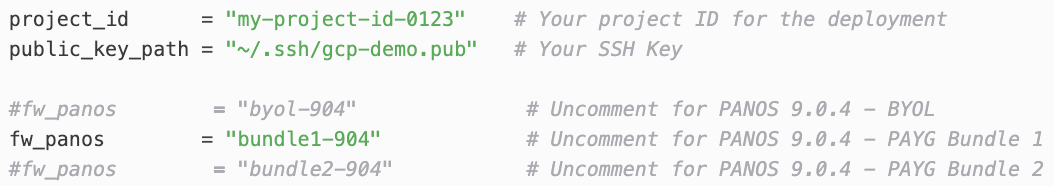

+Your terraform.tfvars should look like this before proceeding
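One quick way to review the address plan in `terraform.tfvars` is to confirm that each subnet sits inside its VNET and that the VNETs do not overlap. The sketch below uses Python's standard `ipaddress` module with the CIDR values that appear in this change; the helper names `subnets_outside_vnet` and `overlapping_pairs` are hypothetical and not part of the template.

```python
import ipaddress


def subnets_outside_vnet(vnet_cidr, subnet_cidrs):
    """Return the subnet CIDRs that are not contained in the VNET CIDR."""
    vnet = ipaddress.ip_network(vnet_cidr)
    return [c for c in subnet_cidrs if not ipaddress.ip_network(c).subnet_of(vnet)]


def overlapping_pairs(cidrs):
    """Return every pair of CIDRs that overlap each other."""
    nets = [ipaddress.ip_network(c) for c in cidrs]
    return [
        (str(a), str(b))
        for i, a in enumerate(nets)
        for b in nets[i + 1:]
        if a.overlaps(b)
    ]


if __name__ == "__main__":
    # Values taken from the terraform.tfvars in this change.
    transit_vnet = "10.0.0.0/16"
    transit_subnets = ["10.0.0.0/24", "10.0.1.0/24", "10.0.2.0/24"]
    vnets = [transit_vnet, "10.1.0.0/16", "10.2.0.0/16"]

    print("subnets outside transit VNET:", subnets_outside_vnet(transit_vnet, transit_subnets))
    print("overlapping VNETs:", overlapping_pairs(vnets))
```

Peered VNETs cannot share address space, so an empty result from both helpers is the expected outcome for a workable plan.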

+ +

+

';
+$localIPAddress = getHostByName(getHostName());
+$sourceIPAddress = getRealIpAddr();
+echo ''. "SOURCE IP" .': '. $sourceIPAddress .'';
+echo ''. "LOCAL IP" .': '. $localIPAddress .'';
+
+$vm_name = gethostname();
+echo ''. "VM NAME" .': '. $vm_name .'';
+echo ''. '';
+echo '
+ HEADER INFORMATION
+ ';
+/* All $_SERVER variables prefixed with HTTP_ are the HTTP headers */
+foreach ($_SERVER as $header => $value) {
+    if (substr($header, 0, 5) == 'HTTP_') {
+        /* Strip the HTTP_ prefix from the $_SERVER variable, what remains is the header */
+        $clean_header = strtolower(substr($header, 5, strlen($header)));
+
+        /* Replace underscores by the dashes, as the browser sends them */
+        $clean_header = str_replace('_', '-', $clean_header);
+
+        /* Cleanup: standard headers are first-letter uppercase */
+        $clean_header = ucwords($clean_header, " \t\r\n\f\v-");
+
+        /* And show'm */
+        echo ''. $header .': '. $value .'';
+    }
+}
+?>
diff --git a/azure/transit_2fw_2spoke_common/scripts/web_startup.yml.tpl b/azure/transit_2fw_2spoke_common/scripts/web_startup.yml.tpl
new file mode 100644
index 00000000..1d02e945
--- /dev/null
+++ b/azure/transit_2fw_2spoke_common/scripts/web_startup.yml.tpl
@@ -0,0 +1,10 @@
+#cloud-config
+
+runcmd:
+  - sudo apt-get update -y
+  - sudo apt-get install -y php
+  - sudo apt-get install -y apache2
+  - sudo apt-get install -y libapache2-mod-php
+  - sudo rm -f /var/www/html/index.html
+  - sudo wget -O /var/www/html/index.php https://raw.githubusercontent.com/wwce/terraform/master/azure/transit_2fw_2spoke_common/scripts/showheaders.php
+  - sudo systemctl restart apache2
\ No newline at end of file
diff --git a/azure/transit_2fw_2spoke_common/spokes.tf b/azure/transit_2fw_2spoke_common/spokes.tf
new file mode 100644
index 00000000..ea6ab06c
--- /dev/null
+++ b/azure/transit_2fw_2spoke_common/spokes.tf
@@ -0,0 +1,97 @@
+#-----------------------------------------------------------------------------------------------------------------
+# Create spoke1 resource group, spoke1 VNET, spoke1 internal LB, (2) spoke1 VMs
+
+resource "azurerm_resource_group" "spoke1_rg" {
+  name     = "${var.global_prefix}${var.spoke1_prefix}-rg"
+  location = var.location
+}
+
+module "spoke1_vnet" {
+  source                   = "./modules/spoke_vnet/"
+  name                     = "${var.spoke1_prefix}-vnet"
+  address_space            = var.spoke1_vnet_cidr
+  subnet_prefixes          = var.spoke1_subnet_cidrs
+  remote_vnet_rg           = azurerm_resource_group.transit.name
+  remote_vnet_name         = module.vnet.vnet_name
+  remote_vnet_id           = module.vnet.vnet_id
+  route_table_destinations = var.spoke_udrs
+  route_table_next_hop     = [var.fw_internal_lb_ip]
+  location                 = var.location
+  resource_group_name      = azurerm_resource_group.spoke1_rg.name
+}
+
+data "template_file" "web_startup" {
+  template = "${file("${path.module}/scripts/web_startup.yml.tpl")}"
+}
+
+module "spoke1_vm" {
+  source              = "./modules/spoke_vm/"
+  name                = "${var.spoke1_prefix}-vm"
+  vm_count            = var.spoke1_vm_count
+  subnet_id           = module.spoke1_vnet.vnet_subnets[0]
+  availability_set_id = ""
+  backend_pool_ids    = [module.spoke1_lb.backend_pool_id]
+  custom_data         = base64encode(data.template_file.web_startup.rendered)
+  publisher           = "Canonical"
+  offer               = "UbuntuServer"
+  sku                 = "16.04-LTS"
+  username            = var.spoke_username
+  password            = var.spoke_password
+  tags                = var.tags
+  location            = var.location
+  resource_group_name = azurerm_resource_group.spoke1_rg.name
+}
+
+module "spoke1_lb" {
+  source              = "./modules/lb/"
+  name                = "${var.spoke1_prefix}-lb"
+  type                = "private"
+  sku                 = "Standard"
+  probe_ports         = [80]
+  frontend_ports      = [80]
+  backend_ports       = [80]
+  protocol            = "Tcp"
+  enable_floating_ip  = false
+  subnet_id           = module.spoke1_vnet.vnet_subnets[0]
+  private_ip_address  = var.spoke1_internal_lb_ip
+  location            = var.location
+  resource_group_name = azurerm_resource_group.spoke1_rg.name
+}
+
+#-----------------------------------------------------------------------------------------------------------------
+# Create spoke2 resource group, spoke2 VNET, spoke2 VM
+
+resource "azurerm_resource_group" "spoke2_rg" {
+  name     = "${var.global_prefix}${var.spoke2_prefix}-rg"
+  location = var.location
+}
+
+module "spoke2_vnet" {
+  source                   = "./modules/spoke_vnet/"
+  name                     = "${var.spoke2_prefix}-vnet"
+  address_space            = var.spoke2_vnet_cidr
+  subnet_prefixes          = var.spoke2_subnet_cidrs
+  remote_vnet_rg           = azurerm_resource_group.transit.name
+  remote_vnet_name         = module.vnet.vnet_name
+  remote_vnet_id           = module.vnet.vnet_id
+  route_table_destinations = var.spoke_udrs
+  route_table_next_hop     = [var.fw_internal_lb_ip]
+  location                 = var.location
+  resource_group_name      = azurerm_resource_group.spoke2_rg.name
+}
+
+module "spoke2_vm" {
+  source              = "./modules/spoke_vm/"
+  name                = "${var.spoke2_prefix}-vm"
+  vm_count            = var.spoke2_vm_count
+  subnet_id           = module.spoke2_vnet.vnet_subnets[0]
+  availability_set_id = ""
+  publisher           = "Canonical"
+  offer               = "UbuntuServer"
+  sku                 = "16.04-LTS"
+  username            = var.spoke_username
+  password            = var.spoke_password
+  tags                = var.tags
+  location            = var.location
+  resource_group_name = azurerm_resource_group.spoke2_rg.name
+}
\ No newline at end of file
diff --git a/azure/transit_2fw_2spoke_common/terraform.tfvars b/azure/transit_2fw_2spoke_common/terraform.tfvars
new file mode 100644
index 00000000..2f122c28
--- /dev/null
+++ b/azure/transit_2fw_2spoke_common/terraform.tfvars
@@ -0,0 +1,43 @@
+#fw_license = "byol" # Uncomment 1 fw_license to select VM-Series licensing mode
+#fw_license = "bundle1"
+#fw_license = "bundle2"
+
+global_prefix = "" # Prefix to add to all resource groups created. This is useful to create unique resource groups within a shared Azure subscription
+location = "eastus"
+
+# -----------------------------------------------------------------------
+# VM-Series resource group variables
+
+fw_prefix = "vmseries" # Adds prefix name to all resources created in the firewall resource group
+fw_count = 2
+fw_panos = "9.0.1"
+fw_nsg_prefix = "0.0.0.0/0"
+fw_username = "paloalto"
+fw_password = "Pal0Alt0@123"
+fw_internal_lb_ip = "10.0.2.100"
+
+# -----------------------------------------------------------------------
+# Transit resource group variables
+
+transit_prefix = "transit" # Adds prefix name to all resources created in the transit vnet's resource group
+transit_vnet_cidr = "10.0.0.0/16"
+transit_subnet_names = ["mgmt", "untrust", "trust"]
+transit_subnet_cidrs = ["10.0.0.0/24", "10.0.1.0/24", "10.0.2.0/24"]
+
+# -----------------------------------------------------------------------
+# Spoke resource group variables
+
+spoke1_prefix = "spoke1" # Adds prefix name to all resources created in spoke1's resource group
+spoke1_vm_count = 2
+spoke1_vnet_cidr = "10.1.0.0/16"
+spoke1_subnet_cidrs = ["10.1.0.0/24"]
+spoke1_internal_lb_ip = "10.1.0.100"
+
+spoke2_prefix = "spoke2" # Adds prefix name to all resources created in spoke2's resource group
+spoke2_vm_count = 1
+spoke2_vnet_cidr = "10.2.0.0/16"
+spoke2_subnet_cidrs = ["10.2.0.0/24"]
+
+spoke_username = "paloalto"
+spoke_password = "Pal0Alt0@123"
+spoke_udrs = ["0.0.0.0/0", "10.1.0.0/16", "10.2.0.0/16"]
\ No newline at end of file
diff --git a/azure/transit_2fw_2spoke_common/variables.tf b/azure/transit_2fw_2spoke_common/variables.tf
new file mode 100644
index 00000000..4899c169
--- /dev/null
+++ b/azure/transit_2fw_2spoke_common/variables.tf
@@ -0,0 +1,129 @@
+variable location {
+  description = "Enter a location"
+}
+
+variable fw_prefix {
+  description = "Prefix to add to all resources added in the firewall resource group"
+  default     = ""
+}
+
+variable fw_license {
+  description = "VM-Series license: byol, bundle1, or bundle2"
+  # default = "byol"
+  # default = "bundle1"
+  # default = "bundle2"
+}
+
+variable global_prefix {
+  description = "Prefix to add to all resource groups created. This is useful to create unique resource groups within a shared Azure subscription"
+}
+#-----------------------------------------------------------------------------------------------------------------
+# Transit VNET variables
+
+variable transit_prefix {
+}
+
+variable transit_vnet_cidr {
+}
+
+variable transit_subnet_names {
+  type = list(string)
+}
+
+variable transit_subnet_cidrs {
+  type = list(string)
+}
+
+#-----------------------------------------------------------------------------------------------------------------
+# VM-Series variables
+
+variable fw_count {
+}
+
+variable fw_nsg_prefix {
+}
+
+variable fw_panos {
+}
+
+variable fw_username {
+}
+
+variable fw_password {
+}
+
+variable fw_internal_lb_ip {
+}
+
+#-----------------------------------------------------------------------------------------------------------------
+# Spoke variables
+
+variable spoke_username {
+}
+
+variable spoke_password {
+}
+
+variable spoke_udrs {
+}
+
+variable spoke1_prefix {
+  description = "Prefix to add to all resources added in spoke1's resource group"
+}
+
+variable spoke1_vm_count {
+}
+
+variable spoke1_vnet_cidr {
+}
+
+variable spoke1_subnet_cidrs {
+  type = list(string)
+}
+
+variable spoke1_internal_lb_ip {
+}
+
+variable spoke2_prefix {
+  description = "Prefix to add to all resources added in spoke2's resource group"
+}
+
+variable spoke2_vm_count {
+}
+
+variable spoke2_vnet_cidr {
+}
+
+variable spoke2_subnet_cidrs {
+  type = list(string)
+}
+
+variable tags {
+  description = "The tags to associate with newly created resources"
+  type        = map(string)
+
+  default = {}
+}
+
+#-----------------------------------------------------------------------------------------------------------------
+# Azure environment variables
+
+variable client_id {
+  description = "Azure client ID"
+  default     = ""
+}
+
+variable client_secret {
+  description = "Azure client secret"
+  default     = ""
+}
+
+variable subscription_id {
+  description = "Azure subscription ID"
+  default     = ""
+}
+
+variable tenant_id {
+  description = "Azure tenant ID"
+  default     = ""
+}
\ No newline at end of file
diff --git a/azure/transit_2fw_2spoke_common_appgw/GUIDE.pdf b/azure/transit_2fw_2spoke_common_appgw/GUIDE.pdf
new file mode 100644
index 00000000..c66ebfe6
Binary files /dev/null and b/azure/transit_2fw_2spoke_common_appgw/GUIDE.pdf differ
diff --git a/azure/transit_2fw_2spoke_common_appgw/README.md b/azure/transit_2fw_2spoke_common_appgw/README.md
new file mode 100644
index 00000000..01101669
--- /dev/null
+++ b/azure/transit_2fw_2spoke_common_appgw/README.md
@@ -0,0 +1,60 @@
+# 2 x VM-Series / Public LB / Internal LB / AppGW / 2 x Spoke VNETs
+
+This is an extension of the Terraform template located at [**transit_2fw_2spoke_common**](https://github.com/wwce/terraform/tree/master/azure/transit_2fw_2spoke_common).
+
+Terraform creates 2 VM-Series firewalls deployed in a transit VNET with two connected spoke VNETs (via VNET peering). The VM-Series firewalls secure all ingress/egress to and from the spoke VNETs. All traffic originating from the spokes is routed to an internal load balancer in the transit VNET's trust subnet. All inbound traffic from the internet is sent through a public load balancer or an application gateway (both are deployed). The Application Gateway is configured to load balance HTTP traffic on port 80.
+
+N.B. - The template can take 15+ minutes to complete due to the Application Gateway deployment time. When complete, the FQDN of the Application Gateway is included in the output.
+
+Please see the [**Deployment Guide**](https://github.com/wwce/terraform/blob/master/azure/transit_2fw_2spoke_common/GUIDE.pdf) for more information.
+
+
+
+## Prerequisites
+* Valid Azure Subscription
+* Access to Azure Cloud Shell
+
+
+
+## How to Deploy
+### 1. Setup & Download Build
+In the Azure Portal, open Azure Cloud Shell and run the following **BASH ONLY!**.
+```
+# Accept VM-Series EULA for desired license type (BYOL, Bundle1, or Bundle2)
+$ az vm image terms accept --urn paloaltonetworks:vmseries1:

+Your terraform.tfvars should look like this before proceeding

+ +

+

';
+$localIPAddress = getHostByName(getHostName());
+$sourceIPAddress = getRealIpAddr();
+echo ''. "SOURCE IP" .': '. $sourceIPAddress .'';
+echo ''. "LOCAL IP" .': '. $localIPAddress .'';
+
+$vm_name = gethostname();
+echo ''. "VM NAME" .': '. $vm_name .'';
+echo ''. '';
+echo '
+ HEADER INFORMATION
+ ';
+/* All $_SERVER variables prefixed with HTTP_ are the HTTP headers */
+foreach ($_SERVER as $header => $value) {
+    if (substr($header, 0, 5) == 'HTTP_') {
+        /* Strip the HTTP_ prefix from the $_SERVER variable, what remains is the header */
+        $clean_header = strtolower(substr($header, 5, strlen($header)));
+
+        /* Replace underscores by the dashes, as the browser sends them */
+        $clean_header = str_replace('_', '-', $clean_header);
+
+        /* Cleanup: standard headers are first-letter uppercase */
+        $clean_header = ucwords($clean_header, " \t\r\n\f\v-");
+
+        /* And show'm */
+        echo ''. $header .': '. $value .'';
+    }
+}
+?>
diff --git a/azure/transit_2fw_2spoke_common_appgw/scripts/web_startup.yml.tpl b/azure/transit_2fw_2spoke_common_appgw/scripts/web_startup.yml.tpl
new file mode 100644
index 00000000..1d02e945
--- /dev/null
+++ b/azure/transit_2fw_2spoke_common_appgw/scripts/web_startup.yml.tpl
@@ -0,0 +1,10 @@
+#cloud-config
+
+runcmd:
+  - sudo apt-get update -y
+  - sudo apt-get install -y php
+  - sudo apt-get install -y apache2
+  - sudo apt-get install -y libapache2-mod-php
+  - sudo rm -f /var/www/html/index.html
+  - sudo wget -O /var/www/html/index.php https://raw.githubusercontent.com/wwce/terraform/master/azure/transit_2fw_2spoke_common/scripts/showheaders.php
+  - sudo systemctl restart apache2
\ No newline at end of file
diff --git a/azure/transit_2fw_2spoke_common_appgw/spokes.tf b/azure/transit_2fw_2spoke_common_appgw/spokes.tf
new file mode 100644
index 00000000..a4bf874b
--- /dev/null
+++ b/azure/transit_2fw_2spoke_common_appgw/spokes.tf
@@ -0,0 +1,97 @@
+#-----------------------------------------------------------------------------------------------------------------
+# Create spoke1 resource group, spoke1 VNET, spoke1 internal LB, (2) spoke1 VMs
+
+resource "azurerm_resource_group" "spoke1_rg" {
+  name     = "${var.global_prefix}-${var.spoke1_prefix}-rg"
+  location = var.location
+}
+
+module "spoke1_vnet" {
+  source                   = "./modules/spoke_vnet/"
+  name                     = "${var.spoke1_prefix}-vnet"
+  address_space            = var.spoke1_vnet_cidr
+  subnet_prefixes          = var.spoke1_subnet_cidrs
+  remote_vnet_rg           = azurerm_resource_group.transit.name
+  remote_vnet_name         = module.vnet.vnet_name
+  remote_vnet_id           = module.vnet.vnet_id
+  route_table_destinations = var.spoke_udrs
+  route_table_next_hop     = [var.fw_internal_lb_ip]
+  location                 = var.location
+  resource_group_name      = azurerm_resource_group.spoke1_rg.name
+}
+
+data "template_file" "web_startup" {
+  template = "${file("${path.module}/scripts/web_startup.yml.tpl")}"
+}
+
+module "spoke1_vm" {
+  source              = "./modules/spoke_vm/"
+  name                = "${var.spoke1_prefix}-vm"
+  vm_count            = var.spoke1_vm_count
+  subnet_id           = module.spoke1_vnet.vnet_subnets[0]
+  availability_set_id = ""
+  backend_pool_ids    = [module.spoke1_lb.backend_pool_id]
+  custom_data         = base64encode(data.template_file.web_startup.rendered)
+  publisher           = "Canonical"
+  offer               = "UbuntuServer"
+  sku                 = "16.04-LTS"
+  username            = var.spoke_username
+  password            = var.spoke_password
+  tags                = var.tags
+  location            = var.location
+  resource_group_name = azurerm_resource_group.spoke1_rg.name
+}
+
+module "spoke1_lb" {
+  source              = "./modules/lb/"
+  name                = "${var.spoke1_prefix}-lb"
+  type                = "private"
+  sku                 = "Standard"
+  probe_ports         = [80]
+  frontend_ports      = [80]
+  backend_ports       = [80]
+  protocol            = "Tcp"
+  enable_floating_ip  = false
+  subnet_id           = module.spoke1_vnet.vnet_subnets[0]
+  private_ip_address  = var.spoke1_internal_lb_ip
+  location            = var.location
+  resource_group_name = azurerm_resource_group.spoke1_rg.name
+}
+
+#-----------------------------------------------------------------------------------------------------------------
+# Create spoke2 resource group, spoke2 VNET, spoke2 VM
+
+resource "azurerm_resource_group" "spoke2_rg" {
+  name     = "${var.global_prefix}-${var.spoke2_prefix}-rg"
+  location = var.location
+}
+
+module "spoke2_vnet" {
+  source                   = "./modules/spoke_vnet/"
+  name                     = "${var.spoke2_prefix}-vnet"
+  address_space            = var.spoke2_vnet_cidr
+  subnet_prefixes          = var.spoke2_subnet_cidrs
+  remote_vnet_rg           = azurerm_resource_group.transit.name
+  remote_vnet_name         = module.vnet.vnet_name
+  remote_vnet_id           = module.vnet.vnet_id
+  route_table_destinations = var.spoke_udrs
+  route_table_next_hop     = [var.fw_internal_lb_ip]
+  location                 = var.location
+  resource_group_name      = azurerm_resource_group.spoke2_rg.name
+}
+
+module "spoke2_vm" {
+  source              = "./modules/spoke_vm/"
+  name                = "${var.spoke2_prefix}-vm"
+  vm_count            = var.spoke2_vm_count
+  subnet_id           = module.spoke2_vnet.vnet_subnets[0]
+  availability_set_id = ""
+  publisher           = "Canonical"
+  offer               = "UbuntuServer"
+  sku                 = "16.04-LTS"
+  username            = var.spoke_username
+  password            = var.spoke_password
+  tags                = var.tags
+  location            = var.location
+  resource_group_name = azurerm_resource_group.spoke2_rg.name
+}
diff --git a/azure/transit_2fw_2spoke_common_appgw/terraform.tfvars b/azure/transit_2fw_2spoke_common_appgw/terraform.tfvars
new file mode 100644
index 00000000..3304b1e6
--- /dev/null
+++ b/azure/transit_2fw_2spoke_common_appgw/terraform.tfvars
@@ -0,0 +1,43 @@
+#fw_license = "byol" # Uncomment 1 fw_license to select VM-Series licensing mode
+#fw_license = "bundle1"
+#fw_license = "bundle2"
+
+global_prefix = "" # Prefix to add to all resource groups created. This is useful to create unique resource groups within a shared Azure subscription
+location = "centralus"
+
+# -----------------------------------------------------------------------
+# VM-Series resource group variables
+
+fw_prefix = "vmseries" # Adds prefix name to all resources created in the firewall resource group
+fw_count = 2
+fw_panos = "9.0.1"
+fw_nsg_prefix = "0.0.0.0/0"
+fw_username = "paloalto"
+fw_password = "Pal0Alt0@123"
+fw_internal_lb_ip = "10.0.2.100"
+
+# -----------------------------------------------------------------------
+# Transit resource group variables
+
+transit_prefix = "transit" # Adds prefix name to all resources created in the transit vnet's resource group
+transit_vnet_cidr = "10.0.0.0/16"
+transit_subnet_names = ["mgmt", "untrust", "trust","gateway"]
+transit_subnet_cidrs = ["10.0.0.0/24", "10.0.1.0/24", "10.0.2.0/24", "10.0.3.0/24"]
+
+# -----------------------------------------------------------------------
+# Spoke resource group variables
+
+spoke1_prefix = "spoke1" # Adds prefix name to all resources created in spoke1's resource group
+spoke1_vm_count = 2
+spoke1_vnet_cidr = "10.1.0.0/16"
+spoke1_subnet_cidrs = ["10.1.0.0/24"]
+spoke1_internal_lb_ip = "10.1.0.100"
+
+spoke2_prefix = "spoke2" # Adds prefix name to all resources created in spoke2's resource group
+spoke2_vm_count =
1 +spoke2_vnet_cidr = "10.2.0.0/16" +spoke2_subnet_cidrs = ["10.2.0.0/24"] + +spoke_username = "paloalto" +spoke_password = "Pal0Alt0@123" +spoke_udrs = ["0.0.0.0/0", "10.1.0.0/16", "10.2.0.0/16"] diff --git a/azure/transit_2fw_2spoke_common_appgw/variables.tf b/azure/transit_2fw_2spoke_common_appgw/variables.tf new file mode 100644 index 00000000..4899c169 --- /dev/null +++ b/azure/transit_2fw_2spoke_common_appgw/variables.tf @@ -0,0 +1,129 @@ +variable location { + description = "Enter a location" +} + +variable fw_prefix { + description = "Prefix to add to all resources added in the firewall resource group" + default = "" +} + +variable fw_license { + description = "VM-Series license: byol, bundle1, or bundle2" + # default = "byol" + # default = "bundle1" + # default = "bundle2" +} + +variable global_prefix { + description = "Prefix to add to all resource groups created. This is useful to create unique resource groups within a shared Azure subscription" +} +#----------------------------------------------------------------------------------------------------------------- +# Transit VNET variables + +variable transit_prefix { +} + +variable transit_vnet_cidr { +} + +variable transit_subnet_names { + type = list(string) +} + +variable transit_subnet_cidrs { + type = list(string) +} + +#----------------------------------------------------------------------------------------------------------------- +# VM-Series variables + +variable fw_count { +} + +variable fw_nsg_prefix { +} + +variable fw_panos { +} + +variable fw_username { +} + +variable fw_password { +} + +variable fw_internal_lb_ip { +} + +#----------------------------------------------------------------------------------------------------------------- +# Spoke variables + +variable spoke_username { +} + +variable spoke_password { +} + +variable spoke_udrs { +} + +variable spoke1_prefix { + description = "Prefix to add to all resources added in spoke1's resource group" +} + +variable spoke1_vm_count { +} + 
+variable spoke1_vnet_cidr { +} + +variable spoke1_subnet_cidrs { + type = list(string) +} + +variable spoke1_internal_lb_ip { +} + +variable spoke2_prefix { + description = "Prefix to add to all resources added in spoke2's resource group" +} + +variable spoke2_vm_count { +} + +variable spoke2_vnet_cidr { +} + +variable spoke2_subnet_cidrs { + type = list(string) +} + +variable tags { + description = "The tags to associate with newly created resources" + type = map(string) + + default = {} +} + +#----------------------------------------------------------------------------------------------------------------- +# Azure environment variables + +variable client_id { + description = "Azure client ID" + default = "" +} + +variable client_secret { + description = "Azure client secret" + default = "" +} + +variable subscription_id { + description = "Azure subscription ID" + default = "" +} + +variable tenant_id { + description = "Azure tenant ID" + default = "" +} \ No newline at end of file diff --git a/gcp/GP-NoAutoScaling/Guide.pdf b/gcp/GP-NoAutoScaling/Guide.pdf new file mode 100644 index 00000000..8b294c36 Binary files /dev/null and b/gcp/GP-NoAutoScaling/Guide.pdf differ diff --git a/gcp/GP-NoAutoScaling/README.md b/gcp/GP-NoAutoScaling/README.md new file mode 100644 index 00000000..e140aa27 --- /dev/null +++ b/gcp/GP-NoAutoScaling/README.md @@ -0,0 +1,51 @@ +# GlobalProtect in GCP + +Terraform creates a basic GlobalProtect infrastructure consisting of 1 Portal and 2 Gateways (in separate Zones) along with two test Ubuntu servers. + +Please see the [**Deployment Guide**](https://github.com/wwce/terraform/blob/master/gcp/GP-NoAutoScaling/Guide.pdf) for more information. + + +

'; +$localIPAddress = getHostByName(getHostName()); +$sourceIPAddress = getRealIpAddr(); +echo ''. "SOURCE IP" .': '. $sourceIPAddress .'

'; +echo ''. "LOCAL IP" .': '. $localIPAddress .'

'; + +$vm_name = gethostname(); +echo ''. "VM NAME" .': '. $vm_name .'

'; +echo ''. '

'; +echo ' + HEADER INFORMATION + '; +/* All $_SERVER variables prefixed with HTTP_ are the HTTP headers */ +foreach ($_SERVER as $header => $value) { + if (substr($header, 0, 5) == 'HTTP_') { + /* Strip the HTTP_ prefix from the $_SERVER variable, what remains is the header */ + $clean_header = strtolower(substr($header, 5, strlen($header))); + + /* Replace underscores by the dashes, as the browser sends them */ + $clean_header = str_replace('_', '-', $clean_header); + + /* Cleanup: standard headers are first-letter uppercase */ + $clean_header = ucwords($clean_header, " \t\r\n\f\v-"); + + /* And show'm */ + echo ''. $clean_header .': '. $value .'

'; + } +} +?> diff --git a/gcp/adv-peering-with-lbnh/scripts/webserver-startup.sh b/gcp/adv-peering-with-lbnh/scripts/webserver-startup.sh new file mode 100644 index 00000000..3576db4e --- /dev/null +++ b/gcp/adv-peering-with-lbnh/scripts/webserver-startup.sh @@ -0,0 +1,8 @@ +#!/bin/bash +until sudo apt-get update; do echo "Retrying"; sleep 2; done +until sudo apt-get install -y php; do echo "Retrying"; sleep 2; done +until sudo apt-get install -y apache2; do echo "Retrying"; sleep 2; done +until sudo apt-get install -y libapache2-mod-php; do echo "Retrying"; sleep 2; done +until sudo rm -f /var/www/html/index.html; do echo "Retrying"; sleep 2; done +until sudo wget -O /var/www/html/index.php https://raw.githubusercontent.com/wwce/terraform/master/gcp/adv_peering_2fw_2spoke/scripts/showheaders.php; do echo "Retrying"; sleep 2; done +until sudo systemctl restart apache2; do echo "Retrying"; sleep 2; done diff --git a/gcp/adv-peering-with-lbnh/spoke1.tf b/gcp/adv-peering-with-lbnh/spoke1.tf new file mode 100644 index 00000000..7cae884c --- /dev/null +++ b/gcp/adv-peering-with-lbnh/spoke1.tf @@ -0,0 +1,79 @@ +provider "google" { + credentials = "${var.spoke1_project_authfile}" + project = "${var.spoke1_project}" + region = "${var.region}" + alias = "spoke1" +} + +#************************************************************************************ +# CREATE SPOKE1 VPC & SPOKE1 VMs (w/ INTLB) +#************************************************************************************ +module "vpc_spoke1" { + source = "./modules/create_vpc/" + vpc_name = "spoke1-vpc" + subnetworks = ["spoke1-subnet"] + ip_cidrs = ["10.10.1.0/24"] + regions = ["${var.region}"] + ingress_allow_all = true + ingress_sources = ["0.0.0.0/0"] + + providers = { + google = "google.spoke1" + } +} + +module "vm_spoke1" { + source = "./modules/create_vm/" + vm_names = ["spoke1-vm1", "spoke1-vm2"] + vm_zones = ["${var.region}-a", "${var.region}-a"] + vm_machine_type = "f1-micro" + vm_image = 
"ubuntu-os-cloud/ubuntu-1604-lts" + vm_subnetworks = ["${module.vpc_spoke1.subnetwork_self_link[0]}", "${module.vpc_spoke1.subnetwork_self_link[0]}"] + vm_ssh_key = "ubuntu:${var.ubuntu_ssh_key}" + startup_script = "${file("${path.module}/scripts/webserver-startup.sh")}" // default "" - runs no startup script + + internal_lb_create = true // default false + internal_lb_name = "spoke1-intlb" // default "intlb" + internal_lb_ports = ["80", "443"] // default ["80"] + internal_lb_ip = "10.10.1.100" // default "" (assigns an any available IP in subnetwork ) + + providers = { + google = "google.spoke1" + } +} + +#************************************************************************************ +# CREATE PEERING LINK SPOKE1-to-TRUST +#************************************************************************************ +resource "google_compute_network_peering" "spoke1_to_trust" { + name = "spoke1-to-trust" + network = "${module.vpc_spoke1.vpc_self_link}" + peer_network = "${module.vpc_trust.vpc_self_link}" + + provisioner "local-exec" { + command = "sleep 45" + } + + depends_on = [ + "google_compute_network_peering.ilb_trust_to_spoke2", + ] + provider = "google.spoke1" +} +#************************************************************************************ +# CREATE PEERING LINK SPOKE1-to-ilb-TRUST +#************************************************************************************ +resource "google_compute_network_peering" "spoke1_to_ilb_trust" { + name = "spoke1-to-ilb-trust" + network = "${module.vpc_spoke1.vpc_self_link}" + peer_network = "${module.ilb_trust.vpc_self_link}" + + + provisioner "local-exec" { + command = "sleep 45" + } + + depends_on = [ + "google_compute_network_peering.spoke1_to_trust", + ] + provider = "google.spoke1" +} \ No newline at end of file diff --git a/gcp/adv-peering-with-lbnh/spoke2.tf b/gcp/adv-peering-with-lbnh/spoke2.tf new file mode 100644 index 00000000..591bdb24 --- /dev/null +++ b/gcp/adv-peering-with-lbnh/spoke2.tf @@ -0,0 
+1,72 @@ +provider "google" { + credentials = "${var.spoke2_project_authfile}" + project = "${var.spoke2_project}" + region = "${var.region}" + alias = "spoke2" +} + +#************************************************************************************ +# CREATE SPOKE2 VPC & SPOKE2 VM +#************************************************************************************ +module "vpc_spoke2" { + source = "./modules/create_vpc/" + vpc_name = "spoke2-vpc" + subnetworks = ["spoke2-subnet"] + ip_cidrs = ["10.10.2.0/24"] + regions = ["${var.region}"] + ingress_allow_all = true + ingress_sources = ["0.0.0.0/0"] + + providers = { + google = "google.spoke2" + } +} + +module "vm_spoke2" { + source = "./modules/create_vm/" + vm_names = ["spoke2-vm1"] + vm_zones = ["${var.region}-a"] + vm_machine_type = "f1-micro" + vm_image = "ubuntu-os-cloud/ubuntu-1604-lts" + vm_subnetworks = ["${module.vpc_spoke2.subnetwork_self_link[0]}"] + vm_ssh_key = "ubuntu:${var.ubuntu_ssh_key}" + + providers = { + google = "google.spoke2" + } +} + +#************************************************************************************ +# CREATE PEERING LINK SPOKE2-to-TRUST +#************************************************************************************ +resource "google_compute_network_peering" "spoke2_to_trust" { + name = "spoke2-to-trust" + network = "${module.vpc_spoke2.vpc_self_link}" + peer_network = "${module.vpc_trust.vpc_self_link}" + + provisioner "local-exec" { + command = "sleep 45" + } + + depends_on = [ + "google_compute_network_peering.spoke1_to_ilb_trust", + ] + provider = "google.spoke2" +} +#************************************************************************************ +# CREATE PEERING LINK SPOKE2-to-ilb-TRUST +#************************************************************************************ +resource "google_compute_network_peering" "spoke2_to_ilb_trust" { + name = "spoke2-to-ilb-trust" + network = "${module.vpc_spoke2.vpc_self_link}" + peer_network = 
"${module.ilb_trust.vpc_self_link}" + + provisioner "local-exec" { + command = "sleep 45" + } + + depends_on = [ + "google_compute_network_peering.spoke2_to_trust", + ] + provider = "google.spoke2" +} diff --git a/gcp/adv-peering-with-lbnh/variables.tf b/gcp/adv-peering-with-lbnh/variables.tf new file mode 100644 index 00000000..0b4fda73 --- /dev/null +++ b/gcp/adv-peering-with-lbnh/variables.tf @@ -0,0 +1,70 @@ +#************************************************************************************ +# GCP VARIABLES +#************************************************************************************ +variable enable_ilbnh { + default = true +} + +variable "region" { + default = "us-central1" +} + +#************************************************************************************ +# main.tf PROJECT ID & AUTHFILE +#************************************************************************************ +variable "main_project" { + description = "Existing project ID for main project (all resources deployed in main.tf)" + default = "ilb-2019" +} + +variable "main_project_authfile" { + description = "Authentication file for main project (all resources deployed in main.tf)" + default = "/Users/dspears/GCP/ilb-2019-key.json" +} + +#************************************************************************************ +# spoke1.tf PROJECT ID & AUTHFILE +#************************************************************************************ +variable "spoke1_project" { + description = "Existing project for spoke1 (can be the same as main project and can be same as main project)." 
+ default = "ilb-2019" +} + +variable "spoke1_project_authfile" { + description = "Authentication file for spoke1 project (all resources deployed in spoke1.tf)" + default = "/Users/dspears/GCP/ilb-2019-key.json" +} + +#************************************************************************************ +# spoke2.tf PROJECT ID & AUTHFILE +#************************************************************************************ +variable "spoke2_project" { + description = "Existing project for spoke2 (can be the same as the main project)." + default = "ilb-2019" +} + +variable "spoke2_project_authfile" { + description = "Authentication file for spoke2 project (all resources deployed in spoke2.tf and can be same as main project)" + default = "/Users/dspears/GCP/ilb-2019-key.json" +} + +#************************************************************************************ +# VMSERIES SSH KEY & IMAGE (not required if bootstrapping) +#************************************************************************************ +variable "vmseries_ssh_key" { + default = "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAAXsXFhJABLkPEsF2NC/oLJ5sj/cZDXso+qPy30nllU5w== davespears@gmail.com" +} + +#************************************************************************************ +# UBUNTU SSH KEY +#************************************************************************************ +variable "ubuntu_ssh_key" { + default = "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAAXsXFhJABLkPEsF2NC/oLJ5sj/cZDXso+qPy30nllU5w== davespears@gmail.com" + } + +variable "vmseries_image" { + # default = "https://www.googleapis.com/compute/v1/projects/paloaltonetworksgcp-public/global/images/vmseries-byol-814" + default = "https://www.googleapis.com/compute/v1/projects/paloaltonetworksgcp-public/global/images/vmseries-bundle1-814" + + # default = "https://www.googleapis.com/compute/v1/projects/paloaltonetworksgcp-public/global/images/vmseries-bundle2-814" +} diff --git a/gcp/adv_peering_2fw_2spoke/README.md 
b/gcp/adv_peering_2fw_2spoke/README.md new file mode 100644 index 00000000..40a0f00a --- /dev/null +++ b/gcp/adv_peering_2fw_2spoke/README.md @@ -0,0 +1,72 @@ +## 2 x VM-Series / 2 x Spoke VPCs via Advanced Peering +Terraform creates 2 VM-Series firewalls that secure ingress/egress traffic for 2 spoke VPCs. The spoke VPCs are connected (via VPC Peering) to the VM-Series trust VPC. After the build completes, several manual changes must be performed to enable transitive routing. These changes are manual because Terraform cannot perform them yet. + +### Overview +* 5 x VPCs (mgmt, untrust, trust, spoke1, & spoke2) with relevant peering connections +* 2 x VM-Series (BYOL / Bundle1 / Bundle2) +* 2 x Ubuntu VMs in spoke1 VPC (Apache is installed during creation) +* 1 x Ubuntu VM in spoke2 VPC +* 1 x GCP Public Load Balancer (VM-Series as backend) +* 1 x GCP Internal Load Balancer (spoke1 VMs as backend) +* 1 x GCP Storage Bucket for VM-Series bootstrapping (random string appended to bucket name for global uniqueness) + +
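The last item in the overview, a bootstrap bucket whose name gets a random suffix, can be pictured with the minimal sketch below. It is an illustration only: the resource names, the bucket name prefix, and the location are assumptions, and the modules in this build may generate the suffix differently.

```hcl
# Hypothetical sketch: resource names, prefix, and location are assumptions.
resource "random_id" "bucket_suffix" {
  byte_length = 4 # rendered as 8 hex characters via the .hex attribute
}

resource "google_storage_bucket" "bootstrap" {
  # Bucket names are global, so the random suffix avoids collisions.
  name          = "vmseries-bootstrap-${random_id.bucket_suffix.hex}"
  location      = "US"
  force_destroy = true # lets terraform destroy remove a non-empty bucket
}
```

Because the suffix is stored in state, the bucket name stays stable across later `terraform apply` runs.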

'; +$localIPAddress = getHostByName(getHostName()); +$sourceIPAddress = getRealIpAddr(); +echo ''. "SOURCE IP" .': '. $sourceIPAddress .'

'; +echo ''. "LOCAL IP" .': '. $localIPAddress .'

'; + +$vm_name = gethostname(); +echo ''. "VM NAME" .': '. $vm_name .'

'; +echo ''. '

'; +echo ' + HEADER INFORMATION + '; +/* All $_SERVER variables prefixed with HTTP_ are the HTTP headers */ +foreach ($_SERVER as $header => $value) { + if (substr($header, 0, 5) == 'HTTP_') { + /* Strip the HTTP_ prefix from the $_SERVER variable, what remains is the header */ + $clean_header = strtolower(substr($header, 5, strlen($header))); + + /* Replace underscores by the dashes, as the browser sends them */ + $clean_header = str_replace('_', '-', $clean_header); + + /* Cleanup: standard headers are first-letter uppercase */ + $clean_header = ucwords($clean_header, " \t\r\n\f\v-"); + + /* And show'm */ + echo ''. $clean_header .': '. $value .'

'; + } +} +?> diff --git a/gcp/adv_peering_2fw_2spoke/scripts/webserver-startup.sh b/gcp/adv_peering_2fw_2spoke/scripts/webserver-startup.sh new file mode 100644 index 00000000..1349754f --- /dev/null +++ b/gcp/adv_peering_2fw_2spoke/scripts/webserver-startup.sh @@ -0,0 +1,7 @@ +#!/bin/bash +until sudo apt-get update; do echo "Retrying"; sleep 2; done +until sudo apt-get install -y php; do echo "Retrying"; sleep 2; done +until sudo apt-get install -y apache2 libapache2-mod-php; do echo "Retrying"; sleep 2; done +until sudo rm -f /var/www/html/index.html; do echo "Retrying"; sleep 2; done +until sudo wget -O /var/www/html/index.php https://raw.githubusercontent.com/wwce/terraform/master/gcp/adv_peering_2fw_2spoke/scripts/showheaders.php; do echo "Retrying"; sleep 2; done +until sudo systemctl restart apache2; do echo "Retrying"; sleep 2; done diff --git a/gcp/adv_peering_2fw_2spoke/spoke1.tf b/gcp/adv_peering_2fw_2spoke/spoke1.tf new file mode 100644 index 00000000..fbbe81ec --- /dev/null +++ b/gcp/adv_peering_2fw_2spoke/spoke1.tf @@ -0,0 +1,61 @@ +provider "google" { + credentials = "${var.spoke1_project_authfile}" + project = "${var.spoke1_project}" + region = "${var.region}" + alias = "spoke1" +} + +#************************************************************************************ +# CREATE SPOKE1 VPC & SPOKE1 VMs (w/ INTLB) +#************************************************************************************ +module "vpc_spoke1" { + source = "./modules/create_vpc/" + vpc_name = "spoke1-vpc" + subnetworks = ["spoke1-subnet"] + ip_cidrs = ["10.10.1.0/24"] + regions = ["${var.region}"] + ingress_allow_all = true + ingress_sources = ["0.0.0.0/0"] + + providers = { + google = "google.spoke1" + } +} + +module "vm_spoke1" { + source = "./modules/create_vm/" + vm_names = ["spoke1-vm1", "spoke1-vm2"] + vm_zones = ["${var.region}-a", "${var.region}-a"] + vm_machine_type = "f1-micro" + vm_image = 
["${module.vpc_spoke1.subnetwork_self_link[0]}", "${module.vpc_spoke1.subnetwork_self_link[0]}"] + vm_ssh_key = "ubuntu:${var.ubuntu_ssh_key}" + startup_script = "${file("${path.module}/scripts/webserver-startup.sh")}" // default "" - runs no startup script + + internal_lb_create = true // default false + internal_lb_name = "spoke1-intlb" // default "intlb" + internal_lb_ports = ["80", "443"] // default ["80"] + internal_lb_ip = "10.10.1.100" // default "" (assigns an any available IP in subnetwork ) + + providers = { + google = "google.spoke1" + } +} + +#************************************************************************************ +# CREATE PEERING LINK SPOKE1-to-TRUST +#************************************************************************************ +resource "google_compute_network_peering" "spoke1_to_trust" { + name = "spoke1-to-trust" + network = "${module.vpc_spoke1.vpc_self_link}" + peer_network = "${module.vpc_trust.vpc_self_link}" + + provisioner "local-exec" { + command = "sleep 45" + } + + depends_on = [ + "google_compute_network_peering.trust_to_spoke2", + ] + provider = "google.spoke1" +} diff --git a/gcp/adv_peering_2fw_2spoke/spoke2.tf b/gcp/adv_peering_2fw_2spoke/spoke2.tf new file mode 100644 index 00000000..718ff170 --- /dev/null +++ b/gcp/adv_peering_2fw_2spoke/spoke2.tf @@ -0,0 +1,55 @@ +provider "google" { + credentials = "${var.spoke2_project_authfile}" + project = "${var.spoke2_project}" + region = "${var.region}" + alias = "spoke2" +} + +#************************************************************************************ +# CREATE SPOKE2 VPC & SPOKE2 VM +#************************************************************************************ +module "vpc_spoke2" { + source = "./modules/create_vpc/" + vpc_name = "spoke2-vpc" + subnetworks = ["spoke2-subnet"] + ip_cidrs = ["10.10.2.0/24"] + regions = ["${var.region}"] + ingress_allow_all = true + ingress_sources = ["0.0.0.0/0"] + + providers = { + google = "google.spoke2" + } +} + 
+module "vm_spoke2" { + source = "./modules/create_vm/" + vm_names = ["spoke2-vm1"] + vm_zones = ["${var.region}-a"] + vm_machine_type = "f1-micro" + vm_image = "ubuntu-os-cloud/ubuntu-1604-lts" + vm_subnetworks = ["${module.vpc_spoke2.subnetwork_self_link[0]}"] + vm_ssh_key = "ubuntu:${var.ubuntu_ssh_key}" + + providers = { + google = "google.spoke2" + } +} + +#************************************************************************************ +# CREATE PEERING LINK SPOKE2-to-TRUST +#************************************************************************************ +resource "google_compute_network_peering" "spoke2_to_trust" { + name = "spoke2-to-trust" + network = "${module.vpc_spoke2.vpc_self_link}" + peer_network = "${module.vpc_trust.vpc_self_link}" + + provisioner "local-exec" { + command = "sleep 45" + } + + depends_on = [ + "google_compute_network_peering.spoke1_to_trust", + ] + provider = "google.spoke2" +} diff --git a/gcp/adv_peering_2fw_2spoke/variables.tf b/gcp/adv_peering_2fw_2spoke/variables.tf new file mode 100644 index 00000000..f273131f --- /dev/null +++ b/gcp/adv_peering_2fw_2spoke/variables.tf @@ -0,0 +1,65 @@ +#************************************************************************************ +# GCP VARIABLES +#************************************************************************************ +variable "region" { + default = "us-east4" +} + +#************************************************************************************ +# main.tf PROJECT ID & AUTHFILE +#************************************************************************************ +variable "main_project" { + description = "Existing project ID for main project (all resources deployed in main.tf)" + default = "host-project-242119" +} + +variable "main_project_authfile" { + description = "Authentication file for main project (all resources deployed in main.tf)" + default = "host-project-b533f464016c.json" +} + 
+#************************************************************************************ +# spoke1.tf PROJECT ID & AUTHFILE +#************************************************************************************ +variable "spoke1_project" { + description = "Existing project for spoke1 (can be the same as the main project)." + default = "host-project-242119" +} + +variable "spoke1_project_authfile" { + description = "Authentication file for spoke1 project (all resources deployed in spoke1.tf)" + default = "host-project-b533f464016c.json" +} + +#************************************************************************************ +# spoke2.tf PROJECT ID & AUTHFILE +#************************************************************************************ +variable "spoke2_project" { + description = "Existing project for spoke2 (can be the same as the main project)." + default = "host-project-242119" +} + +variable "spoke2_project_authfile" { + description = "Authentication file for spoke2 project (all resources deployed in spoke2.tf and can be same as main project)" + default = "host-project-b533f464016c.json" +} + +#************************************************************************************ +# VMSERIES SSH KEY & IMAGE (not required if bootstrapping) +#************************************************************************************ +variable "vmseries_ssh_key" { + default = "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDa7UUo1v42jebXVHlBof9E9GAFfalTndZQmvlmFu9e88euqrLI4xEZwg9ihwPFVTXOmrAogye6ojv5rbf3f13ZFYB+USjcR/9RFX+DKkPmXluC5Xq3z0ZlxY3QETHSlr6G8pfEqNwFebYJmKZ1MVNUztmb1DTIhjbFN4IAK/8NzQTbOYnEbXV4BB9E9Xe7dtuDuQrgaoII7KITnYdY4tjI10/K01Ay52PC7eISvZBRZntto2Mg1WjWQAwyIJHFC8nXoE04Wbzv91ohLfs/Og/dSOhdFymX1KVx5XSZWZ0POEOFY3rsDHFDrMiZIxipfuvBtEsznExp7ybkIDtWOxNX admin" +} + +#************************************************************************************ +# UBUNTU SSH KEY 
+#************************************************************************************ +variable "ubuntu_ssh_key" { + default = "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDk7y0D0Rz4F5J9Lu7gtTRTaEkJdWNLpmnDXcvHvaNC3euQ0KITIU6XaPHlXiB1M8pCrmBw3CFkFLxnPoGHrcN39wi2BR9d6Y1piz1v0gJqbggdMloSnrz51OVPqqC5BjtN/lB9hTcyNrh4MDfv37sRChHJb31s934vbj+qeiR16ZeLHH5moRXnyuzIvVUePnXHZvYz0M+YxJtvf806cz+Dvio72Y5g69/DUWReTNZ3h51MKseYMJT0Uu7mPJUZlH+xURc8zzzFazTE1jD7qL2z497si7oVHzmHm5nCECNayore3jzp5YYQkzEfe2fujxeM4UGlEBYuMkUxlH8QV5qN ubuntu" +} + +variable "vmseries_image" { + # default = "https://www.googleapis.com/compute/v1/projects/paloaltonetworksgcp-public/global/images/vmseries-byol-814" + default = "https://www.googleapis.com/compute/v1/projects/paloaltonetworksgcp-public/global/images/vmseries-bundle1-814" + # default = "https://www.googleapis.com/compute/v1/projects/paloaltonetworksgcp-public/global/images/vmseries-bundle2-814" +} diff --git a/gcp/adv_peering_2fw_2spoke_common/GUIDE.pdf b/gcp/adv_peering_2fw_2spoke_common/GUIDE.pdf new file mode 100644 index 00000000..ef6db3f1 Binary files /dev/null and b/gcp/adv_peering_2fw_2spoke_common/GUIDE.pdf differ diff --git a/gcp/adv_peering_2fw_2spoke_common/README.md b/gcp/adv_peering_2fw_2spoke_common/README.md new file mode 100644 index 00000000..0ac8b6bc --- /dev/null +++ b/gcp/adv_peering_2fw_2spoke_common/README.md @@ -0,0 +1,59 @@ +# 2 x VM-Series / Public LB / Internal LB / 2 x Spoke VPCs + +Terraform creates 2 VM-Series firewalls that secure ingress/egress traffic from spoke VPCs. The spoke VPCs are connected (via VPC Peering) to the VM-Series trust VPC. All TCP/UDP traffic originating from the spokes is routed to internal load balancers in the trust VPC. + +Please see the [**Deployment Guide**](https://github.com/wwce/terraform/blob/master/gcp/adv_peering_2fw_2spoke_common/GUIDE.pdf) for more information. + + +
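The routing described in this README (spoke traffic steered to an internal load balancer in the trust VPC) could be expressed roughly as the sketch below. This is an assumption about the general technique, not the code used by this build: the route name, the priority, and both input variables are hypothetical.

```hcl
# Hypothetical sketch: variable and resource names are illustrative only.
variable "trust_vpc_self_link" {
  description = "Self link of the trust VPC (assumed input)"
}

variable "intlb_forwarding_rule" {
  description = "Self link of the internal LB forwarding rule (assumed input)"
}

# A custom default route in the trust VPC that points at the internal LB.
# When the trust-to-spoke peering exports custom routes and the spoke side
# imports them, spoke traffic follows this route to the firewalls.
resource "google_compute_route" "default_via_intlb" {
  name         = "trust-default-via-intlb"
  network      = var.trust_vpc_self_link
  dest_range   = "0.0.0.0/0"
  priority     = 100
  next_hop_ilb = var.intlb_forwarding_rule
}
```

This pairs with `export_custom_routes` and `import_custom_routes` on the peering resources, and with `delete_default_route = true` on the spoke VPCs so that the imported route is the only default path.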

+Your terraform.tfvars should look like this before proceeding
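As a rough guide, the top of terraform.tfvars might look like the sketch below once the commented-out settings are filled in. The project ID is a placeholder and the BYOL choice is only one of the three options; exactly one `fw_panos` line should be uncommented.

```hcl
# Hypothetical values: replace the project ID and key path with your own.
project_id      = "my-gcp-project-id"   # placeholder, not a real project
public_key_path = "~/.ssh/gcp-demo.pub" # path from the commented default

# Uncomment exactly one fw_panos line to select license and PAN-OS version.
fw_panos = "byol-904"      # PAN-OS 9.0.4 - BYOL
#fw_panos = "bundle1-904"  # PAN-OS 9.0.4 - PAYG Bundle 1
#fw_panos = "bundle2-904"  # PAN-OS 9.0.4 - PAYG Bundle 2
```

The remaining values in the file (regions, VPC names, CIDRs, and VM names) can stay at their defaults for a first deployment.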

'; +$localIPAddress = getHostByName(getHostName()); +$sourceIPAddress = getRealIpAddr(); +echo ''. "SOURCE IP" .': '. $sourceIPAddress .'

'; +echo ''. "LOCAL IP" .': '. $localIPAddress .'

'; + +$vm_name = gethostname(); +echo ''. "VM NAME" .': '. $vm_name .'

'; +echo ''. '

'; +echo ' + HEADER INFORMATION + '; +/* All $_SERVER variables prefixed with HTTP_ are the HTTP headers */ +foreach ($_SERVER as $header => $value) { + if (substr($header, 0, 5) == 'HTTP_') { + /* Strip the HTTP_ prefix from the $_SERVER variable, what remains is the header */ + $clean_header = strtolower(substr($header, 5, strlen($header))); + + /* Replace underscores by the dashes, as the browser sends them */ + $clean_header = str_replace('_', '-', $clean_header); + + /* Cleanup: standard headers are first-letter uppercase */ + $clean_header = ucwords($clean_header, " \t\r\n\f\v-"); + + /* And show'm */ + echo ''. $clean_header .': '. $value .'

'; + } +} +?> diff --git a/gcp/adv_peering_2fw_2spoke_common/scripts/webserver-startup.sh b/gcp/adv_peering_2fw_2spoke_common/scripts/webserver-startup.sh new file mode 100644 index 00000000..f799d23d --- /dev/null +++ b/gcp/adv_peering_2fw_2spoke_common/scripts/webserver-startup.sh @@ -0,0 +1,9 @@ +#!/bin/bash +sleep 120; +until sudo apt-get update; do echo "Retrying"; sleep 5; done +until sudo apt-get install -y php; do echo "Retrying"; sleep 5; done +until sudo apt-get install -y apache2; do echo "Retrying"; sleep 5; done +until sudo apt-get install -y libapache2-mod-php; do echo "Retrying"; sleep 5; done +until sudo rm -f /var/www/html/index.html; do echo "Retrying"; sleep 5; done +until sudo wget -O /var/www/html/index.php https://raw.githubusercontent.com/wwce/terraform/master/gcp/adv_peering_2fw_2spoke_common/scripts/showheaders.php; do echo "Retrying"; sleep 2; done +until sudo systemctl restart apache2; do echo "Retrying"; sleep 5; done diff --git a/gcp/adv_peering_2fw_2spoke_common/spokes.tf b/gcp/adv_peering_2fw_2spoke_common/spokes.tf new file mode 100644 index 00000000..f808f011 --- /dev/null +++ b/gcp/adv_peering_2fw_2spoke_common/spokes.tf @@ -0,0 +1,113 @@ +#----------------------------------------------------------------------------------------------- +# Create spoke1 vpc with 2 web VMs (with internal LB). Create peer link with trust VPC. +module "vpc_spoke1" { + source = "./modules/vpc/" + vpc = var.spoke1_vpc + subnets = var.spoke1_subnets + cidrs = var.spoke1_cidrs + regions = var.regions + allowed_sources = ["0.0.0.0/0"] + delete_default_route = true +} + +module "vm_spoke1" { + source = "./modules/vm/" + names = var.spoke1_vms + zones = [ + data.google_compute_zones.available.names[0], + data.google_compute_zones.available.names[1] + ] + subnetworks = [module.vpc_spoke1.subnetwork_self_link[0]] + machine_type = "f1-micro" + image = "ubuntu-os-cloud/ubuntu-1604-lts" + create_instance_group = true + ssh_key = fileexists(var.public_key_path) ? 
"${var.spoke_user}:${file(var.public_key_path)}" : "" + startup_script = file("${path.module}/scripts/webserver-startup.sh") +} + +module "ilb_web" { + source = "./modules/lb_tcp_internal/" + name = var.spoke1_ilb + subnetworks = [module.vpc_spoke1.subnetwork_self_link[0]] + all_ports = false + ports = ["80"] + health_check_port = "80" + ip_address = var.spoke1_ilb_ip + + backends = { + "0" = [ + { + group = module.vm_spoke1.instance_group[0] + failover = false + }, + { + group = module.vm_spoke1.instance_group[1] + failover = false + } + ] + } + providers = { + google = google-beta + } +} + +resource "google_compute_network_peering" "trust_to_spoke1" { + name = "${var.trust_vpc}-to-${var.spoke1_vpc}" + provider = google-beta + network = module.vpc_trust.vpc_self_link + peer_network = module.vpc_spoke1.vpc_self_link + export_custom_routes = true +} + +resource "google_compute_network_peering" "spoke1_to_trust" { + name = "${var.spoke1_vpc}-to-${var.trust_vpc}" + provider = google-beta + network = module.vpc_spoke1.vpc_self_link + peer_network = module.vpc_trust.vpc_self_link + import_custom_routes = true + + depends_on = [google_compute_network_peering.trust_to_spoke1] +} + +#----------------------------------------------------------------------------------------------- +# Create spoke2 vpc with VM. Create peer link with trust VPC. +module "vpc_spoke2" { + source = "./modules/vpc/" + vpc = var.spoke2_vpc + subnets = var.spoke2_subnets + cidrs = var.spoke2_cidrs + regions = var.regions + allowed_sources = ["0.0.0.0/0"] + delete_default_route = true +} + +module "vm_spoke2" { + source = "./modules/vm/" + names = var.spoke2_vms + zones = [data.google_compute_zones.available.names[0]] + machine_type = "f1-micro" + image = "ubuntu-os-cloud/ubuntu-1604-lts" + subnetworks = [module.vpc_spoke2.subnetwork_self_link[0]] + ssh_key = fileexists(var.public_key_path) ? 
"${var.spoke_user}:${file(var.public_key_path)}" : "" +} + +resource "google_compute_network_peering" "trust_to_spoke2" { + name = "${var.trust_vpc}-to-${var.spoke2_vpc}" + provider = google-beta + network = module.vpc_trust.vpc_self_link + peer_network = module.vpc_spoke2.vpc_self_link + export_custom_routes = true + + depends_on = [google_compute_network_peering.spoke1_to_trust] +} + +resource "google_compute_network_peering" "spoke2_to_trust" { + name = "${var.spoke2_vpc}-to-${var.trust_vpc}" + provider = google-beta + network = module.vpc_spoke2.vpc_self_link + peer_network = module.vpc_trust.vpc_self_link + import_custom_routes = true + + depends_on = [google_compute_network_peering.trust_to_spoke2] +} + diff --git a/gcp/adv_peering_2fw_2spoke_common/terraform.tfvars b/gcp/adv_peering_2fw_2spoke_common/terraform.tfvars new file mode 100644 index 00000000..74734aad --- /dev/null +++ b/gcp/adv_peering_2fw_2spoke_common/terraform.tfvars @@ -0,0 +1,42 @@ +#project_id = "" # Your project ID for the deployment +#public_key_path = "~/.ssh/gcp-demo.pub" # Your SSH Key + +#fw_panos = "byol-904" # Uncomment for PAN-OS 9.0.4 - BYOL +#fw_panos = "bundle1-904" # Uncomment for PAN-OS 9.0.4 - PAYG Bundle 1 +#fw_panos = "bundle2-904" # Uncomment for PAN-OS 9.0.4 - PAYG Bundle 2 + + +#------------------------------------------------------------------- +regions = ["us-east4"] + +mgmt_vpc = "mgmt-vpc" +mgmt_subnet = ["mgmt"] +mgmt_cidr = ["192.168.0.0/24"] +mgmt_sources = ["0.0.0.0/0"] + +untrust_vpc = "untrust-vpc" +untrust_subnet = ["untrust"] +untrust_cidr = ["192.168.1.0/24"] + +trust_vpc = "trust-vpc" +trust_subnet = ["trust"] +trust_cidr = ["192.168.2.0/24"] + +spoke1_vpc = "spoke1-vpc" +spoke1_subnets = ["spoke1-subnet1"] +spoke1_cidrs = ["10.1.0.0/24"] +spoke1_vms = ["spoke1-vm1", "spoke1-vm2"] +spoke1_ilb = "spoke1-intlb" +spoke1_ilb_ip = "10.1.0.100" + +spoke2_vpc = "spoke2-vpc" +spoke2_subnets = ["spoke2-subnet1"] +spoke2_cidrs = ["10.2.0.0/24"] +spoke2_vms = 
["spoke2-vm1"] +spoke_user = "demo" + +fw_names_common = ["vmseries01", "vmseries02"] +fw_machine_type = "n1-standard-4" + +extlb_name = "vmseries-extlb" +intlb_name = "vmseries-intlb" diff --git a/gcp/adv_peering_2fw_2spoke_common/variables.tf b/gcp/adv_peering_2fw_2spoke_common/variables.tf new file mode 100644 index 00000000..a33106a5 --- /dev/null +++ b/gcp/adv_peering_2fw_2spoke_common/variables.tf @@ -0,0 +1,113 @@ +variable project_id { + description = "GCP Project ID" +} + +variable auth_file { + description = "GCP Project auth file" + default = "" +} + +variable regions { +} + +variable fw_panos { + description = "VM-Series license and PAN-OS (ie: bundle1-814, bundle2-814, or byol-814)" +} + +variable fw_image { + default = "https://www.googleapis.com/compute/v1/projects/paloaltonetworksgcp-public/global/images/vmseries" +} + +variable fw_names_common { + type = list(string) +} + +variable fw_machine_type { +} + +variable extlb_name { +} + +variable intlb_name { +} + +variable mgmt_vpc { +} + +variable mgmt_subnet { + type = list(string) +} + +variable mgmt_cidr { + type = list(string) +} + +variable untrust_vpc { +} + +variable untrust_subnet { + type = list(string) +} + +variable untrust_cidr { + type = list(string) +} + +variable trust_vpc { +} + +variable trust_subnet { + type = list(string) +} + +variable trust_cidr { + type = list(string) +} + +variable mgmt_sources { + type = list(string) +} + +variable spoke1_vpc { +} + +variable spoke1_subnets { + type = list(string) +} + +variable spoke1_cidrs { + type = list(string) +} + +variable spoke1_vms { + type = list(string) +} + +variable spoke1_ilb { +} + +variable spoke1_ilb_ip { +} + +variable spoke2_vpc { +} + +variable spoke2_subnets { + type = list(string) +} + +variable spoke2_cidrs { + type = list(string) +} + +variable spoke2_vms { + type = list(string) +} + +variable spoke_user { + description = "SSH user for spoke Linux VM" +} + +variable public_key_path { + description = "Local path to public 
SSH key. If you do not have a public key, run >> ssh-keygen -f ~/.ssh/demo-key -t rsa -C admin" +} diff --git a/gcp/adv_peering_4fw_2spoke/README.md b/gcp/adv_peering_4fw_2spoke/README.md new file mode 100644 index 00000000..c07f719e --- /dev/null +++ b/gcp/adv_peering_4fw_2spoke/README.md @@ -0,0 +1,40 @@ +## 4 x VM-Series / 2 x Spoke VPCs via Advanced Peering / ILBNH + +Terraform creates 4 VM-Series firewalls that secure ingress/egress traffic from spoke VPCs. The spoke VPCs are connected (via VPC Peering) to the VM-Series trust VPC. All TCP/UDP traffic originating from the spokes is routed to the internal load balancers. + +Please see the [**Deployment Guide**](https://github.com/wwce/terraform/blob/master/gcp/adv_peering_4fw_2spoke/guide.pdf) for more information. + +### Diagram + +

+ +

+

';
+$localIPAddress = getHostByName(getHostName());
+$sourceIPAddress = getRealIpAddr();
+echo ''. "SOURCE IP" .': '. $sourceIPAddress .'';
+echo ''. "LOCAL IP" .': '. $localIPAddress .'';
+
+$vm_name = gethostname();
+echo ''. "VM NAME" .': '. $vm_name .'';
+echo ''. '';
+echo '
+  HEADER INFORMATION
+  ';
+/* All $_SERVER variables prefixed with HTTP_ are the HTTP headers */
+foreach ($_SERVER as $header => $value) {
+    if (substr($header, 0, 5) == 'HTTP_') {
+        /* Strip the HTTP_ prefix from the $_SERVER variable, what remains is the header */
+        $clean_header = strtolower(substr($header, 5, strlen($header)));
+
+        /* Replace underscores by the dashes, as the browser sends them */
+        $clean_header = str_replace('_', '-', $clean_header);
+
+        /* Cleanup: standard headers are first-letter uppercase */
+        $clean_header = ucwords($clean_header, " \t\r\n\f\v-");
+
+        /* And show'm */
+        echo ''. $header .': '. $value .'