
The CrabNet matbench notebook uses train/val/test splits. However, if #15 (comment) is correct that the validation data (i.e. `val.csv`) doesn't contribute to hyperparameter tuning, then that 25% of the training data is essentially being thrown away, correct?

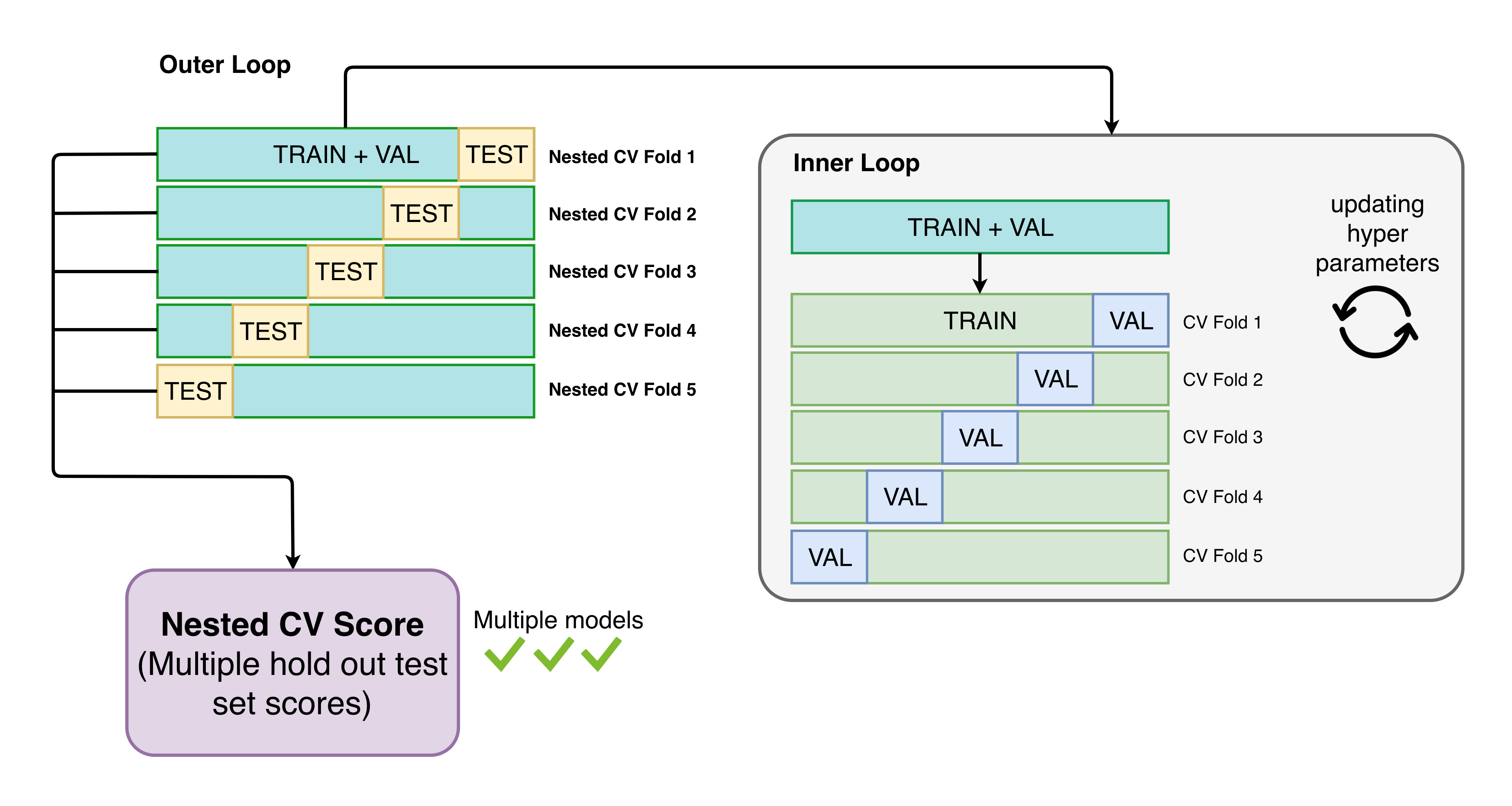

In other words, the CrabNet results are based on only 75% of the training data that the other matbench models use for training. From what I understand, a train/val/test split only really makes sense in the context of matbench if you're doing hyperparameter optimization in a nested CV scheme, as follows:

(Source: https://hackingmaterials.lbl.gov/automatminer/advanced.html)
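To make the nested CV argument concrete, here is a minimal stdlib-only sketch. It is not automatminer's or CrabNet's code: the toy 1-D k-nearest-neighbour regressor, the candidate `k` values, and the helper names (`kfold_indices`, `knn_predict`, `nested_cv`) are all hypothetical stand-ins. The point it shows is that validation folds are used only inside the inner loop to pick a hyperparameter, and the model for each outer fold is then refit on *all* of the outer-training data, so no training data is permanently discarded.

```python
import random
from statistics import mean

def kfold_indices(n, k, seed=0):
    """Split range(n) into k shuffled, disjoint folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]

def knn_predict(train_x, train_y, x, k):
    """Toy 1-D k-nearest-neighbour regressor (hypothetical stand-in for a real model)."""
    nearest = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))[:k]
    return mean(train_y[i] for i in nearest)

def mae(xs_tr, ys_tr, xs_te, ys_te, k):
    """Mean absolute error of the toy model fit on (xs_tr, ys_tr), scored on (xs_te, ys_te)."""
    return mean(abs(knn_predict(xs_tr, ys_tr, x, k) - y) for x, y in zip(xs_te, ys_te))

def nested_cv(xs, ys, candidate_ks, outer=5, inner=4):
    outer_scores = []
    for test_fold in kfold_indices(len(xs), outer, seed=1):
        test_set = set(test_fold)
        train_idx = [i for i in range(len(xs)) if i not in test_set]

        # Inner loop: tune k using only the outer-training data.
        def inner_score(k):
            scores = []
            for val_fold in kfold_indices(len(train_idx), inner, seed=2):
                val_pos = set(val_fold)
                fit = [train_idx[p] for p in range(len(train_idx)) if p not in val_pos]
                val = [train_idx[p] for p in val_fold]
                scores.append(mae([xs[i] for i in fit], [ys[i] for i in fit],
                                  [xs[i] for i in val], [ys[i] for i in val], k))
            return mean(scores)

        best_k = min(candidate_ks, key=inner_score)
        # Refit on ALL outer-training data with the chosen k, then score on the outer test fold.
        outer_scores.append(mae([xs[i] for i in train_idx], [ys[i] for i in train_idx],
                                [xs[i] for i in test_fold], [ys[i] for i in test_fold], best_k))
    return mean(outer_scores)

# Hypothetical synthetic data, only for illustration.
rng = random.Random(0)
xs = [rng.uniform(0, 10) for _ in range(60)]
ys = [x ** 0.5 + rng.gauss(0, 0.1) for x in xs]
score = nested_cv(xs, ys, candidate_ks=[1, 3, 5, 9])
print(score)
```

If no hyperparameters are tuned at all, the inner loop disappears and the outer loop alone remains, which is the situation the question above describes.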

To correct this, I think all that needs to be done is change:

```python
# split_train_val splits the training data into two sets: training and validation
def split_train_val(df):
    df = df.sample(frac=1.0, random_state=7)
    val_df = df.sample(frac=0.25, random_state=7)
    train_df = df.drop(val_df.index)
    return train_df, val_df
```
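A quick way to see the 75/25 effect of the current `split_train_val` is to run it on a toy DataFrame. This sketch assumes pandas is installed and that the DataFrame has unique index labels (`df.drop(val_df.index)` drops by label, so duplicate labels would remove extra rows); the `formula`/`target` columns and the 100-row size are made up for illustration.

```python
import pandas as pd

def split_train_val(df):
    # Shuffle all rows with a fixed seed.
    df = df.sample(frac=1.0, random_state=7)
    # Hold out 25% of the shuffled rows as validation.
    val_df = df.sample(frac=0.25, random_state=7)
    # Training keeps only the remaining 75% (drop is label-based).
    train_df = df.drop(val_df.index)
    return train_df, val_df

# Hypothetical toy data: 100 rows with placeholder formulas and targets.
toy = pd.DataFrame({
    "formula": [f"X{i}" for i in range(100)],
    "target": [float(i) for i in range(100)],
})

train_df, val_df = split_train_val(toy)
print(len(train_df), len(val_df))                        # 75 25
print(train_df.index.intersection(val_df.index).empty)  # True: no overlap
```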

to

```python
# split_train_val splits the training data into two sets: training and validation
def split_train_val(df):
    train_df = df.sample(frac=1.0, random_state=7)
    val_df = df.sample(frac=0.25, random_state=7)
    return train_df, val_df
```

This introduces data leakage between `train_df` and `val_df` (every row of `val_df` also appears in `train_df`), but `val_df` ends up being essentially a dummy dataset whose only job is to keep CrabNet from erroring out when a `val.csv` isn't available.
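Running the proposed variant on the same kind of toy DataFrame shows both halves of that trade-off: training now sees 100% of the rows, and the dummy validation set is entirely contained in it. As before, this assumes pandas and uses made-up toy data; the function body matches the proposed change.

```python
import pandas as pd

def split_train_val(df):
    # Proposed variant: training uses every row (shuffled).
    train_df = df.sample(frac=1.0, random_state=7)
    # Dummy validation set: 25% of rows, all of which also appear in train_df.
    val_df = df.sample(frac=0.25, random_state=7)
    return train_df, val_df

# Hypothetical toy data: 100 rows with placeholder formulas and targets.
toy = pd.DataFrame({
    "formula": [f"X{i}" for i in range(100)],
    "target": [float(i) for i in range(100)],
})

train_df, val_df = split_train_val(toy)
print(len(train_df), len(val_df))                  # 100 25
print(val_df.index.isin(train_df.index).all())    # True: val is a subset of train
```

Because `val_df` overlaps `train_df`, any validation metric computed from it will be optimistic and should not be read as a held-out score.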

Sterling


If I remember correctly, for the matbench submission we chose to assign 25% of the training data to validation purely as a sanity check to make sure CrabNet was not overfitting on the training data. I don't think CrabNet does any internal hyperparameter optimization using the validation data. Validation data should not be used for optimization or for any adjustments to the model whatsoever during training.

The results from #15 suggest that adding the 25% validation split back into the training data would not necessarily improve the results, since the validation data is used to update the weights through SWA.
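One way validation data can influence the final weights through SWA, without being trained on directly, is if it gates which weight snapshots enter the average. The sketch below is a hypothetical illustration of that idea, not CrabNet's actual SWA code: the class name `GatedSWA`, the "accept only when validation MAE improves" rule, and representing weights as a flat list of floats are all assumptions.

```python
class GatedSWA:
    """Hypothetical SWA variant: a weight snapshot joins the running average
    only when its validation MAE beats the best value seen so far."""

    def __init__(self):
        self.avg = None                  # running average of accepted snapshots
        self.n = 0                       # number of accepted snapshots
        self.best_val_mae = float("inf")

    def update(self, weights, val_mae):
        if val_mae >= self.best_val_mae:
            return False                 # rejected: validation error did not improve
        self.best_val_mae = val_mae
        if self.avg is None:
            self.avg = list(weights)
        else:
            # Incremental mean: avg += (w - avg) / (n + 1)
            self.avg = [a + (w - a) / (self.n + 1) for a, w in zip(self.avg, weights)]
        self.n += 1
        return True

swa = GatedSWA()
accepted = [
    swa.update([1.0, 2.0], val_mae=0.50),  # accepted (first snapshot)
    swa.update([3.0, 4.0], val_mae=0.60),  # rejected (worse validation MAE)
    swa.update([3.0, 6.0], val_mae=0.40),  # accepted
]
print(accepted)  # [True, False, True]
print(swa.avg)   # [2.0, 4.0]
```

Under a rule like this, the validation set decides which weights survive into the averaged model, so it is not simply discarded even though no gradients are computed on it.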
