🌟 Get 120 Potential Customers in 2.5 Minutes! 🤖

Hola! 🌟

I am Google Maps Scraper, created to help you find new customers and grow your sales. 🚀

Why Scrape Google Maps, you may ask? Here's why it's the perfect ground for hunting B2B customers:

- 📞 Direct Access to Phone Numbers: Connect with potential clients directly, drastically reducing the time it takes to seal a deal.

- 🌟 Target the Cream of the Crop: Target rich business owners based on their reviews, and supercharge your sales.

- 🎯 Tailored Pitching: With access to categories and websites, you can customize your pitch to cater to specific businesses and maximize your sales potential.

Countless entrepreneurs have achieved remarkable success by prospecting leads from Google Maps, and now it's your turn!

Let's delve into some of my remarkable features that entrepreneurs love:

- 💪 Rapid Lead Generation: I can scrape a whopping 1200 Google Maps leads in just 25 minutes, flooding you with potential sales prospects.

- 🚀 Effortless Multi-Query Scraping: Easily scrape multiple queries in one go, saving you valuable time and effort.

- 🌐 Unlimited Query Potential: There's no limit to the number of queries you can scrape, ensuring you never run out of leads.

In the next 5 minutes, you'll witness the magic as I extract 120 Leads from Google Maps for you, opening up a world of opportunities.

Ready to supercharge your business growth? Let's get started! 💼🌍

If you'd like to see my capabilities in action before using me, I encourage you to watch this short video.

Let's get started generating Google Maps Leads by following these simple steps:

1️⃣ Clone the Magic 🧙‍♀️:

    git clone https://github.com/omkarcloud/google-maps-scraper
    cd google-maps-scraper

2️⃣ Install Dependencies 📦:

    python -m pip install -r requirements.txt

3️⃣ Let the Rain of Google Maps Leads Begin 😎:

    python main.py

Once the scraping process is complete, you can find your leads in the output directory.
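To check what a run produced, a small script can list the files in the output directory. This is only a sketch: the helper name `list_output_files` is hypothetical, and it assumes the directory is called `output` and sits in the folder you ran `python main.py` from. It makes no assumption about the file format of the leads.

```python
from pathlib import Path


def list_output_files(output_dir: str = "output") -> list[Path]:
    """Return every file under output_dir, sorted by path.

    Returns an empty list if the directory does not exist yet
    (for example, before the first scraping run).
    """
    root = Path(output_dir)
    if not root.is_dir():
        return []
    return sorted(p for p in root.rglob("*") if p.is_file())


if __name__ == "__main__":
    # Print each file with its size so empty results are easy to spot.
    for path in list_output_files():
        print(f"{path}  ({path.stat().st_size} bytes)")
```

Run it from the repository root after the scraper finishes. If nothing is printed, the output directory is missing or empty.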

A: Open the file src/config.py and update the keyword with your desired search query.

For example, if you want to scrape leads about Digital Marketers in Kathmandu 🇳🇵, modify the code as follows:

    queries = [
        {
            "keyword": "digital marketers in kathmandu",
        },
    ]

Note: You can scrape up to 120 leads per search because of Google's scrolling limit. There is no technical way to bypass this limit.
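Because each search is capped at 120 leads, one way to cover more ground is to list several narrower keywords. The sketch below builds such a list in the same shape as the `queries` example. The helper names `build_queries` and `max_possible_leads` and the place names are hypothetical, chosen only for illustration; they are not part of the scraper.

```python
MAX_LEADS_PER_SEARCH = 120  # per-search cap stated in the note above


def build_queries(business: str, places: list[str]) -> list[dict]:
    """Return one query dict per place, shaped like the config example."""
    return [{"keyword": f"{business} in {place}"} for place in places]


def max_possible_leads(queries: list[dict]) -> int:
    """Upper bound on leads: each search returns at most 120 results."""
    return len(queries) * MAX_LEADS_PER_SEARCH


# Hypothetical example values; replace them with your own search terms.
queries = build_queries("digital marketers", ["kathmandu", "pokhara"])
print(queries)
print(max_possible_leads(queries))
```

The resulting list can be pasted into src/config.py, or the helper can be copied there, assuming the scraper only reads the final `queries` value.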

A: Easy! Open src/config.py and add as many queries as you like.

For example, if you want to scrape restaurants in both Delhi 😎 and Bangalore 👨‍💻, use the following code:

    queries = [
        {
            "keyword": "restaurants in delhi",
        },
        {
            "keyword": "restaurants in bangalore",
        }
    ]

Absolutely! Open src/config.py and modify the max_results parameter.

For example, if you want to scrape the first 5 restaurants in Bangalore, use the following code:

queries = [

{

"keyword": "restaurants in Bangalore",

"max_results": 5,

}

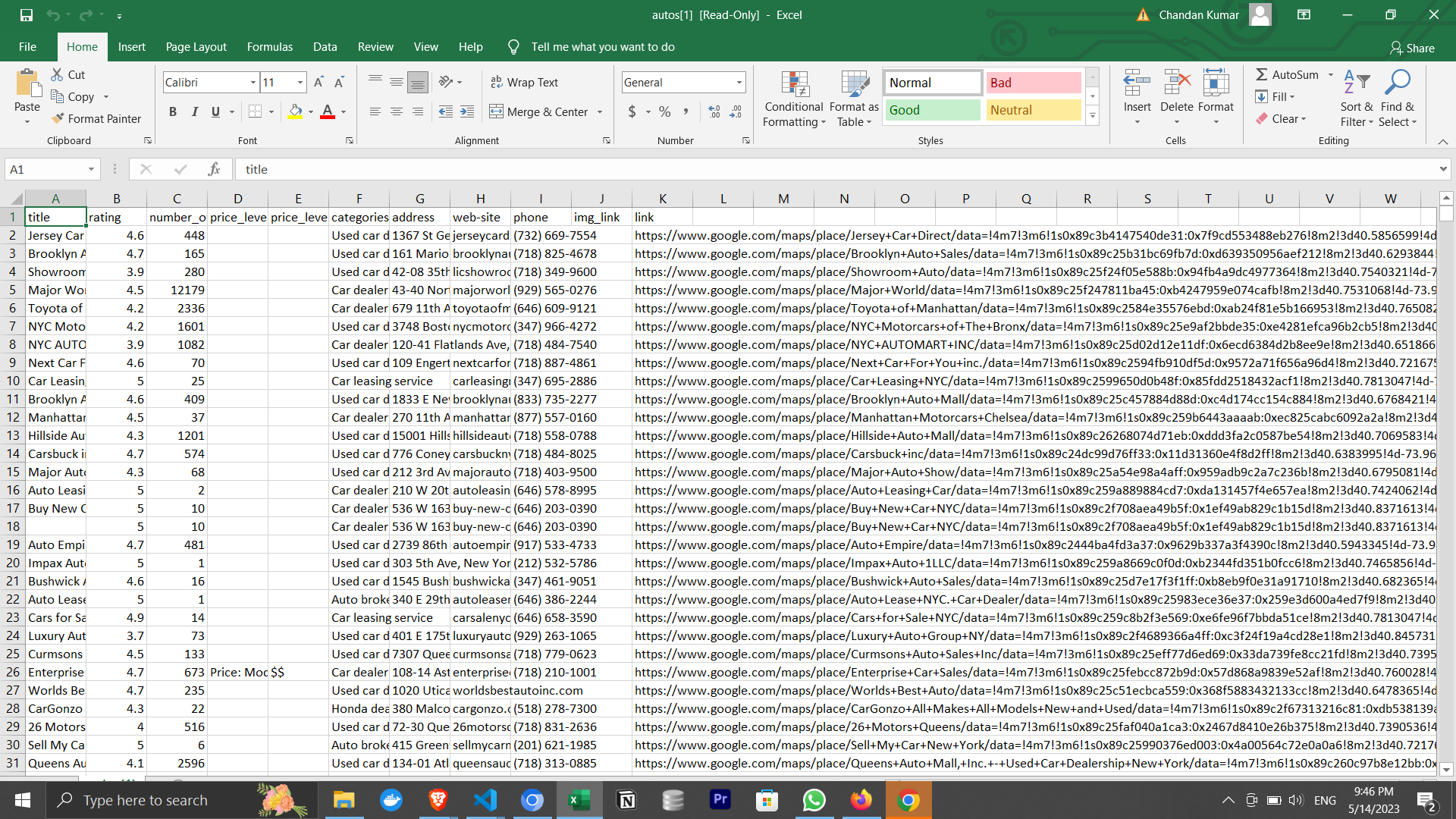

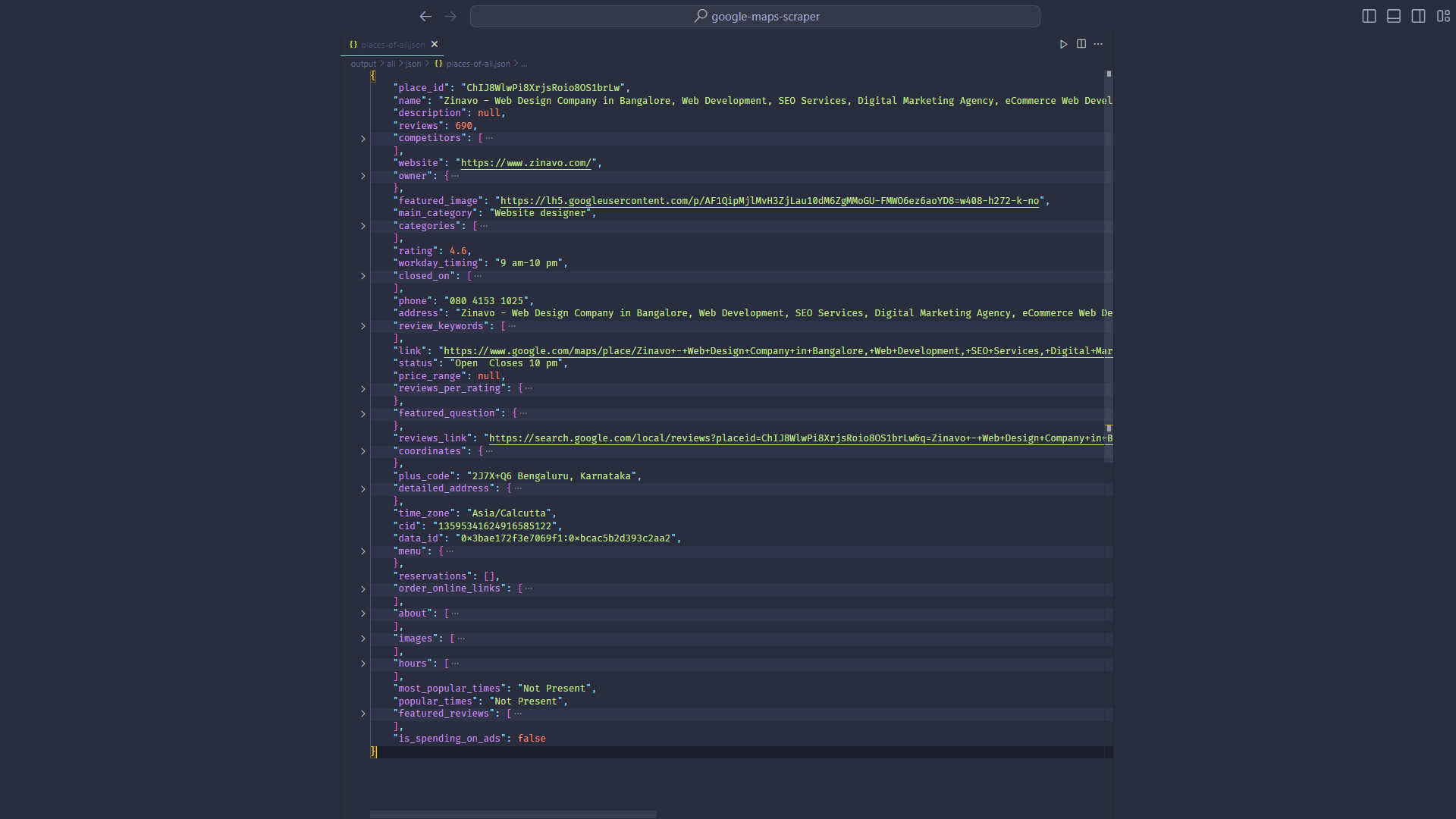

]You may upgrade to the Pro Version of the Google Maps Scraper to scrape additional data points, like:

- 🌐 Website

- 📞 Phone Numbers

- 🌍 Geo Coordinates

- 💰 Price Range

- And 26 more data points like Owner details, Photos, About Section, and many more!

Below is a sample lead scraped by the Pro Version:

View sample leads scraped by the Pro Version here

But that's not all! The Pro Version comes loaded with advanced features, allowing you to:

- 🌟 Sort by Reviews and Ratings: Target top businesses by sorting your leads in descending/ascending order of reviews and ratings.

- 🧐 Filter Your Leads: Use filters to narrow down prospects based on minimum/maximum reviews, ratings, website availability, and more.

- 📋 Select Specific Fields: Customize your data output by selecting only the fields you need, such as title, rating, reviews, and phone numbers.

To learn more about how to use these Pro Version features, read the Pro Version docs here.
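To make the three ideas above concrete (sorting, filtering, and field selection), here is a plain-Python illustration on made-up lead records. It is not the Pro Version's implementation or configuration syntax, which is covered in the Pro Version docs. The function names and the sample data are hypothetical, and the field names `title`, `rating`, and `reviews` are assumed from the feature description.

```python
# Made-up sample records, for illustration only.
leads = [
    {"title": "Cafe A", "rating": 4.6, "reviews": 320},
    {"title": "Cafe B", "rating": 3.9, "reviews": 45},
    {"title": "Cafe C", "rating": 4.8, "reviews": 120},
]


def filter_leads(leads, min_reviews=0, min_rating=0.0):
    """Keep leads that meet both minimum thresholds."""
    return [
        lead for lead in leads
        if lead["reviews"] >= min_reviews and lead["rating"] >= min_rating
    ]


def sort_leads(leads, key="reviews", descending=True):
    """Return a new list ordered by the given field."""
    return sorted(leads, key=lambda lead: lead[key], reverse=descending)


def select_fields(leads, fields):
    """Return copies of the leads containing only the requested fields."""
    return [{field: lead[field] for field in fields} for lead in leads]


top = select_fields(
    sort_leads(filter_leads(leads, min_reviews=100, min_rating=4.5)),
    ["title", "reviews"],
)
print(top)
```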

🔍 Comparison:

See how the Pro Version stacks up against the free version in this comparison image:

And here's the best part: the Pro Version offers great ROI because it helps you land new customers worth hundreds or even thousands of dollars, all with zero risk. That's right: we offer a 30-Day Refund Policy!

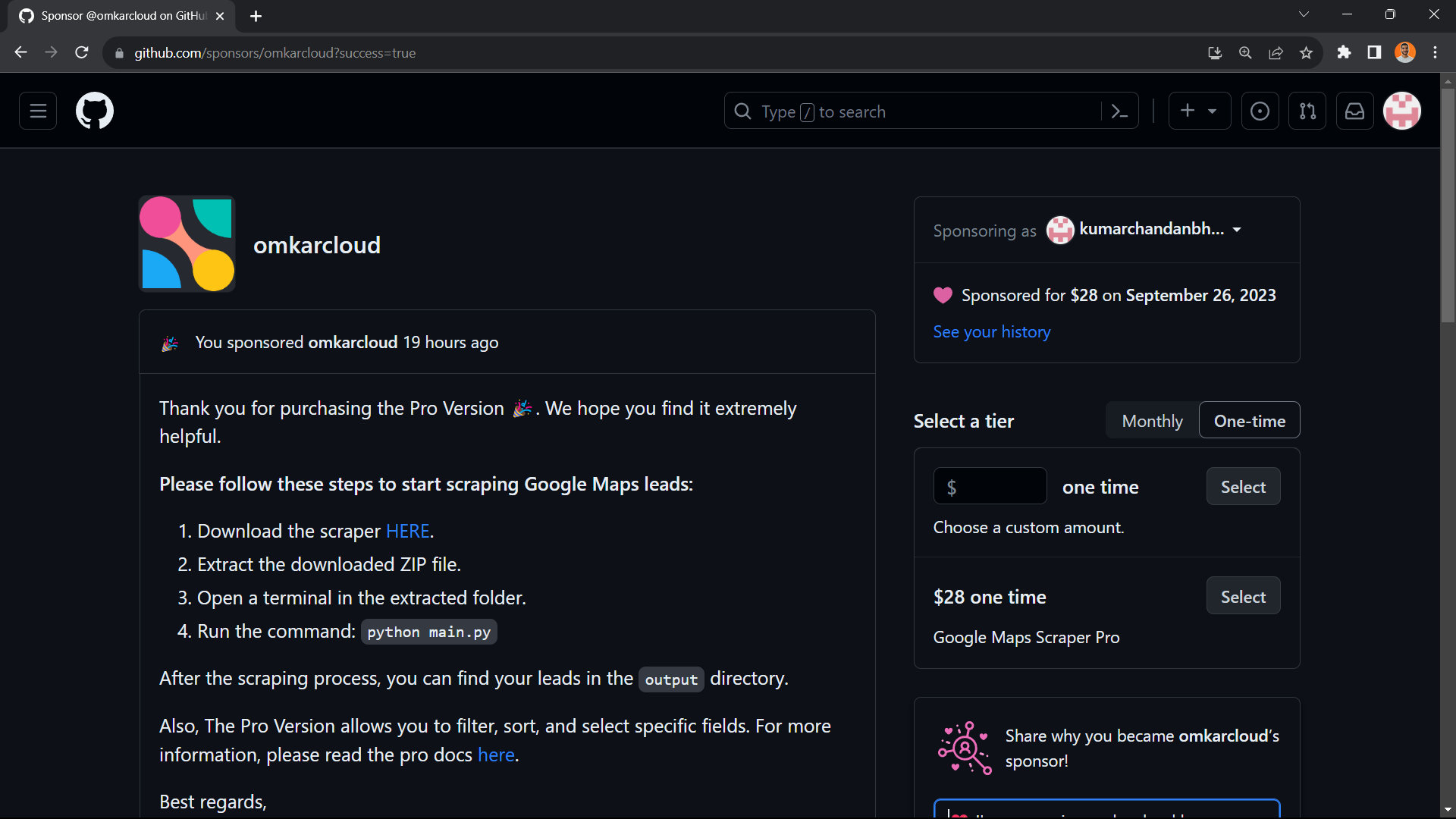

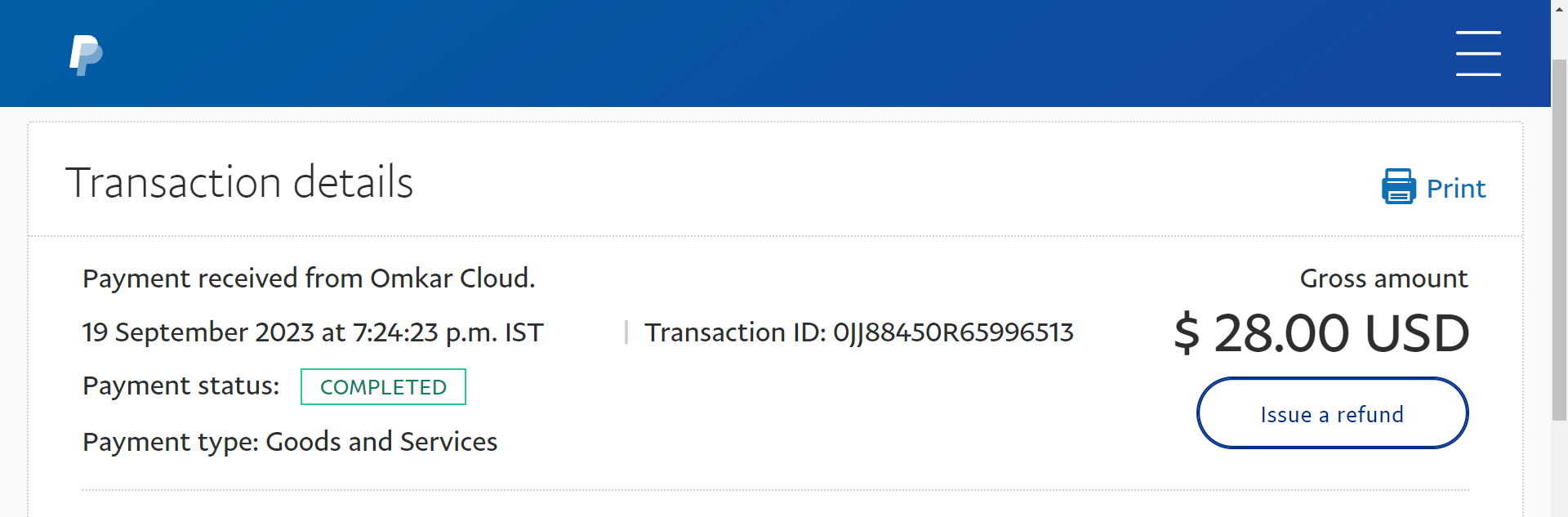

Visit the Sponsorship Page here, select the Google Maps Scraper Pro option, and pay $28.

After payment, you'll see a success screen with instructions on how to use the Pro Version:

We wholeheartedly believe in the value our product brings, especially since it has successfully worked for hundreds of entrepreneurs like you.

But, we also understand the reservations you might have.

That's why we've put the ball in your court: if, within the next 30 days, you feel that our product hasn't met your expectations, don't hesitate. Reach out to us, and we will gladly refund your money, no questions asked and no hassle.

The risk is entirely on us because we're confident in what we've created.

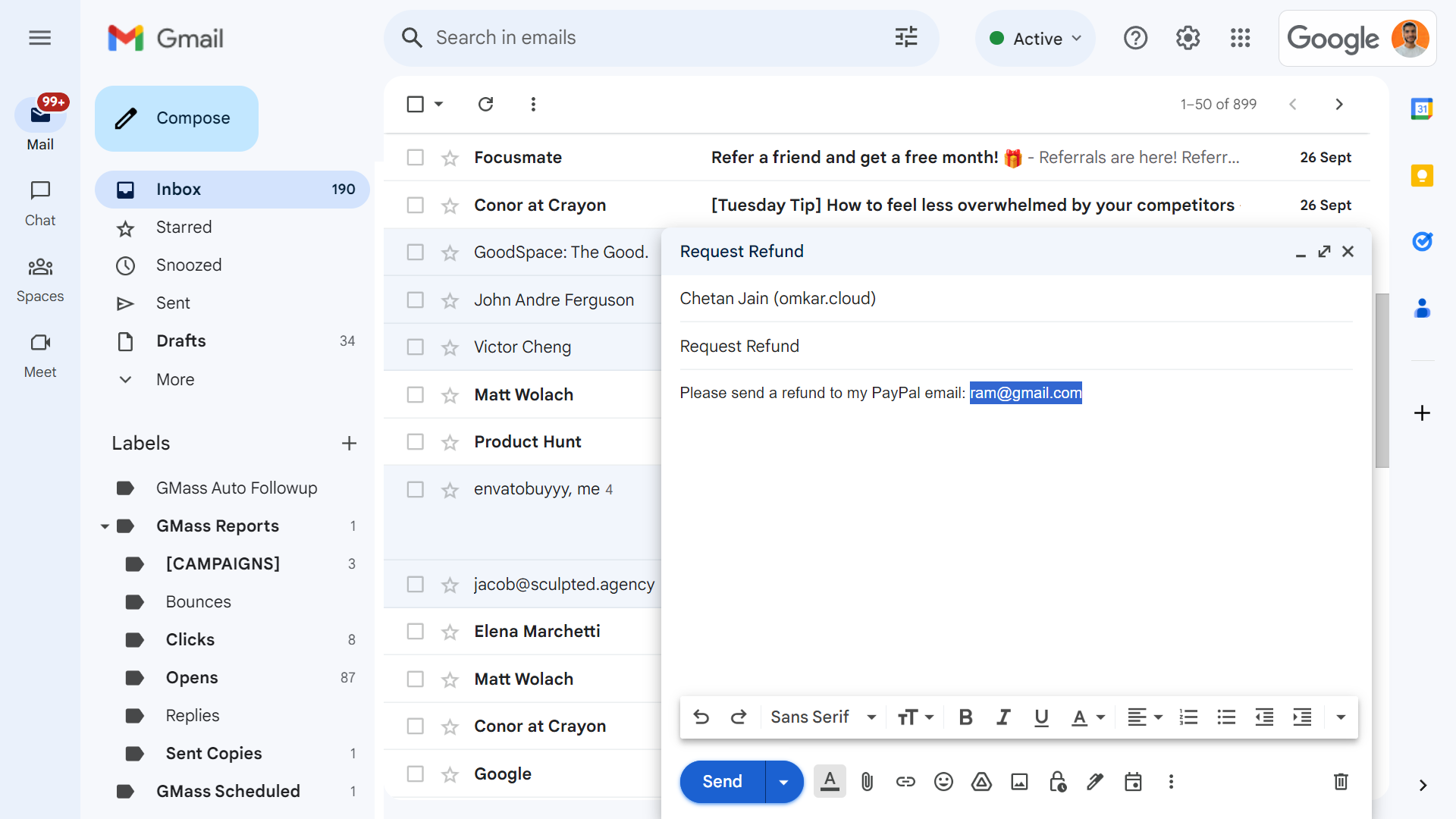

Requesting a refund is a simple process that should only take about 5 minutes. To request a refund, ensure you have one of the following:

- A PayPal Account (e.g., "[email protected]" or "[email protected]")

- or a UPI ID (India only) (e.g., "myname@bankname" or "chetan@okhdfc")

Next, follow these steps to initiate a refund:

-

Send an email to

[email protected]using the following template:-

To request a refund via PayPal:

Subject: Request Refund Content: Please send a refund to my PayPal email: [email protected] -

To request a refund via UPI (India Only):

Subject: Request Refund Content: Please send a refund to my UPI ID: myname@bankname

-

-

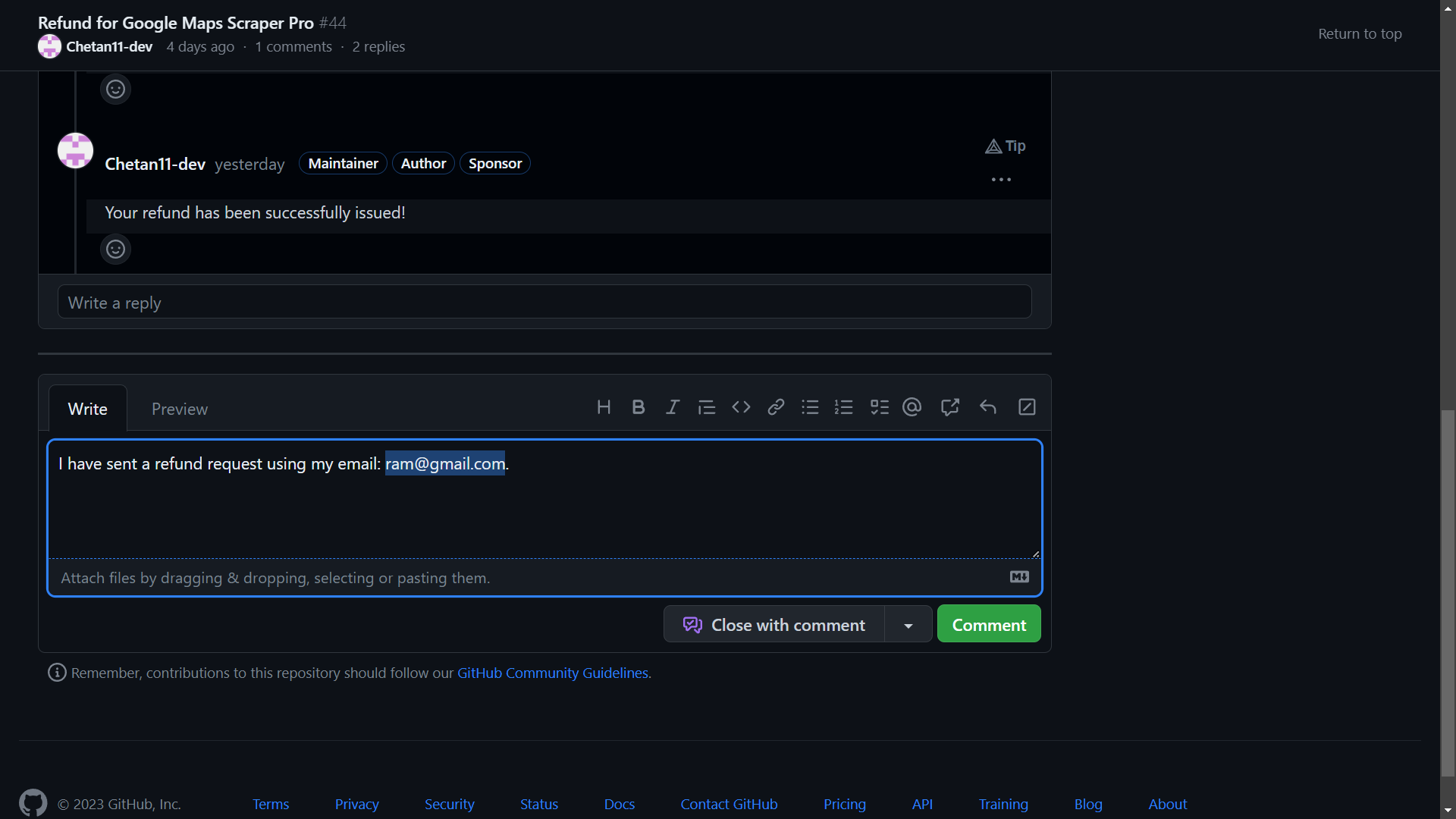

Next, go to the discussion here and comment to request a refund using this template:

I have sent a refund request from my email: [email protected]. -

You can expect to receive your refund within 1 day. We will also update you in the GitHub Discussion here :)

To boost the scraping speed, the scraper launches multiple browsers simultaneously. Here's how you can increase the speed further:

- Adjust the number_of_scrapers variable in the configuration file. Recommended values are:

  - Standard laptop: 4 or 8

  - Powerful laptop: 12 or 16

Note: Avoid setting number_of_scrapers above 16. Because Google Maps typically returns only 120 results per search, more than 16 scrapers can spend longer launching than scraping. It is best to stick to 4, 8, 12, or 16.

In case you encounter any issues, like the scraper crashing due to low-end PC specifications, follow these recommendations:

- Reduce number_of_scrapers by 4.

- Ensure you have sufficient storage (at least 4 GB) available, as running multiple browser instances consumes storage space.

- Close other applications on your computer to free up memory.

Additionally, consider improving your internet speed to further enhance the scraping process.
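The number_of_scrapers guidance above can be summarized in a few lines of Python. This is a sketch under assumptions: the helper names are hypothetical, the floor of 4 when stepping down is an assumption rather than a documented rule, and it assumes number_of_scrapers is a plain integer variable in the configuration file.

```python
RECOMMENDED = (4, 8, 12, 16)  # values recommended in this README


def pick_number_of_scrapers(requested: int) -> int:
    """Return the largest recommended value that does not exceed requested.

    Falls back to 4 when the request is below every recommended value.
    """
    eligible = [value for value in RECOMMENDED if value <= requested]
    return eligible[-1] if eligible else RECOMMENDED[0]


def step_down(current: int) -> int:
    """Reduce by 4 after a crash, never going below 4 (assumed floor)."""
    return max(RECOMMENDED[0], current - 4)


# Example: a request for 10 browsers is rounded down to 8.
number_of_scrapers = pick_number_of_scrapers(10)
print(number_of_scrapers)
```

If the scraper still crashes at a given value, apply `step_down` once and retry before changing anything else.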

You can easily run the scraper in Gitpod, a browser-based environment. Set it up in just 5 minutes by following these steps:

- Visit this link and sign up using your GitHub account.

- In the terminal, run the following command:

      docker-compose build && docker-compose up

- Once the scraper has finished running, download the leads from the output folder.

Reach out to us on WhatsApp! We'll solve your problem within 24 hours.