IRS: A Large Naturalistic Indoor Robotics Stereo Dataset to Train Deep Models for Disparity and Surface Normal Estimation

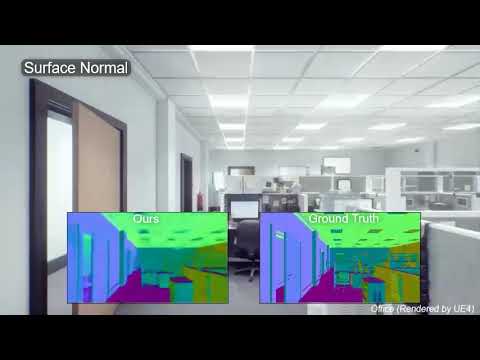

IRS is an open dataset for indoor robotics vision tasks, especially disparity and surface normal estimation. It contains 103,316 samples in total, covering a wide range of indoor scenes such as homes, offices, stores, and restaurants.

[Figure: sample left image, right image, disparity map, and surface normal map]

| Rendering Characteristic | Options |
|---|---|
| indoor scene class | home(31145), office(43417), restaurant(22058), store(6696) |
| object class | desk, chair, sofa, glass, mirror, bed, bedside table, lamp, wardrobe, etc. |
| brightness | over-exposure(>1300), darkness(>1700) |
| light behavior | bloom(>1700), lens flare(>1700), glass transmission(>3600), mirror reflection(>3600) |

We give some samples of different indoor scene characteristics below. The parameters of the virtual stereo camera in UE4 are:

- Baseline: 0.1 meter

- Focal Length: 480 for both the x-axis and y-axis.
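With these two parameters, a disparity value can be converted to depth using the standard pinhole stereo relation depth = focal length × baseline / disparity. The sketch below assumes the focal length of 480 is expressed in pixels and that disparity is also in pixels. The helper name `disparity_to_depth` is illustrative and not part of the IRS code.

```python
BASELINE_M = 0.1   # stereo baseline from the list above, in meters
FOCAL_PX = 480.0   # focal length from the list above (assumed to be in pixels)


def disparity_to_depth(disparity_px, baseline_m=BASELINE_M, focal_px=FOCAL_PX):
    """Convert one disparity value (pixels) to depth (meters). Hypothetical helper."""
    if disparity_px <= 0:
        # Zero or negative disparity has no finite depth.
        return float("inf")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    for d in (4.8, 48.0, 96.0):
        print(f"disparity {d:5.1f} px -> depth {disparity_to_depth(d):.2f} m")
```

Under these assumptions, larger disparities correspond to closer surfaces, and a disparity of 48 pixels corresponds to a depth of about one meter.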

[Figure: sample images for Home, Office, and Restaurant scenes; Normal light, Over exposure, and Darkness; Glass, Mirror, and Metal]

We designed a novel deep model, DTN-Net (Disparity To Normal Network), which predicts the surface normal map by refining an initial normal map derived from the predicted disparity. DTN-Net comprises two modules, RD-Net and NormNetS. First, RD-Net predicts the disparity map for the input stereo images. Then we apply the disparity-to-normal transformation from GeoNet, denoted the D2N Transform, to produce an initial coarse normal map. Finally, NormNetS takes the stereo images, the disparity map predicted by RD-Net, and the initial normal map as input and predicts the final normal map. The structure of NormNetS is similar to DispNetS, except that the final convolution layer outputs three channels instead of one, since each pixel's normal has three components (x, y, z).
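To illustrate the idea of deriving a coarse normal map from disparity, here is a minimal pure-Python sketch. It is not the GeoNet D2N Transform and not the DTN-Net code: it is a simplified finite-difference stand-in that back-projects each pixel to 3D and takes the cross product of neighboring point differences. The principal point (`cx`, `cy`), the pixel unit of the focal length, and all function names are assumptions made for illustration.

```python
import math

BASELINE_M = 0.1   # from the camera parameters listed earlier
FOCAL_PX = 480.0   # assumed to be in pixels


def backproject(disp, cx, cy):
    """Turn a disparity map (list of rows, in pixels) into per-pixel 3D points."""
    points = []
    for v, row in enumerate(disp):
        out_row = []
        for u, d in enumerate(row):
            if d <= 0:
                raise ValueError("this sketch assumes strictly positive disparity")
            z = FOCAL_PX * BASELINE_M / d  # depth in meters
            out_row.append(((u - cx) * z / FOCAL_PX, (v - cy) * z / FOCAL_PX, z))
        points.append(out_row)
    return points


def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def coarse_normals(disp, cx, cy):
    """Unit normals from forward differences; the last row and column are dropped."""
    pts = backproject(disp, cx, cy)
    normals = []
    for v in range(len(pts) - 1):
        row = []
        for u in range(len(pts[0]) - 1):
            p = pts[v][u]
            du = tuple(pts[v][u + 1][i] - p[i] for i in range(3))  # step along image x
            dv = tuple(pts[v + 1][u][i] - p[i] for i in range(3))  # step along image y
            n = cross(dv, du)  # this order points the normal toward the camera (-z)
            length = math.sqrt(sum(c * c for c in n))
            row.append(tuple(c / length for c in n) if length > 0 else (0.0, 0.0, 0.0))
        normals.append(row)
    return normals


if __name__ == "__main__":
    flat_wall = [[48.0] * 4 for _ in range(3)]  # constant disparity: a fronto-parallel plane
    print(coarse_normals(flat_wall, cx=1.5, cy=1.0)[0][0])
```

A constant disparity map describes a plane facing the camera, so every normal in that case points straight back along the optical axis. Each normal has three components, which matches the three output channels of NormNetS described above.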

Q. Wang*,1, S. Zheng*,1, Q. Yan*,2, F. Deng2, K. Zhao†,1, X. Chu†,1.

IRS: A Large Naturalistic Indoor Robotics Stereo Dataset to Train Deep Models for Disparity and Surface Normal Estimation. [preprint]

* indicates equal contribution. † indicates corresponding authors. 1 Department of Computer Science, Hong Kong Baptist University. 2 School of Geodesy and Geomatics, Wuhan University.

You can use the following OneDrive link to download our dataset.

OneDrive: https://1drv.ms/f/s!AmN7U9URpGVGem0coY8PJMHYg0g?e=nvH5oB

- Python 3.7

- PyTorch 1.6.0+

- torchvision 0.5.0+

- CUDA 10.1 (https://developer.nvidia.com/cuda-downloads)

We recommend using conda for installation:

    conda env create -f environment.yml

Install dependencies:

    cd layers_package
    ./install.sh

    # install OpenEXR (https://www.openexr.com/)
    sudo apt-get update
    sudo apt-get install openexr

Download the IRS dataset from https://1drv.ms/f/s!AmN7U9URpGVGem0coY8PJMHYg0g?e=nvH5oB (OneDrive).

Extract the zip files and place them in the following folder structure:

    data
    └── IRSDataset
        ├── Home
        ├── Office
        ├── Restaurant
        └── Store
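Before training, it can help to confirm that the extracted folders match the layout above. The following is a small sketch using only the standard library. The function name `missing_scene_folders` is hypothetical, and it checks only that the four scene folders exist, not their contents.

```python
from pathlib import Path

# Scene folders expected under data/IRSDataset, as listed above.
EXPECTED_SCENES = ("Home", "Office", "Restaurant", "Store")


def missing_scene_folders(data_root="data"):
    """Return the scene folders that are absent under <data_root>/IRSDataset."""
    dataset_dir = Path(data_root) / "IRSDataset"
    return [name for name in EXPECTED_SCENES if not (dataset_dir / name).is_dir()]


if __name__ == "__main__":
    missing = missing_scene_folders()
    if missing:
        print("Missing folders:", ", ".join(missing))
    else:
        print("IRSDataset layout looks complete.")
```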

"FT3D" denotes FlyingThings3D.

| Model | FT3D | IRS | IRS+FT3D |
|---|---|---|---|
| FADNet | fadnet-ft3d.pth | fadnet-irs.pth | fadnet-ft3d-irs.pth |
| GwcNet | gwcnet-ft3d.pth | gwcnet-irs.pth | gwcnet-ft3d-irs.pth |

| Training data | DTN-Net | DNF-Net | NormNetS |
|---|---|---|---|
| IRS | dtonnet-irs.pth | dnfusionnet-irs.pth | normnets-irs.pth |

Training configurations are in the "exp_configs" folder. You can create your own configuration file based on these samples.

For example, the following configuration can be used to train DispNormNet on the IRS dataset:

/exp_configs/dtonnet.conf

    net=dispnormnet
    loss=loss_configs/dispnetcres_irs.json
    outf_model=models/${net}-irs
    logf=logs/${net}-irs.log
    lr=1e-4
    devices=0,1,2,3
    dataset=irs #sceneflow, irs, sintel
    trainlist=lists/IRSDataset_TRAIN.list
    vallist=lists/IRSDataset_TEST.list
    startR=0
    startE=0
    endE=10
    batchSize=16
    maxdisp=-1
    model=none
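The configuration is a list of shell variable assignments, so values such as `${net}` are expanded from keys defined earlier in the file. To preview what a configuration resolves to without running a shell, a rough sketch like the one below can help. It is not part of the repository: `parse_conf` is a hypothetical helper, it handles only plain KEY=VALUE lines, and it treats any `#` as the start of a comment.

```python
from string import Template


def parse_conf(text):
    """Parse simple KEY=VALUE lines, expanding ${name} from earlier keys."""
    values = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop shell-style comments
        if not line or "=" not in line:
            continue
        key, value = line.split("=", 1)
        # safe_substitute leaves unknown ${names} untouched instead of raising.
        values[key.strip()] = Template(value.strip()).safe_substitute(values)
    return values


# A few lines copied from the sample configuration above.
SAMPLE = """\
net=dispnormnet
outf_model=models/${net}-irs
logf=logs/${net}-irs.log
dataset=irs #sceneflow, irs, sintel
"""

if __name__ == "__main__":
    for key, value in parse_conf(SAMPLE).items():
        print(f"{key} = {value}")
```

With the sample values, `outf_model` resolves to `models/dispnormnet-irs`, which is the folder that the evaluation script later reads the model from.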

Then specify the configuration in "train.sh":

/train.sh

    # Use the configuration named by $dnn (defaults to dispnormnet).
    dnn="${dnn:-dispnormnet}"
    source exp_configs/$dnn.conf

    python main.py --cuda --net $net --loss $loss --lr $lr \
        --outf $outf_model --logFile $logf \
        --devices $devices --batch_size $batchSize \
        --dataset $dataset --trainlist $trainlist --vallist $vallist \
        --startRound $startR --startEpoch $startE --endEpoch $endE \
        --model $model \
        --maxdisp $maxdisp \
        --manualSeed 1024

Finally, run the following command to start training:

./train.sh

The following script evaluates a trained model:

/detech.sh

    dataset=irs
    net=dispnormnet
    model=models/dispnormnet-irs/model_best.pth
    outf=detect_results/${net}-${dataset}/
    filelist=lists/IRSDataset_TEST.list
    filepath=data

    CUDA_VISIBLE_DEVICES=0 python detecter.py --model $model --rp $outf --filelist $filelist --filepath $filepath --devices 0 --net ${net} --disp-on --norm-on

Run the script with your configuration; the results are written to the "detect_results" folder.

Disparity results are saved in png format by default.

Normal results are saved in exr format by default.

To change the output format, modify "detecter.py" and use the corresponding save function:

    # png
    skimage.io.imsave(filepath, image)

    # pfm
    save_pfm(filepath, data)

    # exr
    save_exr(data, filepath)
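For readers unfamiliar with the pfm format, the sketch below shows what a writer for a single-channel float map might look like. It is an independent illustration and not the repository's `save_pfm`: the name `write_pfm_sketch` and its input layout (a list of rows, top row first) are assumptions. PFM stores a short text header followed by raw 32-bit floats, with rows ordered bottom to top.

```python
import os
import struct
import tempfile


def write_pfm_sketch(filepath, rows):
    """Write a single-channel float image (list of rows, top row first) as PFM."""
    height = len(rows)
    width = len(rows[0])
    with open(filepath, "wb") as f:
        f.write(b"Pf\n")                                # "Pf" marks one channel
        f.write(f"{width} {height}\n".encode("ascii"))  # image size
        f.write(b"-1.0\n")                              # negative scale means little-endian
        for row in reversed(rows):                      # PFM stores rows bottom to top
            f.write(struct.pack(f"<{width}f", *row))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "disparity.pfm")
        write_pfm_sketch(path, [[1.0, 2.0], [3.0, 4.0]])
        print(os.path.getsize(path), "bytes written")
```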

For viewing files in exr format, we recommend a free software

Please contact us at [email protected] if you have any questions.