
diff --git a/code/.gitignore b/code/.gitignore

new file mode 100644

index 00000000..d742ec84

--- /dev/null

+++ b/code/.gitignore

@@ -0,0 +1,2 @@

+/apikey_usage.json

+*.pem

diff --git a/code/calc/calc.go b/code/calc/calc.go

deleted file mode 100644

index c6139ba5..00000000

--- a/code/calc/calc.go

+++ /dev/null

@@ -1,24 +0,0 @@

-package calc

-

-import (

- "fmt"

-

- "gopkg.in/Knetic/govaluate.v2"

-)

-

-func CalcStr(str string) (float64, error) {

- fmt.Println(str)

-

- expression, _ := govaluate.NewEvaluableExpression(str)

- out, _ := expression.Evaluate(nil)

- fmt.Println(out)

- return out.(float64), nil

-}

-

-func FormatMathOut(out float64) string {

- //if is int

- if out == float64(int(out)) {

- return fmt.Sprintf("%d", int(out))

- }

- return fmt.Sprintf("%f", out)

-}

diff --git a/code/calc/calc_test.go b/code/calc/calc_test.go

deleted file mode 100644

index 77cb88e3..00000000

--- a/code/calc/calc_test.go

+++ /dev/null

@@ -1,41 +0,0 @@

-package calc

-

-import (

- "testing"

-)

-

-func TestCalc(t *testing.T) {

-

- out, err := CalcStr("1+1")

- if err != nil {

- t.Error(err)

- }

-

- if out != 2 {

- t.Error("1+1 should be 2")

- }

-}

-

-func TestCalc2(t *testing.T) {

-

- out, err := CalcStr("1+2")

- if err != nil {

- t.Error(err)

- }

-

- if out != 3 {

- t.Error("1+2 should be 3")

- }

-}

-

-func TestCalc3(t *testing.T) {

- //22*32

- out, err := CalcStr("22*32")

- if err != nil {

- t.Error(err)

- }

-

- if out != 704 {

- t.Error("22*32 should be 704")

- }

-}

diff --git a/code/config.example.yaml b/code/config.example.yaml

index 46c6b20a..d93a1abe 100644

--- a/code/config.example.yaml

+++ b/code/config.example.yaml

@@ -1,5 +1,37 @@

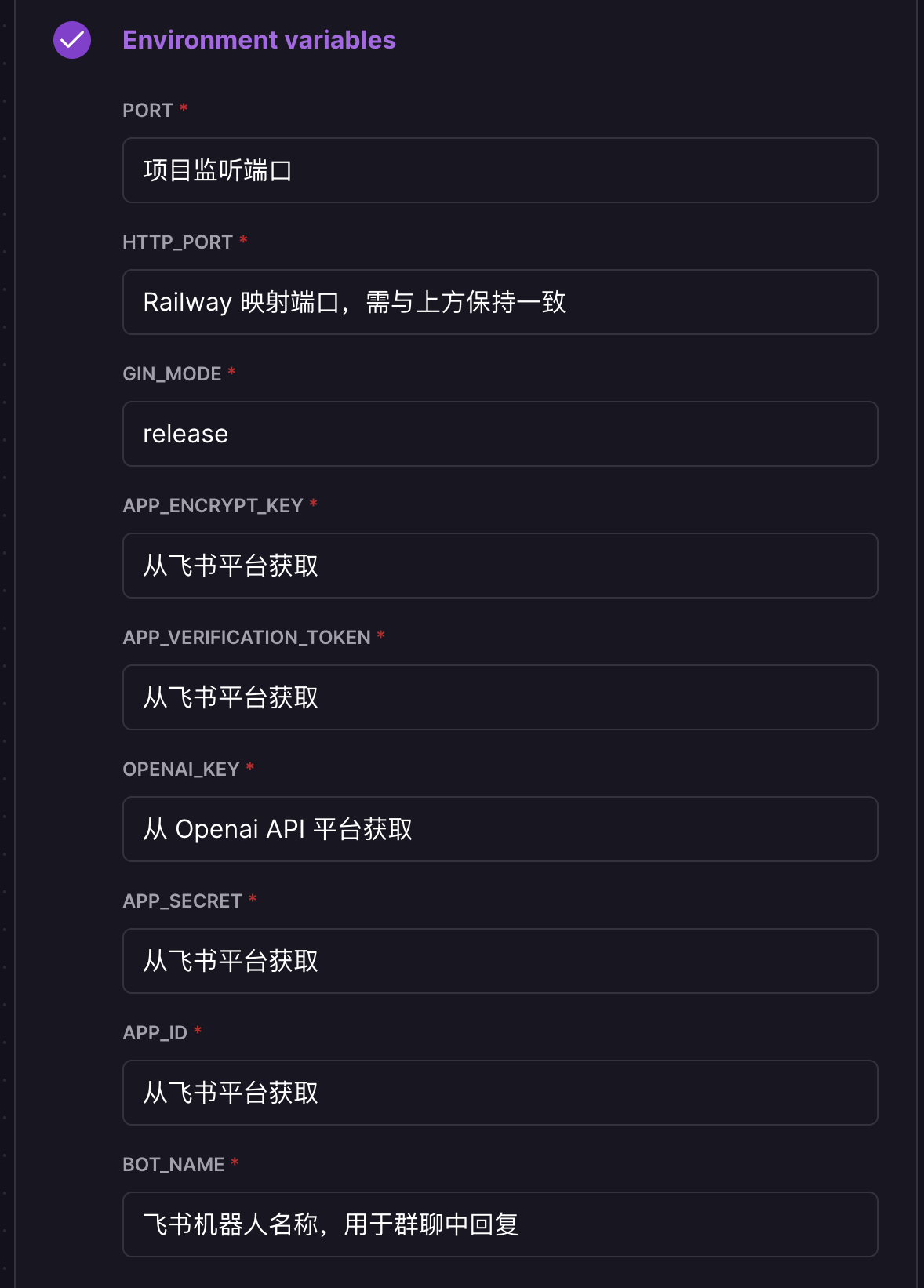

+# Feishu

+BASE_URL: https://open.feishu.cn

APP_ID: cli_axxx

APP_SECRET: xxx

-APP_ENCRYPT_KEY: xxxx

+APP_ENCRYPT_KEY: xxx

APP_VERIFICATION_TOKEN: xxx

-OPENAI_KEY: XXX

+# Must match the bot name configured in the Feishu app management console

+BOT_NAME: chatGpt

+# OpenAI keys support load balancing: list several keys separated by commas

+OPENAI_KEY: sk-xxx,sk-xxx,sk-xxx

+# OpenAI model to use; defaults to gpt-3.5-turbo

+# Options include "gpt-4-1106-preview", "gpt-4-32k", "gpt-4", "gpt-3.5-turbo-16k", "gpt-3.5-turbo", "gpt-3.5-turbo-1106", etc.

+# If you use gpt-4, make sure your account is whitelisted for API access to it

+OPENAI_MODEL: gpt-3.5-turbo

+# Maximum number of OpenAI tokens; defaults to 2000

+OPENAI_MAX_TOKENS: 2000

+# Response timeout in milliseconds; defaults to 550 ms

+OPENAI_HTTP_CLIENT_TIMEOUT: 550

+# Server configuration

+HTTP_PORT: 9000

+HTTPS_PORT: 9001

+USE_HTTPS: false

+CERT_FILE: cert.pem

+KEY_FILE: key.pem

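+# OpenAI API endpoint; usually no need to change it unless you run your own reverse proxy

+API_URL: https://api.openai.com

+# Proxy setting, e.g. "http://127.0.0.1:7890"; "" means no proxy

+HTTP_PROXY: ""

+# Whether to enable streaming responses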

+STREAM_MODE: false # set true to use stream mode

+# AZURE OPENAI

+AZURE_ON: false # set true to use Azure rather than OpenAI

+AZURE_API_VERSION: 2023-03-15-preview # 2023-03-15-preview or 2022-12-01; see https://learn.microsoft.com/en-us/azure/cognitive-services/openai/reference#completions

+AZURE_RESOURCE_NAME: xxxx # found in the endpoint URL, which usually looks like https://{RESOURCE_NAME}.openai.azure.com

+AZURE_DEPLOYMENT_NAME: xxxx # found in the endpoint path, which usually looks like ...openai.azure.com/openai/deployments/{DEPLOYMENT_NAME}/chat/completions

+AZURE_OPENAI_TOKEN: xxxx # Authentication key. Azure Active Directory authentication is a possible future option (TBD).

+

diff --git a/code/go.mod b/code/go.mod

index fa7420bb..59b92c77 100644

--- a/code/go.mod

+++ b/code/go.mod

@@ -5,20 +5,32 @@ go 1.18

require github.com/larksuite/oapi-sdk-go/v3 v3.0.14

require (

+ github.com/duke-git/lancet/v2 v2.1.17

github.com/gin-gonic/gin v1.8.2

+ github.com/google/uuid v1.3.0

github.com/larksuite/oapi-sdk-gin v1.0.0

+ github.com/pandodao/tokenizer-go v0.2.0

github.com/patrickmn/go-cache v2.1.0+incompatible

+ github.com/pion/opus v0.0.0-20230123082803-1052c3e89e58

+ github.com/sashabaranov/go-openai v1.13.0

+ github.com/sirupsen/logrus v1.9.0

+ github.com/spf13/pflag v1.0.5

github.com/spf13/viper v1.14.0

- gopkg.in/Knetic/govaluate.v2 v2.3.0

+ gopkg.in/yaml.v2 v2.4.0

)

require (

+ github.com/dlclark/regexp2 v1.8.1 // indirect

+ github.com/dop251/goja v0.0.0-20230304130813-e2f543bf4b4c // indirect

+ github.com/dop251/goja_nodejs v0.0.0-20230226152057-060fa99b809f // indirect

github.com/fsnotify/fsnotify v1.6.0 // indirect

github.com/gin-contrib/sse v0.1.0 // indirect

github.com/go-playground/locales v0.14.1 // indirect

github.com/go-playground/universal-translator v0.18.0 // indirect

github.com/go-playground/validator/v10 v10.11.1 // indirect

+ github.com/go-sourcemap/sourcemap v2.1.3+incompatible // indirect

github.com/goccy/go-json v0.10.0 // indirect

+ github.com/google/pprof v0.0.0-20230309165930-d61513b1440d // indirect

github.com/hashicorp/hcl v1.0.0 // indirect

github.com/json-iterator/go v1.1.12 // indirect

github.com/leodido/go-urn v1.2.1 // indirect

@@ -32,15 +44,16 @@ require (

github.com/spf13/afero v1.9.3 // indirect

github.com/spf13/cast v1.5.0 // indirect

github.com/spf13/jwalterweatherman v1.1.0 // indirect

- github.com/spf13/pflag v1.0.5 // indirect

github.com/subosito/gotenv v1.4.1 // indirect

github.com/ugorji/go/codec v1.2.8 // indirect

golang.org/x/crypto v0.5.0 // indirect

+ golang.org/x/exp v0.0.0-20221208152030-732eee02a75a // indirect

golang.org/x/net v0.5.0 // indirect

- golang.org/x/sys v0.4.0 // indirect

- golang.org/x/text v0.6.0 // indirect

+ golang.org/x/sys v0.5.0 // indirect

+ golang.org/x/text v0.8.0 // indirect

google.golang.org/protobuf v1.28.1 // indirect

gopkg.in/ini.v1 v1.67.0 // indirect

- gopkg.in/yaml.v2 v2.4.0 // indirect

gopkg.in/yaml.v3 v3.0.1 // indirect

)

+

+//replace github.com/sashabaranov/go-openai v1.13.0 => github.com/Leizhenpeng/go-openai v0.0.3

diff --git a/code/go.sum b/code/go.sum

index 677e3062..accd0903 100644

--- a/code/go.sum

+++ b/code/go.sum

@@ -40,8 +40,11 @@ github.com/BurntSushi/toml v0.3.1/go.mod h1:xHWCNGjB5oqiDr8zfno3MHue2Ht5sIBksp03

github.com/BurntSushi/xgb v0.0.0-20160522181843-27f122750802/go.mod h1:IVnqGOEym/WlBOVXweHU+Q+/VP0lqqI8lqeDx9IjBqo=

github.com/census-instrumentation/opencensus-proto v0.2.1/go.mod h1:f6KPmirojxKA12rnyqOA5BBL4O983OfeGPqjHWSTneU=

github.com/chzyer/logex v1.1.10/go.mod h1:+Ywpsq7O8HXn0nuIou7OrIPyXbp3wmkHB+jjWRnGsAI=

+github.com/chzyer/logex v1.2.0/go.mod h1:9+9sk7u7pGNWYMkh0hdiL++6OeibzJccyQU4p4MedaY=

github.com/chzyer/readline v0.0.0-20180603132655-2972be24d48e/go.mod h1:nSuG5e5PlCu98SY8svDHJxuZscDgtXS6KTTbou5AhLI=

+github.com/chzyer/readline v1.5.0/go.mod h1:x22KAscuvRqlLoK9CsoYsmxoXZMMFVyOl86cAH8qUic=

github.com/chzyer/test v0.0.0-20180213035817-a1ea475d72b1/go.mod h1:Q3SI9o4m/ZMnBNeIyt5eFwwo7qiLfzFZmjNmxjkiQlU=

+github.com/chzyer/test v0.0.0-20210722231415-061457976a23/go.mod h1:Q3SI9o4m/ZMnBNeIyt5eFwwo7qiLfzFZmjNmxjkiQlU=

github.com/client9/misspell v0.3.4/go.mod h1:qj6jICC3Q7zFZvVWo7KLAzC3yx5G7kyvSDkc90ppPyw=

github.com/cncf/udpa/go v0.0.0-20191209042840-269d4d468f6f/go.mod h1:M8M6+tZqaGXZJjfX53e64911xZQV5JYwmTeXPW+k8Sc=

github.com/cncf/udpa/go v0.0.0-20200629203442-efcf912fb354/go.mod h1:WmhPx2Nbnhtbo57+VJT5O0JRkEi1Wbu0z5j0R8u5Hbk=

@@ -50,6 +53,20 @@ github.com/creack/pty v1.1.9/go.mod h1:oKZEueFk5CKHvIhNR5MUki03XCEU+Q6VDXinZuGJ3

github.com/davecgh/go-spew v1.1.0/go.mod h1:J7Y8YcW2NihsgmVo/mv3lAwl/skON4iLHjSsI+c5H38=

github.com/davecgh/go-spew v1.1.1 h1:vj9j/u1bqnvCEfJOwUhtlOARqs3+rkHYY13jYWTU97c=

github.com/davecgh/go-spew v1.1.1/go.mod h1:J7Y8YcW2NihsgmVo/mv3lAwl/skON4iLHjSsI+c5H38=

+github.com/dlclark/regexp2 v1.4.1-0.20201116162257-a2a8dda75c91/go.mod h1:2pZnwuY/m+8K6iRw6wQdMtk+rH5tNGR1i55kozfMjCc=

+github.com/dlclark/regexp2 v1.7.0/go.mod h1:DHkYz0B9wPfa6wondMfaivmHpzrQ3v9q8cnmRbL6yW8=

+github.com/dlclark/regexp2 v1.8.1 h1:6Lcdwya6GjPUNsBct8Lg/yRPwMhABj269AAzdGSiR+0=

+github.com/dlclark/regexp2 v1.8.1/go.mod h1:DHkYz0B9wPfa6wondMfaivmHpzrQ3v9q8cnmRbL6yW8=

+github.com/dop251/goja v0.0.0-20211022113120-dc8c55024d06/go.mod h1:R9ET47fwRVRPZnOGvHxxhuZcbrMCuiqOz3Rlrh4KSnk=

+github.com/dop251/goja v0.0.0-20221118162653-d4bf6fde1b86/go.mod h1:yRkwfj0CBpOGre+TwBsqPV0IH0Pk73e4PXJOeNDboGs=

+github.com/dop251/goja v0.0.0-20230304130813-e2f543bf4b4c h1:/utv6nmTctV6OVgfk5+O6lEMEWL+6KJy4h9NZ5fnkQQ=

+github.com/dop251/goja v0.0.0-20230304130813-e2f543bf4b4c/go.mod h1:QMWlm50DNe14hD7t24KEqZuUdC9sOTy8W6XbCU1mlw4=

+github.com/dop251/goja_nodejs v0.0.0-20210225215109-d91c329300e7/go.mod h1:hn7BA7c8pLvoGndExHudxTDKZ84Pyvv+90pbBjbTz0Y=

+github.com/dop251/goja_nodejs v0.0.0-20211022123610-8dd9abb0616d/go.mod h1:DngW8aVqWbuLRMHItjPUyqdj+HWPvnQe8V8y1nDpIbM=

+github.com/dop251/goja_nodejs v0.0.0-20230226152057-060fa99b809f h1:mmnNidRg3cMfcgyeNtIBSDZgjf/85lA/2pplccwSxYg=

+github.com/dop251/goja_nodejs v0.0.0-20230226152057-060fa99b809f/go.mod h1:0tlktQL7yHfYEtjcRGi/eiOkbDR5XF7gyFFvbC5//E0=

+github.com/duke-git/lancet/v2 v2.1.17 h1:4u9oAGgmTPTt2D7AcjjLp0ubbcaQlova8xeTIuyupDw=

+github.com/duke-git/lancet/v2 v2.1.17/go.mod h1:hNcc06mV7qr+crH/0nP+rlC3TB0Q9g5OrVnO8/TGD4c=

github.com/envoyproxy/go-control-plane v0.9.0/go.mod h1:YTl/9mNaCwkRvm6d1a2C3ymFceY/DCBVvsKhRF0iEA4=

github.com/envoyproxy/go-control-plane v0.9.1-0.20191026205805-5f8ba28d4473/go.mod h1:YTl/9mNaCwkRvm6d1a2C3ymFceY/DCBVvsKhRF0iEA4=

github.com/envoyproxy/go-control-plane v0.9.4/go.mod h1:6rpuAdCZL397s3pYoYcLgu1mIlRU8Am5FuJP05cCM98=

@@ -75,6 +92,8 @@ github.com/go-playground/universal-translator v0.18.0 h1:82dyy6p4OuJq4/CByFNOn/j

github.com/go-playground/universal-translator v0.18.0/go.mod h1:UvRDBj+xPUEGrFYl+lu/H90nyDXpg0fqeB/AQUGNTVA=

github.com/go-playground/validator/v10 v10.11.1 h1:prmOlTVv+YjZjmRmNSF3VmspqJIxJWXmqUsHwfTRRkQ=

github.com/go-playground/validator/v10 v10.11.1/go.mod h1:i+3WkQ1FvaUjjxh1kSvIA4dMGDBiPU55YFDl0WbKdWU=

+github.com/go-sourcemap/sourcemap v2.1.3+incompatible h1:W1iEw64niKVGogNgBN3ePyLFfuisuzeidWPMPWmECqU=

+github.com/go-sourcemap/sourcemap v2.1.3+incompatible/go.mod h1:F8jJfvm2KbVjc5NqelyYJmf/v5J0dwNLS2mL4sNA1Jg=

github.com/goccy/go-json v0.10.0 h1:mXKd9Qw4NuzShiRlOXKews24ufknHO7gx30lsDyokKA=

github.com/goccy/go-json v0.10.0/go.mod h1:6MelG93GURQebXPDq3khkgXZkazVtN9CRI+MGFi0w8I=

github.com/golang/glog v0.0.0-20160126235308-23def4e6c14b/go.mod h1:SBH7ygxi8pfUlaOkMMuAQtPIUF8ecWP5IEl/CR7VP2Q=

@@ -130,8 +149,13 @@ github.com/google/pprof v0.0.0-20200708004538-1a94d8640e99/go.mod h1:ZgVRPoUq/hf

github.com/google/pprof v0.0.0-20201023163331-3e6fc7fc9c4c/go.mod h1:kpwsk12EmLew5upagYY7GY0pfYCcupk39gWOCRROcvE=

github.com/google/pprof v0.0.0-20201203190320-1bf35d6f28c2/go.mod h1:kpwsk12EmLew5upagYY7GY0pfYCcupk39gWOCRROcvE=

github.com/google/pprof v0.0.0-20201218002935-b9804c9f04c2/go.mod h1:kpwsk12EmLew5upagYY7GY0pfYCcupk39gWOCRROcvE=

+github.com/google/pprof v0.0.0-20230207041349-798e818bf904/go.mod h1:uglQLonpP8qtYCYyzA+8c/9qtqgA3qsXGYqCPKARAFg=

+github.com/google/pprof v0.0.0-20230309165930-d61513b1440d h1:um9/pc7tKMINFfP1eE7Wv6PRGXlcCSJkVajF7KJw3uQ=

+github.com/google/pprof v0.0.0-20230309165930-d61513b1440d/go.mod h1:79YE0hCXdHag9sBkw2o+N/YnZtTkXi0UT9Nnixa5eYk=

github.com/google/renameio v0.1.0/go.mod h1:KWCgfxg9yswjAJkECMjeO8J8rahYeXnNhOm40UhjYkI=

github.com/google/uuid v1.1.2/go.mod h1:TIyPZe4MgqvfeYDBFedMoGGpEw/LqOeaOT+nhxU+yHo=

+github.com/google/uuid v1.3.0 h1:t6JiXgmwXMjEs8VusXIJk2BXHsn+wx8BZdTaoZ5fu7I=

+github.com/google/uuid v1.3.0/go.mod h1:TIyPZe4MgqvfeYDBFedMoGGpEw/LqOeaOT+nhxU+yHo=

github.com/googleapis/gax-go/v2 v2.0.4/go.mod h1:0Wqv26UfaUD9n4G6kQubkQ+KchISgw+vpHVxEJEs9eg=

github.com/googleapis/gax-go/v2 v2.0.5/go.mod h1:DWXyrwAJ9X0FpwwEdw+IPEYBICEFu5mhpdKc/us6bOk=

github.com/googleapis/google-cloud-go-testing v0.0.0-20200911160855-bcd43fbb19e8/go.mod h1:dvDLG8qkwmyD9a/MJJN3XJcT3xFxOKAvTZGvuZmac9g=

@@ -141,6 +165,7 @@ github.com/hashicorp/hcl v1.0.0 h1:0Anlzjpi4vEasTeNFn2mLJgTSwt0+6sfsiTG8qcWGx4=

github.com/hashicorp/hcl v1.0.0/go.mod h1:E5yfLk+7swimpb2L/Alb/PJmXilQ/rhwaUYs4T20WEQ=

github.com/ianlancetaylor/demangle v0.0.0-20181102032728-5e5cf60278f6/go.mod h1:aSSvb/t6k1mPoxDqO4vJh6VOCGPwU4O0C2/Eqndh1Sc=

github.com/ianlancetaylor/demangle v0.0.0-20200824232613-28f6c0f3b639/go.mod h1:aSSvb/t6k1mPoxDqO4vJh6VOCGPwU4O0C2/Eqndh1Sc=

+github.com/ianlancetaylor/demangle v0.0.0-20220319035150-800ac71e25c2/go.mod h1:aYm2/VgdVmcIU8iMfdMvDMsRAQjcfZSKFby6HOFvi/w=

github.com/json-iterator/go v1.1.12 h1:PV8peI4a0ysnczrg+LtxykD8LfKY9ML6u2jnxaEnrnM=

github.com/json-iterator/go v1.1.12/go.mod h1:e30LSqwooZae/UwlEbR2852Gd8hjQvJoHmT4TnhNGBo=

github.com/jstemmer/go-junit-report v0.0.0-20190106144839-af01ea7f8024/go.mod h1:6v2b51hI/fHJwM22ozAgKL4VKDeJcHhJFhtBdhmNjmU=

@@ -172,12 +197,16 @@ github.com/modern-go/concurrent v0.0.0-20180306012644-bacd9c7ef1dd h1:TRLaZ9cD/w

github.com/modern-go/concurrent v0.0.0-20180306012644-bacd9c7ef1dd/go.mod h1:6dJC0mAP4ikYIbvyc7fijjWJddQyLn8Ig3JB5CqoB9Q=

github.com/modern-go/reflect2 v1.0.2 h1:xBagoLtFs94CBntxluKeaWgTMpvLxC4ur3nMaC9Gz0M=

github.com/modern-go/reflect2 v1.0.2/go.mod h1:yWuevngMOJpCy52FWWMvUC8ws7m/LJsjYzDa0/r8luk=

+github.com/pandodao/tokenizer-go v0.2.0 h1:NhfI8fGvQkDld2cZCag6NEU3pJ/ugU9zoY1R/zi9YCs=

+github.com/pandodao/tokenizer-go v0.2.0/go.mod h1:t6qFbaleKxbv0KNio2XUN/mfGM5WKv4haPXDQWVDG00=

github.com/patrickmn/go-cache v2.1.0+incompatible h1:HRMgzkcYKYpi3C8ajMPV8OFXaaRUnok+kx1WdO15EQc=

github.com/patrickmn/go-cache v2.1.0+incompatible/go.mod h1:3Qf8kWWT7OJRJbdiICTKqZju1ZixQ/KpMGzzAfe6+WQ=

github.com/pelletier/go-toml v1.9.5 h1:4yBQzkHv+7BHq2PQUZF3Mx0IYxG7LsP222s7Agd3ve8=

github.com/pelletier/go-toml v1.9.5/go.mod h1:u1nR/EPcESfeI/szUZKdtJ0xRNbUoANCkoOuaOx1Y+c=

github.com/pelletier/go-toml/v2 v2.0.6 h1:nrzqCb7j9cDFj2coyLNLaZuJTLjWjlaz6nvTvIwycIU=

github.com/pelletier/go-toml/v2 v2.0.6/go.mod h1:eumQOmlWiOPt5WriQQqoM5y18pDHwha2N+QD+EUNTek=

+github.com/pion/opus v0.0.0-20230123082803-1052c3e89e58 h1:wi5XffRvL9Ghx8nRAdZyAjmLV/ccnn2xJ4w6S6fELgA=

+github.com/pion/opus v0.0.0-20230123082803-1052c3e89e58/go.mod h1:m8ODxkLrcNvLY6BPvOj7yLxK1wMQWA+2jqKcsrZ293U=

github.com/pkg/diff v0.0.0-20210226163009-20ebb0f2a09e/go.mod h1:pJLUxLENpZxwdsKMEsNbx1VGcRFpLqf3715MtcvvzbA=

github.com/pkg/errors v0.9.1/go.mod h1:bwawxfHBFNV+L2hUp1rHADufV3IMtnDRdf1r5NINEl0=

github.com/pkg/sftp v1.13.1/go.mod h1:3HaPG6Dq1ILlpPZRO0HVMrsydcdLt6HRDccSgb87qRg=

@@ -188,6 +217,10 @@ github.com/rogpeppe/go-internal v1.3.0/go.mod h1:M8bDsm7K2OlrFYOpmOWEs/qY81heoFR

github.com/rogpeppe/go-internal v1.6.1/go.mod h1:xXDCJY+GAPziupqXw64V24skbSoqbTEfhy4qGm1nDQc=

github.com/rogpeppe/go-internal v1.8.0 h1:FCbCCtXNOY3UtUuHUYaghJg4y7Fd14rXifAYUAtL9R8=

github.com/rogpeppe/go-internal v1.8.0/go.mod h1:WmiCO8CzOY8rg0OYDC4/i/2WRWAB6poM+XZ2dLUbcbE=

+github.com/sashabaranov/go-openai v1.13.0 h1:EAusFfnhaMaaUspUZ2+MbB/ZcVeD4epJmTOlZ+8AcAE=

+github.com/sashabaranov/go-openai v1.13.0/go.mod h1:lj5b/K+zjTSFxVLijLSTDZuP7adOgerWeFyZLUhAKRg=

+github.com/sirupsen/logrus v1.9.0 h1:trlNQbNUG3OdDrDil03MCb1H2o9nJ1x4/5LYw7byDE0=

+github.com/sirupsen/logrus v1.9.0/go.mod h1:naHLuLoDiP4jHNo9R0sCBMtWGeIprob74mVsIT4qYEQ=

github.com/spf13/afero v1.9.3 h1:41FoI0fD7OR7mGcKE/aOiLkGreyf8ifIOQmJANWogMk=

github.com/spf13/afero v1.9.3/go.mod h1:iUV7ddyEEZPO5gA3zD4fJt6iStLlL+Lg4m2cihcDf8Y=

github.com/spf13/cast v1.5.0 h1:rj3WzYc11XZaIZMPKmwP96zkFEnnAmV8s6XbB2aY32w=

@@ -209,8 +242,8 @@ github.com/stretchr/testify v1.6.1/go.mod h1:6Fq8oRcR53rry900zMqJjRRixrwX3KX962/

github.com/stretchr/testify v1.7.0/go.mod h1:6Fq8oRcR53rry900zMqJjRRixrwX3KX962/h/Wwjteg=

github.com/stretchr/testify v1.7.1/go.mod h1:6Fq8oRcR53rry900zMqJjRRixrwX3KX962/h/Wwjteg=

github.com/stretchr/testify v1.8.0/go.mod h1:yNjHg4UonilssWZ8iaSj1OCr/vHnekPRkoO+kdMU+MU=

-github.com/stretchr/testify v1.8.1 h1:w7B6lhMri9wdJUVmEZPGGhZzrYTPvgJArz7wNPgYKsk=

github.com/stretchr/testify v1.8.1/go.mod h1:w2LPCIKwWwSfY2zedu0+kehJoqGctiVI29o6fzry7u4=

+github.com/stretchr/testify v1.8.2 h1:+h33VjcLVPDHtOdpUCuF+7gSuG3yGIftsP1YvFihtJ8=

github.com/subosito/gotenv v1.4.1 h1:jyEFiXpy21Wm81FBN71l9VoMMV8H8jG+qIK3GCpY6Qs=

github.com/subosito/gotenv v1.4.1/go.mod h1:ayKnFf/c6rvx/2iiLrJUk1e6plDbT3edrFNGqEflhK0=

github.com/ugorji/go/codec v1.2.8 h1:sgBJS6COt0b/P40VouWKdseidkDgHxYGm0SAglUHfP0=

@@ -219,6 +252,7 @@ github.com/yuin/goldmark v1.1.25/go.mod h1:3hX8gzYuyVAZsxl0MRgGTJEmQBFcNTphYh9de

github.com/yuin/goldmark v1.1.27/go.mod h1:3hX8gzYuyVAZsxl0MRgGTJEmQBFcNTphYh9decYSb74=

github.com/yuin/goldmark v1.1.32/go.mod h1:3hX8gzYuyVAZsxl0MRgGTJEmQBFcNTphYh9decYSb74=

github.com/yuin/goldmark v1.2.1/go.mod h1:3hX8gzYuyVAZsxl0MRgGTJEmQBFcNTphYh9decYSb74=

+github.com/yuin/goldmark v1.4.13/go.mod h1:6yULJ656Px+3vBD8DxQVa3kxgyrAnzto9xy5taEt/CY=

go.opencensus.io v0.21.0/go.mod h1:mSImk1erAIZhrmZN+AvHh14ztQfjbGwt4TtuofqLduU=

go.opencensus.io v0.22.0/go.mod h1:+kGneAE2xo2IficOXnaByMWTGM9T73dGwxeWcUqIpI8=

go.opencensus.io v0.22.2/go.mod h1:yxeiOL68Rb0Xd1ddK5vPZ/oVn4vY4Ynel7k9FzqtOIw=

@@ -231,6 +265,7 @@ golang.org/x/crypto v0.0.0-20190605123033-f99c8df09eb5/go.mod h1:yigFU9vqHzYiE8U

golang.org/x/crypto v0.0.0-20191011191535-87dc89f01550/go.mod h1:yigFU9vqHzYiE8UmvKecakEJjdnWj3jj499lnFckfCI=

golang.org/x/crypto v0.0.0-20200622213623-75b288015ac9/go.mod h1:LzIPMQfyMNhhGPhUkYOs5KpL4U8rLKemX1yGLhDgUto=

golang.org/x/crypto v0.0.0-20210421170649-83a5a9bb288b/go.mod h1:T9bdIzuCu7OtxOm1hfPfRQxPLYneinmdGuTeoZ9dtd4=

+golang.org/x/crypto v0.0.0-20210921155107-089bfa567519/go.mod h1:GvvjBRRGRdwPK5ydBHafDWAxML/pGHZbMvKqRZ5+Abc=

golang.org/x/crypto v0.0.0-20211108221036-ceb1ce70b4fa/go.mod h1:GvvjBRRGRdwPK5ydBHafDWAxML/pGHZbMvKqRZ5+Abc=

golang.org/x/crypto v0.0.0-20211215153901-e495a2d5b3d3/go.mod h1:IxCIyHEi3zRg3s0A5j5BB6A9Jmi73HwBIUl50j+osU4=

golang.org/x/crypto v0.5.0 h1:U/0M97KRkSFvyD/3FSmdP5W5swImpNgle/EHFhOsQPE=

@@ -245,6 +280,8 @@ golang.org/x/exp v0.0.0-20191227195350-da58074b4299/go.mod h1:2RIsYlXP63K8oxa1u0

golang.org/x/exp v0.0.0-20200119233911-0405dc783f0a/go.mod h1:2RIsYlXP63K8oxa1u096TMicItID8zy7Y6sNkU49FU4=

golang.org/x/exp v0.0.0-20200207192155-f17229e696bd/go.mod h1:J/WKrq2StrnmMY6+EHIKF9dgMWnmCNThgcyBT1FY9mM=

golang.org/x/exp v0.0.0-20200224162631-6cc2880d07d6/go.mod h1:3jZMyOhIsHpP37uCMkUooju7aAi5cS1Q23tOzKc+0MU=

+golang.org/x/exp v0.0.0-20221208152030-732eee02a75a h1:4iLhBPcpqFmylhnkbY3W0ONLUYYkDAW9xMFLfxgsvCw=

+golang.org/x/exp v0.0.0-20221208152030-732eee02a75a/go.mod h1:CxIveKay+FTh1D0yPZemJVgC/95VzuuOLq5Qi4xnoYc=

golang.org/x/image v0.0.0-20190227222117-0694c2d4d067/go.mod h1:kZ7UVZpmo3dzQBMxlp+ypCbDeSB+sBbTgSJuh5dn5js=

golang.org/x/image v0.0.0-20190802002840-cff245a6509b/go.mod h1:FeLwcggjj3mMvU+oOTbSwawSJRM1uh48EjtB4UJZlP0=

golang.org/x/lint v0.0.0-20181026193005-c67002cb31c3/go.mod h1:UVdnD1Gm6xHRNCYTkRU2/jEulfH38KcIWyp/GAMgvoE=

@@ -268,6 +305,7 @@ golang.org/x/mod v0.2.0/go.mod h1:s0Qsj1ACt9ePp/hMypM3fl4fZqREWJwdYDEqhRiZZUA=

golang.org/x/mod v0.3.0/go.mod h1:s0Qsj1ACt9ePp/hMypM3fl4fZqREWJwdYDEqhRiZZUA=

golang.org/x/mod v0.4.0/go.mod h1:s0Qsj1ACt9ePp/hMypM3fl4fZqREWJwdYDEqhRiZZUA=

golang.org/x/mod v0.4.1/go.mod h1:s0Qsj1ACt9ePp/hMypM3fl4fZqREWJwdYDEqhRiZZUA=

+golang.org/x/mod v0.6.0-dev.0.20220419223038-86c51ed26bb4/go.mod h1:jJ57K6gSWd91VN4djpZkiMVwK6gcyfeH4XE8wZrZaV4=

golang.org/x/net v0.0.0-20180724234803-3673e40ba225/go.mod h1:mL1N/T3taQHkDXs73rZJwtUhF3w3ftmwwsq0BUmARs4=

golang.org/x/net v0.0.0-20180826012351-8a410e7b638d/go.mod h1:mL1N/T3taQHkDXs73rZJwtUhF3w3ftmwwsq0BUmARs4=

golang.org/x/net v0.0.0-20190108225652-1e06a53dbb7e/go.mod h1:mL1N/T3taQHkDXs73rZJwtUhF3w3ftmwwsq0BUmARs4=

@@ -300,6 +338,8 @@ golang.org/x/net v0.0.0-20201209123823-ac852fbbde11/go.mod h1:m0MpNAwzfU5UDzcl9v

golang.org/x/net v0.0.0-20201224014010-6772e930b67b/go.mod h1:m0MpNAwzfU5UDzcl9v0D8zg8gWTRqZa9RBIspLL5mdg=

golang.org/x/net v0.0.0-20210226172049-e18ecbb05110/go.mod h1:m0MpNAwzfU5UDzcl9v0D8zg8gWTRqZa9RBIspLL5mdg=

golang.org/x/net v0.0.0-20211112202133-69e39bad7dc2/go.mod h1:9nx3DQGgdP8bBQD5qxJ1jj9UTztislL4KSBs9R2vV5Y=

+golang.org/x/net v0.0.0-20220722155237-a158d28d115b/go.mod h1:XRhObCWvk6IyKnWLug+ECip1KBveYUHfp+8e9klMJ9c=

+golang.org/x/net v0.4.0/go.mod h1:MBQ8lrhLObU/6UmLb4fmbmk5OcyYmqtbGd/9yIeKjEE=

golang.org/x/net v0.5.0 h1:GyT4nK/YDHSqa1c4753ouYCDajOYKTja9Xb/OHtgvSw=

golang.org/x/net v0.5.0/go.mod h1:DivGGAXEgPSlEBzxGzZI+ZLohi+xUj054jfeKui00ws=

golang.org/x/oauth2 v0.0.0-20180821212333-d2e6202438be/go.mod h1:N/0e6XlmueqKjAGxoOufVs8QHGRruUQn6yWY3a++T0U=

@@ -321,6 +361,7 @@ golang.org/x/sync v0.0.0-20200317015054-43a5402ce75a/go.mod h1:RxMgew5VJxzue5/jJ

golang.org/x/sync v0.0.0-20200625203802-6e8e738ad208/go.mod h1:RxMgew5VJxzue5/jJTE5uejpjVlOe/izrB70Jof72aM=

golang.org/x/sync v0.0.0-20201020160332-67f06af15bc9/go.mod h1:RxMgew5VJxzue5/jJTE5uejpjVlOe/izrB70Jof72aM=

golang.org/x/sync v0.0.0-20201207232520-09787c993a3a/go.mod h1:RxMgew5VJxzue5/jJTE5uejpjVlOe/izrB70Jof72aM=

+golang.org/x/sync v0.0.0-20220722155255-886fb9371eb4/go.mod h1:RxMgew5VJxzue5/jJTE5uejpjVlOe/izrB70Jof72aM=

golang.org/x/sys v0.0.0-20180830151530-49385e6e1522/go.mod h1:STP8DvDyc/dI5b8T5hshtkjS+E42TnysNCUPdjciGhY=

golang.org/x/sys v0.0.0-20190215142949-d0b11bdaac8a/go.mod h1:STP8DvDyc/dI5b8T5hshtkjS+E42TnysNCUPdjciGhY=

golang.org/x/sys v0.0.0-20190312061237-fead79001313/go.mod h1:h1NjWce9XRLGQEsW7wpKNCjG9DtNlClVuFLEZdDNbEs=

@@ -357,11 +398,18 @@ golang.org/x/sys v0.0.0-20210423082822-04245dca01da/go.mod h1:h1NjWce9XRLGQEsW7w

golang.org/x/sys v0.0.0-20210423185535-09eb48e85fd7/go.mod h1:h1NjWce9XRLGQEsW7wpKNCjG9DtNlClVuFLEZdDNbEs=

golang.org/x/sys v0.0.0-20210615035016-665e8c7367d1/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=

golang.org/x/sys v0.0.0-20210806184541-e5e7981a1069/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=

+golang.org/x/sys v0.0.0-20220310020820-b874c991c1a5/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=

+golang.org/x/sys v0.0.0-20220520151302-bc2c85ada10a/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=

+golang.org/x/sys v0.0.0-20220715151400-c0bba94af5f8/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=

+golang.org/x/sys v0.0.0-20220722155257-8c9f86f7a55f/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=

golang.org/x/sys v0.0.0-20220811171246-fbc7d0a398ab/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=

golang.org/x/sys v0.0.0-20220908164124-27713097b956/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=

-golang.org/x/sys v0.4.0 h1:Zr2JFtRQNX3BCZ8YtxRE9hNJYC8J6I1MVbMg6owUp18=

-golang.org/x/sys v0.4.0/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=

+golang.org/x/sys v0.3.0/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=

+golang.org/x/sys v0.5.0 h1:MUK/U/4lj1t1oPg0HfuXDN/Z1wv31ZJ/YcPiGccS4DU=

+golang.org/x/sys v0.5.0/go.mod h1:oPkhp1MJrh7nUepCBck5+mAzfO9JrbApNNgaTdGDITg=

golang.org/x/term v0.0.0-20201126162022-7de9c90e9dd1/go.mod h1:bj7SfCRtBDWHUb9snDiAeCFNEtKQo2Wmx5Cou7ajbmo=

+golang.org/x/term v0.0.0-20210927222741-03fcf44c2211/go.mod h1:jbD1KX2456YbFQfuXm/mYQcufACuNUgVhRMnK/tPxf8=

+golang.org/x/term v0.3.0/go.mod h1:q750SLmJuPmVoN1blW3UFBPREJfb1KmY3vwxfr+nFDA=

golang.org/x/text v0.0.0-20170915032832-14c0d48ead0c/go.mod h1:NqM8EUOU14njkJ3fqMW+pc6Ldnwhi/IjpwHt7yyuwOQ=

golang.org/x/text v0.3.0/go.mod h1:NqM8EUOU14njkJ3fqMW+pc6Ldnwhi/IjpwHt7yyuwOQ=

golang.org/x/text v0.3.1-0.20180807135948-17ff2d5776d2/go.mod h1:NqM8EUOU14njkJ3fqMW+pc6Ldnwhi/IjpwHt7yyuwOQ=

@@ -370,8 +418,10 @@ golang.org/x/text v0.3.3/go.mod h1:5Zoc/QRtKVWzQhOtBMvqHzDpF6irO9z98xDceosuGiQ=

golang.org/x/text v0.3.4/go.mod h1:5Zoc/QRtKVWzQhOtBMvqHzDpF6irO9z98xDceosuGiQ=

golang.org/x/text v0.3.6/go.mod h1:5Zoc/QRtKVWzQhOtBMvqHzDpF6irO9z98xDceosuGiQ=

golang.org/x/text v0.3.7/go.mod h1:u+2+/6zg+i71rQMx5EYifcz6MCKuco9NR6JIITiCfzQ=

-golang.org/x/text v0.6.0 h1:3XmdazWV+ubf7QgHSTWeykHOci5oeekaGJBLkrkaw4k=

-golang.org/x/text v0.6.0/go.mod h1:mrYo+phRRbMaCq/xk9113O4dZlRixOauAjOtrjsXDZ8=

+golang.org/x/text v0.3.8/go.mod h1:E6s5w1FMmriuDzIBO73fBruAKo1PCIq6d2Q6DHfQ8WQ=

+golang.org/x/text v0.5.0/go.mod h1:mrYo+phRRbMaCq/xk9113O4dZlRixOauAjOtrjsXDZ8=

+golang.org/x/text v0.8.0 h1:57P1ETyNKtuIjB4SRd15iJxuhj8Gc416Y78H3qgMh68=

+golang.org/x/text v0.8.0/go.mod h1:e1OnstbJyHTd6l/uOt8jFFHp6TRDWZR/bV3emEE/zU8=

golang.org/x/time v0.0.0-20181108054448-85acf8d2951c/go.mod h1:tRJNPiyCQ0inRvYxbN9jk5I+vvW/OXSQhTDSoE431IQ=

golang.org/x/time v0.0.0-20190308202827-9d24e82272b4/go.mod h1:tRJNPiyCQ0inRvYxbN9jk5I+vvW/OXSQhTDSoE431IQ=

golang.org/x/time v0.0.0-20191024005414-555d28b269f0/go.mod h1:tRJNPiyCQ0inRvYxbN9jk5I+vvW/OXSQhTDSoE431IQ=

@@ -422,6 +472,7 @@ golang.org/x/tools v0.0.0-20201208233053-a543418bbed2/go.mod h1:emZCQorbCU4vsT4f

golang.org/x/tools v0.0.0-20210105154028-b0ab187a4818/go.mod h1:emZCQorbCU4vsT4fOWvOPXz4eW1wZW4PmDk9uLelYpA=

golang.org/x/tools v0.0.0-20210108195828-e2f9c7f1fc8e/go.mod h1:emZCQorbCU4vsT4fOWvOPXz4eW1wZW4PmDk9uLelYpA=

golang.org/x/tools v0.1.0/go.mod h1:xkSsbof2nBLbhDlRMhhhyNLN/zl3eTqcnHD5viDpcZ0=

+golang.org/x/tools v0.1.12/go.mod h1:hNGJHUnrk76NpqgfD5Aqm5Crs+Hm0VOH/i9J2+nxYbc=

golang.org/x/xerrors v0.0.0-20190717185122-a985d3407aa7/go.mod h1:I/5z698sn9Ka8TeJc9MKroUUfqBBauWjQqLJ2OPfmY0=

golang.org/x/xerrors v0.0.0-20191011141410-1b5146add898/go.mod h1:I/5z698sn9Ka8TeJc9MKroUUfqBBauWjQqLJ2OPfmY0=

golang.org/x/xerrors v0.0.0-20191204190536-9bdfabe68543/go.mod h1:I/5z698sn9Ka8TeJc9MKroUUfqBBauWjQqLJ2OPfmY0=

@@ -517,8 +568,6 @@ google.golang.org/protobuf v1.25.0/go.mod h1:9JNX74DMeImyA3h4bdi1ymwjUzf21/xIlba

google.golang.org/protobuf v1.26.0-rc.1/go.mod h1:jlhhOSvTdKEhbULTjvd4ARK9grFBp09yW+WbY/TyQbw=

google.golang.org/protobuf v1.28.1 h1:d0NfwRgPtno5B1Wa6L2DAG+KivqkdutMf1UhdNx175w=

google.golang.org/protobuf v1.28.1/go.mod h1:HV8QOd/L58Z+nl8r43ehVNZIU/HEI6OcFqwMG9pJV4I=

-gopkg.in/Knetic/govaluate.v2 v2.3.0 h1:naJVc9CZlWA8rC8f5mvECJD7jreTrn7FvGXjBthkHJQ=

-gopkg.in/Knetic/govaluate.v2 v2.3.0/go.mod h1:NW0gr10J8s7aNghEg6uhdxiEaBvc0+8VgJjVViHUKp4=

gopkg.in/check.v1 v0.0.0-20161208181325-20d25e280405/go.mod h1:Co6ibVJAznAaIkqp8huTwlJQCZ016jof/cbN4VW5Yz0=

gopkg.in/check.v1 v1.0.0-20180628173108-788fd7840127/go.mod h1:Co6ibVJAznAaIkqp8huTwlJQCZ016jof/cbN4VW5Yz0=

gopkg.in/check.v1 v1.0.0-20201130134442-10cb98267c6c h1:Hei/4ADfdWqJk1ZMxUNpqntNwaWcugrBjAiHlqqRiVk=

diff --git a/code/handlers/card_ai_mode_action.go b/code/handlers/card_ai_mode_action.go

new file mode 100644

index 00000000..f7f05b82

--- /dev/null

+++ b/code/handlers/card_ai_mode_action.go

@@ -0,0 +1,38 @@

+package handlers

+

+import (

+ "context"

+

+ "start-feishubot/services"

+ "start-feishubot/services/openai"

+

+ larkcard "github.com/larksuite/oapi-sdk-go/v3/card"

+)

+

+// NewAIModeCardHandler handles card actions of kind AIModeChooseKind (choosing the AI mode)

+func NewAIModeCardHandler(cardMsg CardMsg,

+ m MessageHandler) CardHandlerFunc {

+ return func(ctx context.Context, cardAction *larkcard.CardAction) (interface{}, error) {

+

+ if cardMsg.Kind == AIModeChooseKind {

+ newCard, err, done := CommonProcessAIMode(cardMsg, cardAction,

+ m.sessionCache)

+ if done {

+ return newCard, err

+ }

+ return nil, nil

+ }

+ return nil, ErrNextHandler

+ }

+}

+

+// CommonProcessAIMode is the common process for choosing AI mode

+func CommonProcessAIMode(msg CardMsg, cardAction *larkcard.CardAction,

+ cache services.SessionServiceCacheInterface) (interface{},

+ error, bool) {

+ option := cardAction.Action.Option

+ replyMsg(context.Background(), "已选择发散模式:"+option,

+ &msg.MsgId)

+ cache.SetAIMode(msg.SessionId, openai.AIModeMap[option])

+ return nil, nil, true

+}

diff --git a/code/handlers/card_clear_action.go b/code/handlers/card_clear_action.go

new file mode 100644

index 00000000..c1c1ab26

--- /dev/null

+++ b/code/handlers/card_clear_action.go

@@ -0,0 +1,45 @@

+package handlers

+

+import (

+ "context"

+ larkcard "github.com/larksuite/oapi-sdk-go/v3/card"

+ "start-feishubot/logger"

+ "start-feishubot/services"

+)

+

+func NewClearCardHandler(cardMsg CardMsg, m MessageHandler) CardHandlerFunc {

+ return func(ctx context.Context, cardAction *larkcard.CardAction) (interface{}, error) {

+ if cardMsg.Kind == ClearCardKind {

+ newCard, err, done := CommonProcessClearCache(cardMsg, m.sessionCache)

+ if done {

+ return newCard, err

+ }

+ return nil, nil

+ }

+ return nil, ErrNextHandler

+ }

+}

+

+func CommonProcessClearCache(cardMsg CardMsg, session services.SessionServiceCacheInterface) (

+ interface{}, error, bool) {

+ logger.Debugf("card msg value %v", cardMsg.Value)

+ if cardMsg.Value == "1" {

+ session.Clear(cardMsg.SessionId)

+ newCard, _ := newSendCard(

+ withHeader("️🆑 机器人提醒", larkcard.TemplateGrey),

+ withMainMd("已删除此话题的上下文信息"),

+ withNote("我们可以开始一个全新的话题,继续找我聊天吧"),

+ )

+ logger.Debugf("session %v", newCard)

+ return newCard, nil, true

+ }

+ if cardMsg.Value == "0" {

+ newCard, _ := newSendCard(

+ withHeader("️🆑 机器人提醒", larkcard.TemplateGreen),

+ withMainMd("依旧保留此话题的上下文信息"),

+ withNote("我们可以继续探讨这个话题,期待和您聊天。如果您有其他问题或者想要讨论的话题,请告诉我哦"),

+ )

+ return newCard, nil, true

+ }

+ return nil, nil, false

+}

diff --git a/code/handlers/card_common_action.go b/code/handlers/card_common_action.go

new file mode 100644

index 00000000..1f1a7ac8

--- /dev/null

+++ b/code/handlers/card_common_action.go

@@ -0,0 +1,49 @@

+package handlers

+

+import (

+ "context"

+ "encoding/json"

+ "fmt"

+ larkcard "github.com/larksuite/oapi-sdk-go/v3/card"

+)

+

+type CardHandlerMeta func(cardMsg CardMsg, m MessageHandler) CardHandlerFunc

+

+type CardHandlerFunc func(ctx context.Context, cardAction *larkcard.CardAction) (

+ interface{}, error)

+

+var ErrNextHandler = fmt.Errorf("next handler")

+

+func NewCardHandler(m MessageHandler) CardHandlerFunc {

+ handlers := []CardHandlerMeta{

+ NewClearCardHandler,

+ NewPicResolutionHandler,

+ NewVisionResolutionHandler,

+ NewPicTextMoreHandler,

+ NewPicModeChangeHandler,

+ NewRoleTagCardHandler,

+ NewRoleCardHandler,

+ NewAIModeCardHandler,

+ NewVisionModeChangeHandler,

+ }

+

+ return func(ctx context.Context, cardAction *larkcard.CardAction) (interface{}, error) {

+ var cardMsg CardMsg

+ actionValue := cardAction.Action.Value

+ actionValueJson, _ := json.Marshal(actionValue)

+ if err := json.Unmarshal(actionValueJson, &cardMsg); err != nil {

+ return nil, err

+ }

+ //pp.Println(cardMsg)

+ //logger.Debug("cardMsg ", cardMsg)

+ for _, handler := range handlers {

+ h := handler(cardMsg, m)

+ i, err := h(ctx, cardAction)

+ if err == ErrNextHandler {

+ continue

+ }

+ return i, err

+ }

+ return nil, nil

+ }

+}

diff --git a/code/handlers/card_pic_action.go b/code/handlers/card_pic_action.go

new file mode 100644

index 00000000..a077a53f

--- /dev/null

+++ b/code/handlers/card_pic_action.go

@@ -0,0 +1,115 @@

+package handlers

+

+import (

+ "context"

+ "fmt"

+ larkcore "github.com/larksuite/oapi-sdk-go/v3/core"

+ "start-feishubot/logger"

+

+ "start-feishubot/services"

+

+ larkcard "github.com/larksuite/oapi-sdk-go/v3/card"

+)

+

+func NewPicResolutionHandler(cardMsg CardMsg, m MessageHandler) CardHandlerFunc {

+ return func(ctx context.Context, cardAction *larkcard.CardAction) (interface{}, error) {

+ if cardMsg.Kind == PicResolutionKind {

+ CommonProcessPicResolution(cardMsg, cardAction, m.sessionCache)

+ return nil, nil

+ }

+ if cardMsg.Kind == PicStyleKind {

+ CommonProcessPicStyle(cardMsg, cardAction, m.sessionCache)

+ return nil, nil

+ }

+ return nil, ErrNextHandler

+ }

+}

+

+func NewPicModeChangeHandler(cardMsg CardMsg, m MessageHandler) CardHandlerFunc {

+ return func(ctx context.Context, cardAction *larkcard.CardAction) (interface{}, error) {

+ if cardMsg.Kind == PicModeChangeKind {

+ newCard, err, done := CommonProcessPicModeChange(cardMsg, m.sessionCache)

+ if done {

+ return newCard, err

+ }

+ return nil, nil

+ }

+ return nil, ErrNextHandler

+ }

+}

+

+func NewPicTextMoreHandler(cardMsg CardMsg, m MessageHandler) CardHandlerFunc {

+ return func(ctx context.Context, cardAction *larkcard.CardAction) (interface{}, error) {

+ if cardMsg.Kind == PicTextMoreKind {

+ go func() {

+ m.CommonProcessPicMore(cardMsg)

+ }()

+ return nil, nil

+ }

+ return nil, ErrNextHandler

+ }

+}

+

+func CommonProcessPicResolution(msg CardMsg,

+ cardAction *larkcard.CardAction,

+ cache services.SessionServiceCacheInterface) {

+ option := cardAction.Action.Option

+ fmt.Println(larkcore.Prettify(msg))

+ cache.SetPicResolution(msg.SessionId, services.Resolution(option))

+ //send text

+ replyMsg(context.Background(), "已更新图片分辨率为"+option,

+ &msg.MsgId)

+}

+

+func CommonProcessPicStyle(msg CardMsg,

+ cardAction *larkcard.CardAction,

+ cache services.SessionServiceCacheInterface) {

+ option := cardAction.Action.Option

+ fmt.Println(larkcore.Prettify(msg))

+ cache.SetPicStyle(msg.SessionId, services.PicStyle(option))

+ //send text

+ replyMsg(context.Background(), "已更新图片风格为"+option,

+ &msg.MsgId)

+}

+

+func (m MessageHandler) CommonProcessPicMore(msg CardMsg) {

+ resolution := m.sessionCache.GetPicResolution(msg.SessionId)

+ style := m.sessionCache.GetPicStyle(msg.SessionId)

+

+ logger.Debugf("resolution: %v", resolution)

+ logger.Debugf("msg: %v", msg)

+ question := msg.Value.(string)

+ bs64, _ := m.gpt.GenerateOneImage(question, resolution, style)

+ replayImageCardByBase64(context.Background(), bs64, &msg.MsgId,

+ &msg.SessionId, question)

+}

+

+func CommonProcessPicModeChange(cardMsg CardMsg,

+ session services.SessionServiceCacheInterface) (

+ interface{}, error, bool) {

+ if cardMsg.Value == "1" {

+

+ sessionId := cardMsg.SessionId

+ session.Clear(sessionId)

+ session.SetMode(sessionId,

+ services.ModePicCreate)

+ session.SetPicResolution(sessionId,

+ services.Resolution256)

+

+ newCard, _ :=

+ newSendCard(

+ withHeader("🖼️ 已进入图片创作模式", larkcard.TemplateBlue),

+ withPicResolutionBtn(&sessionId),

+ withNote("提醒:回复文本或图片,让AI生成相关的图片。"))

+ return newCard, nil, true

+ }

+ if cardMsg.Value == "0" {

+ newCard, _ := newSendCard(

+ withHeader("️🎒 机器人提醒", larkcard.TemplateGreen),

+ withMainMd("依旧保留此话题的上下文信息"),

+ withNote("我们可以继续探讨这个话题,期待和您聊天。如果您有其他问题或者想要讨论的话题,请告诉我哦"),

+ )

+ return newCard, nil, true

+ }

+ return nil, nil, false

+}
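`CommonProcessPicModeChange` returns `(card, error, done)`. Go convention puts the `error` last, and a caller mainly needs to know whether the card value was handled. The sketch below models the same three-way decision against a hypothetical in-memory session store: "1" resets the context and enters picture mode with a 256x256 default, "0" keeps the conversation, and anything else is not handled. The type names, mode strings and return shape are assumptions made for illustration.

```go
package main

import "fmt"

// Mode and Resolution are hypothetical stand-ins for the services package types.
type Mode string
type Resolution string

const (
	ModeGPT       Mode       = "gpt"
	ModePicCreate Mode       = "pic_create"
	Resolution256 Resolution = "256x256"
)

type session struct {
	mode       Mode
	resolution Resolution
	history    []string
}

// memSessions is an in-memory, single-goroutine stand-in for the session cache.
type memSessions map[string]*session

// get returns the session, creating a default chat-mode one if needed.
func (s memSessions) get(id string) *session {
	if s[id] == nil {
		s[id] = &session{mode: ModeGPT}
	}
	return s[id]
}

// Clear drops all state for one session, as session.Clear does in the diff.
func (s memSessions) Clear(id string) { delete(s, id) }

// picModeChange returns the header of the card to show and whether the
// value was handled. It returns no error because this store cannot fail.
func picModeChange(value, sessionID string, s memSessions) (header string, handled bool) {
	switch value {
	case "1": // user confirmed: reset context and enter picture mode
		s.Clear(sessionID)
		sess := s.get(sessionID)
		sess.mode = ModePicCreate
		sess.resolution = Resolution256
		return "entered picture creation mode", true
	case "0": // user declined: keep the existing context untouched
		return "context kept", true
	default:
		return "", false
	}
}

func main() {
	store := memSessions{}
	store.get("chat-1").history = []string{"hello"}
	header, ok := picModeChange("1", "chat-1", store)
	fmt.Println(header, ok, store.get("chat-1").mode, len(store.get("chat-1").history))
	// entered picture creation mode true pic_create 0
}
```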

diff --git a/code/handlers/card_role_action.go b/code/handlers/card_role_action.go

new file mode 100644

index 00000000..6a10150c

--- /dev/null

+++ b/code/handlers/card_role_action.go

@@ -0,0 +1,77 @@

+package handlers

+

+import (

+ "context"

+

+ "start-feishubot/initialization"

+ "start-feishubot/services"

+ "start-feishubot/services/openai"

+

+ larkcard "github.com/larksuite/oapi-sdk-go/v3/card"

+)

+

+func NewRoleTagCardHandler(cardMsg CardMsg,

+ m MessageHandler) CardHandlerFunc {

+ return func(ctx context.Context, cardAction *larkcard.CardAction) (interface{}, error) {

+

+ if cardMsg.Kind == RoleTagsChooseKind {

+ newCard, err, done := CommonProcessRoleTag(cardMsg, cardAction,

+ m.sessionCache)

+ if done {

+ return newCard, err

+ }

+ return nil, nil

+ }

+ return nil, ErrNextHandler

+ }

+}

+

+func NewRoleCardHandler(cardMsg CardMsg,

+ m MessageHandler) CardHandlerFunc {

+ return func(ctx context.Context, cardAction *larkcard.CardAction) (interface{}, error) {

+

+ if cardMsg.Kind == RoleChooseKind {

+ newCard, err, done := CommonProcessRole(cardMsg, cardAction,

+ m.sessionCache)

+ if done {

+ return newCard, err

+ }

+ return nil, nil

+ }

+ return nil, ErrNextHandler

+ }

+}

+

+func CommonProcessRoleTag(msg CardMsg, cardAction *larkcard.CardAction,

+ cache services.SessionServiceCacheInterface) (interface{},

+ error, bool) {

+ option := cardAction.Action.Option

+ //replyMsg(context.Background(), "已选择tag:"+option,

+ // &msg.MsgId)

+ roles := initialization.GetTitleListByTag(option)

+ //fmt.Printf("roles: %s", roles)

+ SendRoleListCard(context.Background(), &msg.SessionId,

+ &msg.MsgId, option, *roles)

+ return nil, nil, true

+}

+

+func CommonProcessRole(msg CardMsg, cardAction *larkcard.CardAction,

+ cache services.SessionServiceCacheInterface) (interface{},

+ error, bool) {

+ option := cardAction.Action.Option

+ contentByTitle, err := initialization.GetFirstRoleContentByTitle(option)

+ if err != nil {

+ return nil, err, true

+ }

+ cache.Clear(msg.SessionId)

+ systemMsg := append([]openai.Messages{}, openai.Messages{

+ Role: "system", Content: contentByTitle,

+ })

+ cache.SetMsg(msg.SessionId, systemMsg)

+ //pp.Println("systemMsg: ", systemMsg)

+ sendSystemInstructionCard(context.Background(), &msg.SessionId,

+ &msg.MsgId, contentByTitle)

+ //replyMsg(context.Background(), "已选择角色:"+contentByTitle,

+ // &msg.MsgId)

+ return nil, nil, true

+}

diff --git a/code/handlers/card_vision_action.go b/code/handlers/card_vision_action.go

new file mode 100644

index 00000000..9e056492

--- /dev/null

+++ b/code/handlers/card_vision_action.go

@@ -0,0 +1,74 @@

+package handlers

+

+import (

+ "context"

+ "fmt"

+ larkcard "github.com/larksuite/oapi-sdk-go/v3/card"

+ larkcore "github.com/larksuite/oapi-sdk-go/v3/core"

+ "start-feishubot/services"

+)

+

+func NewVisionResolutionHandler(cardMsg CardMsg,

+ m MessageHandler) CardHandlerFunc {

+ return func(ctx context.Context, cardAction *larkcard.CardAction) (interface{}, error) {

+ if cardMsg.Kind == VisionStyleKind {

+ CommonProcessVisionStyle(cardMsg, cardAction, m.sessionCache)

+ return nil, nil

+ }

+ return nil, ErrNextHandler

+ }

+}

+func NewVisionModeChangeHandler(cardMsg CardMsg,

+ m MessageHandler) CardHandlerFunc {

+ return func(ctx context.Context, cardAction *larkcard.CardAction) (interface{}, error) {

+ if cardMsg.Kind == VisionModeChangeKind {

+ newCard, err, done := CommonProcessVisionModeChange(cardMsg, m.sessionCache)

+ if done {

+ return newCard, err

+ }

+ return nil, nil

+ }

+ return nil, ErrNextHandler

+ }

+}

+

+func CommonProcessVisionStyle(msg CardMsg,

+ cardAction *larkcard.CardAction,

+ cache services.SessionServiceCacheInterface) {

+ option := cardAction.Action.Option

+ fmt.Println(larkcore.Prettify(msg))

+ cache.SetVisionDetail(msg.SessionId, services.VisionDetail(option))

+ //send text

+ replyMsg(context.Background(), "图片解析度调整为:"+option,

+ &msg.MsgId)

+}

+

+func CommonProcessVisionModeChange(cardMsg CardMsg,

+ session services.SessionServiceCacheInterface) (

+ interface{}, error, bool) {

+ if cardMsg.Value == "1" {

+

+ sessionId := cardMsg.SessionId

+ session.Clear(sessionId)

+ session.SetMode(sessionId,

+ services.ModeVision)

+ session.SetVisionDetail(sessionId,

+ services.VisionDetailLow)

+

+ newCard, _ :=

+ newSendCard(

+ withHeader("🕵️️ 已进入图片推理模式", larkcard.TemplateBlue),

+ withVisionDetailLevelBtn(&sessionId),

+ withNote("提醒:回复图片,让LLM和你一起推理图片的内容。"))

+ return newCard, nil, true

+ }

+ if cardMsg.Value == "0" {

+ newCard, _ := newSendCard(

+ withHeader("️🎒 机器人提醒", larkcard.TemplateGreen),

+ withMainMd("依旧保留此话题的上下文信息"),

+ withNote("我们可以继续探讨这个话题,期待和您聊天。如果您有其他问题或者想要讨论的话题,请告诉我哦"),

+ )

+ return newCard, nil, true

+ }

+ return nil, nil, false

+}
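`CommonProcessVisionStyle` converts the raw card option straight into `services.VisionDetail`, so it accepts any string. OpenAI's vision input documents `low`, `high` and `auto` as the values of the `detail` field. A small validating parser could look like the sketch below. The `VisionDetail` type here is a hypothetical stand-in for the one in `services`.

```go
package main

import (
	"fmt"
	"strings"
)

// VisionDetail is a hypothetical stand-in for services.VisionDetail.
type VisionDetail string

const (
	VisionDetailLow  VisionDetail = "low"
	VisionDetailHigh VisionDetail = "high"
	VisionDetailAuto VisionDetail = "auto"
)

// parseVisionDetail accepts the three documented values (case-insensitive,
// surrounding spaces ignored) and rejects anything else.
func parseVisionDetail(option string) (VisionDetail, error) {
	switch d := VisionDetail(strings.ToLower(strings.TrimSpace(option))); d {
	case VisionDetailLow, VisionDetailHigh, VisionDetailAuto:
		return d, nil
	default:
		return "", fmt.Errorf("unsupported vision detail %q", option)
	}
}

func main() {
	d, err := parseVisionDetail(" High ")
	fmt.Println(d, err) // high <nil>
	_, err = parseVisionDetail("ultra")
	fmt.Println(err) // unsupported vision detail "ultra"
}
```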

diff --git a/code/handlers/common.go b/code/handlers/common.go

index 9845597b..ffe4912a 100644

--- a/code/handlers/common.go

+++ b/code/handlers/common.go

@@ -1,72 +1,93 @@

package handlers

import (

- "context"

"encoding/json"

"fmt"

- larkim "github.com/larksuite/oapi-sdk-go/v3/service/im/v1"

"regexp"

- "start-feishubot/initialization"

+ "strconv"

"strings"

)

-func sendMsg(ctx context.Context, msg string, chatId *string) error {

- //msg = strings.Trim(msg, " ")

- //msg = strings.Trim(msg, "\n")

- //msg = strings.Trim(msg, "\r")

- //msg = strings.Trim(msg, "\t")

- //// 去除空行 以及空行前的空格

- //regex := regexp.MustCompile(`\n[\s| ]*\r`)

- //msg = regex.ReplaceAllString(msg, "\n")

- ////换行符转义

- //msg = strings.ReplaceAll(msg, "\n", "\\n")

- fmt.Println("sendMsg", msg, chatId)

- msg, i := processMessage(msg)

- if i != nil {

- return i

- }

- client := initialization.GetLarkClient()

- content := larkim.NewTextMsgBuilder().

- Text(msg).

- Build()

- fmt.Println("content", content)

-

- resp, err := client.Im.Message.Create(ctx, larkim.NewCreateMessageReqBuilder().

- ReceiveIdType(larkim.ReceiveIdTypeChatId).

- Body(larkim.NewCreateMessageReqBodyBuilder().

- MsgType(larkim.MsgTypeText).

- ReceiveId(*chatId).

- Content(content).

- Build()).

- Build())

-

- // 处理错误

+// func sendCard

+func msgFilter(msg string) string {

+ // replace "@" and everything up to the next space with ''

+ regex := regexp.MustCompile(`@[^ ]*`)

+ return regex.ReplaceAllString(msg, "")

+}

+

+// Parse rich text json to text

+func parsePostContent(content string) string {

+ var contentMap map[string]interface{}

+ err := json.Unmarshal([]byte(content), &contentMap)

+

if err != nil {

fmt.Println(err)

- return err

}

- // 服务端错误处理

- if !resp.Success() {

- fmt.Println(resp.Code, resp.Msg, resp.RequestId())

- return err

+ if contentMap["content"] == nil {

+ return ""

+ }

+ var text string

+ // deal with title

+ if contentMap["title"] != nil && contentMap["title"] != "" {

+ text += contentMap["title"].(string) + "\n"

}

- return nil

+ // deal with content

+ contentList := contentMap["content"].([]interface{})

+ for _, v := range contentList {

+ for _, v1 := range v.([]interface{}) {

+ if v1.(map[string]interface{})["tag"] == "text" {

+ text += v1.(map[string]interface{})["text"].(string)

+ }

+ }

+ // add new line

+ text += "\n"

+ }

+ return msgFilter(text)

}

-func msgFilter(msg string) string {

- //replace @到下一个非空的字段 为 ''

- regex := regexp.MustCompile(`@[^ ]*`)

- return regex.ReplaceAllString(msg, "")

+func parsePostImageKeys(content string) []string {

+ var contentMap map[string]interface{}

+ err := json.Unmarshal([]byte(content), &contentMap)

+

+ if err != nil {

+ fmt.Println(err)

+ return nil

+ }

+

+ var imageKeys []string

+

+ if contentMap["content"] == nil {

+ return imageKeys

+ }

+

+ contentList := contentMap["content"].([]interface{})

+ for _, v := range contentList {

+ for _, v1 := range v.([]interface{}) {

+ if v1.(map[string]interface{})["tag"] == "img" {

+ imageKeys = append(imageKeys, v1.(map[string]interface{})["image_key"].(string))

+ }

+ }

+ }

+

+ return imageKeys

}

-func parseContent(content string) string {

+

+func parseContent(content, msgType string) string {

//"{\"text\":\"@_user_1 hahaha\"}",

//only get text content hahaha

+ if msgType == "post" {

+ return parsePostContent(content)

+ }

+

var contentMap map[string]interface{}

err := json.Unmarshal([]byte(content), &contentMap)

if err != nil {

fmt.Println(err)

}

+ if contentMap["text"] == nil {

+ return ""

+ }

text := contentMap["text"].(string)

return msgFilter(text)

}

@@ -83,6 +104,60 @@ func processMessage(msg interface{}) (string, error) {

if len(msgStr) >= 2 {

msgStr = msgStr[1 : len(msgStr)-1]

}

-

return msgStr, nil

}

+

+func processNewLine(msg string) string {

+ return strings.Replace(msg, "\\n", `

+`, -1)

+}

+

+func processQuote(msg string) string {

+ return strings.Replace(msg, "\\\"", "\"", -1)

+}

+

+// replace \uXXXX escapes such as \u003c with the characters they encode (e.g. <)

+func processUnicode(msg string) string {

+ regex := regexp.MustCompile(`\\u[0-9a-fA-F]{4}`)

+ return regex.ReplaceAllStringFunc(msg, func(s string) string {

+ s = strings.TrimPrefix(s, `\u`) // strip the "\u" prefix, leaving the hex digits

+ i, _ := strconv.ParseInt(s, 16, 32)

+ return string(rune(i))

+ })

+}

+

+func cleanTextBlock(msg string) string {

+ msg = processNewLine(msg)

+ msg = processUnicode(msg)

+ msg = processQuote(msg)

+ return msg

+}

+

+func parseFileKey(content string) string {

+ var contentMap map[string]interface{}

+ err := json.Unmarshal([]byte(content), &contentMap)

+ if err != nil {

+ fmt.Println(err)

+ return ""

+ }

+ if contentMap["file_key"] == nil {

+ return ""

+ }

+ fileKey := contentMap["file_key"].(string)

+ return fileKey

+}

+

+func parseImageKey(content string) string {

+ var contentMap map[string]interface{}

+ err := json.Unmarshal([]byte(content), &contentMap)

+ if err != nil {

+ fmt.Println(err)

+ return ""

+ }

+ if contentMap["image_key"] == nil {

+ return ""

+ }

+ imageKey := contentMap["image_key"].(string)

+ return imageKey

+}
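`parsePostContent` and `parsePostImageKeys` walk the rich-text (`post`) JSON through `map[string]interface{}` and unchecked type assertions, so an unexpected shape causes a panic. Decoding into typed structs avoids that, and a single pass can collect both the text and the image keys. The JSON shape used below (a `title` plus a `content` array of lines, each line an array of elements tagged `text`, `img`, and so on) is inferred from the code in this diff. The struct and function names are hypothetical.

```go
package main

import (
	"encoding/json"
	"fmt"
	"regexp"
	"strings"
)

// postElement covers the element fields read by this diff; others are ignored.
type postElement struct {
	Tag      string `json:"tag"`
	Text     string `json:"text"`
	ImageKey string `json:"image_key"`
}

type postContent struct {
	Title   string          `json:"title"`
	Content [][]postElement `json:"content"`
}

// mentionRe matches "@" up to the next space, like msgFilter in the diff.
var mentionRe = regexp.MustCompile(`@[^ ]*`)

// parsePost returns the post's text (title first, one line per content row,
// mentions stripped) and the image keys of its img elements.
func parsePost(raw string) (string, []string, error) {
	var post postContent
	if err := json.Unmarshal([]byte(raw), &post); err != nil {
		return "", nil, err // malformed JSON or wrong shape: an error, not a panic
	}
	var sb strings.Builder
	if post.Title != "" {
		sb.WriteString(post.Title + "\n")
	}
	var imageKeys []string
	for _, line := range post.Content {
		for _, el := range line {
			switch el.Tag {
			case "text":
				sb.WriteString(el.Text)
			case "img":
				imageKeys = append(imageKeys, el.ImageKey)
			}
		}
		sb.WriteString("\n")
	}
	return mentionRe.ReplaceAllString(sb.String(), ""), imageKeys, nil
}

func main() {
	raw := `{"title":"T","content":[[{"tag":"text","text":"hi @_user_1 there"}],[{"tag":"img","image_key":"img_1"}]]}`
	text, keys, err := parsePost(raw)
	fmt.Printf("%q %v %v\n", text, keys, err) // "T\nhi  there\n\n" [img_1] <nil>
}
```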

diff --git a/code/handlers/event_audio_action.go b/code/handlers/event_audio_action.go

new file mode 100644

index 00000000..e01bce22

--- /dev/null

+++ b/code/handlers/event_audio_action.go

@@ -0,0 +1,69 @@

+package handlers

+

+import (

+ "context"

+ "fmt"

+ "os"

+

+ "start-feishubot/initialization"

+ "start-feishubot/utils/audio"

+

+ larkim "github.com/larksuite/oapi-sdk-go/v3/service/im/v1"

+)

+

+type AudioAction struct { /*audio*/

+}

+

+func (*AudioAction) Execute(a *ActionInfo) bool {

+ check := AzureModeCheck(a)

+ if !check {

+ return true

+ }

+

+ // only transcribe audio in private chats; skip it everywhere else

+ if a.info.handlerType != UserHandler {

+ return true

+ }

+

+ // is this an audio message?

+ if a.info.msgType == "audio" {

+ fileKey := a.info.fileKey

+ //fmt.Printf("fileKey: %s \n", fileKey)

+ msgId := a.info.msgId

+ //fmt.Println("msgId: ", *msgId)

+ req := larkim.NewGetMessageResourceReqBuilder().MessageId(

+ *msgId).FileKey(fileKey).Type("file").Build()

+ resp, err := initialization.GetLarkClient().Im.MessageResource.Get(context.Background(), req)

+ //fmt.Println(resp, err)

+ if err != nil {

+ fmt.Println(err)

+ return true

+ }

+ f := fmt.Sprintf("%s.ogg", fileKey)

+ resp.WriteFile(f)

+ defer os.Remove(f)

+

+ //fmt.Println("f: ", f)

+ output := fmt.Sprintf("%s.mp3", fileKey)

+ // wait for the conversion to finish

+ audio.OggToWavByPath(f, output)

+ defer os.Remove(output)

+ //fmt.Println("output: ", output)

+

+ text, err := a.handler.gpt.AudioToText(output)

+ if err != nil {

+ fmt.Println(err)

+

+ replyMsg(*a.ctx, fmt.Sprintf("🤖️:语音转换失败,请稍后再试~\n错误信息: %v", err), a.info.msgId)

+ return false

+ }

+

+ replyMsg(*a.ctx, fmt.Sprintf("🤖️:%s", text), a.info.msgId)

+ //fmt.Println("text: ", text)

+ a.info.qParsed = text

+ return true

+ }

+

+ return true

+

+}

diff --git a/code/handlers/event_common_action.go b/code/handlers/event_common_action.go

new file mode 100644

index 00000000..190d66bd

--- /dev/null

+++ b/code/handlers/event_common_action.go

@@ -0,0 +1,169 @@

+package handlers

+

+import (

+ "context"

+ "fmt"

+

+ "start-feishubot/initialization"

+ "start-feishubot/services/openai"

+ "start-feishubot/utils"

+

+ larkim "github.com/larksuite/oapi-sdk-go/v3/service/im/v1"

+)

+

+type MsgInfo struct {

+ handlerType HandlerType

+ msgType string

+ msgId *string

+ chatId *string

+ qParsed string

+ fileKey string

+ imageKey string

+ imageKeys []string // image keys from a post (rich-text) message

+ sessionId *string

+ mention []*larkim.MentionEvent

+}

+type ActionInfo struct {

+ handler *MessageHandler

+ ctx *context.Context

+ info *MsgInfo

+}

+

+type Action interface {

+ Execute(a *ActionInfo) bool

+}

+

+type ProcessedUniqueAction struct { // message de-duplication

+}

+

+func (*ProcessedUniqueAction) Execute(a *ActionInfo) bool {

+ if a.handler.msgCache.IfProcessed(*a.info.msgId) {

+ return false

+ }

+ a.handler.msgCache.TagProcessed(*a.info.msgId)

+ return true

+}

+

+type ProcessMentionAction struct { // decide whether the bot should handle the message

+}

+

+func (*ProcessMentionAction) Execute(a *ActionInfo) bool {

+ // private chats always pass

+ if a.info.handlerType == UserHandler {

+ return true

+ }

+ // group chats pass only when the bot is mentioned

+ if a.info.handlerType == GroupHandler {

+ if a.handler.judgeIfMentionMe(a.info.mention) {

+ return true

+ }

+ return false

+ }

+ return false

+}

+

+type EmptyAction struct { /*empty message*/

+}

+

+func (*EmptyAction) Execute(a *ActionInfo) bool {

+ if len(a.info.qParsed) == 0 {

+ sendMsg(*a.ctx, "🤖️:你想知道什么呢~", a.info.chatId)

+ fmt.Println("msgId", *a.info.msgId,

+ "message.text is empty")

+

+ return false

+ }

+ return true

+}

+

+type ClearAction struct { /*clear messages*/

+}

+

+func (*ClearAction) Execute(a *ActionInfo) bool {

+ if _, foundClear := utils.EitherTrimEqual(a.info.qParsed,

+ "/clear", "清除"); foundClear {

+ sendClearCacheCheckCard(*a.ctx, a.info.sessionId,

+ a.info.msgId)

+ return false

+ }

+ return true

+}

+

+type RolePlayAction struct { /*role play*/

+}

+

+func (*RolePlayAction) Execute(a *ActionInfo) bool {

+ if system, foundSystem := utils.EitherCutPrefix(a.info.qParsed,

+ "/system ", "角色扮演 "); foundSystem {

+ a.handler.sessionCache.Clear(*a.info.sessionId)

+ systemMsg := append([]openai.Messages{}, openai.Messages{

+ Role: "system", Content: system,

+ })

+ a.handler.sessionCache.SetMsg(*a.info.sessionId, systemMsg)

+ sendSystemInstructionCard(*a.ctx, a.info.sessionId,

+ a.info.msgId, system)

+ return false

+ }

+ return true

+}

+

+type HelpAction struct { /*help*/

+}

+

+func (*HelpAction) Execute(a *ActionInfo) bool {

+ if _, foundHelp := utils.EitherTrimEqual(a.info.qParsed, "/help",

+ "帮助"); foundHelp {

+ sendHelpCard(*a.ctx, a.info.sessionId, a.info.msgId)

+ return false

+ }

+ return true

+}

+

+type BalanceAction struct { /*balance*/

+}

+

+func (*BalanceAction) Execute(a *ActionInfo) bool {

+ if _, foundBalance := utils.EitherTrimEqual(a.info.qParsed,

+ "/balance", "余额"); foundBalance {

+ balanceResp, err := a.handler.gpt.GetBalance()

+ if err != nil {

+ replyMsg(*a.ctx, "查询余额失败,请稍后再试", a.info.msgId)

+ return false

+ }

+ sendBalanceCard(*a.ctx, a.info.sessionId, *balanceResp)

+ return false

+ }

+ return true

+}

+

+type RoleListAction struct { /*role list*/

+}

+

+func (*RoleListAction) Execute(a *ActionInfo) bool {

+ if _, foundSystem := utils.EitherTrimEqual(a.info.qParsed,

+ "/roles", "角色列表"); foundSystem {

+ //a.handler.sessionCache.Clear(*a.info.sessionId)

+ //systemMsg := append([]openai.Messages{}, openai.Messages{

+ // Role: "system", Content: system,

+ //})

+ //a.handler.sessionCache.SetMsg(*a.info.sessionId, systemMsg)

+ //sendSystemInstructionCard(*a.ctx, a.info.sessionId,

+ // a.info.msgId, system)

+ tags := initialization.GetAllUniqueTags()

+ SendRoleTagsCard(*a.ctx, a.info.sessionId, a.info.msgId, *tags)

+ return false

+ }

+ return true

+}

+

+type AIModeAction struct { /*divergent (AI temperature) mode*/

+}

+

+func (*AIModeAction) Execute(a *ActionInfo) bool {

+ if _, foundMode := utils.EitherCutPrefix(a.info.qParsed,

+ "/ai_mode", "发散模式"); foundMode {

+ SendAIModeListsCard(*a.ctx, a.info.sessionId, a.info.msgId, openai.AIModeStrs)

+ return false

+ }

+ return true

+}
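The command actions depend on two helpers. `utils.EitherTrimEqual` matches an exact command such as `/help` or `帮助`. `utils.EitherCutPrefix` matches a command followed by an argument, such as `/system <prompt>`. Their source is not in this section. The sketch below shows one plausible behavior for each, assuming surrounding whitespace is ignored and the first matching keyword wins. The lowercase names mark these as hypothetical reimplementations, not the real `utils` functions.

```go
package main

import (
	"fmt"
	"strings"
)

// eitherTrimEqual reports whether s, with surrounding whitespace removed,
// equals any of the keywords; it also returns the keyword that matched.
func eitherTrimEqual(s string, keywords ...string) (string, bool) {
	s = strings.TrimSpace(s)
	for _, k := range keywords {
		if s == k {
			return k, true
		}
	}
	return "", false
}

// eitherCutPrefix reports whether s starts with any of the prefixes and, if
// so, returns the remainder with surrounding whitespace removed.
func eitherCutPrefix(s string, prefixes ...string) (string, bool) {
	s = strings.TrimSpace(s)
	for _, p := range prefixes {
		if strings.HasPrefix(s, p) {
			return strings.TrimSpace(strings.TrimPrefix(s, p)), true
		}
	}
	return "", false
}

func main() {
	_, isHelp := eitherTrimEqual("  /help ", "/help", "帮助")
	prompt, isSystem := eitherCutPrefix("角色扮演 You are a translator", "/system ", "角色扮演 ")
	fmt.Println(isHelp, isSystem, prompt) // true true You are a translator
}
```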

diff --git a/code/handlers/event_msg_action.go b/code/handlers/event_msg_action.go

new file mode 100644

index 00000000..f9e10f71

--- /dev/null

+++ b/code/handlers/event_msg_action.go

@@ -0,0 +1,222 @@

+package handlers

+

+import (

+ "encoding/json"

+ "fmt"

+ "log"

+ "strings"

+ "time"

+

+ "start-feishubot/services/openai"

+)

+

+func setDefaultPrompt(msg []openai.Messages) []openai.Messages {

+ if !hasSystemRole(msg) {

+ msg = append(msg, openai.Messages{

+ Role: "system", Content: "You are ChatGPT, " +

+ "a large language model trained by OpenAI. " +

+ "Answer in user's language as concisely as" +

+ " possible. Knowledge cutoff: 20230601 " +

+ "Current date: " + time.Now().Format("20060102"),

+ })

+ }

+ return msg

+}

+

+//func setDefaultVisionPrompt(msg []openai.VisionMessages) []openai.VisionMessages {

+// if !hasSystemRole(msg) {

+// msg = append(msg, openai.VisionMessages{

+// Role: "system", Content: []openai.ContentType{

+// {Type: "text", Text: "You are ChatGPT4V, " +

+// "You are ChatGPT4V, " +

+// "a large language and picture model trained by" +

+// " OpenAI. " +

+// "Answer in user's language as concisely as" +

+// " possible. Knowledge cutoff: 20230601 " +

+// "Current date" + time.Now().Format("20060102"),

+// }},

+// })

+// }

+// return msg

+//}

+

+type MessageAction struct { /*message*/

+}

+

+func (*MessageAction) Execute(a *ActionInfo) bool {

+ if a.handler.config.StreamMode {

+ return true

+ }

+ msg := a.handler.sessionCache.GetMsg(*a.info.sessionId)

+ // without a system prompt, default to behaving like ChatGPT

+ msg = setDefaultPrompt(msg)

+ msg = append(msg, openai.Messages{

+ Role: "user", Content: a.info.qParsed,

+ })

+

+ // get ai mode as temperature

+ aiMode := a.handler.sessionCache.GetAIMode(*a.info.sessionId)

+ fmt.Println("msg: ", msg)

+ fmt.Println("aiMode: ", aiMode)

+ completions, err := a.handler.gpt.Completions(msg, aiMode)

+ if err != nil {

+ replyMsg(*a.ctx, fmt.Sprintf(

+ "🤖️:消息机器人摆烂了,请稍后再试~\n错误信息: %v", err), a.info.msgId)

+ return false

+ }

+ msg = append(msg, completions)

+ a.handler.sessionCache.SetMsg(*a.info.sessionId, msg)

+ //if new topic

+ if len(msg) == 3 {

+ //fmt.Println("new topic", msg[1].Content)

+ sendNewTopicCard(*a.ctx, a.info.sessionId, a.info.msgId,

+ completions.Content)

+ return false

+ }

+ if len(msg) != 3 {

+ sendOldTopicCard(*a.ctx, a.info.sessionId, a.info.msgId,

+ completions.Content)

+ return false

+ }

+ err = replyMsg(*a.ctx, completions.Content, a.info.msgId)

+ if err != nil {

+ replyMsg(*a.ctx, fmt.Sprintf(

+ "🤖️:消息机器人摆烂了,请稍后再试~\n错误信息: %v", err), a.info.msgId)

+ return false

+ }

+ return true

+}

+

+// hasSystemRole reports whether msg contains a message with the system role

+func hasSystemRole(msg []openai.Messages) bool {

+ for _, m := range msg {

+ if m.Role == "system" {

+ return true

+ }

+ }

+ return false

+}

+

+type StreamMessageAction struct { /*streaming message*/

+}

+

+func (m *StreamMessageAction) Execute(a *ActionInfo) bool {

+ if !a.handler.config.StreamMode {

+ return true

+ }

+ msg := a.handler.sessionCache.GetMsg(*a.info.sessionId)

+ // without a system prompt, default to behaving like ChatGPT

+ msg = setDefaultPrompt(msg)

+ msg = append(msg, openai.Messages{

+ Role: "user", Content: a.info.qParsed,

+ })

+ //if new topic

+ ifNewTopic := len(msg) <= 3

+

+ cardId, err2 := sendOnProcess(a, ifNewTopic)

+ if err2 != nil {

+ return false

+ }

+

+ answer := ""

+ chatResponseStream := make(chan string)

+ done := make(chan struct{}) // done signal so the goroutines exit cleanly

+ noContentTimeout := time.AfterFunc(10*time.Second, func() {

+ log.Println("no content timeout")

+ close(done)

+ err := updateFinalCard(*a.ctx, "请求超时", cardId, ifNewTopic)

+ if err != nil {

+ return

+ }

+ return

+ })

+ defer noContentTimeout.Stop()

+

+ go func() {

+ defer func() {

+ if err := recover(); err != nil {

+ err := updateFinalCard(*a.ctx, "聊天失败", cardId, ifNewTopic)

+ if err != nil {

+ return

+ }

+ }

+ }()

+

+ //log.Printf("UserId: %s , Request: %s", a.info.userId, msg)

+ aiMode := a.handler.sessionCache.GetAIMode(*a.info.sessionId)

+ //fmt.Println("msg: ", msg)

+ //fmt.Println("aiMode: ", aiMode)

+ if err := a.handler.gpt.StreamChat(*a.ctx, msg, aiMode,

+ chatResponseStream); err != nil {

+ // report the failure; done is still closed exactly once below

+ _ = updateFinalCard(*a.ctx, "聊天失败", cardId, ifNewTopic)

+ }

+

+ close(done) // signal that streaming has finished

+ }()

+ ticker := time.NewTicker(700 * time.Millisecond)

+ defer ticker.Stop() // stop the ticker when the function returns

+ go func() {

+ for {

+ select {

+ case <-done:

+ return

+ case <-ticker.C:

+ err := updateTextCard(*a.ctx, answer, cardId, ifNewTopic)

+ if err != nil {

+ return

+ }

+ }

+ }

+ }()

+ for {

+ select {

+ case res, ok := <-chatResponseStream:

+ if !ok {

+ return false

+ }

+ noContentTimeout.Stop()

+ answer += res

+ //pp.Println("answer", answer)

+ case <-done: // streaming finished: write the final card and save the history

+ err := updateFinalCard(*a.ctx, answer, cardId, ifNewTopic)

+ if err != nil {

+ return false

+ }

+ ticker.Stop()

+ msg := append(msg, openai.Messages{

+ Role: "assistant", Content: answer,

+ })

+ a.handler.sessionCache.SetMsg(*a.info.sessionId, msg)

+ close(chatResponseStream)

+ log.Printf("\n\n\n")

+ jsonByteArray, err := json.Marshal(msg)

+ if err != nil {

+ log.Println(err)

+ }

+ jsonStr := strings.ReplaceAll(string(jsonByteArray), "\\n", "")

+ jsonStr = strings.ReplaceAll(jsonStr, "\n", "")

+ log.Printf("\n\n\n")

+ return false

+ }

+ }

+}

+

+func sendOnProcess(a *ActionInfo, ifNewTopic bool) (*string, error) {

+ // send the "processing" card

+ cardId, err := sendOnProcessCard(*a.ctx, a.info.sessionId,

+ a.info.msgId, ifNewTopic)

+ if err != nil {

+ return nil, err

+ }

+ return cardId, nil

+

+}

diff --git a/code/handlers/event_pic_action.go b/code/handlers/event_pic_action.go

new file mode 100644

index 00000000..ca08d216

--- /dev/null

+++ b/code/handlers/event_pic_action.go

@@ -0,0 +1,110 @@

+package handlers

+

+import (

+ "context"

+ "fmt"

+ "os"

+ "start-feishubot/logger"

+

+ "start-feishubot/initialization"

+ "start-feishubot/services"

+ "start-feishubot/services/openai"

+ "start-feishubot/utils"

+

+ larkim "github.com/larksuite/oapi-sdk-go/v3/service/im/v1"

+)

+

+type PicAction struct { /*picture*/

+}

+

+func (*PicAction) Execute(a *ActionInfo) bool {

+ check := AzureModeCheck(a)

+ if !check {

+ return true

+ }

+ // enter picture-creation mode

+ if _, foundPic := utils.EitherTrimEqual(a.info.qParsed,

+ "/picture", "图片创作"); foundPic {

+ a.handler.sessionCache.Clear(*a.info.sessionId)

+ a.handler.sessionCache.SetMode(*a.info.sessionId,

+ services.ModePicCreate)

+ a.handler.sessionCache.SetPicResolution(*a.info.sessionId,

+ services.Resolution1024)

+ sendPicCreateInstructionCard(*a.ctx, a.info.sessionId,

+ a.info.msgId)

+ return false

+ }

+

+ mode := a.handler.sessionCache.GetMode(*a.info.sessionId)

+ //fmt.Println("mode: ", mode)

+ logger.Debug("MODE:", mode)

+ // an image arrived outside picture-creation mode: ask whether to switch to it

+ if a.info.msgType == "image" && mode != services.ModePicCreate {

+ sendPicModeCheckCard(*a.ctx, a.info.sessionId, a.info.msgId)

+ return false

+ }

+

+ if a.info.msgType == "image" && mode == services.ModePicCreate {

+ // save the image

+ imageKey := a.info.imageKey

+ //fmt.Printf("fileKey: %s \n", imageKey)

+ msgId := a.info.msgId

+ //fmt.Println("msgId: ", *msgId)

+ req := larkim.NewGetMessageResourceReqBuilder().MessageId(

+ *msgId).FileKey(imageKey).Type("image").Build()

+ resp, err := initialization.GetLarkClient().Im.MessageResource.Get(context.Background(), req)

+ //fmt.Println(resp, err)

+ if err != nil {

+ //fmt.Println(err)

+ replyMsg(*a.ctx, fmt.Sprintf("🤖️:图片下载失败,请稍后再试~\n 错误信息: %v", err),

+ a.info.msgId)

+ return false

+ }

+

+ f := fmt.Sprintf("%s.png", imageKey)

+ resp.WriteFile(f)

+ defer os.Remove(f)

+ resolution := a.handler.sessionCache.GetPicResolution(*a.

+ info.sessionId)

+

+ openai.ConvertJpegToPNG(f)

+ openai.ConvertToRGBA(f, f)

+

+ // validate the image

+ err = openai.VerifyPngs([]string{f})

+ if err != nil {

+ replyMsg(*a.ctx, "🤖️:无法解析图片,请发送原图并尝试重新操作~",

+ a.info.msgId)

+ return false

+ }

+ bs64, err := a.handler.gpt.GenerateOneImageVariation(f, resolution)

+ if err != nil {

+ replyMsg(*a.ctx, fmt.Sprintf(

+ "🤖️:图片生成失败,请稍后再试~\n错误信息: %v", err), a.info.msgId)

+ return false

+ }

+ replayImagePlainByBase64(*a.ctx, bs64, a.info.msgId)

+ return false

+

+ }

+

+ // generate an image from the text prompt

+ if mode == services.ModePicCreate {

+ resolution := a.handler.sessionCache.GetPicResolution(*a.

+ info.sessionId)

+ style := a.handler.sessionCache.GetPicStyle(*a.

+ info.sessionId)

+ bs64, err := a.handler.gpt.GenerateOneImage(a.info.qParsed,

+ resolution, style)

+ if err != nil {

+ replyMsg(*a.ctx, fmt.Sprintf(

+ "🤖️:图片生成失败,请稍后再试~\n错误信息: %v", err), a.info.msgId)

+ return false

+ }

+ replayImageCardByBase64(*a.ctx, bs64, a.info.msgId, a.info.sessionId,

+ a.info.qParsed)

+ return false

+ }

+

+ return true

+}
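Before calling `GenerateOneImageVariation`, `PicAction` converts the download to PNG and runs `openai.VerifyPngs`, whose checks are not shown here. OpenAI's API reference describes the DALL·E 2 image-variation input as a square PNG smaller than 4 MB. A standard-library sketch of such a pre-check follows. It reads only the PNG header, using `png.DecodeConfig`. The function names and error texts are hypothetical, and the limit should be confirmed against the current API reference.

```go
package main

import (
	"bytes"
	"fmt"
	"image"
	"image/png"
)

// maxVariationBytes assumes the documented 4 MB limit for variation inputs.
const maxVariationBytes = 4 << 20

// checkVariationPNG verifies that data is a PNG, is square and is under the
// size limit, decoding only the header rather than the whole image.
func checkVariationPNG(data []byte) (image.Config, error) {
	if len(data) >= maxVariationBytes {
		return image.Config{}, fmt.Errorf("image is %d bytes, must be under %d", len(data), maxVariationBytes)
	}
	cfg, err := png.DecodeConfig(bytes.NewReader(data))
	if err != nil {
		return image.Config{}, fmt.Errorf("not a valid PNG: %w", err)
	}
	if cfg.Width != cfg.Height {
		return cfg, fmt.Errorf("image is %dx%d, must be square", cfg.Width, cfg.Height)
	}
	return cfg, nil
}

// encodePNG renders a blank w x h RGBA image; used here only to build inputs.
func encodePNG(w, h int) []byte {
	var buf bytes.Buffer
	_ = png.Encode(&buf, image.NewRGBA(image.Rect(0, 0, w, h)))
	return buf.Bytes()
}

func main() {
	cfg, err := checkVariationPNG(encodePNG(64, 64))
	fmt.Println(cfg.Width, cfg.Height, err) // 64 64 <nil>
	_, err = checkVariationPNG(encodePNG(64, 32))
	fmt.Println(err) // image is 64x32, must be square
}
```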

diff --git a/code/handlers/event_vision_action.go b/code/handlers/event_vision_action.go

new file mode 100644

index 00000000..ae67873b

--- /dev/null

+++ b/code/handlers/event_vision_action.go

@@ -0,0 +1,160 @@

+package handlers

+

+import (

+ "context"

+ "fmt"

+ "os"

+ "start-feishubot/initialization"

+ "start-feishubot/services"

+ "start-feishubot/services/openai"

+ "start-feishubot/utils"

+

+ larkim "github.com/larksuite/oapi-sdk-go/v3/service/im/v1"

+)

+

+type VisionAction struct { /*image reasoning (vision)*/

+}

+

+func (va *VisionAction) Execute(a *ActionInfo) bool {

+ if !AzureModeCheck(a) {

+ return true

+ }

+

+ if isVisionCommand(a) {

+ initializeVisionMode(a)

+ sendVisionInstructionCard(*a.ctx, a.info.sessionId, a.info.msgId)

+ return false

+ }

+

+ mode := a.handler.sessionCache.GetMode(*a.info.sessionId)

+

+ if a.info.msgType == "image" {

+ if mode != services.ModeVision {

+ sendVisionModeCheckCard(*a.ctx, a.info.sessionId, a.info.msgId)

+ return false

+ }

+

+ return va.handleVisionImage(a)

+ }

+

+ if a.info.msgType == "post" && mode == services.ModeVision {

+ return va.handleVisionPost(a)

+ }

+

+ return true

+}

+

+func isVisionCommand(a *ActionInfo) bool {

+ _, foundPic := utils.EitherTrimEqual(a.info.qParsed, "/vision", "图片推理")

+ return foundPic

+}

+

+func initializeVisionMode(a *ActionInfo) {

+ a.handler.sessionCache.Clear(*a.info.sessionId)

+ a.handler.sessionCache.SetMode(*a.info.sessionId, services.ModeVision)

+ a.handler.sessionCache.SetVisionDetail(*a.info.sessionId, services.VisionDetailHigh)

+}

+

+func (va *VisionAction) handleVisionImage(a *ActionInfo) bool {

+ detail := a.handler.sessionCache.GetVisionDetail(*a.info.sessionId)

+ base64, err := downloadAndEncodeImage(a.info.imageKey, a.info.msgId)

+ if err != nil {

+ replyWithErrorMsg(*a.ctx, err, a.info.msgId)

+ return false

+ }

+

+ return va.processImageAndReply(a, base64, detail)

+}

+

+func (va *VisionAction) handleVisionPost(a *ActionInfo) bool {

+ detail := a.handler.sessionCache.GetVisionDetail(*a.info.sessionId)

+ var base64s []string

+

+ for _, imageKey := range a.info.imageKeys {

+ if imageKey == "" {

+ continue

+ }

+ base64, err := downloadAndEncodeImage(imageKey, a.info.msgId)

+ if err != nil {

+ replyWithErrorMsg(*a.ctx, err, a.info.msgId)

+ return false

+ }

+ base64s = append(base64s, base64)

+ }

+

+ if len(base64s) == 0 {

+ replyMsg(*a.ctx, "🤖️:请发送一张图片", a.info.msgId)

+ return false

+ }

+

+ return va.processMultipleImagesAndReply(a, base64s, detail)

+}

+

+func downloadAndEncodeImage(imageKey string, msgId *string) (string, error) {

+ f := fmt.Sprintf("%s.png", imageKey)

+ defer os.Remove(f)

+

+ req := larkim.NewGetMessageResourceReqBuilder().MessageId(*msgId).FileKey(imageKey).Type("image").Build()

+ resp, err := initialization.GetLarkClient().Im.MessageResource.Get(context.Background(), req)

+ if err != nil {

+ return "", err

+ }

+

+ if err := resp.WriteFile(f); err != nil {

+ return "", err

+ }

+ return openai.GetBase64FromImage(f)

+}

+

+func replyWithErrorMsg(ctx context.Context, err error, msgId *string) {

+ replyMsg(ctx, fmt.Sprintf("🤖️:图片下载失败,请稍后再试~\n 错误信息: %v", err), msgId)

+}

+

+func (va *VisionAction) processImageAndReply(a *ActionInfo, base64 string, detail string) bool {

+ msg := createVisionMessages("解释这个图片", base64, detail)

+ completions, err := a.handler.gpt.GetVisionInfo(msg)

+ if err != nil {

+ replyWithErrorMsg(*a.ctx, err, a.info.msgId)

+ return false

+ }

+ sendVisionTopicCard(*a.ctx, a.info.sessionId, a.info.msgId, completions.Content)

+ return false

+}

+

+func (va *VisionAction) processMultipleImagesAndReply(a *ActionInfo, base64s []string, detail string) bool {

+ msg := createMultipleVisionMessages(a.info.qParsed, base64s, detail)

+ completions, err := a.handler.gpt.GetVisionInfo(msg)

+ if err != nil {

+ replyWithErrorMsg(*a.ctx, err, a.info.msgId)

+ return false

+ }

+ sendVisionTopicCard(*a.ctx, a.info.sessionId, a.info.msgId, completions.Content)

+ return false

+}

+

+func createVisionMessages(query, base64Image, detail string) []openai.VisionMessages {

+ return []openai.VisionMessages{

+ {

+ Role: "user",

+ Content: []openai.ContentType{

+ {Type: "text", Text: query},

+ {Type: "image_url", ImageURL: &openai.ImageURL{

+ URL: "data:image/jpeg;base64," + base64Image,

+ Detail: detail,

+ }},

+ },

+ },

+ }

+}

+

+func createMultipleVisionMessages(query string, base64Images []string, detail string) []openai.VisionMessages {

+ content := []openai.ContentType{{Type: "text", Text: query}}

+ for _, base64Image := range base64Images {

+ content = append(content, openai.ContentType{

+ Type: "image_url",

+ ImageURL: &openai.ImageURL{

+ URL: "data:image/jpeg;base64," + base64Image,

+ Detail: detail,

+ },

+ })

+ }

+ return []openai.VisionMessages{{Role: "user", Content: content}}

+}

diff --git a/code/handlers/handler.go b/code/handlers/handler.go

new file mode 100644

index 00000000..d7622f1b

--- /dev/null

+++ b/code/handlers/handler.go

@@ -0,0 +1,140 @@

+package handlers

+

+import (
+ "context"
+ "fmt"
+ "strings"
+
+ "start-feishubot/initialization"
+ "start-feishubot/logger"
+ "start-feishubot/services"
+ "start-feishubot/services/openai"
+
+ larkcard "github.com/larksuite/oapi-sdk-go/v3/card"
+ larkcore "github.com/larksuite/oapi-sdk-go/v3/core"
+ larkim "github.com/larksuite/oapi-sdk-go/v3/service/im/v1"
+)

+

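+// chain runs the actions in order (chain of responsibility) and stops at the first one whose Execute returns false.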

+func chain(data *ActionInfo, actions ...Action) bool {

+ for _, v := range actions {

+ if !v.Execute(data) {

+ return false

+ }

+ }

+ return true

+}

+

+type MessageHandler struct {

+ sessionCache services.SessionServiceCacheInterface

+ msgCache services.MsgCacheInterface

+ gpt *openai.ChatGPT

+ config initialization.Config

+}

+

+func (m MessageHandler) cardHandler(ctx context.Context,

+ cardAction *larkcard.CardAction) (interface{}, error) {

+ messageHandler := NewCardHandler(m)

+ return messageHandler(ctx, cardAction)

+}

+

+func judgeMsgType(event *larkim.P2MessageReceiveV1) (string, error) {

+ msgType := event.Event.Message.MessageType

+

+ switch *msgType {

+ case "text", "image", "audio", "post":

+ return *msgType, nil

+ default:

+ return "", fmt.Errorf("unknown message type: %v", *msgType)

+ }

+}

+

+func (m MessageHandler) msgReceivedHandler(ctx context.Context, event *larkim.P2MessageReceiveV1) error {

+ handlerType := judgeChatType(event)

+ logger.Debug("handlerType", handlerType)

+ if handlerType == "otherChat" {

+ fmt.Println("unknown chat type")

+ return nil

+ }

+ logger.Debug("收到消息:", larkcore.Prettify(event.Event.Message))

+

+ msgType, err := judgeMsgType(event)

+ if err != nil {

+ fmt.Printf("error getting message type: %v\n", err)

+ return nil

+ }

+

+ content := event.Event.Message.Content

+ msgId := event.Event.Message.MessageId

+ rootId := event.Event.Message.RootId

+ chatId := event.Event.Message.ChatId

+ mention := event.Event.Message.Mentions

+

+ sessionId := rootId

+ if sessionId == nil || *sessionId == "" {

+ sessionId = msgId

+ }

+ msgInfo := MsgInfo{

+ handlerType: handlerType,

+ msgType: msgType,

+ msgId: msgId,

+ chatId: chatId,

+ qParsed: strings.Trim(parseContent(*content, msgType), " "),

+ fileKey: parseFileKey(*content),

+ imageKey: parseImageKey(*content),

+ imageKeys: parsePostImageKeys(*content),

+ sessionId: sessionId,

+ mention: mention,

+ }

+ data := &ActionInfo{

+ ctx: &ctx,

+ handler: &m,

+ info: &msgInfo,

+ }

+ actions := []Action{
+ &ProcessedUniqueAction{}, // skip messages that were already processed
+ &ProcessMentionAction{}, // decide whether the bot should respond
+ &AudioAction{}, // voice messages
+ &ClearAction{}, // clear-conversation command
+ &VisionAction{}, // image understanding (vision)
+ &PicAction{}, // image handling
+ &AIModeAction{}, // AI mode switching
+ &RoleListAction{}, // role list
+ &HelpAction{}, // help
+ &BalanceAction{}, // balance query
+ &RolePlayAction{}, // role play
+ &MessageAction{}, // regular messages
+ &EmptyAction{}, // empty messages
+ &StreamMessageAction{}, // streaming messages
+ }

+ chain(data, actions...)

+ return nil

+}

+

+var _ MessageHandlerInterface = (*MessageHandler)(nil)

+

+func NewMessageHandler(gpt *openai.ChatGPT,

+ config initialization.Config) MessageHandlerInterface {

+ return &MessageHandler{

+ sessionCache: services.GetSessionCache(),

+ msgCache: services.GetMsgCache(),

+ gpt: gpt,

+ config: config,

+ }

+}

+

+func (m MessageHandler) judgeIfMentionMe(
+ mention []*larkim.MentionEvent) bool {

+ if len(mention) != 1 {

+ return false

+ }

+ return mention[0].Name != nil && *mention[0].Name == m.config.FeishuBotName

+}

+

+func AzureModeCheck(a *ActionInfo) bool {

+ if a.handler.config.AzureOn {

+ //sendMsg(*a.ctx, "Azure Openai 接口下,暂不支持此功能", a.info.chatId)

+ return false

+ }

+ return true

+}
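`chain` gives every `Action` in `msgReceivedHandler` the same contract: `Execute` returns true to pass the message to the next action and false once the message has been handled (or rejected), which ends the chain. The order of the `actions` slice therefore matters, since an action that always returns false hides every action after it. The toy sketch below copies only the `chain` loop from the diff; `ActionInfo` is simplified and the three actions (`dedupAction`, `helpAction`, `echoAction`) are hypothetical illustrations, not the project's implementations.

```go
package main

import "fmt"

// ActionInfo is a simplified, hypothetical stand-in for handlers.ActionInfo.
type ActionInfo struct {
	Text string
	Log  []string
}

// Action matches how the diff calls it: Execute returns true to continue the
// chain and false to stop it.
type Action interface {
	Execute(a *ActionInfo) bool
}

// chain is the same loop as in handler.go.
func chain(data *ActionInfo, actions ...Action) bool {
	for _, v := range actions {
		if !v.Execute(data) {
			return false
		}
	}
	return true
}

// dedupAction stops the chain for text it has already seen
// (a toy version of ProcessedUniqueAction).
type dedupAction struct{ seen map[string]bool }

func (d *dedupAction) Execute(a *ActionInfo) bool {
	if d.seen[a.Text] {
		a.Log = append(a.Log, "dedup: skipped")
		return false
	}
	d.seen[a.Text] = true
	return true
}

// helpAction handles "/help" and stops the chain; other text passes through.
type helpAction struct{}

func (helpAction) Execute(a *ActionInfo) bool {
	if a.Text == "/help" {
		a.Log = append(a.Log, "help: replied")
		return false
	}
	return true
}

// echoAction is a catch-all that handles whatever reaches it.
type echoAction struct{}

func (echoAction) Execute(a *ActionInfo) bool {
	a.Log = append(a.Log, "echo: "+a.Text)
	return false
}

func main() {
	dedup := &dedupAction{seen: map[string]bool{}}
	for _, text := range []string{"/help", "hello", "hello"} {
		info := &ActionInfo{Text: text}
		chain(info, dedup, helpAction{}, echoAction{})
		fmt.Println(info.Log)
	}
	// Prints:
	// [help: replied]
	// [echo: hello]
	// [dedup: skipped]
}
```

Because each action only sees messages that every earlier action let through, cheap filters (deduplication, mention checks) belong at the front and catch-all handlers at the end.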

diff --git a/code/handlers/init.go b/code/handlers/init.go

index d90316e5..25ab953a 100644

--- a/code/handlers/init.go

+++ b/code/handlers/init.go

@@ -2,11 +2,18 @@ package handlers

import (

"context"

+ "start-feishubot/logger"

+

+ "start-feishubot/initialization"

+ "start-feishubot/services/openai"

+

+ larkcard "github.com/larksuite/oapi-sdk-go/v3/card"

larkim "github.com/larksuite/oapi-sdk-go/v3/service/im/v1"

)

type MessageHandlerInterface interface {

- handle(ctx context.Context, event *larkim.P2MessageReceiveV1) error

+ msgReceivedHandler(ctx context.Context, event *larkim.P2MessageReceiveV1) error

+ cardHandler(ctx context.Context, cardAction *larkcard.CardAction) (interface{}, error)

}

type HandlerType string

@@ -17,14 +24,52 @@ const (

)

// handlers 所有消息类型类型的处理器

-var handlers map[HandlerType]MessageHandlerInterface

+var handlers MessageHandlerInterface

-func init() {

- handlers = make(map[HandlerType]MessageHandlerInterface)

- //handlers[GroupHandler] = NewGroupMessageHandler()

- handlers[UserHandler] = NewPersonalMessageHandler()

+func InitHandlers(gpt *openai.ChatGPT, config initialization.Config) {

+ handlers = NewMessageHandler(gpt, config)

}

func Handler(ctx context.Context, event *larkim.P2MessageReceiveV1) error {

- return handlers[UserHandler].handle(ctx, event)

+ return handlers.msgReceivedHandler(ctx, event)

+}

+

+func ReadHandler(ctx context.Context, event *larkim.P2MessageReadV1) error {